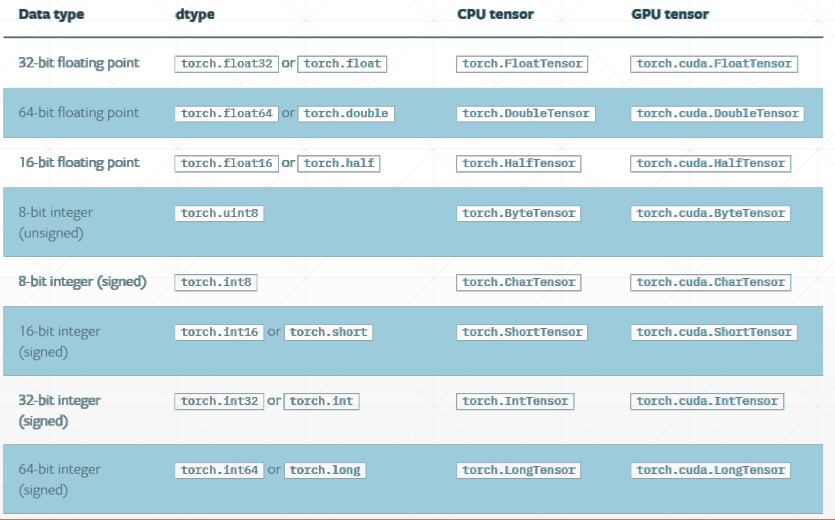

PyTorch data types

Python data types and their PyTorch counterparts:

- int: IntTensor of size()

- float: FloatTensor of size()

- int array: IntTensor of size [d1, d2, …]

- float array: FloatTensor of size [d1, d2, …]

Data type examples:

>>> import torch
>>> a=torch.randn(2,3)
>>> a
tensor([[-1.5869, -0.3355, -0.4608],
        [-0.6137,  1.1864,  0.1175]])
>>> a.type()
'torch.FloatTensor'
>>> type(a)
<class 'torch.Tensor'>
>>> isinstance(a,torch.tensor)
Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
TypeError: isinstance() arg 2 must be a type or tuple of types
>>> isinstance(a,torch.Tensor)
True
>>> isinstance(a,torch.FloatTensor)
True
>>> a=a.cuda()
>>> isinstance(a,torch.cuda.DoubleTensor)
False
>>> isinstance(a,torch.cuda.FloatTensor)
True
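The `a.cuda()` steps above need a CUDA device. A minimal sketch, assuming only that `torch` is installed, that reads the same information from `dtype` and `device` and falls back to the CPU when no GPU is present (the variable names are illustrative):

```python
import torch

a = torch.randn(2, 3)

# dtype and device describe the tensor independently of each other.
print(a.dtype)                      # torch.float32 with the default settings
print(isinstance(a, torch.Tensor))  # True

# Pick the GPU only when one is actually available.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
b = a.to(device)

# Moving a tensor changes its device, not its dtype.
print(b.device.type, b.dtype)
```

Checking `dtype` and `device` separately avoids having one class name per dtype/device combination, which is what the `torch.cuda.FloatTensor` style relies on.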

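PyTorch has no string data type. For NLP, text has to be encoded numerically first, for example as one-hot vectors or embeddings.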
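As an illustration of encoding text numerically, here is a small one-hot sketch. The vocabulary and sentence are made up for the example; `torch.nn.functional.one_hot` is the only library call involved.

```python
import torch
import torch.nn.functional as F

# Hypothetical toy vocabulary: each word gets an integer index.
vocab = {"hello": 0, "world": 1, "pytorch": 2}
sentence = ["hello", "pytorch"]

# one_hot expects an integer (int64) index tensor.
indices = torch.tensor([vocab[w] for w in sentence])
one_hot = F.one_hot(indices, num_classes=len(vocab))

print(one_hot.shape)     # torch.Size([2, 3])
print(one_hot.tolist())  # [[1, 0, 0], [0, 0, 1]]
```

Real models usually replace the one-hot rows with learned embedding vectors, but the index lookup step is the same.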

Tensor dimensions and rank (dim/rank)

dim=0

>>> torch.tensor(1.3)
tensor(1.3000)
>>> a=torch.tensor(1)
>>> a
tensor(1)
>>> a.shape
torch.Size([])
>>> len(a.shape)
0

dim=1

>>> a=torch.tensor([1])
>>> a.shape
torch.Size([1])
>>> len(a.shape)
1
>>> import numpy as np
>>> data=np.ones(4)
>>> torch.from_numpy(data)
tensor([1., 1., 1., 1.], dtype=torch.float64)
>>> torch.from_numpy(data).shape
torch.Size([4])
>>> torch.ones(4)
tensor([1., 1., 1., 1.])
>>> torch.ones(4).shape
torch.Size([4])

dim=2

>>> t=torch.randn([2,3])
>>> t
tensor([[-0.6883,  0.7381,  1.0752],
        [-0.4672, -1.4004, -0.0808]])
>>> t.shape
torch.Size([2, 3])
>>> len(t.shape)
2
>>> t.size()
torch.Size([2, 3])
>>> t.size(0)
2
>>> t.size(1)
3

dim=3

Indexing: t[index1][index2] or t[index1, index2, ...]

>>> t=torch.randn(2,3,4)
>>> t
tensor([[[ 0.7085,  0.7726,  1.8999,  0.5519],
         [ 1.4031,  1.6307, -0.8214, -0.4323],
         [-0.6341,  1.7587,  0.2883, -0.6680]],
        [[-0.2242, -1.4333,  1.5554, -0.0299],
         [ 0.3667, -1.2395,  0.0835, -0.2902],
         [ 0.9397, -0.8892, -1.1647,  0.0984]]])
>>> t[0]
tensor([[ 0.7085,  0.7726,  1.8999,  0.5519],
        [ 1.4031,  1.6307, -0.8214, -0.4323],
        [-0.6341,  1.7587,  0.2883, -0.6680]])
>>> t[0,1]
tensor([ 1.4031,  1.6307, -0.8214, -0.4323])
>>> t[0][1]
tensor([ 1.4031,  1.6307, -0.8214, -0.4323])

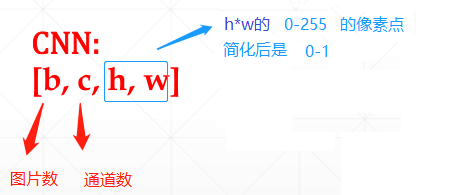

dim=4

>>> t=torch.randn(2,3,28,28)

>>> t

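4-D tensors are actually the ones we use most often, because image processing works with batches shaped [batch, channel, height, width]; here t holds 2 images with 3 channels of 28×28 pixels each.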

>>> t.numel()
4704
>>> t.dim()
4

Creating tensors

from List

>>> torch.tensor([[1,2],[3,4]])
tensor([[1, 2],
        [3, 4]])
>>> torch.Tensor([[1,2],[3,4]])
tensor([[1., 2.],
        [3., 4.]])
>>> torch.FloatTensor([[1,2],[3,4]])
tensor([[1., 2.],
        [3., 4.]])

from numpy

>>> torch.FloatTensor(np.array([[1,2],[3,4]]))
tensor([[1., 2.],
        [3., 4.]])
>>> torch.FloatTensor(np.ones([2,3]))
tensor([[1., 1., 1.],
        [1., 1., 1.]])

uninitialized

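- torch.empty()

- torch.FloatTensor(d1,d2,d3)

Note: this is NOT the same as torch.FloatTensor([1,2]). Passing a list supplies the data, so torch.FloatTensor([1,2]) is equivalent to torch.tensor([1.,2.]).

- torch.IntTensor(d1,d2,d3)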

The contents are whatever was already in memory, so the values differ from run to run:

>>> torch.empty(1)
tensor([0.])
>>> torch.empty(0,1)
tensor([], size=(0, 1))
>>> torch.Tensor(2,3)
tensor([[1., 1., 1.],
        [1., 1., 1.]])
>>> torch.IntTensor(2,3)
tensor([[1065353216, 1065353216, 1065353216],
        [1065353216, 1065353216, 1065353216]], dtype=torch.int32)
>>> torch.FloatTensor(2,3)
tensor([[1., 1., 1.],
        [1., 1., 1.]])
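The difference between passing sizes and passing a list is easy to miss, so here is a short sketch of both forms next to `torch.empty`. It is an illustration only; the variable names are arbitrary.

```python
import torch

# Sizes as arguments: allocates a 2x3 tensor with arbitrary contents.
shaped = torch.FloatTensor(2, 3)

# A list as argument: the list is the data, giving 2 elements.
from_data = torch.FloatTensor([2, 3])

# torch.empty always treats its arguments as a shape.
empty = torch.empty(2, 3)

# Overwrite uninitialized memory before reading it.
empty.fill_(0.0)

print(shaped.shape, from_data.shape, empty.shape)
```

Because uninitialized tensors may contain very large values or NaN, they are best used only as buffers that get fully overwritten.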

- set default type

>>> import torch
>>> torch.set_default_tensor_type(torch.DoubleTensor)
>>> torch.FloatTensor([1.1,2]).type()
'torch.FloatTensor'
>>> torch.Tensor([1.1,2]).type()
'torch.DoubleTensor'
>>> torch.set_default_tensor_type(torch.FloatTensor)
>>> torch.Tensor([1.1,2]).type()
'torch.FloatTensor'
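Recent PyTorch releases mark `torch.set_default_tensor_type` as deprecated and point to `torch.set_default_dtype` instead. A minimal sketch of the same experiment with that call, assuming a PyTorch version that provides both `torch.get_default_dtype` and `torch.set_default_dtype`:

```python
import torch

original = torch.get_default_dtype()   # normally torch.float32

torch.set_default_dtype(torch.float64)
x = torch.tensor([1.1, 2.0])            # Python floats follow the default dtype
print(x.dtype)                          # torch.float64

# Restore the previous default so later code is unaffected.
torch.set_default_dtype(original)
y = torch.tensor([1.1, 2.0])
print(y.dtype)
```

Restoring the saved value matters in notebooks and test suites, where a changed default silently affects every tensor created afterwards.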

rand/rand_like, randint

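rand samples uniformly from [0, 1); randn samples from the standard normal (Gaussian) distribution with mean 0 and standard deviation 1.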

>>> torch.rand(2,3)
tensor([[0.0637, 0.9635, 0.6905],
        [0.3561, 0.7971, 0.9637]])
>>> torch.randn(2,3)
tensor([[-0.0682,  0.3351,  0.2154],
        [ 1.2318, -0.3260,  1.0314]])

torch.randint(low=0, high, size, *, generator=None, out=None, dtype=None, layout=torch.strided, device=None, requires_grad=False)

randint returns integers drawn uniformly from [low, high), so high itself is never produced.
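A short sketch of `randint` and the `*_like` variants named in the heading. The bounds and shapes are arbitrary example values.

```python
import torch

# Integers drawn uniformly from [1, 10): 10 itself is never returned.
r = torch.randint(1, 10, (3, 3))
print(r.dtype, r.min().item() >= 1, r.max().item() < 10)

# rand_like / randn_like reuse the shape and dtype of an existing tensor.
a = torch.zeros(2, 3)
print(torch.rand_like(a).shape)    # torch.Size([2, 3])
print(torch.randn_like(a).shape)   # torch.Size([2, 3])
```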

normal

torch.normal draws each element from its own normal distribution, given a per-element mean and std. The fill value is written as 0. because recent PyTorch versions infer an integer dtype from an integer fill value.

>>> torch.full([10],0.)
tensor([0., 0., 0., 0., 0., 0., 0., 0., 0., 0.])
>>> t=torch.normal(mean=torch.full([10],0.),std=torch.arange(1,0,-0.1))
>>> t
tensor([ 0.7669, -0.4030,  0.2255, -0.7768, -0.5819, -0.4236, -0.5407, -0.0523,
        -0.0868,  0.0574])
>>> t.sum()
tensor(-1.8154)
>>> t=torch.normal(mean=20,std=torch.arange(1,0,-0.1))
>>> t
tensor([21.3688, 19.3070, 21.3453, 19.9982, 19.1107, 20.0866, 20.4991, 20.1489,
        20.2040, 20.0152])
>>> t.sum()
tensor(202.0836)
>>> t.std()
tensor(0.7334)

full

>>> torch.full([2,3],2.)
tensor([[2., 2., 2.],
        [2., 2., 2.]])
>>> torch.full([2],2.)
tensor([2., 2.])
>>> torch.full([],2.)
tensor(2.)

arange

>>> torch.arange(5)
tensor([0, 1, 2, 3, 4])
>>> torch.arange(1,5)
tensor([1, 2, 3, 4])
>>> torch.arange(1,5,2)
tensor([1, 3])

linspace/logspace

In older PyTorch releases steps defaulted to 100; newer releases require it to be passed explicitly:

>>> torch.linspace(1,5,steps=100)

tensor([1.0000, 1.0404, 1.0808, 1.1212, 1.1616, 1.2020, 1.2424, 1.2828, 1.3232, 1.3636, 1.4040, 1.4444, 1.4848, 1.5253, 1.5657, 1.6061, 1.6465, 1.6869, 1.7273, 1.7677, 1.8081, 1.8485, 1.8889, 1.9293, 1.9697, 2.0101, 2.0505, 2.0909, 2.1313, 2.1717, 2.2121, 2.2525, 2.2929, 2.3333, 2.3737, 2.4141, 2.4545, 2.4949, 2.5354, 2.5758, 2.6162, 2.6566, 2.6970, 2.7374, 2.7778, 2.8182, 2.8586, 2.8990, 2.9394, 2.9798, 3.0202, 3.0606, 3.1010, 3.1414, 3.1818, 3.2222, 3.2626, 3.3030, 3.3434, 3.3838, 3.4242, 3.4646, 3.5051, 3.5455, 3.5859, 3.6263, 3.6667, 3.7071, 3.7475, 3.7879, 3.8283, 3.8687, 3.9091, 3.9495, 3.9899, 4.0303, 4.0707, 4.1111, 4.1515, 4.1919, 4.2323, 4.2727, 4.3131, 4.3535, 4.3939, 4.4343, 4.4747, 4.5152, 4.5556, 4.5960, 4.6364, 4.6768, 4.7172, 4.7576, 4.7980, 4.8384, 4.8788, 4.9192, 4.9596, 5.0000])

Other examples:

>>> torch.linspace(1,-1,steps=5)

tensor([ 1.0000, 0.5000, 0.0000, -0.5000, -1.0000])

>>> torch.logspace(0,1,steps=5)

tensor([ 1.0000, 1.7783, 3.1623, 5.6234, 10.0000])
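The relationship between the two functions can be checked directly: `logspace` returns `base ** linspace(start, end, steps)`. A small sketch with example values chosen so the results are exact:

```python
import torch

# 5 evenly spaced points from 0 to 1, both ends included.
lin = torch.linspace(0, 1, steps=5)
print(lin)   # values: 0, 0.25, 0.5, 0.75, 1

# logspace returns base ** linspace(start, end, steps); base defaults to 10.
log2 = torch.logspace(0, 3, steps=4, base=2)
print(log2)  # values: 1, 2, 4, 8
```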

Ones/zeros/eye

>>> torch.ones(2,3)
tensor([[1., 1., 1.],
        [1., 1., 1.]])
>>> torch.zeros(2,3)
tensor([[0., 0., 0.],
        [0., 0., 0.]])
>>> torch.eye(3,3)
tensor([[1., 0., 0.],
        [0., 1., 0.],
        [0., 0., 1.]])
>>> torch.eye(2,3)
tensor([[1., 0., 0.],
        [0., 1., 0.]])

randperm

>>> a=torch.rand(3,3)
>>> a
tensor([[0.9763, 0.3293, 0.3892],
        [0.4935, 0.8954, 0.3332],
        [0.8529, 0.9512, 0.4607]])
>>> rnd=torch.randperm(3)
>>> rnd
tensor([2, 1, 0])
>>> rnd=torch.randperm(3)
>>> rnd
tensor([1, 2, 0])
>>> a[rnd]
tensor([[0.4935, 0.8954, 0.3332],
        [0.8529, 0.9512, 0.4607],
        [0.9763, 0.3293, 0.3892]])
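A common use of `randperm` is shuffling two tensors with the same permutation so that paired rows stay aligned. The sketch below uses made-up `features` and `labels` tensors whose contents make the pairing easy to verify.

```python
import torch

features = torch.arange(12.0).reshape(4, 3)   # 4 samples, 3 features each
labels = torch.arange(4)                      # label i belongs to row i

# One permutation, applied to both tensors, keeps every pair aligned.
perm = torch.randperm(features.size(0))
shuffled_features = features[perm]
shuffled_labels = labels[perm]

# Row i starts with 3 * label, so the pairing can be checked directly.
print(torch.equal(shuffled_features[:, 0], shuffled_labels * 3.0))  # True
```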

Indexing and slicing

Indexing

>>> a=torch.rand(3,4,28,28)
>>> a.shape
torch.Size([3, 4, 28, 28])
>>> a.shape[0]
3
>>> a[1].shape
torch.Size([4, 28, 28])
>>> a[1,2].shape
torch.Size([28, 28])
>>> a[1][2].shape
torch.Size([28, 28])

select first/last N and select by steps

>>> a.shape
torch.Size([3, 4, 28, 28])
>>> a[:2].shape
torch.Size([2, 4, 28, 28])
>>> a[::2].shape
torch.Size([2, 4, 28, 28])
>>> a[1,:,::4,::2].shape
torch.Size([4, 7, 14])
>>> a[:2,:,::4,::2].shape
torch.Size([2, 4, 7, 14])

Using ... (Ellipsis), which stands for all the dimensions not written out

>>> a[...,1].shape
torch.Size([3, 4, 28])
>>> a[1,...,1].shape
torch.Size([4, 28])
>>> a[1,...].shape
torch.Size([4, 28, 28])

select by mask

>>> a=torch.randn(3,4)
>>> a
tensor([[-0.7844,  0.3613,  0.9439,  1.1414],
        [-0.1439, -0.0619, -0.0114,  1.4134],
        [ 0.2690, -0.2971, -1.2936,  1.1307]])
>>> a.ge(0)
tensor([[False,  True,  True,  True],
        [False, False, False,  True],
        [ True, False, False,  True]])
>>> bol=a.ge(0)
>>> torch.masked_select(a,bol)
tensor([0.3613, 0.9439, 1.1414, 1.4134, 0.2690, 1.1307])

select by flattened index

>>> a
tensor([[-0.7844,  0.3613,  0.9439,  1.1414],
        [-0.1439, -0.0619, -0.0114,  1.4134],
        [ 0.2690, -0.2971, -1.2936,  1.1307]])
>>> torch.take(a,torch.tensor([0,1]))
tensor([-0.7844,  0.3613])
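Both selection styles have a plain indexing equivalent, which the following sketch compares on a small hand-written tensor (the values are chosen only so the results are easy to read):

```python
import torch

a = torch.tensor([[-1.0, 2.0, 3.0],
                  [4.0, -5.0, 6.0]])

# Boolean indexing gives the same 1-D result as torch.masked_select.
mask = a >= 0
print(a[mask])                       # values: 2, 3, 4, 6
print(torch.masked_select(a, mask))  # same values

# torch.take indexes the tensor as if it were flattened row by row.
idx = torch.tensor([0, 4, 5])
print(torch.take(a, idx))            # values: -1, -5, 6
print(a.flatten()[idx])              # same values
```

In both cases the result is 1-D, because the selected elements no longer form a regular grid.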

Tensor dimension transformations

- View/reshape

- Squeeze/unsqueeze

- Transpose/t/permute

- Expand/repeat

View / reshape: changing the shape

>>> t=torch.rand(2,2,3,3) >>> t tensor([[[[0.2984, 0.2226, 0.2581], [0.3265, 0.1259, 0.9507], [0.8006, 0.4221, 0.2991]], [[0.3293, 0.4607, 0.9949], [0.8828, 0.2940, 0.9095], [0.1136, 0.3338, 0.3124]]], [[[0.3180, 0.0645, 0.3833], [0.3561, 0.6745, 0.5352], [0.8446, 0.2850, 0.1432]], [[0.2941, 0.7898, 0.5875], [0.2199, 0.1547, 0.8705], [0.9739, 0.8842, 0.9073]]]]) >>> t1=t.view(2,2*3*3) >>> t1 tensor([[0.2984, 0.2226, 0.2581, 0.3265, 0.1259, 0.9507, 0.8006, 0.4221, 0.2991, 0.3293, 0.4607, 0.9949, 0.8828, 0.2940, 0.9095, 0.1136, 0.3338, 0.3124], [0.3180, 0.0645, 0.3833, 0.3561, 0.6745, 0.5352, 0.8446, 0.2850, 0.1432, 0.2941, 0.7898, 0.5875, 0.2199, 0.1547, 0.8705, 0.9739, 0.8842, 0.9073]])

Reducing the number of dimensions

>>> t1=t.reshape(2,3*3*2) >>> t1 tensor([[0.2984, 0.2226, 0.2581, 0.3265, 0.1259, 0.9507, 0.8006, 0.4221, 0.2991, 0.3293, 0.4607, 0.9949, 0.8828, 0.2940, 0.9095, 0.1136, 0.3338, 0.3124], [0.3180, 0.0645, 0.3833, 0.3561, 0.6745, 0.5352, 0.8446, 0.2850, 0.1432, 0.2941, 0.7898, 0.5875, 0.2199, 0.1547, 0.8705, 0.9739, 0.8842, 0.9073]])

Increasing the number of dimensions

>>> t1.shape torch.Size([2, 18]) >>> t1.reshape(2,3,6) tensor([[[0.2984, 0.2226, 0.2581, 0.3265, 0.1259, 0.9507], [0.8006, 0.4221, 0.2991, 0.3293, 0.4607, 0.9949], [0.8828, 0.2940, 0.9095, 0.1136, 0.3338, 0.3124]], [[0.3180, 0.0645, 0.3833, 0.3561, 0.6745, 0.5352], [0.8446, 0.2850, 0.1432, 0.2941, 0.7898, 0.5875], [0.2199, 0.1547, 0.8705, 0.9739, 0.8842, 0.9073]]]) >>> t1.view(2,3,6) tensor([[[0.2984, 0.2226, 0.2581, 0.3265, 0.1259, 0.9507], [0.8006, 0.4221, 0.2991, 0.3293, 0.4607, 0.9949], [0.8828, 0.2940, 0.9095, 0.1136, 0.3338, 0.3124]], [[0.3180, 0.0645, 0.3833, 0.3561, 0.6745, 0.5352], [0.8446, 0.2850, 0.1432, 0.2941, 0.7898, 0.5875], [0.2199, 0.1547, 0.8705, 0.9739, 0.8842, 0.9073]]])

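What does view(-1, xx, xx) mean?

If you do not know what size a dimension should have but you know the sizes of the others, you can set that dimension to -1 (this works for tensors with any number of dimensions, but only one axis can be -1).

PyTorch then computes the appropriate size for that dimension from the other sizes and the number of elements in the original tensor.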

>>> a=torch.randn(3,4,28,28)
>>> a.shape
torch.Size([3, 4, 28, 28])
>>> a.view(-1,28,28).shape
torch.Size([12, 28, 28])
>>> a.view(12,-1,4).shape
torch.Size([12, 196, 4])
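The -1 inference is simple arithmetic, and it also helps show where `view` and `reshape` differ. The sketch below is an illustration under the assumption that the tensor has been transposed first, which makes it non-contiguous:

```python
import torch

a = torch.randn(3, 4, 28, 28)

# -1 is inferred as numel / product of the other sizes: 9408 / (28*28) = 12.
flat = a.view(-1, 28 * 28)
print(flat.shape)            # torch.Size([12, 784])

# A transposed tensor is no longer contiguous, so view cannot reinterpret it.
t = a.transpose(1, 3)
try:
    t.view(3, -1)
except RuntimeError as err:
    print("view failed:", type(err).__name__)

# reshape copies when it has to; contiguous().view(...) is the explicit form.
print(t.reshape(3, -1).shape)             # torch.Size([3, 3136])
print(t.contiguous().view(3, -1).shape)   # torch.Size([3, 3136])
```

For contiguous tensors, as in the examples above, `view` and `reshape` give the same result.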

unsqueeze

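With a non-negative index, a new dimension of size 1 is inserted before that position; with a negative index, it is inserted after that position.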

>>> a=torch.randn(2,1,2,3) >>> a tensor([[[[ 0.4931, -0.4090, 1.1138], [-0.2826, 0.2546, 2.6305]]], [[[ 0.0871, -0.7915, 0.7440], [ 0.3542, -0.1926, 0.0917]]]]) >>> a.shape torch.Size([2, 1, 2, 3]) >>> a.unsqueeze(0) tensor([[[[[ 0.4931, -0.4090, 1.1138], [-0.2826, 0.2546, 2.6305]]], [[[ 0.0871, -0.7915, 0.7440], [ 0.3542, -0.1926, 0.0917]]]]]) >>> a.unsqueeze(0).shape torch.Size([1, 2, 1, 2, 3]) >>> a.unsqueeze(-1).shape torch.Size([2, 1, 2, 3, 1]) >>> a.unsqueeze(4).shape torch.Size([2, 1, 2, 3, 1]) >>> a.unsqueeze(3).shape torch.Size([2, 1, 2, 1, 3])

squeeze

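Removes one dimension whose size is 1. If the selected dimension's size is not 1, the tensor is returned unchanged.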

>>> a.shape torch.Size([2, 1, 2, 3]) >>> a.squeeze(0) tensor([[[[ 0.4931, -0.4090, 1.1138], [-0.2826, 0.2546, 2.6305]]], [[[ 0.0871, -0.7915, 0.7440], [ 0.3542, -0.1926, 0.0917]]]]) >>> a.squeeze(0).shape torch.Size([2, 1, 2, 3]) >>> a.squeeze(1).shape torch.Size([2, 2, 3]) >>> a.squeeze(2).shape torch.Size([2, 1, 2, 3])

Expand / repeat

▪ Expand: broadcasting

▪ Repeat: memory copied

>>> a=torch.rand(2,1,1,1)
>>> a
tensor([[[[0.4614]]],
        [[[0.6026]]]])
>>> a.expand(2,2,1,1)
tensor([[[[0.4614]],
         [[0.4614]]],
        [[[0.6026]],
         [[0.6026]]]])
>>> a.repeat(2,2,1,1).shape
torch.Size([4, 2, 1, 1])
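The "broadcasting versus memory copied" distinction can be observed through strides and data pointers. This is a rough sketch on a small 2-D tensor; the shapes are example values.

```python
import torch

a = torch.rand(2, 1)

e = a.expand(2, 4)   # view: no new memory
r = a.repeat(1, 4)   # copy: dimensions are tiled 1x and 4x

print(e.shape, r.shape)                 # both torch.Size([2, 4])
print(e.stride())                       # the expanded dim has stride 0
print(e.data_ptr() == a.data_ptr())     # True, same storage
print(r.data_ptr() == a.data_ptr())     # False, separate storage
```

Note that the arguments mean different things: `expand` takes the target sizes, while `repeat` takes the number of repetitions per dimension, which is why `a.repeat(2,2,1,1)` on a `[2, 1, 1, 1]` tensor gives `[4, 2, 1, 1]`.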

Transpose

- Swaps two dimensions of a tensor

>>> a.shape
torch.Size([2, 1, 1, 1])
>>> a.transpose(1,0).shape
torch.Size([1, 2, 1, 1])

permute

- Reorders several dimensions at once

>>> a=torch.rand(2,3,4,5)
>>> a.shape
torch.Size([2, 3, 4, 5])
>>> a.permute(1,0,3,2).shape
torch.Size([3, 2, 5, 4])

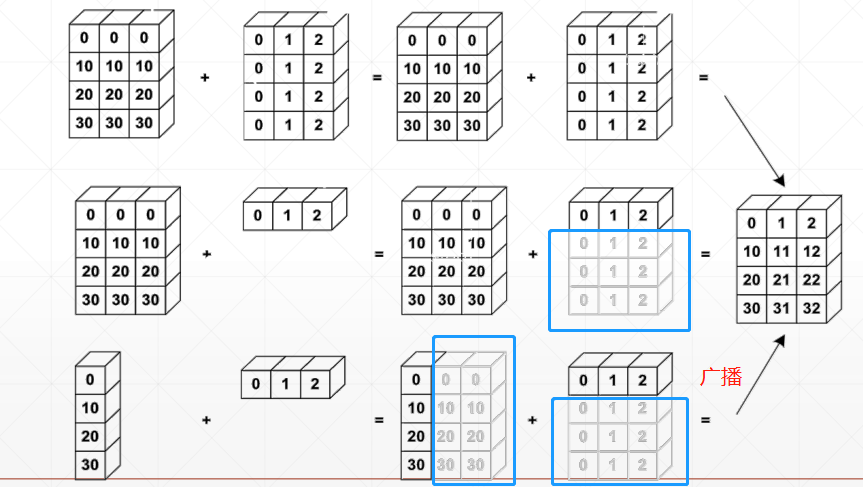

Broadcasting (automatic expansion)

▪ Expand

▪ without copying data

>>> a=torch.rand(2,1,2)
>>> a
tensor([[[0.0344, 0.1296]],
        [[0.0615, 0.0299]]])
>>> b=torch.rand(1)
>>> b
tensor([0.4872])
>>> a+b
tensor([[[0.5216, 0.6168]],
        [[0.5487, 0.5171]]])
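Broadcasting aligns shapes starting from the last dimension, which matters when the value to add belongs to a middle dimension. A sketch with a hypothetical per-channel bias on an image batch:

```python
import torch

feature_map = torch.zeros(4, 3, 28, 28)   # [batch, channel, height, width]
bias = torch.tensor([0.1, 0.2, 0.3])      # one value per channel

# Shapes are aligned from the last dimension, so [3] would be matched
# against width (28) and fail. Reshape to [1, 3, 1, 1] first.
out = feature_map + bias.view(1, 3, 1, 1)

print(out.shape)         # torch.Size([4, 3, 28, 28])
print(out[0, :, 0, 0])   # values: 0.1, 0.2, 0.3
```

No copy of `bias` is made for the 4 × 28 × 28 positions; the size-1 dimensions are expanded virtually.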

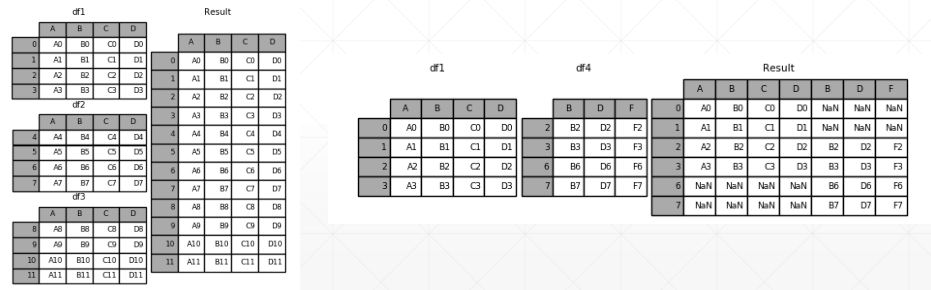

Concatenating and splitting tensors

- Cat

- Stack

- Split

- Chunk

cat

Concatenates tensors along the given dimension. All other dimensions must match, otherwise an error is raised.

>>> a=torch.rand(4,3,28,28)
>>> b=torch.rand(3,3,28,28)
>>> a+b
Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
RuntimeError: The size of tensor a (4) must match the size of tensor b (3) at non-singleton dimension 0
>>> ab0=torch.cat([a,b],dim=0)
>>> ab0.shape
torch.Size([7, 3, 28, 28])

Additional example

>>> a=torch.rand(1,10) >>> b=torch.rand(1,10) >>> ab0=torch.cat([a,b],dim=0) >>> ab0 tensor([[0.3638, 0.7604, 0.3703, 0.7216, 0.6735, 0.7905, 0.5711, 0.5189, 0.1007, 0.5366], [0.0569, 0.5928, 0.3540, 0.7903, 0.3928, 0.6856, 0.9303,0.4865, 0.7366, 0.8823]]) >>> ab1=torch.cat([a,b],dim=1) >>> ab1 tensor([[0.3638, 0.7604, 0.3703, 0.7216, 0.6735, 0.7905, 0.5711, 0.5189, 0.1007, 0.5366, 0.0569, 0.5928, 0.3540, 0.7903, 0.3928, 0.6856, 0.9303, 0.4865, 0.7366, 0.8823]])

>>> ab2=torch.cat([a,b],dim=2)
Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
IndexError: Dimension out of range (expected to be in range of [-2, 1], but got 2)

stack

stack also joins tensors, but it creates a new dimension that distinguishes the tensors being merged. All inputs must have exactly the same shape.

>>> ab0=torch.stack([a,b],dim=0)
>>> ab0
tensor([[[0.3638, 0.7604, 0.3703, 0.7216, 0.6735, 0.7905, 0.5711, 0.5189, 0.1007, 0.5366]],
        [[0.0569, 0.5928, 0.3540, 0.7903, 0.3928, 0.6856, 0.9303, 0.4865, 0.7366, 0.8823]]])
>>> ab0.shape
torch.Size([2, 1, 10])
>>> ab1=torch.stack([a,b],dim=1)
>>> ab1.shape
torch.Size([1, 2, 10])
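One way to see the relationship between the two operations: `stack` behaves like `unsqueeze` on each input followed by `cat`. The sketch below checks this and contrasts the shape requirements; tensor `c` is an extra example input not used elsewhere in these notes.

```python
import torch

a = torch.rand(1, 10)
b = torch.rand(1, 10)

stacked = torch.stack([a, b], dim=0)                          # new dim -> [2, 1, 10]
via_cat = torch.cat([a.unsqueeze(0), b.unsqueeze(0)], dim=0)  # same result

print(stacked.shape)                  # torch.Size([2, 1, 10])
print(torch.equal(stacked, via_cat))  # True

# cat tolerates different sizes along the joined dim; stack does not.
c = torch.rand(3, 10)
print(torch.cat([a, c], dim=0).shape)  # torch.Size([4, 10])
try:
    torch.stack([a, c], dim=0)
except RuntimeError:
    print("stack needs identical shapes")
```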

Split

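Splits a tensor along a dimension.

torch.split(tensor, split_size_or_sections, dim=0)

When split_size_or_sections is an int, the tensor is cut into blocks of that size along dim. If the dimension size is an exact multiple, all blocks have the same size; otherwise the leftover elements form one final, smaller block.

When split_size_or_sections is a list, the tensor is cut into len(list) blocks whose sizes are given by the list entries. The entries must sum to the size of that dimension, otherwise an error is raised (note that this differs from the int case).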

>>> a=torch.rand(3,4,10)
>>> a1,a2=a.split([1,2],dim=0)
>>> a1.shape,a2.shape
(torch.Size([1, 4, 10]), torch.Size([2, 4, 10]))
>>> a1,a2=a.split(1,dim=0)
Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
ValueError: too many values to unpack (expected 2)
>>> a1,a2,a3=a.split(1,dim=0)
>>> a1.shape,a2.shape,a3.shape
(torch.Size([1, 4, 10]), torch.Size([1, 4, 10]), torch.Size([1, 4, 10]))

chunk

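Its basic usage is similar to torch.split(), except that the argument is the number of chunks rather than the size of each chunk.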

>>> a=torch.rand(3,4,10)
>>> a1,a2=a.chunk(2,dim=0)
>>> a1.shape,a2.shape
(torch.Size([2, 4, 10]), torch.Size([1, 4, 10]))
>>> a1,a2=a.chunk(3,dim=0)
Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
ValueError: too many values to unpack (expected 2)
>>> a1,a2,a3=a.chunk(3,dim=0)
>>> a1.shape,a2.shape,a3.shape
(torch.Size([1, 4, 10]), torch.Size([1, 4, 10]), torch.Size([1, 4, 10]))

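Differences:

(1) chunks can only be an int, while split_size_or_sections can also be a list.

(2) When the dimension size is not divisible by chunks, chunk uses blocks of size ceil(size / chunks) and makes the last block smaller, so it can return fewer blocks than requested. split with an int also leaves a smaller last block and does not raise an error; only split with a list raises an error, when the entries do not sum to the dimension size.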
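The block sizes produced by each call can be compared on a 1-D tensor. This is a small sketch with example sizes, not an exhaustive description of either function:

```python
import torch

x = torch.arange(10)

# split with an int: blocks of that size, the last one takes the remainder.
print([t.numel() for t in x.split(3)])        # [3, 3, 3, 1]

# split with a list: sizes are used as given and must sum to 10.
print([t.numel() for t in x.split([2, 8])])   # [2, 8]

# chunk: block size is ceil(10 / 3) = 4, the last block is smaller.
print([t.numel() for t in x.chunk(3)])        # [4, 4, 2]

# chunk may return fewer pieces than requested: ceil(5 / 4) = 2 -> 2, 2, 1.
print(len(torch.arange(5).chunk(4)))          # 3

try:
    x.split([2, 3])                           # sums to 5, not 10
except RuntimeError:
    print("split sizes must sum to the dimension size")
```

Because the number of returned pieces can vary, unpacking into a fixed number of names (as in `a1,a2=...` above) is only safe when the sizes are known in advance.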