1. Create a project: scrapy startproject quanshuwang

2. cd quanshuwang (change into the project directory on the command line)

scrapy genspider quanshu www.quanshuwang.com creates quanshu.py under spiders/ (usage: scrapy genspider <spider name> <domain>)

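3. Edit settings.py

USER_AGENT = 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/65.0.3325.181 Safari/537.36'
ROBOTSTXT_OBEY = False

To save items to a local file or to a database, also enable the pipelines:

ITEM_PIPELINES = {
    # Every class listed here must exist in pipelines.py; remove the entry
    # for any pipeline that is left commented out there.
    'quanshuwang.pipelines.QuanshuwangPipeline': 300,
    'quanshuwang.pipelines.MysqlSpider': 400,
}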
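The integers in ITEM_PIPELINES decide the order in which each item passes through the pipelines: Scrapy runs the lower values first. A minimal sketch of that ordering, using a plain dict and a sort rather than Scrapy itself (the helper name pipeline_order is made up for illustration):

```python
# Plain-Python illustration of pipeline ordering; this does not import Scrapy.
ITEM_PIPELINES = {
    'quanshuwang.pipelines.MysqlSpider': 400,
    'quanshuwang.pipelines.QuanshuwangPipeline': 300,
}


def pipeline_order(pipelines):
    """Return the pipeline paths sorted by priority, lowest value first."""
    return [path for path, _priority in sorted(pipelines.items(), key=lambda kv: kv[1])]


order = pipeline_order(ITEM_PIPELINES)
print(order)
```

With the values used here, an item would reach the file pipeline (300) before the MySQL pipeline (400).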

4. The spider file quanshu.py

# -*- coding: utf-8 -*-
import scrapy

from quanshuwang.items import QuanshuwangItem


class QuanshuSpider(scrapy.Spider):
    name = 'quanshu'
    # allowed_domains takes bare domain names, not full page URLs
    # allowed_domains = ['www.quanshuwang.com']
    start_urls = ['http://www.quanshuwang.com/list/0_1.html']

    def parse(self, response):
        try:
            # One <li> per book on the listing page
            li_list = response.xpath('//ul[@class="seeWell cf"]/li')
            for li in li_list:
                item = QuanshuwangItem()
                item['book_name'] = li.xpath('./span/a/text()').extract_first()
                item['author'] = li.xpath('./span/a[2]/text()').extract_first()
                # For long descriptions the page adds the two characters "更多" ("more"); these can be dropped
                item['desc'] = li.xpath('./span/em/text()').extract_first()
                yield item

            # Follow the "next page" link until there is none
            next_page = response.xpath('//a[@class="next"]/@href').extract_first()
            if next_page:
                # urljoin keeps absolute links as-is and resolves relative ones
                yield scrapy.Request(response.urljoin(next_page), callback=self.parse)
        except Exception as e:
            print(e)
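Two details of the spider can be tried out without Scrapy: tidying the extracted description and turning the next-page href into an absolute URL. The sketch below is only an illustration; the helper names clean_desc and absolute_url are hypothetical, and it assumes that the "更多" label, when present, sits at the end of the extracted string.

```python
from urllib.parse import urljoin

MORE_LABEL = '更多'  # the "more" label mentioned in the spider's comment


def clean_desc(desc):
    """Strip whitespace and a trailing "更多" label; return '' if nothing was extracted."""
    if desc is None:  # extract_first() returns None when the XPath matches nothing
        return ''
    desc = desc.strip()
    if desc.endswith(MORE_LABEL):
        desc = desc[:-len(MORE_LABEL)].rstrip()
    return desc


def absolute_url(page_url, href):
    """Resolve an href found on page_url; absolute hrefs come back unchanged."""
    return urljoin(page_url, href)


page = 'http://www.quanshuwang.com/list/0_1.html'
print(clean_desc('  A long description 更多 '))
print(absolute_url(page, '0_2.html'))
```

Inside a Scrapy callback, response.urljoin(href) does the same resolution against the URL of the current response.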

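5. items.py

book_name, author, and desc correspond to the columns of my database table. The class name must match the one the spider imports (QuanshuwangItem).

import scrapy


class QuanshuwangItem(scrapy.Item):
    # define the fields for your item here like:
    # name = scrapy.Field()
    book_name = scrapy.Field()
    author = scrapy.Field()
    desc = scrapy.Field()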
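The table definition itself is not shown, so the following is only a guess at a compatible schema, with the standard-library sqlite3 module standing in for MySQL. The column types and the auto-incrementing id are assumptions, inferred from the VALUES (null, ...) insert in the pipeline. Note that desc is a reserved word in SQL, so it has to be quoted wherever it is named as a column (double quotes here, backticks in MySQL).

```python
import sqlite3

# In-memory SQLite database used purely as a stand-in for the MySQL table.
conn = sqlite3.connect(':memory:')
conn.execute('''
    CREATE TABLE quanshuwang (
        id INTEGER PRIMARY KEY,   -- assumed key column, filled in when NULL is inserted
        book_name TEXT,
        author TEXT,
        "desc" TEXT               -- quoted because DESC is an SQL keyword
    )
''')

# A made-up item with the same three fields as the Scrapy item.
item = {'book_name': 'Example Title', 'author': 'Example Author', 'desc': 'Example description'}
conn.execute(
    'INSERT INTO quanshuwang VALUES (NULL, ?, ?, ?)',
    (item['book_name'], item['author'], item['desc']),
)
row = conn.execute('SELECT id, book_name, author, "desc" FROM quanshuwang').fetchone()
print(row)
conn.close()
```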

6. pipelines.py (item pipelines)

# -*- coding: utf-8 -*-

# Define your item pipelines here
#
# Don't forget to add your pipeline to the ITEM_PIPELINES setting
# See: https://doc.scrapy.org/en/latest/topics/item-pipeline.html

# Save the data to a local text file (left commented out here)
# class QuanshuwangPipeline(object):
#     f = None
#
#     def open_spider(self, spider):
#         self.f = open('book_list.txt', 'a+', encoding='utf-8')
#
#     def process_item(self, item, spider):
#         try:
#             print('Downloading %s ...' % item['book_name'])
#             book_name = item['book_name']
#             author = item['author']
#             desc = item['desc']
#             self.f.write('《' + book_name + '》' + '\n' + ' Author: ' + author + '\n' + ' Description: ' + desc.strip() + '\n')
#         except Exception as e:
#             print(e)
#         return item
#
#     def close_spider(self, spider):
#         self.f.close()

#将数据存储到mysql数据库中

import pymysql

class MysqlSpider(object):

conn = None

cursor = None

#开始爬虫

def open_spider(self,spider):

#连接数据库

self.conn = pymysql.connect(host='172.16.25.37',port=3306,user='root',password='root',db='scrapy')

# 获取游标指针

self.mycursor = self.conn.cursor()

#执行爬虫

def process_item(self,item,spider):

#sql语句

book_name = item['book_name']

author = item['author']

desc = item['desc']

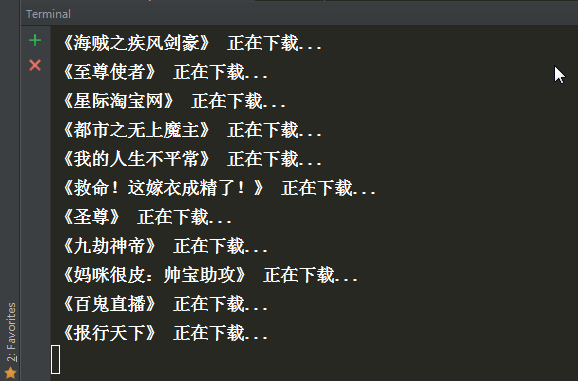

print('《'+book_name + '》'+' 正在下载...')

sql = "insert into quanshuwang VALUES (null,'%s','%s','%s')"%(book_name,author,desc)

try:

self.mycursor.execute(sql)

self.conn.commit()

# print(111)

except Exception as e:

print(e)

# print(222)

self.conn.rollback()

return item

#结束爬虫

def close_spider(self,spider):

#关闭游标和连接

self.mycursor.close()

self.conn.close()
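SQL assembled by string formatting breaks as soon as a title or description contains a quote character, and it is open to SQL injection; passing the values separately to execute() avoids both. Below is a minimal sketch of the commit-or-rollback insert, with sqlite3 standing in for MySQL so that it runs without a server. The table layout and the helper name save_book are assumptions, and sqlite3 writes placeholders as ? where pymysql uses %s.

```python
import sqlite3


def save_book(conn, book_name, author, desc):
    """Insert one row; commit on success, roll back on failure. Returns True if stored."""
    try:
        conn.execute('INSERT INTO quanshuwang VALUES (NULL, ?, ?, ?)', (book_name, author, desc))
        conn.commit()
        return True
    except sqlite3.Error as e:
        print(e)
        conn.rollback()
        return False


conn = sqlite3.connect(':memory:')
conn.execute('CREATE TABLE quanshuwang (id INTEGER PRIMARY KEY, book_name TEXT, author TEXT, "desc" TEXT)')

tricky = "It's a title with 'quotes'"

# String formatting yields invalid SQL for this value ...
naive_sql = "INSERT INTO quanshuwang VALUES (NULL, '%s', '%s', '%s')" % (tricky, 'a', 'd')
try:
    conn.execute(naive_sql)
    naive_failed = False
except sqlite3.Error:
    naive_failed = True

# ... while the parameterized version stores it unchanged (None becomes NULL).
ok = save_book(conn, tricky, 'Example Author', None)
stored = conn.execute('SELECT book_name, "desc" FROM quanshuwang').fetchone()
print(naive_failed, ok, stored)
conn.close()
```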

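To start the crawl from PyCharm rather than from a terminal, one common approach is a small launcher script that hands the same arguments to Scrapy's command-line entry point. The sketch below is an assumption about how that could look, not the setup used above: the helper names are hypothetical, and run_spider only works when called from the project root (the directory that contains scrapy.cfg) with Scrapy installed.

```python
def build_argv(spider_name):
    """Arguments equivalent to typing `scrapy crawl <spider_name>` in a shell."""
    return ['scrapy', 'crawl', spider_name]


def run_spider(spider_name):
    """Start the crawl in the current process; the process exits when the crawl ends."""
    # Imported here so this file can be loaded even where Scrapy is not installed.
    from scrapy import cmdline
    cmdline.execute(build_argv(spider_name))


argv = build_argv('quanshu')
print(' '.join(argv))
```

Adding a call to run_spider('quanshu') at the bottom of such a script and running it from the IDE would behave like scrapy crawl quanshu on the command line.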