1. 创建项目:

CMD下

scrapy startproject zhilianJob

然后 cd zhilianJob , 创建爬虫文件 job.py: scrapy genspider job xxx.com

2. settings.py 中:

USER_AGENT = 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/65.0.3325.181 Safari/537.36'

ROBOTSTXT_OBEY = False

ITEM_PIPELINES = {

'zhilianJob.pipelines.ZhilianjobPipeline': 300,

}

3. 爬虫文件job.py中:

# -*- coding: utf-8 -*-

import scrapy

import json

from zhilianJob.items import ZhilianjobItem

class JobSpider(scrapy.Spider):

name = 'job'

# allowed_domains = ['www.sou.zhaopin.com']

# start_urls可以简写成:https://fe-api.zhaopin.com/c/i/sou?pageSize=90&cityId=538&kw=python&kt=3

start_urls = [

'https://fe-api.zhaopin.com/c/i/sou?pageSize=90&cityId=538&salary=0,0&workExperience=-1&education=-1&companyType=-1&employmentType=-1&jobWelfareTag=-1&kw=python&kt=3&=0&_v=0.02964699&x-zp-page-request-id=3e524df5d2b541dcb5ddb82028a5c1b6-1565749700925-710042&x-zp-client-id=2724abb6-fb33-43a0-af2e-f177d8a3e169']

def parse(self, response):

# print(response.text)

data = json.loads(response.text)

job = data['data']['results']

# print(job)

try:

for j in job:

item = ZhilianjobItem()

item['job_name'] = j['jobName']

item['job_firm'] = j['company']['name']

item['job_firmPeople'] = j['company']['size']['name']

item['job_salary'] = j['salary']

item['job_type'] = j['jobType']['items'][0]['name']

item['job_yaoqiu'] = j['eduLevel']['name'] + ',' + j['workingExp']['name']

item['job_welfare'] = ','.join(j['welfare'])

yield item

except Exception as e:

print(e)

4. items.py中:

# -*- coding: utf-8 -*-

# Define here the models for your scraped items

#

# See documentation in:

# https://doc.scrapy.org/en/latest/topics/items.html

import scrapy

class ZhilianjobItem(scrapy.Item):

# define the fields for your item here like:

job_name = scrapy.Field() #工作名称

job_firm = scrapy.Field() #公司名称

job_firmPeople = scrapy.Field() #公司人数

job_type = scrapy.Field() #工作类型

job_salary = scrapy.Field() #薪水

job_yaoqiu = scrapy.Field() #工作要求

job_welfare = scrapy.Field() #福利

pass

5. 创建数据库,根据items中字段对应即可

6 . 管道文件pipelines.py:

import pymysql

class ZhilianjobPipeline(object):

conn = None

mycursor = None

def open_spider(self,spider):

self.conn = pymysql.connect(host='172.16.25.37',port=3306,user='root',password='root',db='scrapy')

# 获取游标

self.mycursor = self.conn.cursor()

print('正在清空之前的数据...')

# 我只打算要第一页的数据,所以每次爬取都是最新的,要把数据库里的之前的数据要清空

sql1 = "truncate table sh_python"

self.mycursor.execute(sql1)

print('已清空之前的数据,上海--python--第一页(90)...开始下载...')

def process_item(self, item, spider):

job_name = item['job_name']

job_firm = item['job_firm']

job_firmPeople = item['job_firmPeople']

job_salary = item['job_salary']

job_type = item['job_type']

job_yaoqiu = item['job_yaoqiu']

job_welfare = item['job_welfare']

try:

sql2 = "insert into sh_python VALUES (NULL ,'%s','%s','%s','%s','%s','%s','%s')"%(job_name,job_firm,job_firmPeople,job_salary,job_type,job_yaoqiu,job_welfare)

#执行sql

self.mycursor.execute(sql2)

#提交

self.conn.commit()

except Exception as e:

print(e)

self.conn.rollback()

return item

def close_spider(self,spider):

self.mycursor.close()

self.conn.close()

print('上海--python--第一页(90)...下载完毕...')

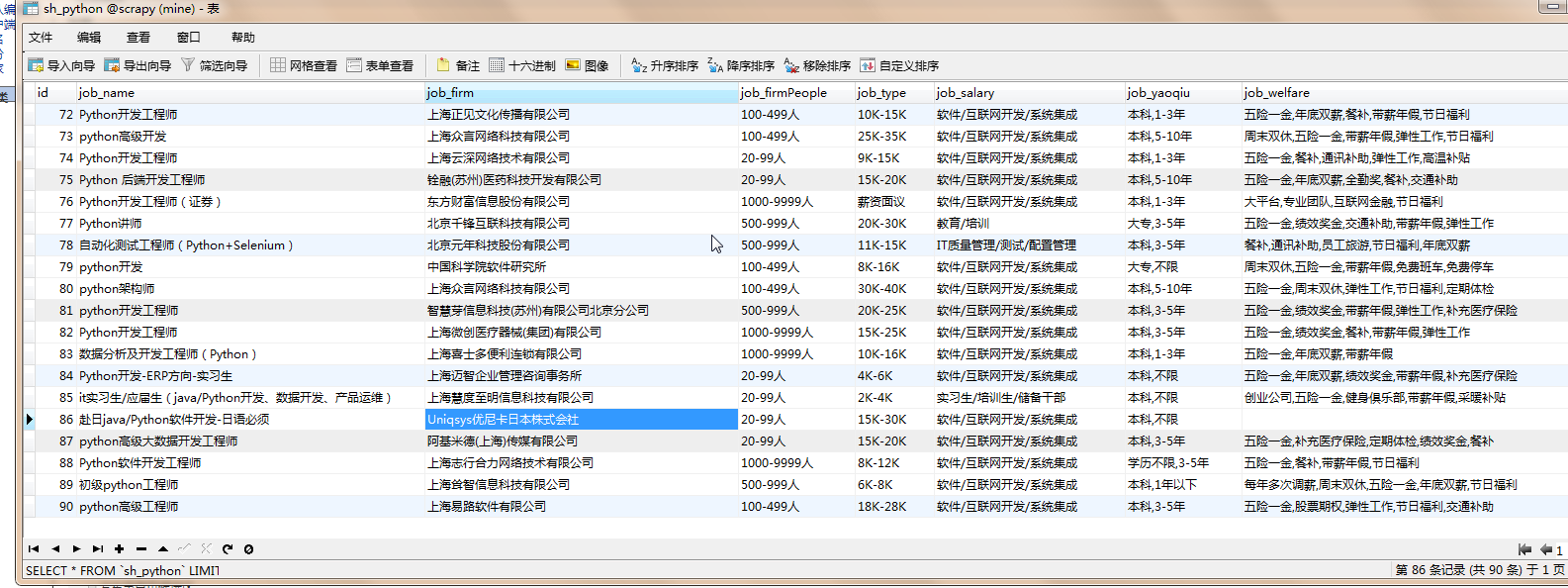

7. 看数据库是否有数据

成功。