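I. Requirements and background:

Scenarios:

1. Developers are not allowed to log in to production servers to read detailed logs.

2. Every system keeps its own logs, so log data is scattered and hard to find.

3. Log volume is large, so queries are slow or the data is not real-time enough.

4. A single call can span several systems, which makes it hard to quickly locate the relevant data across them.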

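II. ELK concepts:

Elasticsearch: the search engine; provides indexing and search.

Logstash: receives, processes, and forwards logs.

Kibana: a standalone, good-looking web UI for visualizing the data.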

III. Installation and configuration

1. Installing Elasticsearch

Install a Java environment first: version 1.8.0_20 (Java 8u20) or later.

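1. Install the Java environment

```bash
tar xf jdk-8u201-linux-x64.tar.gz
ln -s /root/jdk1.8.0_201 /root/jdk    # the tarball unpacks to jdk1.8.0_201; JAVA_HOME below uses /root/jdk
vim /etc/profile                      # add the two export lines below
export JAVA_HOME=/root/jdk
export PATH=$JAVA_HOME/bin:$PATH
source /etc/profile
```

2. Install Elasticsearch

```bash
wget https://download.elastic.co/elasticsearch/elasticsearch/elasticsearch-1.7.0.tar.gz
tar xf elasticsearch-1.7.0.tar.gz
ln -s /root/elasticsearch-1.7.0 /usr/local/elasticsearch   # absolute target: a relative one would be resolved inside /usr/local
```

3. Edit the configuration file (active settings, with their line numbers)

```
grep -n '^[a-z]' /usr/local/elasticsearch/config/elasticsearch.yml
32:cluster.name: elasticsearch     # change this: nodes with the same cluster.name join the same cluster
40:node.name: "stu02"              # change this: the name of this node
47:node.master: true
51:node.data: true
107:index.number_of_shards: 5
111:index.number_of_replicas: 1
145:path.conf: /usr/local/elasticsearch/config
149:path.data: /usr/local/elasticsearch/data
159:path.work: /usr/local/elasticsearch/work
163:path.logs: /usr/local/elasticsearch/logs
167:path.plugins: /usr/local/elasticsearch/plugins
184:bootstrap.mlockall: true
```

4. Start

```bash
/usr/local/elasticsearch/bin/elasticsearch -d   # -d = run as a daemon; JVM options such as -Xms512m -Xmx512m can be added
netstat -lnt | egrep '9200|9300'                # 9200 = HTTP API, 9300 = node-to-node transport
jps -lvm                                        # the Elasticsearch JVM should be listed
```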

Test:

```bash
curl 127.0.0.1:9200
curl -i -XGET http://192.168.4.16:9200
```
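Because `-d` returns immediately while the node may still be starting, a small polling loop can help in scripts. This is a minimal bash sketch, assuming `curl` is installed; the function name `wait_for_http` and its retry defaults are hypothetical, not part of Elasticsearch.

```bash
# Hypothetical helper: poll a URL until it answers HTTP 200 or the attempts run out.
# Usage: wait_for_http URL [attempts] [delay_seconds]
wait_for_http() {
  local url=$1 tries=${2:-30} delay=${3:-2} i code
  for (( i = 1; i <= tries; i++ )); do
    # -w prints only the status code; a refused connection yields "000"
    code=$(curl -s -o /dev/null -w '%{http_code}' "$url" 2>/dev/null)
    if [ "$code" = "200" ]; then
      echo "$url is up (attempt $i)"
      return 0
    fi
    # wait between attempts, but not after the last one
    if (( i < tries )); then sleep "$delay"; fi
  done
  echo "no HTTP 200 from $url after $tries attempt(s)" >&2
  return 1
}

# Example: wait_for_http http://127.0.0.1:9200 30 2 && curl 127.0.0.1:9200
```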

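Install Elasticsearch monitoring and management plugins

1. Offline plugin install

```bash
wget -P /tmp http://download.elasticsearch.org/elasticsearch/marvel/marvel-latest.zip   # save to /tmp, where the next command looks for it
/usr/local/elasticsearch/bin/plugin -i marvel -u file:///tmp/marvel-latest.zip
```

2. Online plugin install

```bash
/usr/local/elasticsearch/bin/plugin -i elasticsearch/marvel/latest/
/usr/local/elasticsearch/bin/plugin -i mobz/elasticsearch-head   # 1.x syntax; "plugin install" is the 2.x form
```

3. Remove a plugin

```bash
/usr/local/elasticsearch/bin/plugin -r marvel
```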

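In production you may also need the following settings:

```bash
ulimit -n 64000                                      # open-file limit, reported by ES as max_file_descriptors (make it permanent in /etc/security/limits.conf)
echo 'vm.max_map_count=262144' >> /etc/sysctl.conf   # persist the setting
sysctl -w vm.max_map_count=262144                    # apply it now
```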
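To see whether a host already meets these values, the current limits can be compared against the targets. A minimal bash sketch; `check_limit` is a hypothetical helper and the thresholds are the ones used above.

```bash
# Hypothetical helper: compare a current limit with the required minimum.
# Prints OK or TOO LOW; returns 1 when the limit is too low.
check_limit() {
  local name=$1 current=$2 required=$3
  if [ "$current" = "unlimited" ] || [ "$current" -ge "$required" ]; then
    echo "$name: OK ($current >= $required)"
  else
    echo "$name: TOO LOW ($current < $required)"
    return 1
  fi
}

# Typical use on the Elasticsearch host (Linux):
#   check_limit "open files" "$(ulimit -n)" 64000
#   check_limit vm.max_map_count "$(cat /proc/sys/vm/max_map_count)" 262144
```

Once the node is running, `curl 'http://127.0.0.1:9200/_nodes/process?pretty'` should show the limit the process actually received as `max_file_descriptors`.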

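2. Installing Logstash

1. Install the Java environment

```bash
tar xf jdk-8u201-linux-x64.tar.gz
ln -s /root/jdk1.8.0_201 /root/jdk    # the tarball unpacks to jdk1.8.0_201; JAVA_HOME below uses /root/jdk
vim /etc/profile                      # add the two export lines below
export JAVA_HOME=/root/jdk
export PATH=$JAVA_HOME/bin:$PATH
source /etc/profile
```

2. Install Logstash

```bash
wget https://download.elastic.co/logstash/logstash/logstash-1.5.3.tar.gz
tar xf logstash-1.5.3.tar.gz
ln -s /root/logstash-1.5.3/ /usr/local/logstash
```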

Standard input to standard output

```bash
/usr/local/logstash/bin/logstash -e 'input { stdin{} } output { stdout{} }'
```

Use the rubydebug codec for more detailed output

```bash
/usr/local/logstash/bin/logstash -e 'input { stdin{} } output { stdout{codec => rubydebug} }'
```

Output to Elasticsearch

```bash
/usr/local/logstash/bin/logstash -e 'input { stdin{} } output { elasticsearch { host => "192.168.4.16" protocol => "http" } }'
```

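Read a log file and write it to /tmp

```bash
mkdir -p /etc/logstash/conf.d   # the tarball install does not create this directory
vim /etc/logstash/conf.d/logstash.conf
```

```
input {
  file {
    path => "/tmp/messages"
  }
}
output {
  file {
    path => "/tmp/log-%{+YYYY-MM-dd}messages.gz"
    gzip => true
  }
}
```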

Test the configuration file

```bash
/usr/local/logstash/bin/logstash -f /etc/logstash/conf.d/logstash.conf -t   # -t: check the syntax and exit
```

Start the service

```bash
/usr/local/logstash/bin/logstash -f /etc/logstash/conf.d/logstash.conf
```

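Test

```bash
cat /var/log/messages >> /tmp/messages   # append lines: by default the file input only picks up new lines
ll /tmp/log-2019-02-11messages.gz        # use the current date
```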
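The date in the file name comes from the event's @timestamp, which Logstash keeps in UTC; on a UTC+8 host, events between 00:00 and 08:00 local time still land in the previous day's file. A minimal bash sketch that computes the expected name the same way; `expected_log_file` is a hypothetical helper.

```bash
# Hypothetical helper: print the file name the output above should be writing today.
# Logstash's %{+YYYY-MM-dd} formats @timestamp in UTC, hence date -u.
expected_log_file() {
  printf '/tmp/log-%smessages.gz\n' "$(date -u +%Y-%m-%d)"
}

# Example: ls -l "$(expected_log_file)"
```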

Send the output to Elasticsearch as well

```bash
vim /etc/logstash/conf.d/logstash.conf
```

```
input {
  file {
    path => "/tmp/messages"
  }
}
output {
  file {
    path => "/tmp/log-%{+YYYY-MM-dd}.messages.gz"
    gzip => true
  }
  elasticsearch {
    host => ["192.168.4.16"]
    protocol => "http"
    index => "system-message-%{+YYYY.MM.dd}"
  }
}
```
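To confirm that events reached Elasticsearch without opening the browser, the `_cat/indices` and `_count` APIs can be queried from the shell. A minimal bash sketch; `today_index` is a hypothetical helper that builds the index name for the current UTC day, mirroring `%{+YYYY.MM.dd}`.

```bash
# Hypothetical helper: build "<prefix>-YYYY.MM.dd" for the current UTC day,
# the same way Logstash expands index => "<prefix>-%{+YYYY.MM.dd}".
today_index() {
  printf '%s-%s\n' "$1" "$(date -u +%Y.%m.%d)"
}

ES=http://192.168.4.16:9200   # Elasticsearch node used throughout this guide
# List the system-message indices, then count today's documents:
#   curl -s "$ES/_cat/indices?v" | grep system-message
#   curl -s "$ES/$(today_index system-message)/_count?pretty"
```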

View the result in the cluster management UI (the head plugin)

Open in a browser: http://192.168.4.16:9200/_plugin/head/

Send Logstash output to Redis

Configuration of the first Logstash instance (reads the file, pushes events to Redis):

```
input {
  file {
    path => "/tmp/messages"
  }
}
output {
  redis {
    data_type => "list"
    key => "system-message"
    host => "192.168.4.17"
    port => "6379"
    db => "0"
  }
}
```

Configuration of the second Logstash instance (reads from Redis, writes to Elasticsearch):

```
input {
  redis {
    data_type => "list"
    key => "system-message"
    host => "192.168.4.17"
    port => "6379"
    db => "0"
  }
}
output {
  elasticsearch {
    host => ["192.168.4.16"]
    protocol => "http"
    index => "redis-message-%{+YYYY.MM.dd}"
  }
}
```
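With Redis in the middle, the list length shows whether the second instance keeps up: if it keeps growing, nothing is consuming it. A minimal bash sketch using `redis-cli` (`-h`, `-p`, `-n` select host, port and database; `LLEN` returns the list length); `redis_backlog` and the 10000 threshold are hypothetical.

```bash
# Hypothetical helper: report how many events are waiting in a Redis list.
# Usage: redis_backlog HOST DB KEY [MAX]; returns 1 if the backlog exceeds MAX.
redis_backlog() {
  local host=$1 db=$2 key=$3 max=${4:-10000} len
  # redis-cli prints the bare number when its output is not a terminal
  len=$(redis-cli -h "$host" -p 6379 -n "$db" llen "$key") || return 2
  echo "$key: $len events queued"
  if [ "$len" -gt "$max" ]; then
    echo "warning: backlog above $max; is the second Logstash instance running?" >&2
    return 1
  fi
}

# Example: redis_backlog 192.168.4.17 0 system-message
```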

Logging in JSON format for Logstash

Install nginx and configure its access log in JSON format:

```nginx
# inside the http {} block of nginx.conf
# define a log format named logstash_json
log_format logstash_json '{"@timestamp":"$time_iso8601",'
                         '"host":"$server_addr",'
                         '"clientip":"$remote_addr",'
                         '"size":$body_bytes_sent,'
                         '"responsetime":$request_time,'
                         '"upstreamtime":"$upstream_response_time",'
                         '"upstreamhost":"$upstream_addr",'
                         '"http_host":"$host",'
                         '"url":"$uri",'
                         '"domain":"$host",'
                         '"xff":"$http_x_forwarded_for",'
                         '"referer":"$http_referer",'
                         '"agent":"$http_user_agent",'
                         '"status":"$status"}';
# log file path, using the logstash_json format defined above
access_log /var/log/nginx/json.access.log logstash_json;
```

Configure Logstash to read nginx's JSON log and write it to Redis

First instance:

```
input {
  file {
    path => "/var/log/nginx/json.access.log"   # the JSON-format log
    codec => "json"                            # parse each line as JSON
  }
}
output {
  redis {
    data_type => "list"
    key => "nginx-json-log"                    # Redis key for the nginx JSON log
    host => "192.168.4.17"
    port => "6379"
    db => "1"
  }
}
```

Second instance:

```
input {
  redis {
    data_type => "list"
    key => "nginx-json-log"
    host => "192.168.4.17"
    port => "6379"
    db => "1"
  }
}
output {
  elasticsearch {
    host => ["192.168.4.16"]
    protocol => "http"
    index => "nginx-json-log-%{+YYYY.MM.dd}"
  }
}
```

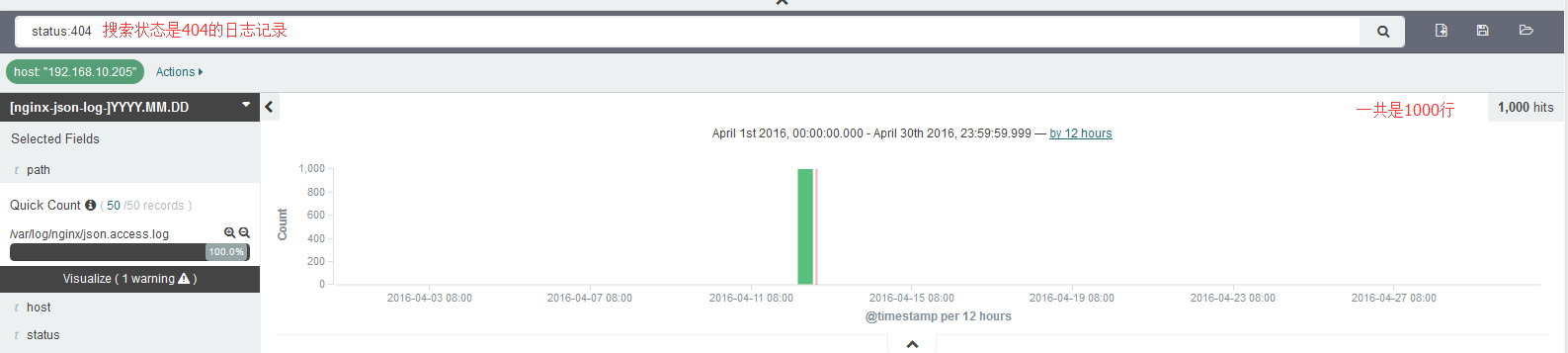

Generate test traffic to nginx with ab

```bash
ab -n1000 -c10 http://192.168.4.16:81/   # 1000 requests in total, 10 at a time (about 100 rounds); ab needs the trailing "/"
```
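Before looking in Elasticsearch it can help to confirm that nginx actually logged the requests. A minimal bash sketch, assuming it runs on the nginx host and the JSON log is not rotated during the run; `lines_added` is a hypothetical helper that runs a command and reports how many lines the file grew by.

```bash
# Hypothetical helper: run a command and print how many lines FILE gained meanwhile.
# Usage: lines_added FILE COMMAND [ARGS...]
lines_added() {
  local file=$1 before after
  shift
  before=$(wc -l < "$file")
  "$@" > /dev/null            # discard the command's own output (ab prints a report)
  after=$(wc -l < "$file")
  echo $(( after - before ))
}

# Example (expect 1000 if nothing else hits nginx meanwhile):
#   lines_added /var/log/nginx/json.access.log ab -n1000 -c10 http://192.168.4.16:81/
```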

Open in a browser to see the new index: http://192.168.4.16:9200/_plugin/head/

3. Installing Kibana

1. Install

```bash
wget https://download.elastic.co/kibana/kibana/kibana-4.1.1-linux-x64.tar.gz
tar xf kibana-4.1.1-linux-x64.tar.gz
ln -s /root/kibana-4.1.1-linux-x64/ /usr/local/kibana
```

2. Edit the configuration

```bash
vim /usr/local/kibana/config/kibana.yml
```

```yaml
elasticsearch_url: "http://192.168.4.16:9200"
pid_file: /var/run/kibana.pid
log_file: /usr/local/kibana/kibana.log
```

3. Start

```bash
/usr/local/kibana/bin/kibana           # run in the foreground
nohup /usr/local/kibana/bin/kibana &   # run in the background
```

Access test: Kibana listens on port 5601 by default.

Open in a browser: http://192.168.4.16:5601

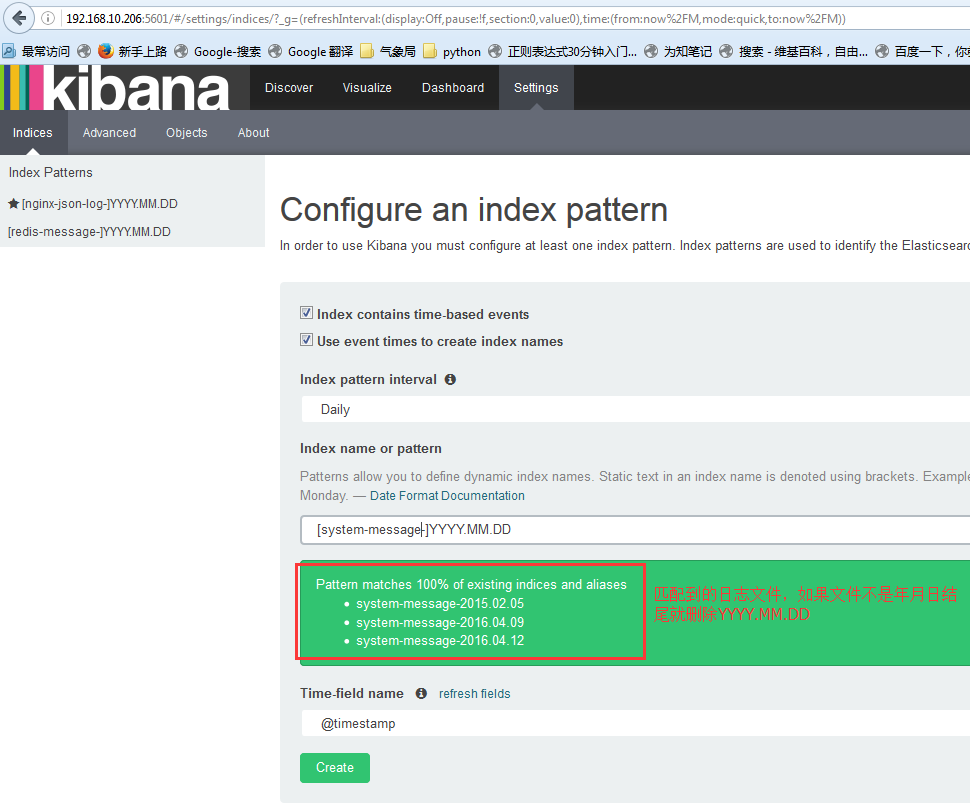

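Configure an index pattern: the pattern must match the names of the indices created by the Logstash output (for example `nginx-json-log-*` for the nginx indices above).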

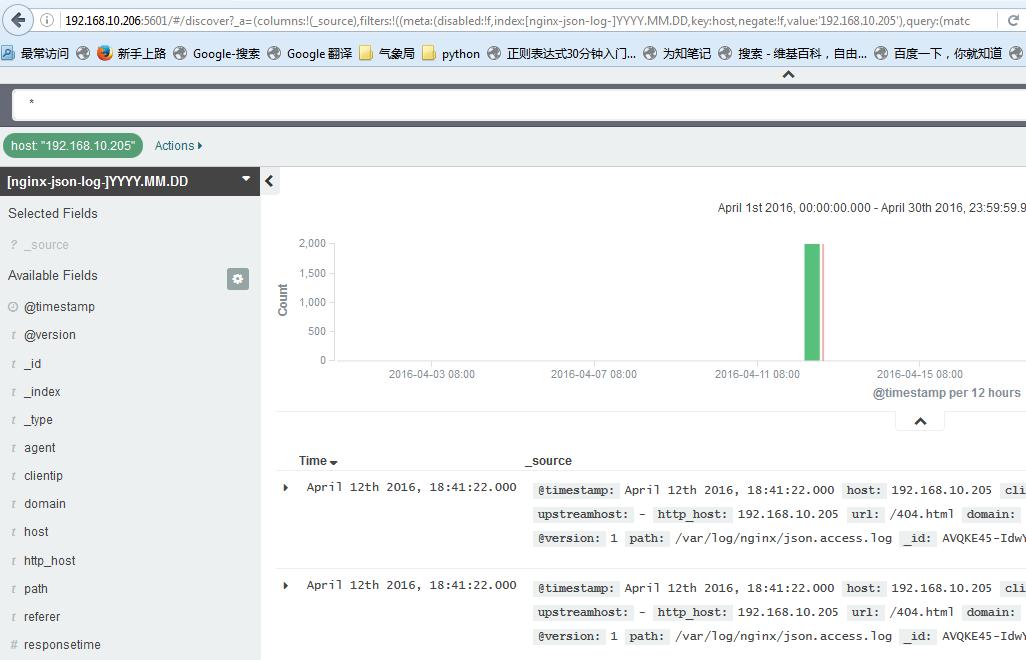

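View data: the Discover page shows the latest 500 documents by default.

Exact search:

Advanced search syntax:

```
status:404 OR status:500     # documents whose status is either 404 or 500
status:301 AND status:200    # documents matching both conditions (never true for a single-valued field such as status; AND is useful across different fields)
status:[200 TO 300]          # documents whose status is between 200 and 300, inclusive
```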
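The same Lucene query-string syntax can be sent to Elasticsearch's URI search (`_search?q=...`), which is handy for checking a query outside Kibana. The query must be URL-encoded; `curl -G --data-urlencode` does that for you, and the sketch below shows the encoding explicitly. `urlencode` and `es_search_url` are hypothetical bash helpers and handle ASCII input only.

```bash
# Hypothetical helper: percent-encode an ASCII string for use in a URL query.
urlencode() {
  local s=$1 out='' c i
  for (( i = 0; i < ${#s}; i++ )); do
    c=${s:i:1}
    case $c in
      [a-zA-Z0-9.~_-]) out+=$c ;;                 # unreserved characters stay as-is
      *) printf -v c '%%%02X' "'$c"; out+=$c ;;   # everything else becomes %XX
    esac
  done
  printf '%s\n' "$out"
}

# Hypothetical helper: build a URI-search URL for an index pattern and a query.
es_search_url() {
  printf '%s/%s/_search?q=%s\n' "$1" "$2" "$(urlencode "$3")"
}

# Examples:
#   curl -s "$(es_search_url http://192.168.4.16:9200 'nginx-json-log-*' 'status:[200 TO 300]')&pretty"
#   curl -sG 'http://192.168.4.16:9200/nginx-json-log-*/_search' --data-urlencode 'q=status:404 OR status:500'
```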

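Other common modules:

1. System log collection (syslog): configure a syslog input whose events are written to Elasticsearch, listening on port 514 (below 1024, so Logstash must run as root); the host is the IP address of the server that collects the logs.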

2. Access logs: have nginx write them in JSON format, as configured above.

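3. Error logs: use a codec plugin (multiline):

https://www.elastic.co/guide/en/logstash/1.5/codec-plugins.html

```
input {
  stdin {
    codec => multiline {     # multi-line logs, e.g. Java stack traces
      pattern => "^\s"       # a line that starts with whitespace...
      #pattern => ".* .*"    # (alternative pattern, commented out)
      what => "previous"     # ...is appended to the previous line, so the whole trace becomes one event
    }
  }
}
```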
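To see what `what => "previous"` does to a stack trace, the rule can be imitated outside Logstash. This awk-in-bash sketch only illustrates the joining logic (it is not how Logstash is implemented): lines starting with a space or tab are glued onto the previous event, shown with a literal `\n` the way rubydebug prints the message; `simulate_multiline` is a hypothetical name.

```bash
# Illustration only: mimic multiline { pattern => "^\s" what => "previous" }.
# Each output line is one event; continuation lines are joined with a literal "\n".
simulate_multiline() {
  awk '
    /^[ \t]/ && have { buf = buf "\\n" $0; next }   # continuation: append to the current event
    { if (have) print buf; buf = $0; have = 1 }     # new event: flush the previous one
    END { if (have) print buf }                     # flush the last event
  '
}

# Example:
#   printf 'java.lang.NullPointerException\n\tat Foo.bar(Foo.java:10)\nINFO next event\n' | simulate_multiline
```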

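4. Application logs: use codec => json. If the logs are not JSON, they have to be parsed with grok, which is considerably more work. If log events go missing, check logstash.log, and verify that the log lines are valid JSON.

JSON validator: http://www.bejson.com/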

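5. Kibana time zone issues: Kibana displays timestamps in the browser's time zone, so UTC values are shown 8 hours later in China (UTC+8). Events written by Logstash carry a UTC @timestamp, so they display correctly; data written by other means, such as Python scripts, shows up shifted when the script stores local times without a time zone.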
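When a script writes to Elasticsearch directly, the safest fix is to send @timestamp in UTC with an explicit zone, since a time without a zone is interpreted as UTC. A minimal bash sketch of producing such a value; `es_timestamp` is hypothetical and relies on GNU `date -d @EPOCH`.

```bash
# Hypothetical helper: ISO 8601 timestamp in UTC (trailing Z).
# Usage: es_timestamp [epoch_seconds]   (defaults to now; -d @N is GNU date syntax)
es_timestamp() {
  if [ $# -gt 0 ]; then
    date -u -d "@$1" +%Y-%m-%dT%H:%M:%SZ
  else
    date -u +%Y-%m-%dT%H:%M:%SZ
  fi
}

# The 8-hour shift: the same instant printed in UTC and in UTC+8 (POSIX TZ "CST-8").
#   es_timestamp 0                           -> 1970-01-01T00:00:00Z
#   TZ=CST-8 date -d @0 +%Y-%m-%dT%H:%M:%S   -> 1970-01-01T08:00:00
```

Storing the second value without a zone would make Elasticsearch treat it as 08:00 UTC, and Kibana in UTC+8 would then show 16:00.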

6. Show where client IPs come from on a map (geoip filter):

https://www.elastic.co/guide/en/logstash/1.5/filter-plugins.html
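The filter list above includes `geoip`, which looks up an IP field and adds a `geoip` object to the event. A minimal bash sketch that writes such a filter as a separate file, assuming the `clientip` field from the nginx JSON log and that Logstash is started with the whole directory (`-f /etc/logstash/conf.d/`), in which case the files are concatenated in name order; the file name `20-geoip.conf` and the helper `write_geoip_filter` are hypothetical. As far as we know, Kibana 4's tile map also needs `geoip.location` mapped as `geo_point`, which the default Logstash template only does for `logstash-*` indices.

```bash
# Hypothetical helper: drop a geoip filter into the Logstash config directory.
CONF_DIR=${CONF_DIR:-/etc/logstash/conf.d}

write_geoip_filter() {
  mkdir -p "$CONF_DIR"
  cat > "$CONF_DIR/20-geoip.conf" <<'EOF'
filter {
  geoip {
    source => "clientip"   # field that holds the client IP
  }
}
EOF
}

# Then, for example: write_geoip_filter && /usr/local/logstash/bin/logstash -f "$CONF_DIR/" -t
```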

7. Conditionals:

```
input {
  file {
    type => "apache"
    path => "/var/log/apache.log"
  }
  file {
    type => "tomcat"
    path => "/var/log/tomcat.log"
  }
}
output {
  if [type] == "apache" {        # events whose type is apache
    redis {
      data_type => "list"
      key => "system-message-jack"
      host => "192.168.10.205"
      port => "6379"
      db => "0"
    }
  }
  if [type] == "tomcat" {        # events whose type is tomcat
    redis {
      data_type => "list"
      key => "system-message-tomcat"
      host => "192.168.10.205"
      port => "6379"
      db => "1"                  # write to a different database
    }
  }
}
```

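Check that the Logstash configuration syntax is correct (this init script comes with the RPM/DEB package; with the tarball install above, use `bin/logstash -f <config> -t` instead):

```bash
/etc/init.d/logstash configtest
```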

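8. Define the Tomcat access log format as JSON (AccessLogValve in conf/server.xml; double quotes inside the attribute must be written as `&quot;`):

```xml
<Valve className="org.apache.catalina.valves.AccessLogValve"
       directory="logs" prefix="localhost_access_log." suffix=".log"
       pattern="{&quot;client&quot;:&quot;%h&quot;, &quot;client user&quot;:&quot;%l&quot;,
                &quot;authenticated&quot;:&quot;%u&quot;, &quot;access time&quot;:&quot;%t&quot;,
                &quot;method&quot;:&quot;%r&quot;, &quot;status&quot;:&quot;%s&quot;,
                &quot;send bytes&quot;:&quot;%b&quot;, &quot;Query string&quot;:&quot;%q&quot;,
                &quot;partner&quot;:&quot;%{Referer}i&quot;, &quot;Agent version&quot;:&quot;%{User-Agent}i&quot;}"/>
```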

The resulting log entry looks like this:

```json
{"client":"180.95.129.206", "client user":"-", "authenticated":"-", "access time":"[20/Apr/2016:03:47:40 +0000]", "method":"GET /image/android_logo.png HTTP/1.1", "status":"200", "send bytes":"1915", "Query string":"", "partner":"http://mobile.weathercn.com/index.do?id=101160101&partner=1000001003", "Agent version":"Mozilla/5.0 (Linux; U; Android 5.1.1; zh-cn; NX510J Build/LMY47V) AppleWebKit/537.36 (KHTML, like Gecko)Version/4.0 Chrome/37.0.0.0 MQQBrowser/6.6 Mobile Safari/537.36"}
```