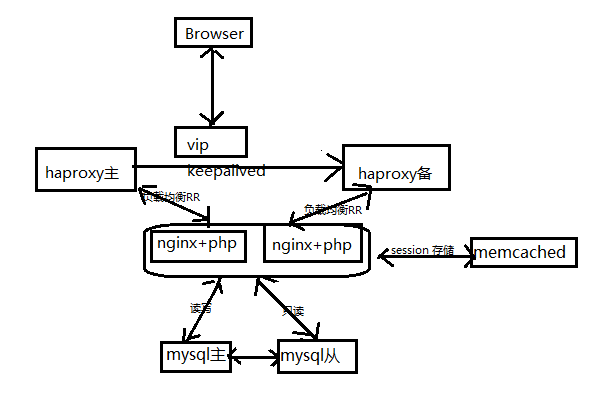

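Architecture diagram:

Configuration approach

(1) System initialization

The base environment holds the states that every system must run, such as tuning kernel parameters, configuring DNS, and installing zabbix-agent.

(2) Function modules (for example, the haproxy shown above)

Services such as haproxy, nginx, php, and memcached each get their own directory, and all the states a service needs are placed in that directory.

(3) Business modules

These are organized per business. A single business may include haproxy, nginx, php, and so on; whatever services it needs, it includes the corresponding states from the function modules.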

1. Edit the master configuration file to set file_roots, and create the corresponding directories
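A minimal sketch of the file_roots section in /etc/salt/master, assuming the two environments map to /srv/salt/base and /srv/salt/prod (these paths are inferred from the commands used later in this article, not taken from a listing of the file):

```yaml
# /etc/salt/master (fragment)
# Assumption: one directory per environment, matching the paths used later on.
file_roots:
  base:
    - /srv/salt/base    # system initialization states (init/...)
  prod:
    - /srv/salt/prod    # function and business modules (haproxy/, keepalived/, cluster/...)
```

The directories would then need to exist on the master, and salt-master has to be restarted for the change to take effect.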

2. System initialization module

(2) Show timestamps in the command history
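One way this could be written as a state is sketched below. The file name init/history.sls is an assumption based on the init.history entry in env_init.sls; the target file /etc/profile and the exact HISTTIMEFORMAT string are illustrative choices, not taken from the original state.

```yaml
# /srv/salt/base/init/history.sls (hypothetical content)
/etc/profile:
  file.append:
    - text:
      # %F %T prints date and time; `whoami` adds the current user to each entry
      - export HISTTIMEFORMAT="%F %T `whoami` "
```

file.append only adds the line if it is not already present, so the state can be applied repeatedly without duplicating it.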

(3) Log who ran which command and when
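A commonly used approach is to set PROMPT_COMMAND so that every command is passed to logger and ends up in the system log. The sketch below assumes the state lives in init/audit.sls (inferred from the init.audit entry in env_init.sls) and appends to /etc/bashrc; both the target file and the message format are assumptions.

```yaml
# /srv/salt/base/init/audit.sls (hypothetical content)
/etc/bashrc:
  file.append:
    - text:
      # After each command: take the last history entry and send it to syslog
      # together with the effective user, the login session, and the working directory.
      - export PROMPT_COMMAND='{ msg=$(history 1 | { read x y; echo $y; });logger "[euid=$(whoami)]":$(who am i):[`pwd`]"$msg"; }'
```

With this in place, the logged commands would appear in /var/log/messages on a default syslog setup.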

(4) Kernel tuning
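Kernel parameters can be managed with the sysctl.present state. The following is only a sketch: the file name init/sysctl.sls is inferred from the init.sysctl entry in env_init.sls, and the parameters and values are illustrative assumptions that should be adjusted to the actual workload.

```yaml
# /srv/salt/base/init/sysctl.sls (hypothetical content, example values)
vm.swappiness:
  sysctl.present:
    - value: 0                # avoid swapping as far as possible

net.ipv4.ip_local_port_range:
  sysctl.present:
    - value: 10000 65000      # widen the range of local ports

fs.file-max:
  sysctl.present:
    - value: 100000           # raise the system-wide open file limit
```

Each state ID doubles as the parameter name, so no separate name argument is needed.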

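(5) Include the states above in env_init.sls

(6) Write top.sls to run the states above

(7) Note: some of the commands used in the environment above

3. Function module: base package module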
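The keepalived install state later in this article includes pkg.pkg-init and requires pkg: pkg-init, so the base package module needs a state with that ID. A minimal sketch follows, assuming the file is /srv/salt/prod/pkg/pkg-init.sls; the package list is an assumption covering what a typical source build needs.

```yaml
# /srv/salt/prod/pkg/pkg-init.sls (hypothetical content)
pkg-init:
  pkg.installed:
    - pkgs:              # assumed build dependencies for compiling from source
      - gcc
      - gcc-c++
      - glibc
      - make
      - autoconf
      - openssl
      - openssl-devel
```

Using pkgs installs the whole list in one transaction and keeps a single state ID that other states can reference with require.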

4. Function module: haproxy module

5. Business module: haproxy module

    [root@node1 cluster]# cd /srv/salt/prod/cluster/
    [root@node1 cluster]# cat haproxy-outside.sls
    include:
      - haproxy.install    # run install.sls from the haproxy directory
    haproxy-service:       # state ID
      file.managed:
        - name: /etc/haproxy/haproxy.cfg    # name of the config file once installed
        - source: salt://cluster/files/haproxy-outside.cfg    # source config file, written earlier
        - user: root
        - group: root
        - mode: 644
      service.running:     # the running function of the service module: starts the service
        - name: haproxy    # service name
        - enable: True     # start at boot
        - reload: True     # reload on change; without this, a config change triggers a restart
        - require:
          - cmd: haproxy-init    # depends on the cmd under haproxy-init: the init script step must succeed
        - watch:           # watch the state of a file
          - file: haproxy-service    # watch the file managed under the haproxy-service ID; a change triggers a reload

6. Edit top.sls

Test it with httpd

Function module: keepalived module

Before writing the states, do a test install from source on one host

http://www.keepalived.org/software/keepalived-1.2.19.tar.gz

    [root@node1 tools]# tar xf keepalived-1.2.19.tar.gz
    [root@node1 tools]# cd keepalived-1.2.19
    [root@node1 keepalived-1.2.19]# ./configure --prefix=/usr/local/keepalived --disable-fwmark
    [root@node1 keepalived-1.2.19]# make && make install
    keepalived-1.2.19/keepalived/etc/init.d/keepalived.init          # init script
    keepalived-1.2.19/keepalived/etc/keepalived/keepalived.conf      # configuration file

Set up the keepalived module path and related files

    [root@node1 ~]# mkdir /srv/salt/prod/keepalived
    [root@node1 ~]# mkdir /srv/salt/prod/keepalived/files
    [root@node1 keepalived]# cp ~/tools/keepalived-1.2.19.tar.gz /srv/salt/prod/keepalived/files/
    [root@node1 tools]# cp keepalived-1.2.19/keepalived/etc/init.d/keepalived.init /srv/salt/prod/keepalived/files/    # copy the init script
    [root@node1 tools]# cp keepalived-1.2.19/keepalived/etc/keepalived/keepalived.conf /srv/salt/prod/keepalived/files/    # copy the configuration file
    [root@node1 tools]# cp keepalived-1.2.19/keepalived/etc/init.d/keepalived.sysconfig /srv/salt/prod/keepalived/files/
    [root@node1 tools]# cd /srv/salt/prod/keepalived/files/
    [root@node1 files]# vim keepalived.init    # change the daemon path in the init script
    daemon /usr/local/keepalived/sbin/keepalived ${KEEPALIVED_OPTIONS}

1. keepalived function module

    [root@node1 keepalived]# cd /srv/salt/prod/keepalived/
    [root@node1 keepalived]# cat install.sls
    include:
      - pkg.pkg-init
    keepalived-install:
      file.managed:
        - name: /usr/local/src/keepalived-1.2.19.tar.gz
        - source: salt://keepalived/files/keepalived-1.2.19.tar.gz
        - user: root
        - group: root
        - mode: 755
      cmd.run:
        - name: cd /usr/local/src/ && tar xf keepalived-1.2.19.tar.gz && cd keepalived-1.2.19 && ./configure --prefix=/usr/local/keepalived --disable-fwmark && make && make install
        - unless: test -d /usr/local/keepalived
        - require:
          - pkg: pkg-init
          - file: keepalived-install
    keepalived-init:
      file.managed:
        - name: /etc/init.d/keepalived
        - source: salt://keepalived/files/keepalived.init
        - user: root
        - group: root
        - mode: 755
      cmd.run:
        - name: chkconfig --add keepalived
        - unless: chkconfig --list | grep keepalived
        - require:
          - file: keepalived-init
    /etc/sysconfig/keepalived:
      file.managed:
        - source: salt://keepalived/files/keepalived.sysconfig
        - user: root
        - group: root
        - mode: 644
    /etc/keepalived:
      file.directory:
        - user: root
        - group: root
        - mode: 755
    [root@node1 files]# salt '*' state.sls keepalived.install env=prod    # run it once by hand as a test

2. keepalived business module

    [root@node1 ~]# cd /srv/salt/prod/cluster/files/
    [root@node1 files]# cat haproxy-outside-keepalived.cfg    # keepalived configuration file; it uses jinja variables
    #configuration file for keepalived
    global_defs {
       notification_email {
         saltstack@example.com
       }
       notification_email_from keepalived@example.com
       smtp_server 127.0.0.1
       smtp_connect_timeout 30
       router_id {{ROUTEID}}
    }
    vrrp_instance haproxy_ha {
       state {{STATEID}}
       interface eth2
       virtual_router_id 36
       priority {{PRIORITYID}}
       advert_int 1
       authentication {
         auth_type PASS
         auth_pass 1111
       }
       virtual_ipaddress {
         192.168.10.130
       }
    }

    [root@node1 ~]# cd /srv/salt/prod/cluster/
    [root@node1 cluster]# cat haproxy-outside-keepalived.sls
    include:
      - keepalived.install
    keepalived-service:
      file.managed:
        - name: /etc/keepalived/keepalived.conf
        - source: salt://cluster/files/haproxy-outside-keepalived.cfg
        - user: root
        - group: root
        - mode: 644
        - template: jinja
        {% if grains['fqdn'] == 'node1' %}
        - ROUTEID: haproxy_ha
        - STATEID: MASTER
        - PRIORITYID: 150
        {% elif grains['fqdn'] == 'node2' %}
        - ROUTEID: haproxy_ha
        - STATEID: BACKUP
        - PRIORITYID: 100
        {% endif %}
      service.running:
        - name: keepalived
        - enable: True
        - watch:
          - file: keepalived-service
    [root@node1 cluster]# salt '*' state.sls cluster.haproxy-outside-keepalived env=prod    # test it

Assign the keepalived module to specific servers

    [root@node1 salt]# cat /srv/salt/base/top.sls
    base:
      '*':
        - init.env_init
    prod:
      'node1':
        - cluster.haproxy-outside
        - cluster.haproxy-outside-keepalived
      'node2':
        - cluster.haproxy-outside
        - cluster.haproxy-outside-keepalived
    [root@node1 salt]# salt '*' state.highstate    # if this run succeeds, keepalived + haproxy is in place

Problem encountered: the keepalived virtual IP (VIP) was never brought up

Checking the log with cat /var/log/messages showed the following line

Aug 11 15:10:12 node1 Keepalived_vrrp[29442]: VRRP_Instance(haproxy_ha{) sending 0 priority

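The instance name is logged as "haproxy_ha{", which means the opening brace was parsed as part of the name because there was no space before it. Adding a space after haproxy_ha fixed the problem: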

vrrp_instance haproxy_ha {

System initialization module: zabbix-agent

Set the pillar path in the master configuration file

    [root@node1 init]# vim /etc/salt/master
    pillar_roots:
      base:
        - /srv/pillar/base
    [root@node1 init]# /etc/init.d/salt-master restart

Create top.sls and zabbix.sls in the pillar directory

    [root@node1 init]# mkdir /srv/pillar/base
    [root@node1 pillar]# cd base/
    [root@node1 base]# cat top.sls
    base:
      '*':
        - zabbix
    [root@node1 base]# cat zabbix.sls
    zabbix-agent:
      Zabbix_Server: 192.168.10.129

    [root@node1 init]# cd /srv/salt/base/init/
    [root@node1 init]# cat zabbix_agent.sls
    zabbix-agent-install:
      pkg.installed:
        - name: zabbix-agent
      file.managed:
        - name: /etc/zabbix/zabbix_agentd.conf
        - source: salt://init/files/zabbix_agentd.conf
        - template: jinja
        - defaults:
            Server: {{ pillar['zabbix-agent']['Zabbix_Server'] }}    # assign the Zabbix_Server value under the zabbix-agent key in pillar to the variable Server
        - require:
          - pkg: zabbix-agent-install
      service.running:
        - name: zabbix-agent
        - enable: True
        - watch:
          - pkg: zabbix-agent-install
          - file: zabbix-agent-install

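Edit the configuration file so that jinja substitutes the value of the Server variable into the Server setting, which specifies the Zabbix server address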

    cp /etc/zabbix/zabbix_agentd.conf /srv/salt/base/init/files/
    [root@node1 base]# vim /srv/salt/base/init/files/zabbix_agentd.conf
    Server={{ Server }}

Include zabbix_agent.sls in env_init.sls

    [root@node1 init]# cat env_init.sls
    include:
      - init.dns
      - init.history
      - init.audit
      - init.sysctl
      - init.zabbix_agent
    [root@node1 init]# salt '*' state.highstate