Introduction

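This article traces a Linux system call through the kernel with a debugger to understand how it is carried out. My student ID ends in 05. Entry 5 in arch/x86/entry/syscalls/syscall_64.tbl is the fstat system call, so fstat serves as the running example.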

I. Basic System Call Flow

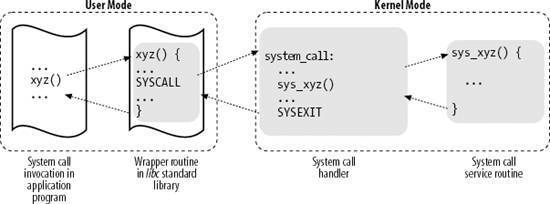

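As the figure above shows, the basic system call flow can be divided roughly into four steps:

1. Application code calls xyz(), a library function that wraps the system call.

2. The library function xyz() prepares the arguments to pass to the kernel and traps into the kernel. Traditionally this uses the int $0x80 software interrupt on 32-bit x86; x86-64 uses the dedicated syscall instruction.

3. The CPU switches to kernel mode and runs the kernel's system call handler (system_call; on x86-64, entry_SYSCALL_64).

4. The handler calls the system call service routine sys_xyz(), which does the actual work of the system call.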
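Steps 1 and 2 can be observed from user space by bypassing the named wrapper. The sketch below assumes glibc on x86-64 Linux. It fetches a file's status once through fstat() and once through glibc's generic syscall() function with SYS_fstat. That function moves the number and arguments into registers, traps into the kernel, and translates errors to errno.

fstat_both_ways is a hypothetical helper. The exact system call that the fstat() wrapper issues can vary between glibc versions, but both paths should describe the same file.

```c
#define _GNU_SOURCE          /* expose syscall() in <unistd.h> */
#include <stdio.h>           /* tmpfile, fileno in the usage example */
#include <sys/stat.h>        /* struct stat, fstat */
#include <sys/syscall.h>     /* SYS_fstat */
#include <unistd.h>          /* syscall */

/* Hypothetical helper: read the status of fd through the libc wrapper
 * and through the generic syscall() entry point.
 * Returns 0 if both succeed, -1 otherwise. */
int fstat_both_ways(int fd, struct stat *via_wrapper, struct stat *via_syscall)
{
    if (fstat(fd, via_wrapper) != 0)
        return -1;
    /* On x86-64, glibc's struct stat has the same layout as the kernel's. */
    if (syscall(SYS_fstat, fd, via_syscall) != 0)
        return -1;
    return 0;
}
```

Called on a temporary file holding five bytes, both structures should report st_size == 5 and the same inode number.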

II. Triggering the fstat System Call

With the basic flow in mind, the next step is to write a user program that triggers the fstat system call. The source is as follows:

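```c
#include <stdio.h>
#include <stdlib.h>
#include <asm/unistd.h>   /* __NR_fstat, used by the inline-assembly variant below */
#include <sys/types.h>
#include <sys/stat.h>     /* struct stat, fstat */
#include <fcntl.h>        /* open, O_RDWR */
#include <unistd.h>       /* close */

int main(void)
{
    struct stat st;
    int fd = open("file.txt", O_RDWR);
    if (fd < 0) {
        printf("open file error! code:%d\n", fd);
        return -1;
    }

    /* Alternative to the fstat() call below: issue the system call
     * directly with the syscall instruction (x86-64 only).
     *
     * long raw;
     * asm volatile("syscall"
     *              : "=a"(raw)
     *              : "0"((long)__NR_fstat),   // rax = 5 (fstat)
     *                "D"((long)fd),           // rdi = fd
     *                "S"(&st)                 // rsi = &st
     *              : "rcx", "r11", "memory");
     */

    /* Store the result separately so that fd still refers to the
     * opened file when close() runs. */
    int ret = fstat(fd, &st);
    if (ret < 0) {
        printf("get file state error! code:%d\n", ret);
        close(fd);
        return -1;
    }
    printf("get file state success!%d\n", ret);
    close(fd);
    return 0;
}
```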

Next, compile the program statically and save its disassembly to openfile.S:

```sh
gcc -o openfile openfile.c -static
objdump -S openfile > openfile.S
```

Open openfile.S and locate main. Following the call chain from main through __fstat to __fxstat leads to the syscall instruction:

```
0000000000401ce5 <main>:
  401ce5:  f3 0f 1e fa             endbr64
  401ce9:  55                      push   %rbp
  401cea:  48 89 e5                mov    %rsp,%rbp
  401ced:  48 81 ec b0 00 00 00    sub    $0xb0,%rsp
  401cf4:  64 48 8b 04 25 28 00    mov    %fs:0x28,%rax
  401cfb:  00 00
  401cfd:  48 89 45 f8             mov    %rax,-0x8(%rbp)
  401d01:  31 c0                   xor    %eax,%eax
  401d03:  be 02 00 00 00          mov    $0x2,%esi
  401d08:  48 8d 3d f9 22 09 00    lea    0x922f9(%rip),%rdi        # 494008 <_IO_stdin_used+0x8>
  401d0f:  b8 00 00 00 00          mov    $0x0,%eax
  401d14:  e8 97 ed 04 00          callq  450ab0 <__libc_open>
  401d19:  89 85 5c ff ff ff       mov    %eax,-0xa4(%rbp)
  401d1f:  83 bd 5c ff ff ff 00    cmpl   $0x0,-0xa4(%rbp)
  401d26:  79 20                   jns    401d48 <main+0x63>
  401d28:  8b 85 5c ff ff ff       mov    -0xa4(%rbp),%eax
  401d2e:  89 c6                   mov    %eax,%esi
  401d30:  48 8d 3d da 22 09 00    lea    0x922da(%rip),%rdi        # 494011 <_IO_stdin_used+0x11>
  401d37:  b8 00 00 00 00          mov    $0x0,%eax
  401d3c:  e8 6f f0 00 00          callq  410db0 <_IO_printf>
  401d41:  b8 ff ff ff ff          mov    $0xffffffff,%eax
  401d46:  eb 76                   jmp    401dbe <main+0xd9>
  401d48:  48 8d 95 60 ff ff ff    lea    -0xa0(%rbp),%rdx
  401d4f:  8b 85 5c ff ff ff       mov    -0xa4(%rbp),%eax
  401d55:  48 89 d6                mov    %rdx,%rsi
  401d58:  89 c7                   mov    %eax,%edi
  401d5a:  e8 71 ec 04 00          callq  4509d0 <__fstat>
  401d5f:  89 85 5c ff ff ff       mov    %eax,-0xa4(%rbp)
  401d65:  83 bd 5c ff ff ff 00    cmpl   $0x0,-0xa4(%rbp)
  401d6c:  79 20                   jns    401d8e <main+0xa9>
  401d6e:  8b 85 5c ff ff ff       mov    -0xa4(%rbp),%eax
  401d74:  89 c6                   mov    %eax,%esi
  401d76:  48 8d 3d b3 22 09 00    lea    0x922b3(%rip),%rdi        # 494030 <_IO_stdin_used+0x30>
  401d7d:  b8 00 00 00 00          mov    $0x0,%eax
  401d82:  e8 29 f0 00 00          callq  410db0 <_IO_printf>
  401d87:  b8 ff ff ff ff          mov    $0xffffffff,%eax
  401d8c:  eb 30                   jmp    401dbe <main+0xd9>
  401d8e:  8b 85 5c ff ff ff       mov    -0xa4(%rbp),%eax
  401d94:  89 c6                   mov    %eax,%esi
  401d96:  48 8d 3d b2 22 09 00    lea    0x922b2(%rip),%rdi        # 49404f <_IO_stdin_used+0x4f>
  401d9d:  b8 00 00 00 00          mov    $0x0,%eax
  401da2:  e8 09 f0 00 00          callq  410db0 <_IO_printf>
  401da7:  8b 85 5c ff ff ff       mov    -0xa4(%rbp),%eax
  401dad:  89 c7                   mov    %eax,%edi
  401daf:  b8 00 00 00 00          mov    $0x0,%eax
  401db4:  e8 97 ef 04 00          callq  450d50 <__close>
  401db9:  b8 00 00 00 00          mov    $0x0,%eax
  401dbe:  48 8b 4d f8             mov    -0x8(%rbp),%rcx
  401dc2:  64 48 33 0c 25 28 00    xor    %fs:0x28,%rcx
  401dc9:  00 00
  401dcb:  74 05                   je     401dd2 <main+0xed>
  401dcd:  e8 ce 27 05 00          callq  4545a0 <__stack_chk_fail>
  401dd2:  c9                      leaveq
  401dd3:  c3                      retq
  401dd4:  66 2e 0f 1f 84 00 00    nopw   %cs:0x0(%rax,%rax,1)
  401ddb:  00 00 00
  401dde:  66 90                   xchg   %ax,%ax

00000000004509d0 <__fstat>:
  4509d0:  f3 0f 1e fa             endbr64
  4509d4:  48 89 f2                mov    %rsi,%rdx
  4509d7:  89 fe                   mov    %edi,%esi
  4509d9:  bf 01 00 00 00          mov    $0x1,%edi
  4509de:  e9 6d 00 00 00          jmpq   450a50 <__fxstat>
  4509e3:  66 2e 0f 1f 84 00 00    nopw   %cs:0x0(%rax,%rax,1)
  4509ea:  00 00 00
  4509ed:  0f 1f 00                nopl   (%rax)

0000000000450a50 <__fxstat>:
  450a50:  f3 0f 1e fa             endbr64
  450a54:  41 89 f8                mov    %edi,%r8d
  450a57:  89 f7                   mov    %esi,%edi
  450a59:  48 89 d6                mov    %rdx,%rsi
  450a5c:  41 83 f8 01             cmp    $0x1,%r8d
  450a60:  77 2e                   ja     450a90 <__fxstat+0x40>
  450a62:  b8 05 00 00 00          mov    $0x5,%eax
  450a67:  0f 05                   syscall
  450a69:  48 3d 00 f0 ff ff       cmp    $0xfffffffffffff000,%rax
  450a6f:  77 07                   ja     450a78 <__fxstat+0x28>
  450a71:  c3                      retq
  450a72:  66 0f 1f 44 00 00       nopw   0x0(%rax,%rax,1)
  450a78:  48 c7 c2 c0 ff ff ff    mov    $0xffffffffffffffc0,%rdx
  450a7f:  f7 d8                   neg    %eax
  450a81:  64 89 02                mov    %eax,%fs:(%rdx)
  450a84:  b8 ff ff ff ff          mov    $0xffffffff,%eax
  450a89:  c3                      retq
  450a8a:  66 0f 1f 44 00 00       nopw   0x0(%rax,%rax,1)
  450a90:  48 c7 c0 c0 ff ff ff    mov    $0xffffffffffffffc0,%rax
  450a97:  64 c7 00 16 00 00 00    movl   $0x16,%fs:(%rax)
  450a9e:  b8 ff ff ff ff          mov    $0xffffffff,%eax
  450aa3:  c3                      retq
  450aa4:  66 2e 0f 1f 84 00 00    nopw   %cs:0x0(%rax,%rax,1)
  450aab:  00 00 00
  450aae:  66 90                   xchg   %ax,%ax
```

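Stepping through the program in a debugger shows that the call to fstat() jumps to __fxstat() in glibc's ../sysdeps/unix/sysv/linux/wordsize-64/fxstat.c. In the disassembly, __fstat passes version 1 in %edi and jumps to __fxstat. __fxstat() then uses the INLINE_SYSCALL() macro. The macro loads system call number 5 into %eax and executes the syscall instruction, which enters the kernel: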

```c
#include <errno.h>
#include <stddef.h>
#include <sys/stat.h>

#include <sysdep.h>
#include <sys/syscall.h>

/* Get information about the file FD in BUF.  */
int
__fxstat (int vers, int fd, struct stat *buf)
{
  if (vers == _STAT_VER_KERNEL || vers == _STAT_VER_LINUX)
    return INLINE_SYSCALL (fstat, 2, fd, buf);

  __set_errno (EINVAL);
  return -1;
}

hidden_def (__fxstat)
weak_alias (__fxstat, _fxstat);
#undef __fxstat64
strong_alias (__fxstat, __fxstat64);
hidden_ver (__fxstat, __fxstat64)
```
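To make the libc side concrete, here is a minimal sketch of what INLINE_SYSCALL(fstat, 2, fd, buf) amounts to on x86-64, written as GCC inline assembly. raw_fstat, decode_syscall_result and my_fstat are hypothetical names, not glibc functions.

The sketch makes two assumptions:

- It follows the x86-64 syscall ABI: the number goes in rax, the arguments in rdi and rsi, and rcx and r11 are clobbered.
- glibc's struct stat matches the kernel layout, as it does on x86-64.

The decoding step mirrors the tail of __fxstat in the disassembly above, where a raw result from -4095 to -1 becomes errno plus a return value of -1.

```c
#include <errno.h>        /* errno, EBADF */
#include <stdio.h>        /* tmpfile, fileno in the usage example */
#include <sys/stat.h>     /* struct stat */
#include <sys/syscall.h>  /* SYS_fstat */

#ifndef __x86_64__
#error "this sketch assumes the x86-64 syscall ABI"
#endif

/* Issue fstat with the syscall instruction and return the raw kernel
 * result: >= 0 on success, -errno on failure. */
long raw_fstat(int fd, struct stat *buf)
{
    long ret;
    __asm__ volatile("syscall"
                     : "=a"(ret)                 /* result comes back in rax */
                     : "0"((long)SYS_fstat),     /* rax: system call number (5) */
                       "D"((long)fd),            /* rdi: 1st argument */
                       "S"(buf)                  /* rsi: 2nd argument */
                     : "rcx", "r11", "memory");  /* SYSCALL overwrites rcx/r11; kernel writes *buf */
    return ret;
}

/* Convert a raw result to the libc convention, like __fxstat does:
 * values above 0xfffffffffffff000 (i.e. -4095..-1) are negated error codes. */
long decode_syscall_result(unsigned long raw)
{
    if (raw > -4096UL) {           /* cmp $0xfffffffffffff000,%rax ; ja */
        errno = (int)-(long)raw;   /* neg %eax, then store into thread-local errno */
        return -1;
    }
    return (long)raw;              /* success: pass the value through */
}

/* Hypothetical fstat() rebuilt from the two helpers above. */
int my_fstat(int fd, struct stat *buf)
{
    return (int)decode_syscall_result((unsigned long)raw_fstat(fd, buf));
}
```

For example, my_fstat(-1, &st) should return -1 with errno set to EBADF, while raw_fstat(-1, &st) returns -EBADF directly.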

III. System Call Initialization

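At boot, the kernel calls syscall_init() in arch/x86/kernel/cpu/common.c to initialize the system call MSRs.

- **MSR_STAR.** The wrmsr(MSR_STAR, ...) call sets bits 32-47 to the kernel code segment selector (__KERNEL_CS). Bits 48-63 get __USER32_CS, the base selector from which SYSRET derives the user-mode CS and SS.
- **MSR_LSTAR.** MSR_LSTAR (IA32_LSTAR) is set to the start address of entry_SYSCALL_64. The traditional 32-bit int $0x80 path finds its handler through the interrupt descriptor table. On x86-64, the CPU reads the system call entry point directly from this MSR. When user space executes syscall, the CPU therefore jumps straight to entry_SYSCALL_64, which is defined in arch/x86/entry/entry_64.S.
- **MSR_SYSCALL_MASK.** This MSR lists the RFLAGS bits that syscall clears on entry. Because IF is among them, interrupts are already disabled when entry_SYSCALL_64 starts running.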

```c
void syscall_init(void)
{
    wrmsr(MSR_STAR, 0, (__USER32_CS << 16) | __KERNEL_CS);
    wrmsrl(MSR_LSTAR, (unsigned long)entry_SYSCALL_64);

#ifdef CONFIG_IA32_EMULATION
    wrmsrl(MSR_CSTAR, (unsigned long)entry_SYSCALL_compat);
    /*
     * This only works on Intel CPUs.
     * On AMD CPUs these MSRs are 32-bit, CPU truncates MSR_IA32_SYSENTER_EIP.
     * This does not cause SYSENTER to jump to the wrong location, because
     * AMD doesn't allow SYSENTER in long mode (either 32- or 64-bit).
     */
    wrmsrl_safe(MSR_IA32_SYSENTER_CS, (u64)__KERNEL_CS);
    wrmsrl_safe(MSR_IA32_SYSENTER_ESP, 0ULL);
    wrmsrl_safe(MSR_IA32_SYSENTER_EIP, (u64)entry_SYSENTER_compat);
#else
    wrmsrl(MSR_CSTAR, (unsigned long)ignore_sysret);
    wrmsrl_safe(MSR_IA32_SYSENTER_CS, (u64)GDT_ENTRY_INVALID_SEG);
    wrmsrl_safe(MSR_IA32_SYSENTER_ESP, 0ULL);
    wrmsrl_safe(MSR_IA32_SYSENTER_EIP, 0ULL);
#endif

    /* Flags to clear on syscall */
    wrmsrl(MSR_SYSCALL_MASK,
           X86_EFLAGS_TF|X86_EFLAGS_DF|X86_EFLAGS_IF|
           X86_EFLAGS_IOPL|X86_EFLAGS_AC|X86_EFLAGS_NT);
}
```
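The selector arithmetic behind MSR_STAR can be checked by hand. The sketch below is a hypothetical model based on the SYSCALL/SYSRET pseudocode in the Intel SDM. It assumes the usual x86-64 Linux selector values __KERNEL_CS = 0x10 and __USER32_CS = 0x23. The FL_* constants are local names for the RFLAGS bits in MSR_SYSCALL_MASK.

Under these assumptions:

- SYSCALL loads CS/SS = 0x10/0x18 from bits 32-47.
- A 64-bit SYSRET derives CS/SS = 0x33/0x2b (__USER_CS/__USER_DS) from bits 48-63.
- A user RFLAGS value of 0x246 (IF set) becomes 0x46 inside the kernel.

```c
#include <stdint.h>

/* Hypothetical model of SYSCALL/SYSRET selector loading. */
struct star_selectors {
    uint16_t syscall_cs, syscall_ss;  /* loaded by SYSCALL */
    uint16_t sysret_cs, sysret_ss;    /* loaded by 64-bit SYSRET */
};

/* Same value as wrmsr(MSR_STAR, 0, (__USER32_CS << 16) | __KERNEL_CS):
 * low 32 bits are 0, high 32 bits hold the two selectors. */
uint64_t make_star(uint16_t kernel_cs, uint16_t user32_cs)
{
    uint64_t hi = ((uint64_t)user32_cs << 16) | kernel_cs;
    return hi << 32;
}

struct star_selectors decode_star(uint64_t star)
{
    uint16_t k = (uint16_t)(star >> 32);  /* bits 32-47 */
    uint16_t u = (uint16_t)(star >> 48);  /* bits 48-63 */
    struct star_selectors s;
    s.syscall_cs = k & 0xfffc;                /* RPL forced to 0 */
    s.syscall_ss = (uint16_t)(k + 8);
    s.sysret_cs  = (uint16_t)((u + 16) | 3);  /* RPL forced to 3 */
    s.sysret_ss  = (uint16_t)((u + 8) | 3);
    return s;
}

/* RFLAGS bits named in the kernel's MSR_SYSCALL_MASK. */
#define FL_TF   0x00000100u
#define FL_IF   0x00000200u
#define FL_DF   0x00000400u
#define FL_IOPL 0x00003000u
#define FL_NT   0x00004000u
#define FL_AC   0x00040000u

/* SYSCALL copies RFLAGS into r11, then clears the masked bits. */
uint64_t rflags_after_syscall(uint64_t user_rflags)
{
    const uint64_t fmask = FL_TF | FL_DF | FL_IF | FL_IOPL | FL_AC | FL_NT;
    return user_rflags & ~fmask;
}
```

This is why the pushes in entry_SYSCALL_64 can hard-code $__USER_CS and $__USER_DS: a 64-bit SYSRET reloads exactly those selectors.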

IV. entry_SYSCALL_64

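entry_SYSCALL_64 is the kernel handler for the 64-bit syscall instruction. Its source is shown below; the // comments are explanatory annotations: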

```asm
ENTRY(entry_SYSCALL_64)
        /*
         * Interrupts are off on entry.
         * We do not frame this tiny irq-off block with TRACE_IRQS_OFF/ON,
         * it is too small to ever cause noticeable irq latency.
         */
        SWAPGS_UNSAFE_STACK
        // KAISER: switch to the kernel page tables on kernel entry
        SWITCH_KERNEL_CR3_NO_STACK
        /*
         * A hypervisor implementation might want to use a label
         * after the swapgs, so that it can do the swapgs
         * for the guest and jump here on syscall.
         */
GLOBAL(entry_SYSCALL_64_after_swapgs)
        // save the user stack pointer in the per-CPU variable rsp_scratch
        movq    %rsp, PER_CPU_VAR(rsp_scratch)
        // load the kernel stack pointer
        movq    PER_CPU_VAR(cpu_current_top_of_stack), %rsp

        TRACE_IRQS_OFF

        /* Construct struct pt_regs on stack */
        pushq   $__USER_DS                      /* pt_regs->ss */
        pushq   PER_CPU_VAR(rsp_scratch)        /* pt_regs->sp */
        pushq   %r11                            /* pt_regs->flags */
        pushq   $__USER_CS                      /* pt_regs->cs */
        pushq   %rcx                            /* pt_regs->ip */
        pushq   %rax                            /* pt_regs->orig_ax */
        pushq   %rdi                            /* pt_regs->di */
        pushq   %rsi                            /* pt_regs->si */
        pushq   %rdx                            /* pt_regs->dx */
        pushq   %rcx                            /* pt_regs->cx */
        pushq   $-ENOSYS                        /* pt_regs->ax */
        pushq   %r8                             /* pt_regs->r8 */
        pushq   %r9                             /* pt_regs->r9 */
        pushq   %r10                            /* pt_regs->r10 */
        pushq   %r11                            /* pt_regs->r11 */
        // reserve space for r12-r15, rbp and rbx
        sub     $(6*8), %rsp                    /* pt_regs->bp, bx, r12-15 not saved */

        /*
         * If we need to do entry work or if we guess we'll need to do
         * exit work, go straight to the slow path.
         */
        movq    PER_CPU_VAR(current_task), %r11
        testl   $_TIF_WORK_SYSCALL_ENTRY|_TIF_ALLWORK_MASK, TASK_TI_flags(%r11)
        jnz     entry_SYSCALL64_slow_path

entry_SYSCALL_64_fastpath:
        /*
         * Easy case: enable interrupts and issue the syscall.  If the syscall
         * needs pt_regs, we'll call a stub that disables interrupts again
         * and jumps to the slow path.
         */
        TRACE_IRQS_ON
        ENABLE_INTERRUPTS(CLBR_NONE)
#if __SYSCALL_MASK == ~0
        // make sure the syscall number does not exceed the maximum;
        // if it does, jump forward to label 1 and return
        cmpq    $__NR_syscall_max, %rax
#else
        andl    $__SYSCALL_MASK, %eax
        cmpl    $__NR_syscall_max, %eax
#endif
        ja      1f                              /* return -ENOSYS (already in pt_regs->ax) */
        // SYSCALL overwrites rcx with the return address, so user space
        // passes the 4th argument in r10; the C calling convention expects
        // it in rcx, so copy r10 into rcx
        movq    %r10, %rcx

        /*
         * This call instruction is handled specially in stub_ptregs_64.
         * It might end up jumping to the slow path.  If it jumps, RAX
         * and all argument registers are clobbered.
         */
        // call the handler for this syscall number in sys_call_table
        call    *sys_call_table(, %rax, 8)
.Lentry_SYSCALL_64_after_fastpath_call:

        // store the return value in pt_regs->ax; it is loaded back into rax on return
        movq    %rax, RAX(%rsp)
1:

        /*
         * If we get here, then we know that pt_regs is clean for SYSRET64.
         * If we see that no exit work is required (which we are required
         * to check with IRQs off), then we can go straight to SYSRET64.
         */
        DISABLE_INTERRUPTS(CLBR_NONE)
        TRACE_IRQS_OFF
        movq    PER_CPU_VAR(current_task), %r11
        testl   $_TIF_ALLWORK_MASK, TASK_TI_flags(%r11)
        jnz     1f

        LOCKDEP_SYS_EXIT
        TRACE_IRQS_ON                           /* user mode is traced as IRQs on */
        movq    RIP(%rsp), %rcx
        movq    EFLAGS(%rsp), %r11
        RESTORE_C_REGS_EXCEPT_RCX_R11
        /*
         * This opens a window where we have a user CR3, but are
         * running in the kernel.  This makes using the CS
         * register useless for telling whether or not we need to
         * switch CR3 in NMIs.  Normal interrupts are OK because
         * they are off here.
         */
        SWITCH_USER_CR3
        movq    RSP(%rsp), %rsp
        USERGS_SYSRET64

1:
        /*
         * The fast path looked good when we started, but something changed
         * along the way and we need to switch to the slow path.  Calling
         * raise(3) will trigger this, for example.  IRQs are off.
         */
        TRACE_IRQS_ON
        ENABLE_INTERRUPTS(CLBR_NONE)
        SAVE_EXTRA_REGS
        movq    %rsp, %rdi
        call    syscall_return_slowpath         /* returns with IRQs disabled */
        jmp     return_from_SYSCALL_64

entry_SYSCALL64_slow_path:
        /* IRQs are off. */
        SAVE_EXTRA_REGS
        movq    %rsp, %rdi
        call    do_syscall_64                   /* returns with IRQs disabled */

return_from_SYSCALL_64:
        RESTORE_EXTRA_REGS
        TRACE_IRQS_IRETQ                        /* we're about to change IF */

        /*
         * Try to use SYSRET instead of IRET if we're returning to
         * a completely clean 64-bit userspace context.
         */
        movq    RCX(%rsp), %rcx
        movq    RIP(%rsp), %r11
        cmpq    %rcx, %r11                      /* RCX == RIP */
        jne     opportunistic_sysret_failed

        /*
         * On Intel CPUs, SYSRET with non-canonical RCX/RIP will #GP
         * in kernel space.  This essentially lets the user take over
         * the kernel, since userspace controls RSP.
         *
         * If width of "canonical tail" ever becomes variable, this will need
         * to be updated to remain correct on both old and new CPUs.
         */
        .ifne __VIRTUAL_MASK_SHIFT - 47
        .error "virtual address width changed -- SYSRET checks need update"
        .endif

        /* Change top 16 bits to be the sign-extension of 47th bit */
        shl     $(64 - (__VIRTUAL_MASK_SHIFT+1)), %rcx
        sar     $(64 - (__VIRTUAL_MASK_SHIFT+1)), %rcx

        /* If this changed %rcx, it was not canonical */
        cmpq    %rcx, %r11
        jne     opportunistic_sysret_failed

        cmpq    $__USER_CS, CS(%rsp)            /* CS must match SYSRET */
        jne     opportunistic_sysret_failed

        movq    R11(%rsp), %r11
        cmpq    %r11, EFLAGS(%rsp)              /* R11 == RFLAGS */
        jne     opportunistic_sysret_failed

        /*
         * SYSCALL clears RF when it saves RFLAGS in R11 and SYSRET cannot
         * restore RF properly. If the slowpath sets it for whatever reason, we
         * need to restore it correctly.
         *
         * SYSRET can restore TF, but unlike IRET, restoring TF results in a
         * trap from userspace immediately after SYSRET.  This would cause an
         * infinite loop whenever #DB happens with register state that satisfies
         * the opportunistic SYSRET conditions.  For example, single-stepping
         * this user code:
         *
         *           movq $stuck_here, %rcx
         *           pushfq
         *           popq %r11
         *   stuck_here:
         *
         * would never get past 'stuck_here'.
         */
        testq   $(X86_EFLAGS_RF|X86_EFLAGS_TF), %r11
        jnz     opportunistic_sysret_failed

        /* nothing to check for RSP */

        cmpq    $__USER_DS, SS(%rsp)            /* SS must match SYSRET */
        jne     opportunistic_sysret_failed

        /*
         * We win! This label is here just for ease of understanding
         * perf profiles. Nothing jumps here.
         */
syscall_return_via_sysret:
        /* rcx and r11 are already restored (see code above) */
        RESTORE_C_REGS_EXCEPT_RCX_R11
        /*
         * This opens a window where we have a user CR3, but are
         * running in the kernel.  This makes using the CS
         * register useless for telling whether or not we need to
         * switch CR3 in NMIs.  Normal interrupts are OK because
         * they are off here.
         */
        // KAISER: switch back to the user page tables before returning to user mode
        SWITCH_USER_CR3
        /* restore rsp to the user stack pointer saved in pt_regs */
        movq    RSP(%rsp), %rsp
        USERGS_SYSRET64

        // the fast SYSRET return is not possible; fall back to IRET
opportunistic_sysret_failed:
        /*
         * This opens a window where we have a user CR3, but are
         * running in the kernel.  This makes using the CS
         * register useless for telling whether or not we need to
         * switch CR3 in NMIs.  Normal interrupts are OK because
         * they are off here.
         */
        SWITCH_USER_CR3
        SWAPGS
        jmp     restore_c_regs_and_iret
END(entry_SYSCALL_64)
```
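The push sequence in entry_SYSCALL_64 builds a struct pt_regs on the kernel stack. The stack grows downward, so the last value pushed sits at the lowest address and the structure lists its fields in reverse push order.

The following C sketch is a hypothetical mirror; pt_regs_model is not a kernel type. It assumes LP64, where unsigned long is 8 bytes. It shows why an offset such as RAX(%rsp) lands on the ax slot, 80 bytes above the stack pointer left by `sub $(6*8), %rsp`.

```c
#include <stddef.h>  /* offsetof, used to check the layout */

/* Hypothetical mirror of the x86-64 pt_regs frame built by
 * entry_SYSCALL_64, lowest address first (reverse push order). */
struct pt_regs_model {
    unsigned long r15, r14, r13, r12, bp, bx;  /* sub $(6*8),%rsp: reserved, not saved on the fast path */
    unsigned long r11;      /* pushq %r11 (last push) */
    unsigned long r10;      /* pushq %r10 */
    unsigned long r9;       /* pushq %r9 */
    unsigned long r8;       /* pushq %r8 */
    unsigned long ax;       /* pushq $-ENOSYS: default return value */
    unsigned long cx;       /* pushq %rcx */
    unsigned long dx;       /* pushq %rdx */
    unsigned long si;       /* pushq %rsi */
    unsigned long di;       /* pushq %rdi */
    unsigned long orig_ax;  /* pushq %rax: the system call number */
    unsigned long ip;       /* pushq %rcx: user RIP saved by SYSCALL */
    unsigned long cs;       /* pushq $__USER_CS */
    unsigned long flags;    /* pushq %r11: user RFLAGS saved by SYSCALL */
    unsigned long sp;       /* pushq rsp_scratch: user stack pointer */
    unsigned long ss;       /* pushq $__USER_DS (first push) */
};
```

Keeping the original rax in orig_ax separately from ax lets the kernel overwrite ax with the return value while still remembering which system call was made.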

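In summary, entry_SYSCALL_64 works in four stages:

1. It saves the user stack pointer (rsp) in the per-CPU variable rsp_scratch. It then loads the kernel stack pointer from the per-CPU variable cpu_current_top_of_stack into rsp.
2. It pushes the saved user registers (rax, rcx, r11, rdi, rsi, rdx, r10, r8, r9), together with the user ss, rsp and cs, onto the kernel stack to build a struct pt_regs.
3. It uses the system call number in rax to look up the handler in sys_call_table and calls it. For fstat, rax is 5, and syscall_64.tbl maps entry 5 to sys_newfstat.
4. On the fast path, it returns to user mode through USERGS_SYSRET64, which executes swapgs followed by sysretq. When SYSRET cannot be used safely, it falls back to IRET via restore_c_regs_and_iret.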
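The dispatch step can be modelled in plain C. This is a hypothetical user-space sketch, not kernel code, and its table has only six entries. The model names stand in for kernel objects as follows:

- regs_model: the saved registers
- sys_call_table_model: sys_call_table
- newfstat_model: sys_newfstat
- NR_SYSCALL_MAX_MODEL: __NR_syscall_max

The sketch reproduces three details of the fast path: the -ENOSYS default in pt_regs->ax, the unsigned bounds check, and the fourth argument taken from r10.

```c
#include <errno.h>  /* ENOSYS, EBADF */

/* Hypothetical model of the fast-path dispatch in entry_SYSCALL_64. */
typedef long (*sys_call_ptr_t)(unsigned long, unsigned long, unsigned long,
                               unsigned long, unsigned long, unsigned long);

struct regs_model {
    unsigned long ax, di, si, dx, r10, r8, r9;  /* register values at SYSCALL time */
};

#define NR_SYSCALL_MAX_MODEL 5  /* stand-in for __NR_syscall_max */

static long ni_syscall_model(unsigned long a0, unsigned long a1, unsigned long a2,
                             unsigned long a3, unsigned long a4, unsigned long a5)
{
    (void)a0; (void)a1; (void)a2; (void)a3; (void)a4; (void)a5;
    return -ENOSYS;  /* unimplemented entry */
}

/* Pretends that fd 3 is the only open descriptor. */
static long newfstat_model(unsigned long fd, unsigned long statbuf, unsigned long a2,
                           unsigned long a3, unsigned long a4, unsigned long a5)
{
    (void)statbuf; (void)a2; (void)a3; (void)a4; (void)a5;
    return fd == 3 ? 0 : -EBADF;
}

static const sys_call_ptr_t sys_call_table_model[NR_SYSCALL_MAX_MODEL + 1] = {
    ni_syscall_model, ni_syscall_model, ni_syscall_model,
    ni_syscall_model, ni_syscall_model, newfstat_model,  /* entry 5: fstat */
};

long dispatch_model(const struct regs_model *r)
{
    long ax = -ENOSYS;                  /* pushq $-ENOSYS: default pt_regs->ax */
    if (r->ax <= NR_SYSCALL_MAX_MODEL)  /* cmpq $__NR_syscall_max,%rax ; ja 1f (unsigned) */
        ax = sys_call_table_model[r->ax](r->di, r->si, r->dx,
                                         r->r10,  /* movq %r10,%rcx: 4th argument */
                                         r->r8, r->r9);
    return ax;                          /* movq %rax, RAX(%rsp) */
}
```

The comparison is unsigned, like cmpq/ja in the assembly. A negative number in rax is therefore treated as a huge value and also yields -ENOSYS.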
