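How it works: the setup relies on Redis as a shared store for the request queue and the duplicate-filter fingerprints, so every spider instance pulls its work from the same place. The general benefits of Redis are easy to look up elsewhere.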

1. Scrapy distributed crawler configuration:

settings.py

    BOT_NAME = 'first'

    SPIDER_MODULES = ['first.spiders']
    NEWSPIDER_MODULE = 'first.spiders'

    # Crawl responsibly by identifying yourself (and your website) on the user-agent
    #USER_AGENT = 'first (+http://www.yourdomain.com)'

    # Obey robots.txt rules
    ROBOTSTXT_OBEY = False

    # Configure a delay for requests for the same website (default: 0)
    # See https://docs.scrapy.org/en/latest/topics/settings.html#download-delay
    # See also autothrottle settings and docs
    #DOWNLOAD_DELAY = 3
    # The download delay setting will honor only one of:
    #CONCURRENT_REQUESTS_PER_DOMAIN = 16
    #CONCURRENT_REQUESTS_PER_IP = 16

    # Disable cookies (enabled by default)
    #COOKIES_ENABLED = False

    ITEM_PIPELINES = {
        # 'first.pipelines.FirstPipeline': 300,
        'scrapy_redis.pipelines.RedisPipeline': 300,
        'first.pipelines.VideoPipeline': 100,
    }

    # Distributed crawling
    # Connection parameters for the Redis database
    REDIS_HOST = '172.17.0.2'
    REDIS_PORT = 15672
    REDIS_ENCODING = 'utf-8'
    # REDIS_PARAMS = {
    #     'password': '123456',  # password of the Redis server
    # }

    # Use the scrapy_redis scheduler so that requests are distributed through Redis
    SCHEDULER = "scrapy_redis.scheduler.Scheduler"
    # Make sure all spiders share the same duplicate-filter fingerprints
    DUPEFILTER_CLASS = "scrapy_redis.dupefilter.RFPDupeFilter"
    # Request scheduling strategy; the priority queue is the default
    SCHEDULER_QUEUE_CLASS = 'scrapy_redis.queue.PriorityQueue'
    # (Optional) Keep the scrapy-redis queues in Redis so a crawl can be paused
    # and resumed later, i.e. do not clear the Redis queues
    SCHEDULER_PERSIST = True
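To make the scheduler and dupefilter settings more concrete, here is a minimal in-memory sketch of the idea. It is not how scrapy_redis is implemented: the `SharedScheduler` class and the `fingerprint` helper are hypothetical names, a Python set and heap stand in for the structures kept in Redis, and the fingerprint is simplified to a SHA-1 of method and URL.

```python
import hashlib
import heapq


def fingerprint(method: str, url: str) -> str:
    """Simplified stand-in for a request fingerprint: SHA-1 of method and URL."""
    return hashlib.sha1(f"{method.upper()} {url}".encode("utf-8")).hexdigest()


class SharedScheduler:
    """In-memory stand-in for the Redis-backed queue and dupefilter."""

    def __init__(self):
        self.seen = set()   # plays the role of the fingerprint set in Redis
        self.queue = []     # plays the role of the priority queue in Redis
        self._counter = 0   # tie-breaker so equal priorities stay first-in, first-out

    def enqueue(self, url, priority=0, method="GET"):
        """Queue a request unless its fingerprint was already seen."""
        fp = fingerprint(method, url)
        if fp in self.seen:
            return False
        self.seen.add(fp)
        # heapq is a min-heap, so negate the priority to pop the highest first
        heapq.heappush(self.queue, (-priority, self._counter, url))
        self._counter += 1
        return True

    def next_request(self):
        """Pop the highest-priority URL, or None when the queue is empty."""
        if not self.queue:
            return None
        return heapq.heappop(self.queue)[2]


scheduler = SharedScheduler()
# Two "workers" submit overlapping URLs to the same scheduler.
worker_a = [scheduler.enqueue("http://example.com/page/1"),
            scheduler.enqueue("http://example.com/page/2", priority=5)]
worker_b = [scheduler.enqueue("http://example.com/page/1"),
            scheduler.enqueue("http://example.com/page/3")]
```

Because both workers talk to the same `seen` set, the second submission of `page/1` is rejected, which is the effect the shared `DUPEFILTER_CLASS` setting is after.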

spider.py

    # -*- coding: utf-8 -*-
    import scrapy
    from scrapy_redis.spiders import RedisSpider

    from ..items import Video


    class VideoRedis(RedisSpider):
        name = 'video_redis'
        allowed_domains = ['zuidazy2.net']
        # start_urls = ['http://zuidazy2.net/']
        # Start URLs are read from this Redis key instead of start_urls
        redis_key = 'zuidazy2:start_urls'

        def parse(self, response):
            item = Video()
            # extract_first() returns only the first matching row on the page
            res = response.xpath('//div[@class="xing_vb"]/ul/li/span[@class="xing_vb4"]/a/text()').extract_first()
            url = response.xpath('//div[@class="xing_vb"]/ul/li/span[@class="xing_vb4"]/a/@href').extract_first()
            v_type = response.xpath('//div[@class="xing_vb"]/ul/li/span[@class="xing_vb5"]/text()').extract_first()
            u_time = response.xpath('//div[@class="xing_vb"]/ul/li/span[@class="xing_vb6"]/text()').extract_first()
            if res is not None:
                item['name'] = res
                item['v_type'] = v_type
                item['u_time'] = u_time
                url = 'http://www.zuidazy2.net' + url
                # Follow the detail page and carry the partly filled item along in meta
                yield scrapy.Request(url, callback=self.info_data, meta={'item': item}, dont_filter=True)

            # Follow the pagination link to the next list page
            next_link = response.xpath('//div[@class="xing_vb"]/ul/li/div[@class="pages"]/a[last()-1]/@href').extract_first()
            if next_link:
                yield scrapy.Request('http://www.zuidazy2.net' + next_link, callback=self.parse, dont_filter=True)

        def info_data(self, response):
            # Detail page: collect the play links and finish the item
            item = response.meta['item']
            res = response.xpath('//div[@id="play_2"]/ul/li/text()').extract()
            if res:
                item['url'] = res
            else:
                item['url'] = ''
            yield item
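The spider builds absolute URLs by concatenating a hard-coded prefix with the extracted `href`. A slightly more forgiving option is `urljoin` from the standard library (Scrapy responses also offer `response.urljoin`). The sketch below is only an illustration: the `absolute_url` helper and the example paths are made up, not taken from the site.

```python
from urllib.parse import urljoin

BASE_URL = "http://www.zuidazy2.net"  # same prefix the spider concatenates


def absolute_url(href, base=BASE_URL):
    """Resolve an extracted href against the base; return None if nothing was extracted."""
    if not href:
        return None
    return urljoin(base, href)


# Hypothetical hrefs, only to show the behaviour
detail_url = absolute_url("/detail/1.html")
already_absolute = absolute_url("http://other.example/x")
missing = absolute_url(None)
```

Unlike plain string concatenation, this leaves already-absolute links untouched and does not raise when `extract_first()` returns `None`.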

items.py

    import scrapy


    class FirstItem(scrapy.Item):
        # define the fields for your item here like:
        content = scrapy.Field()


    class Video(scrapy.Item):
        name = scrapy.Field()
        url = scrapy.Field()
        v_type = scrapy.Field()
        u_time = scrapy.Field()

pipelines.py

    import pymysql


    class FirstPipeline(object):
        def process_item(self, item, spider):
            print('*' * 30, item['content'])
            return item


    class VideoPipeline(object):
        def __init__(self):
            self.conn = pymysql.connect(host="39.99.37.85", port=3306, user="root",
                                        password="", database="queyou")
            self.cur = self.conn.cursor()

        def process_item(self, item, spider):
            try:
                # Parameterized query: the driver escapes the values, so a quote
                # in a title cannot break the statement
                # Full-column variant:
                # self.cur.execute("insert into video values(0, %s, %s, %s, %s)",
                #                  (item['name'], item['url'], item['v_type'], item['u_time']))
                self.cur.execute("insert into video values(0, %s, %s)",
                                 (item['name'], item['v_type']))
                self.conn.commit()
            except Exception as e:
                # Print the error and roll back so the connection stays usable
                print(e)
                self.conn.rollback()
            return item

        def close_spider(self, spider):
            self.conn.commit()  # commit any pending data
            self.cur.close()
            self.conn.close()
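Building the INSERT statement by gluing strings together breaks as soon as a title contains a quote. The sketch below shows the placeholder approach using the standard library's `sqlite3` so it runs without a MySQL server. This is a swapped-in stand-in, not the pipeline itself: the three-column `video` table and the `save_video` helper are assumptions, and pymysql uses `%s` placeholders where sqlite3 uses `?`.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
cur = conn.cursor()
# Hypothetical three-column layout, chosen to mirror the two-value INSERT
cur.execute("CREATE TABLE video (id INTEGER PRIMARY KEY, name TEXT, v_type TEXT)")


def save_video(cursor, item):
    """Insert one item using placeholders instead of string concatenation."""
    cursor.execute(
        "INSERT INTO video (name, v_type) VALUES (?, ?)",
        (item["name"], item["v_type"]),
    )


# A title containing a single quote would break a concatenated SQL string
save_video(cur, {"name": "It's a test", "v_type": "drama"})
conn.commit()
rows = cur.execute("SELECT name, v_type FROM video").fetchall()
```

The value with the embedded quote is stored unchanged because the driver handles the escaping.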

2. Install Docker, pull the CentOS 7 image, and install the dependencies (skipped)

3. Copy the project from the host into the CentOS 7 container

docker cp /root/first 35fa:/root

4. Install Redis and enable remote connections

Make the settings in /etc/redis.conf, then start the Redis service with that file:

redis-server /etc/redis.conf

5. Create multiple Docker containers and link them so they can communicate

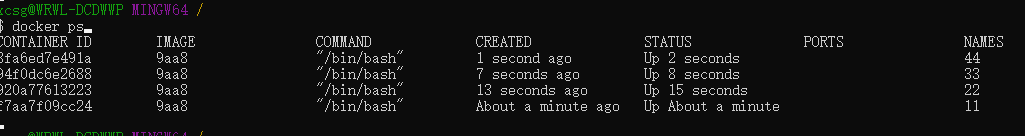

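Run a container:

    docker run -tid --name 11 IMAGE_ID

Create a second container linked to the first so they can communicate:

    docker run -tid --name 22 --link 11 IMAGE_ID

Attach to a container:

    docker attach CONTAINER_ID

Detach without killing the container (exit normally stops it): press Ctrl+P, then Ctrl+Q.

Check the network setup:

    cat /etc/hosts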

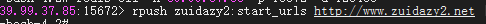
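With `--link`, the linked container shows up as an entry in `/etc/hosts`, so its address can be looked up rather than hard-coded. The following is a rough sketch of that lookup. The `resolve_alias` helper and the sample file content are hypothetical, and whether Redis actually runs in container `11` is an assumption here.

```python
def resolve_alias(hosts_text, alias):
    """Return the first IP mapped to `alias` in /etc/hosts-style text, else None."""
    for line in hosts_text.splitlines():
        line = line.split("#", 1)[0].strip()  # strip comments and surrounding whitespace
        if not line:
            continue
        ip, *names = line.split()
        if alias in names:
            return ip
    return None


# Hypothetical /etc/hosts content as it might look inside container 22
SAMPLE_HOSTS = """\
127.0.0.1   localhost
172.17.0.2  11 0123456789ab
172.17.0.3  fedcba987654
"""

redis_host = resolve_alias(SAMPLE_HOSTS, "11")
```

In a real container the text would come from reading `/etc/hosts`, and the result could be used as the value for `REDIS_HOST`.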

6. Start the spider in every container and define the start URL
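Each container starts the spider with `scrapy crawl video_redis`, after which the spiders wait until a URL appears under the spider's `redis_key`. One way to seed that key from Python is sketched below, assuming the redis-py client's `lpush` and assuming `http://www.zuidazy2.net/` as the start URL. `seed_start_urls` and `FakeRedis` are hypothetical names, and the fake client only exists so the sketch runs without a server.

```python
REDIS_KEY = "zuidazy2:start_urls"  # matches redis_key in the spider


def seed_start_urls(client, urls, key=REDIS_KEY):
    """Push start URLs onto the Redis list the spiders are waiting on."""
    if not urls:
        return 0
    return client.lpush(key, *urls)


class FakeRedis:
    """Minimal in-memory stand-in for a Redis client, for illustration only."""

    def __init__(self):
        self.lists = {}

    def lpush(self, key, *values):
        bucket = self.lists.setdefault(key, [])
        for value in values:
            bucket.insert(0, value)
        return len(bucket)


client = FakeRedis()
# With a real server one would instead create the client with:
#   import redis
#   client = redis.Redis(host="172.17.0.2", port=15672)
pushed = seed_start_urls(client, ["http://www.zuidazy2.net/"])
```

The same thing can be done from the shell with `redis-cli lpush zuidazy2:start_urls http://www.zuidazy2.net/`.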

Done!

The packaged image is attached. Link: https://pan.baidu.com/s/1Sj244da0pOZvL3SZ_qagUg Extraction code: qp23