Symptom:

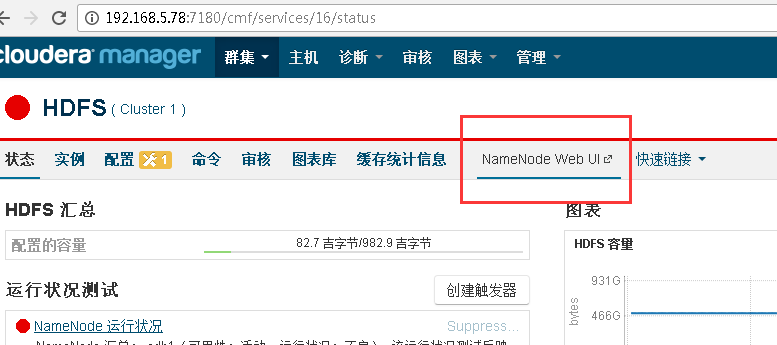

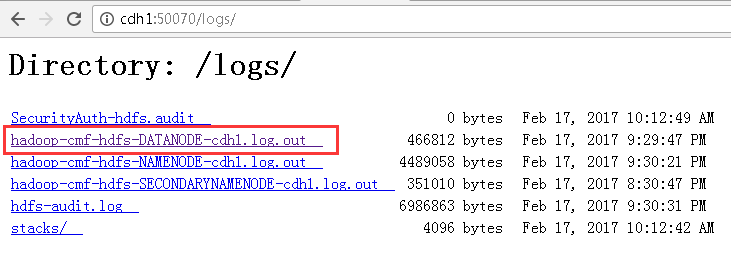

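On one node in the cluster, the DataNode service shut down immediately after starting. No DataNode log could be found on the operating system (possibly the log file was deleted automatically because the service failed to start), but fortunately the error log could still be viewed in the UI: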

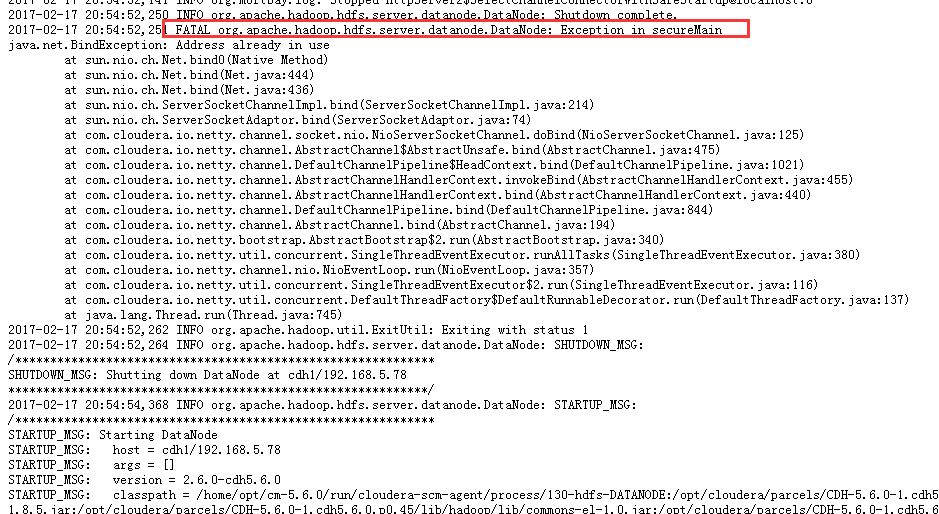

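Opening the error message shows a java.net.BindException: Address already in use (the full log is reproduced at the end of this post).

The DataNode uses port 50010, yet netstat -ntulp | grep 50010 shows nothing listening on that port.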
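One possible reason for the empty netstat output is that the -l flag restricts it to listening sockets, so a lingering connection that is not in the LISTEN state would not appear. As a complementary check, the following minimal sketch simply tries to bind the port and reports whether the kernel accepts it. The helper name can_bind and its parameters are made up for illustration; they are not part of HDFS or any tool mentioned here.

```python
import errno
import socket


def can_bind(port, host="0.0.0.0", reuse_addr=False):
    """Return True if a TCP socket can currently be bound to host:port.

    can_bind is a hypothetical helper for illustration only.
    """
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    try:
        if reuse_addr:
            # Ask the kernel to allow binding despite lingering sockets.
            sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
        sock.bind((host, port))
        return True
    except OSError as exc:
        # EADDRINUSE is the errno behind "Address already in use".
        if exc.errno == errno.EADDRINUSE:
            return False
        raise
    finally:
        sock.close()
```

On the affected node, comparing can_bind(50010) with can_bind(50010, reuse_addr=True) would indicate whether the port is blocked only for sockets that do not set SO_REUSEADDR.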

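Analysis:

Cause: after an application crashes, it may leave a lingering socket behind. To reuse that socket early, the SO_REUSEADDR flag has to be set on the socket when binding to it, but according to the reference below the HDFS DataNode does not do this. The workaround is to bind to the port with an application that does set SO_REUSEADDR and then stop that application. The netcat tool can be used for this.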

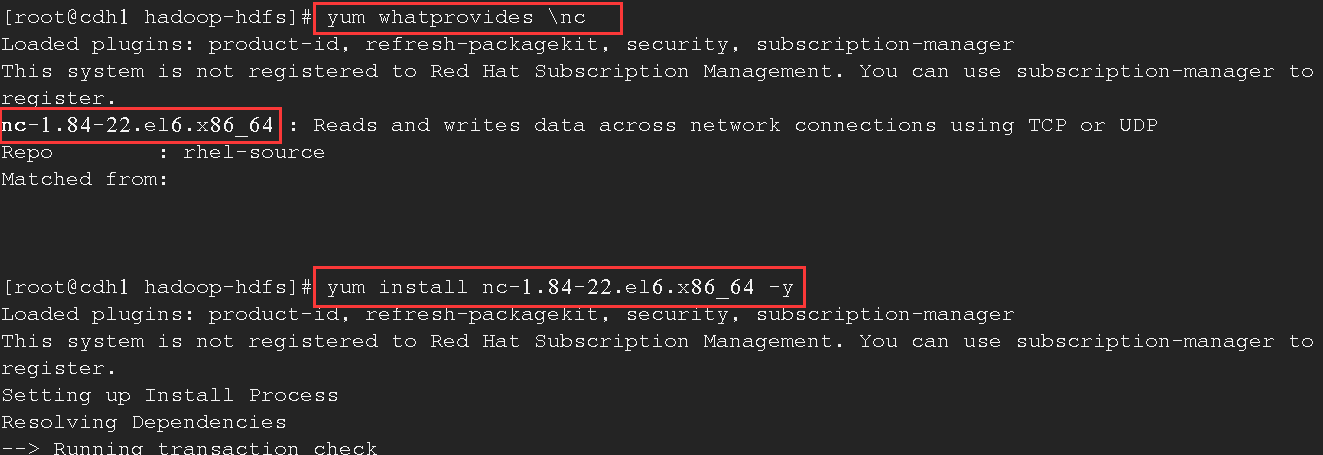

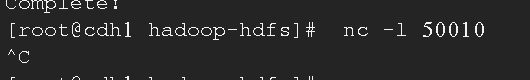

Solution: install the nc tool, use nc to occupy port 50010, then stop nc. After that, the DataNode started normally.

Reference link:

http://www.nosql.se/2013/10/hadoop-hdfs-datanode-java-net-bindexception-address-already-in-use/

Reference text:
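If nc is not available on the node, the same idea (bind the port with SO_REUSEADDR set, then let go of it) can be sketched in Python. This is only an illustration of the workaround described above, not something taken from the reference; the function name bind_and_release is hypothetical, and whether it clears the condition in a given environment is an assumption.

```python
import socket


def bind_and_release(port, host="0.0.0.0", backlog=1):
    """Bind host:port with SO_REUSEADDR set, listen briefly, then close.

    Returns the (host, port) address that was actually bound.
    bind_and_release is a hypothetical helper for illustration only.
    """
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        # Set the flag before bind(); it has no effect afterwards.
        sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
        sock.bind((host, port))
        sock.listen(backlog)
        # Leaving the with-block closes the socket and frees the port.
        return sock.getsockname()
```

Calling bind_and_release(50010) on the affected node and then starting the DataNode again would correspond to the nc steps above.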

After an application crashes it might leave a lingering socket, so to reuse that socket early you need to set the socket flag SO_REUSEADDR when attempting to bind to it to be allowed to reuse it. The HDFS datanode doesn’t do that, and I didn’t want to restart the HBase regionserver (which was locking the socket with a connection it hadn’t realized was dead). The solution was to bind to the port with an application that sets SO_REUSEADDR and then stop that application, I used netcat for that:

    # nc -l 50010

    2017-02-17 20:54:52,250 INFO org.apache.hadoop.hdfs.server.datanode.DataNode: Shutdown complete.
    2017-02-17 20:54:52,251 FATAL org.apache.hadoop.hdfs.server.datanode.DataNode: Exception in secureMain
    java.net.BindException: Address already in use
        at sun.nio.ch.Net.bind0(Native Method)
        at sun.nio.ch.Net.bind(Net.java:444)
        at sun.nio.ch.Net.bind(Net.java:436)
        at sun.nio.ch.ServerSocketChannelImpl.bind(ServerSocketChannelImpl.java:214)
        at sun.nio.ch.ServerSocketAdaptor.bind(ServerSocketAdaptor.java:74)
        at com.cloudera.io.netty.channel.socket.nio.NioServerSocketChannel.doBind(NioServerSocketChannel.java:125)
        at com.cloudera.io.netty.channel.AbstractChannel$AbstractUnsafe.bind(AbstractChannel.java:475)
        at com.cloudera.io.netty.channel.DefaultChannelPipeline$HeadContext.bind(DefaultChannelPipeline.java:1021)
        at com.cloudera.io.netty.channel.AbstractChannelHandlerContext.invokeBind(AbstractChannelHandlerContext.java:455)
        at com.cloudera.io.netty.channel.AbstractChannelHandlerContext.bind(AbstractChannelHandlerContext.java:440)
        at com.cloudera.io.netty.channel.DefaultChannelPipeline.bind(DefaultChannelPipeline.java:844)
        at com.cloudera.io.netty.channel.AbstractChannel.bind(AbstractChannel.java:194)
        at com.cloudera.io.netty.bootstrap.AbstractBootstrap$2.run(AbstractBootstrap.java:340)
        at com.cloudera.io.netty.util.concurrent.SingleThreadEventExecutor.runAllTasks(SingleThreadEventExecutor.java:380)
        at com.cloudera.io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:357)
        at com.cloudera.io.netty.util.concurrent.SingleThreadEventExecutor$2.run(SingleThreadEventExecutor.java:116)
        at com.cloudera.io.netty.util.concurrent.DefaultThreadFactory$DefaultRunnableDecorator.run(DefaultThreadFactory.java:137)
        at java.lang.Thread.run(Thread.java:745)
    2017-02-17 20:54:52,262 INFO org.apache.hadoop.util.ExitUtil: Exiting with status 1
    2017-02-17 20:54:52,264 INFO org.apache.hadoop.hdfs.server.datanode.DataNode: SHUTDOWN_MSG:
    /************************************************************
    SHUTDOWN_MSG: Shutting down DataNode at cdh1/192.168.5.78