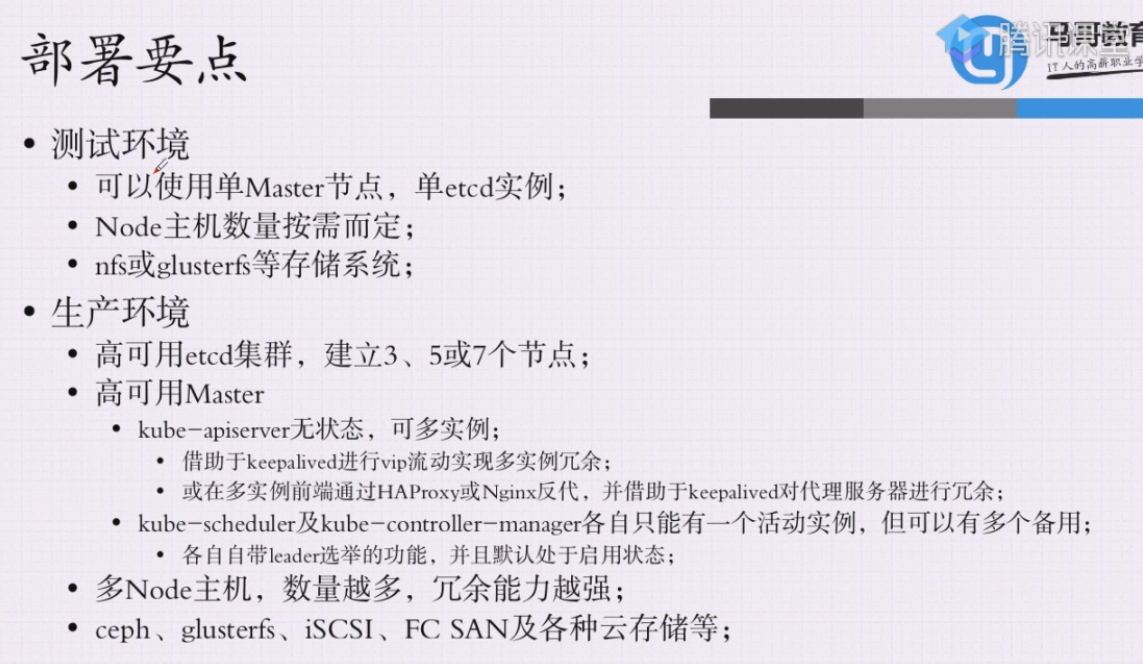

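1. Deployment requirements

2. Deployment tools

3. Preparing the environment

3.1 Test environment overview

The test environment consists of master01, node01, node02, and node03. The required specification is 4 CPU cores and 4 GB of memory. The domain is ilinux.io.

(1) Set up NTP time synchronization.

(2) Resolve each node's hostname through DNS; with only a few hosts, as in this test environment, the hosts file works as well.

(3) Disable iptables and firewalld on every node.

(4) Disable SELinux on every node.

(5) Disable swap devices on every node.

(6) To use the ipvs proxy mode, also load the ipvs kernel modules on every node.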

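3.2 Configuring time synchronization

If the nodes can reach the internet directly, just start the chronyd system service and enable it to start at boot.

# Install on all nodes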

yum -y install chrony

# Start on all nodes

systemctl start chronyd

systemctl enable chronyd

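However, a local time server is recommended, especially when there are many nodes. If one is available, edit /etc/chrony.conf on each node and point the time server entry at that host, using the following format: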

server CHRONY-SERVER-NAME-OR-IP iburst
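
As a minimal sketch of that edit, assuming GNU sed and a stock chrony.conf whose default sources are written as `server` or `pool` lines, the change could be scripted as follows. The function name and the example server address are illustrative and do not come from the original setup.

```shell
# Point chrony at a local time server (sketch; requires GNU sed).
# $1: path to chrony.conf, $2: name or IP of the local time server
set_chrony_server() {
    local conf="$1" server="$2"
    # Comment out the active "server"/"pool" entries so only the local server is used
    sed -i -E 's/^(server|pool)[[:space:]]/#&/' "$conf"
    # Append the local server in the "server NAME iburst" format
    printf 'server %s iburst\n' "$server" >> "$conf"
}

# Example (as root on every node; 10.0.0.1 is a hypothetical time server):
#   set_chrony_server /etc/chrony.conf 10.0.0.1
#   systemctl restart chronyd
```

Running the function twice would append the server line twice, so it is meant for a one-time change on a fresh node.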

3.3 Hostname resolution

To keep the setup simple, this test environment resolves node names through the hosts file:

10.0.0.11 master01 master01.ilinux.io

10.0.0.12 node01 node01.ilinux.io

10.0.0.13 node02 node02.ilinux.io

10.0.0.14 node03 node03.ilinux.io
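
Because these entries have to be added on every node, it can help to append them idempotently. The following is a sketch only; the helper name is an assumption, and it treats the first field of each line as the IP address.

```shell
# Append a hosts entry only if no line already starts with the same IP.
# $1: hosts file, $2: IP address, remaining arguments: host names
add_hosts_entry() {
    local file="$1" ip="$2"
    shift 2
    # Compare the first field exactly, so 10.0.0.1 does not match 10.0.0.11
    if ! awk -v ip="$ip" '$1 == ip { found = 1 } END { exit !found }' "$file"; then
        printf '%s %s\n' "$ip" "$*" >> "$file"
    fi
}

# Example (as root on every node):
#   add_hosts_entry /etc/hosts 10.0.0.11 master01 master01.ilinux.io
#   add_hosts_entry /etc/hosts 10.0.0.12 node01 node01.ilinux.io
```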

3.4 Disabling the iptables or firewalld service

Steps omitted.

3.5 Disabling SELinux

Steps omitted.
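
The original notes skip this step. One common way to do it on a CentOS-style system is sketched below, assuming GNU sed and the usual /etc/selinux/config layout; the function name is illustrative.

```shell
# Set SELinux to disabled in its config file so the change survives a reboot.
# $1: path to the SELinux config file (normally /etc/selinux/config)
disable_selinux_config() {
    local conf="$1"
    # Only the SELINUX= line is touched; SELINUXTYPE= is left alone
    sed -i -E 's/^SELINUX=(enforcing|permissive)$/SELINUX=disabled/' "$conf"
}

# Example (as root on every node):
#   setenforce 0                                  # permissive for the running system
#   disable_selinux_config /etc/selinux/config    # takes full effect after a reboot
```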

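3.6 Disabling swap devices

When deploying a cluster, kubeadm checks by default whether swap is disabled on the host and aborts the deployment if it is not.

So when the host has ample resources, disable all swap devices; otherwise, the later kubeadm init and kubeadm join commands need an extra option to ignore the preflight error (for example --ignore-preflight-errors=Swap).

# All nodes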

swapoff -a

tail -1 /etc/fstab # the swap entry is commented out so that swap stays off after a reboot

#UUID=5880d9d0-d597-4f0c-b7b7-5bb40a9577ac swap swap defaults 0 0
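
The fstab line above was commented out by hand. A scripted equivalent might look like this sketch, which assumes GNU sed and whitespace-separated fstab fields; the function name is illustrative.

```shell
# Comment out every active fstab entry that mounts swap.
# $1: path to the fstab file (normally /etc/fstab)
comment_out_swap() {
    local fstab="$1"
    # Lines that already start with '#' are skipped, so reruns change nothing
    sed -i -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$fstab"
}

# Example (as root on every node):
#   swapoff -a
#   comment_out_swap /etc/fstab
```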

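3.7 Loading the ipvs kernel modules (not used in this deployment)

Create the module-loading script /etc/sysconfig/modules/ipvs.modules, which defines the kernel modules to load automatically.

# All nodes

# The heredoc delimiter is quoted so that $(...) and the $variables are written to the script literally instead of being expanded now

cat > /etc/sysconfig/modules/ipvs.modules << 'EOF'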

#!/bin/bash

ipvs_mods_dir="/usr/lib/modules/$(uname -r)/kernel/net/netfilter/ipvs"

for mod in $(ls $ipvs_mods_dir | grep -o "^[^.]*")

do

/sbin/modinfo -F filename $mod &> /dev/null

if [ $? -eq 0 ]

then

/sbin/modprobe $mod

fi

done

EOF

# Make the script executable and load the kernel modules into the running system manually

chmod +x /etc/sysconfig/modules/ipvs.modules

bash /etc/sysconfig/modules/ipvs.modules

4. Deploying k8s with kubeadm

4.1 Installing docker-ce

4.1.1 Installing docker-ce

# Run on all nodes

yum install -y yum-utils device-mapper-persistent-data lvm2

yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

sed -i 's+download.docker.com+mirrors.aliyun.com/docker-ce+' /etc/yum.repos.d/docker-ce.repo

yum makecache fast

yum -y install docker-ce

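4.1.2 Starting the docker service

# Run on all nodes

## Since version 1.13, docker sets the default policy of the iptables FORWARD chain to DROP, which can break the packet forwarding that a Kubernetes cluster depends on. To work around this, add the following line below ExecStart=/usr/bin/dockerd in /usr/lib/systemd/system/docker.service:

ExecStartPost=/usr/sbin/iptables -P FORWARD ACCEPT

## The resulting configuration

vim /usr/lib/systemd/system/docker.service

... (some content omitted)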

[Service]

# for containers run by docker

ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock

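# added line (the note sits on its own line because systemd does not treat trailing text on a directive as a comment)

ExecStartPost=/usr/sbin/iptables -P FORWARD ACCEPT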

ExecReload=/bin/kill -s HUP $MAINPID

TimeoutSec=0

... (some content omitted)

## Start docker

systemctl daemon-reload

systemctl start docker

systemctl enable docker
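
An alternative to editing the packaged unit file, which a docker-ce package update may overwrite, is a systemd drop-in. This is a different technique from the one used in these notes; the sketch below assumes the standard drop-in directory, and the function and file names are illustrative.

```shell
# Write a drop-in that adds the ExecStartPost line without touching docker.service itself.
# $1: drop-in directory (normally /etc/systemd/system/docker.service.d)
write_forward_accept_dropin() {
    local dir="$1"
    mkdir -p "$dir"
    cat > "$dir/forward-accept.conf" << 'EOF'
[Service]
ExecStartPost=/usr/sbin/iptables -P FORWARD ACCEPT
EOF
}

# Example (as root on every node):
#   write_forward_accept_dropin /etc/systemd/system/docker.service.d
#   systemctl daemon-reload
#   systemctl restart docker
```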

## Verify that the setting took effect

[root@k8s-master ~]# iptables -nL

Chain INPUT (policy ACCEPT)

target prot opt source destination

Chain FORWARD (policy ACCEPT) # the policy here should be ACCEPT

target prot opt source destination

DOCKER-USER all -- 0.0.0.0/0 0.0.0.0/0

DOCKER-ISOLATION-STAGE-1 all -- 0.0.0.0/0 0.0.0.0/0

ACCEPT all -- 0.0.0.0/0 0.0.0.0/0 ctstate RELATED,ESTABLISHED

DOCKER all -- 0.0.0.0/0 0.0.0.0/0

ACCEPT all -- 0.0.0.0/0 0.0.0.0/0

ACCEPT all -- 0.0.0.0/0 0.0.0.0/0

... (some content omitted)

## Resolving the warnings shown by docker info

WARNING: bridge-nf-call-iptables is disabled

WARNING: bridge-nf-call-ip6tables is disabled

[root@k8s-master ~]# sysctl -a|grep bridge

net.bridge.bridge-nf-call-arptables = 0

net.bridge.bridge-nf-call-ip6tables = 0 # change this value to 1

net.bridge.bridge-nf-call-iptables = 0 # change this value to 1

net.bridge.bridge-nf-filter-pppoe-tagged = 0

net.bridge.bridge-nf-filter-vlan-tagged = 0

net.bridge.bridge-nf-pass-vlan-input-dev = 0

sysctl: reading key "net.ipv6.conf.all.stable_secret"

sysctl: reading key "net.ipv6.conf.default.stable_secret"

sysctl: reading key "net.ipv6.conf.docker0.stable_secret"

sysctl: reading key "net.ipv6.conf.eth0.stable_secret"

sysctl: reading key "net.ipv6.conf.lo.stable_secret"

cat > /etc/sysctl.d/k8s.conf << EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

~]# sysctl -p /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

## Restart docker

systemctl restart docker

4.2 Installing the kubernetes packages

Run on all nodes

yum repository mirror: https://developer.aliyun.com/mirror/?spm=a2c6h.13651104.0.d1002.2a422a7b083uEZ

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

yum install -y kubelet kubeadm kubectl

systemctl enable kubelet

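4.3 Initializing the master node

# If swap has not been disabled, edit the kubelet configuration file /etc/sysconfig/kubelet so that an enabled swap is not treated as an error:

KUBELET_EXTRA_ARGS="--fail-swap-on=false" # do not fail when swap is enabled

# kubeadm init supports two ways of initializing: passing the key deployment settings as command-line options, or using a dedicated YAML configuration file that lets you customize every deployment parameter. The second is recommended.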
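
The configuration-file method could look like the sketch below, which mirrors the flags of the kubeadm init command used in this section. It assumes the kubeadm.k8s.io/v1beta2 configuration API that ships with kubeadm 1.20; the function name and the output path are illustrative only.

```shell
# Write a kubeadm configuration file equivalent to the command-line flags.
# $1: path of the file to create
write_kubeadm_config() {
    local out="$1"
    cat > "$out" << 'EOF'
apiVersion: kubeadm.k8s.io/v1beta2
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 10.0.0.11
---
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.20.4
imageRepository: registry.aliyuncs.com/google_containers
networking:
  serviceSubnet: 10.1.0.0/16
  podSubnet: 10.244.0.0/16
EOF
}

# Example (as root on the master node):
#   write_kubeadm_config /root/kubeadm-config.yaml
#   kubeadm init --config /root/kubeadm-config.yaml
```

Keeping the settings in a file makes the initialization repeatable and easier to review than a long command line.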

[root@k8s-master ~]# rpm -q kubeadm

kubeadm-1.20.4-0.x86_64

[root@k8s-master ~]# rpm -q kubelet

kubelet-1.20.4-0.x86_64

[root@k8s-master ~]# kubeadm init --apiserver-advertise-address=10.0.0.11 --image-repository registry.aliyuncs.com/google_containers --kubernetes-version v1.20.4 --service-cidr=10.1.0.0/16 --pod-network-cidr=10.244.0.0/16

... (some content omitted)

Your Kubernetes control-plane has initialized successfully! # initialization succeeded

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

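kubeadm join 10.0.0.11:6443 --token 16d7wn.7z6m9a73jlalki6j --discovery-token-ca-cert-hash sha256:9cbcf118f00c8988664e5c97dbef0ec3be7989a9bbfb5e21a585dd09ca0d968a

# Copy the join command above; it is needed later to add the worker nodes.

# If you forgot to copy it, running `kubeadm token create --print-join-command` on the master prints a new one. See also: https://blog.csdn.net/wzy_168/article/details/106552841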

[root@k8s-master ~]# mkdir .kube

[root@k8s-master ~]# cp /etc/kubernetes/admin.conf .kube/config

[root@k8s-master ~]# systemctl enable kubelet.service # kubeadm init already starts kubelet

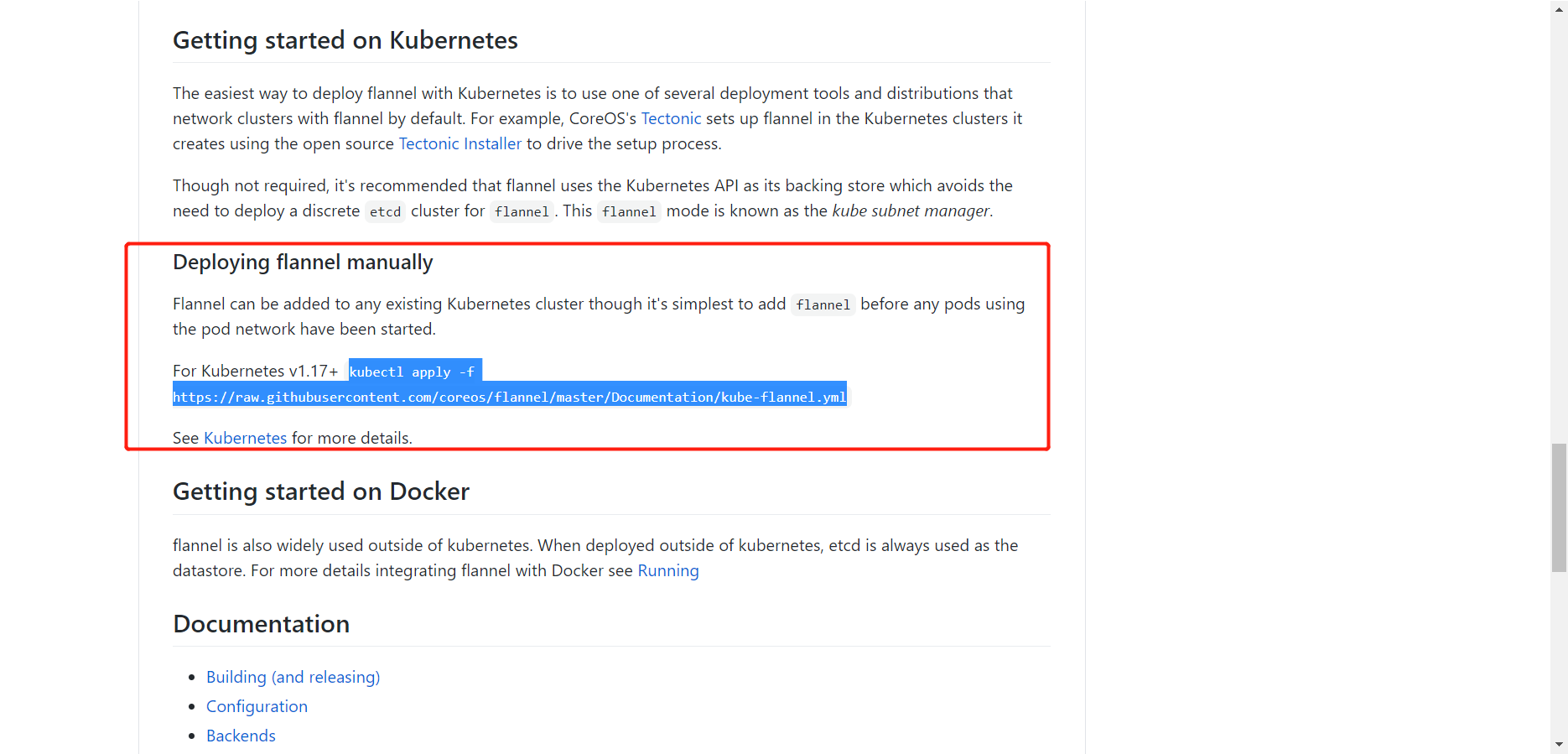

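4.3.1 Installing the network plugin

Project page: https://github.com/flannel-io/flannel

# This step needs a working connection to GitHub; on a poor network the download may fail. If it does, search for the error message; the usual fix is to look up the IP address of raw.githubusercontent.com and add it to the hosts file. The lookup sites appear to be hosted outside China.

[root@k8s-master ~]# kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml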

podsecuritypolicy.policy/psp.flannel.unprivileged created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds created

[root@k8s-master ~]# kubectl get po -n kube-system # the pods do not reach Running immediately; it takes a while before all of them are healthy

NAME READY STATUS RESTARTS AGE

coredns-7f89b7bc75-cmtlk 1/1 Running 0 22m

coredns-7f89b7bc75-rqn2r 1/1 Running 0 22m

etcd-k8s-master 1/1 Running 0 22m

kube-apiserver-k8s-master 1/1 Running 0 22m

kube-controller-manager-k8s-master 1/1 Running 0 22m

kube-flannel-ds-qlfrg 1/1 Running 0 2m30s

kube-proxy-wxv4l 1/1 Running 0 22m

kube-scheduler-k8s-master 1/1 Running 0 22m

[root@k8s-master ~]# kubectl get no # at this point the master node is fully initialized

NAME STATUS ROLES AGE VERSION

k8s-master Ready control-plane,master 22m v1.20.4

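4.4 Initializing the worker nodes

The steps are the same on every worker node

# If swap has not been disabled, edit the kubelet configuration file /etc/sysconfig/kubelet so that an enabled swap is not treated as an error:

KUBELET_EXTRA_ARGS="--fail-swap-on=false" # do not fail when swap is enabled

## Join the cluster. This step first pulls the required images, which takes some time

kubeadm join 10.0.0.11:6443 --token 16d7wn.7z6m9a73jlalki6j --discovery-token-ca-cert-hash sha256:9cbcf118f00c8988664e5c97dbef0ec3be7989a9bbfb5e21a585dd09ca0d968a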

~]# docker images # once the images have been pulled, the node joins the cluster successfully

REPOSITORY TAG IMAGE ID CREATED SIZE

registry.aliyuncs.com/google_containers/kube-proxy v1.20.4 c29e6c583067 41 hours ago 118MB

quay.io/coreos/flannel v0.13.1-rc2 dee1cac4dd20 2 weeks ago 64.3MB

registry.aliyuncs.com/google_containers/pause 3.2 80d28bedfe5d 12 months ago 683kB

~]# systemctl enable kubelet.service

[root@k8s-master ~]# kubectl get no

NAME STATUS ROLES AGE VERSION

k8s-master Ready control-plane,master 85m v1.20.4

k8s-node-1 Ready <none> 53m v1.20.4

k8s-node-2 Ready <none> 24m v1.20.4

node-03 Ready <none> 24m v1.20.4

5. Fixing the unhealthy cluster status

# After the master node is initialized, checking the cluster status shows the following problem

[root@master01 ~]# kubectl get cs

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

scheduler Unhealthy Get "http://127.0.0.1:10251/healthz": dial tcp 127.0.0.1:10251: connect: connection refused # scheduler is unhealthy

controller-manager Unhealthy Get "http://127.0.0.1:10252/healthz": dial tcp 127.0.0.1:10252: connect: connection refused # controller-manager is unhealthy

etcd-0 Healthy {"health":"true"}

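# The cause is that the manifests of these two components set --port=0, so ports 10251 and 10252, which this check probes, are not open.

# To fix it, edit the two files below, delete the `- --port=0` line in each, and then restart the kubelet service.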

/etc/kubernetes/manifests/kube-controller-manager.yaml

/etc/kubernetes/manifests/kube-scheduler.yaml

# According to reports online, this unhealthy status does not seem to affect normal use.
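
The manual edit described above can also be scripted. The following is a minimal sketch, assuming GNU sed and that the flag appears on its own line as `- --port=0`; the function name is illustrative.

```shell
# Delete the "- --port=0" argument line from a static Pod manifest.
# $1: path to the manifest file
remove_port_zero() {
    local manifest="$1"
    # Match the whole line (any indentation) so flags such as --secure-port are untouched
    sed -i -E '/^[[:space:]]*-[[:space:]]+--port=0[[:space:]]*$/d' "$manifest"
}

# Example (as root on the master node):
#   remove_port_zero /etc/kubernetes/manifests/kube-controller-manager.yaml
#   remove_port_zero /etc/kubernetes/manifests/kube-scheduler.yaml
#   systemctl restart kubelet
```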

6. Setting up command completion

kubectl completion bash > ~/.kube/completion.bash.inc

echo source ~/.kube/completion.bash.inc >> /root/.bashrc

source ~/.kube/completion.bash.inc

Note

When adding worker nodes, watch for this warning:

[WARNING SystemVerification]: this Docker version is not on the list of validated versions: 20.10.3. Latest validated version: 19.03

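Roughly, this means that the latest docker version validated for k8s is 19.03, while this environment runs 20.10.3.

Since this is a learning environment, that is acceptable, but a production environment should use a validated version.

7. Changing the k8s certificate expiry

In a k8s cluster installed with kubeadm, the certificates that kubeadm signs are valid for 1 year by default (the CA certificates are valid for 10 years, as the output below shows). The validity can be changed manually to 10 years or even 100 years.

7.1 Checking certificate validity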

[root@master01 ~]# cd /etc/kubernetes/pki

[root@master01 pki]# ll

total 56

-rw-r--r-- 1 root root 1265 Feb 23 10:05 apiserver.crt

-rw-r--r-- 1 root root 1135 Feb 23 10:05 apiserver-etcd-client.crt

-rw------- 1 root root 1679 Feb 23 10:05 apiserver-etcd-client.key

-rw------- 1 root root 1675 Feb 23 10:05 apiserver.key

-rw-r--r-- 1 root root 1143 Feb 23 10:05 apiserver-kubelet-client.crt

-rw------- 1 root root 1679 Feb 23 10:05 apiserver-kubelet-client.key

-rw-r--r-- 1 root root 1066 Feb 23 10:05 ca.crt

-rw------- 1 root root 1675 Feb 23 10:05 ca.key

drwxr-xr-x 2 root root 162 Feb 23 10:05 etcd

-rw-r--r-- 1 root root 1078 Feb 23 10:05 front-proxy-ca.crt

-rw------- 1 root root 1679 Feb 23 10:05 front-proxy-ca.key

-rw-r--r-- 1 root root 1103 Feb 23 10:05 front-proxy-client.crt

-rw------- 1 root root 1675 Feb 23 10:05 front-proxy-client.key

-rw------- 1 root root 1675 Feb 23 10:05 sa.key

-rw------- 1 root root 451 Feb 23 10:05 sa.pub

[root@master01 pki]# for i in $(ls *.crt); do echo "===== $i ====="; openssl x509 -in $i -text -noout | grep -A 3 'Validity' ; done

===== apiserver.crt =====

Validity

Not Before: Feb 23 02:05:22 2021 GMT # start of validity

Not After : Feb 23 02:05:22 2022 GMT # end of validity

Subject: CN=kube-apiserver

===== apiserver-etcd-client.crt =====

Validity

Not Before: Feb 23 02:05:22 2021 GMT

Not After : Feb 23 02:05:23 2022 GMT

Subject: O=system:masters, CN=kube-apiserver-etcd-client

===== apiserver-kubelet-client.crt =====

Validity

Not Before: Feb 23 02:05:22 2021 GMT

Not After : Feb 23 02:05:22 2022 GMT

Subject: O=system:masters, CN=kube-apiserver-kubelet-client

===== ca.crt =====

Validity

Not Before: Feb 23 02:05:22 2021 GMT

Not After : Feb 21 02:05:22 2031 GMT

Subject: CN=kubernetes

===== front-proxy-ca.crt =====

Validity

Not Before: Feb 23 02:05:22 2021 GMT

Not After : Feb 21 02:05:22 2031 GMT

Subject: CN=front-proxy-ca

===== front-proxy-client.crt =====

Validity

Not Before: Feb 23 02:05:22 2021 GMT

Not After : Feb 23 02:05:22 2022 GMT

Subject: CN=front-proxy-client

7.2 Changing the certificate expiry

7.2.1 Setting up the Go environment

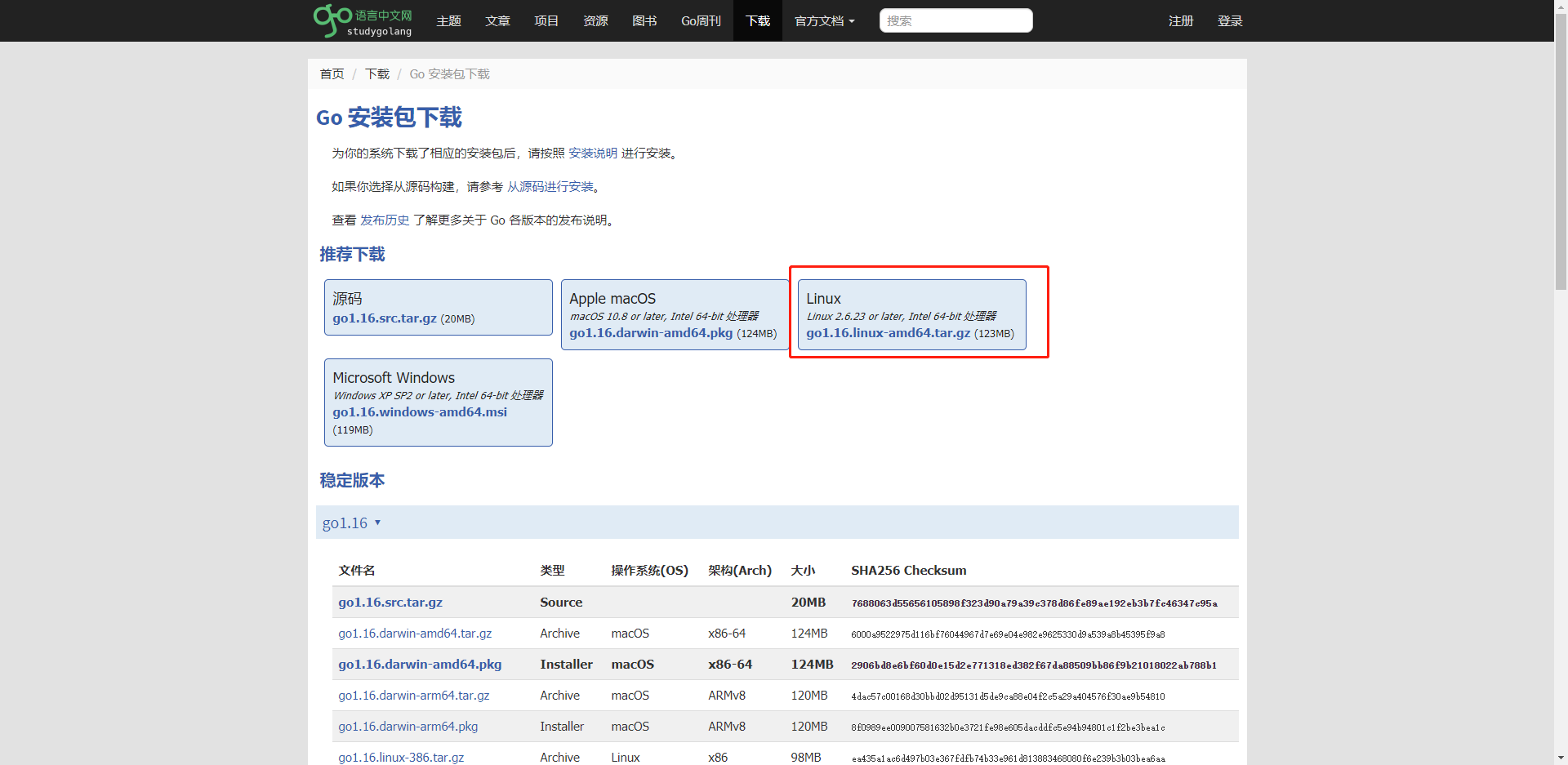

Go download page: https://studygolang.com/dl

[root@master01 pki]# cd

[root@master01 ~]# wget https://studygolang.com/dl/golang/go1.16.linux-amd64.tar.gz

[root@master01 ~]# tar zxf go1.16.linux-amd64.tar.gz -C /usr/local/

[root@master01 ~]# tail -1 /etc/profile

export PATH=$PATH:/usr/local/go/bin # append this line to the end of the file

[root@master01 ~]# source /etc/profile

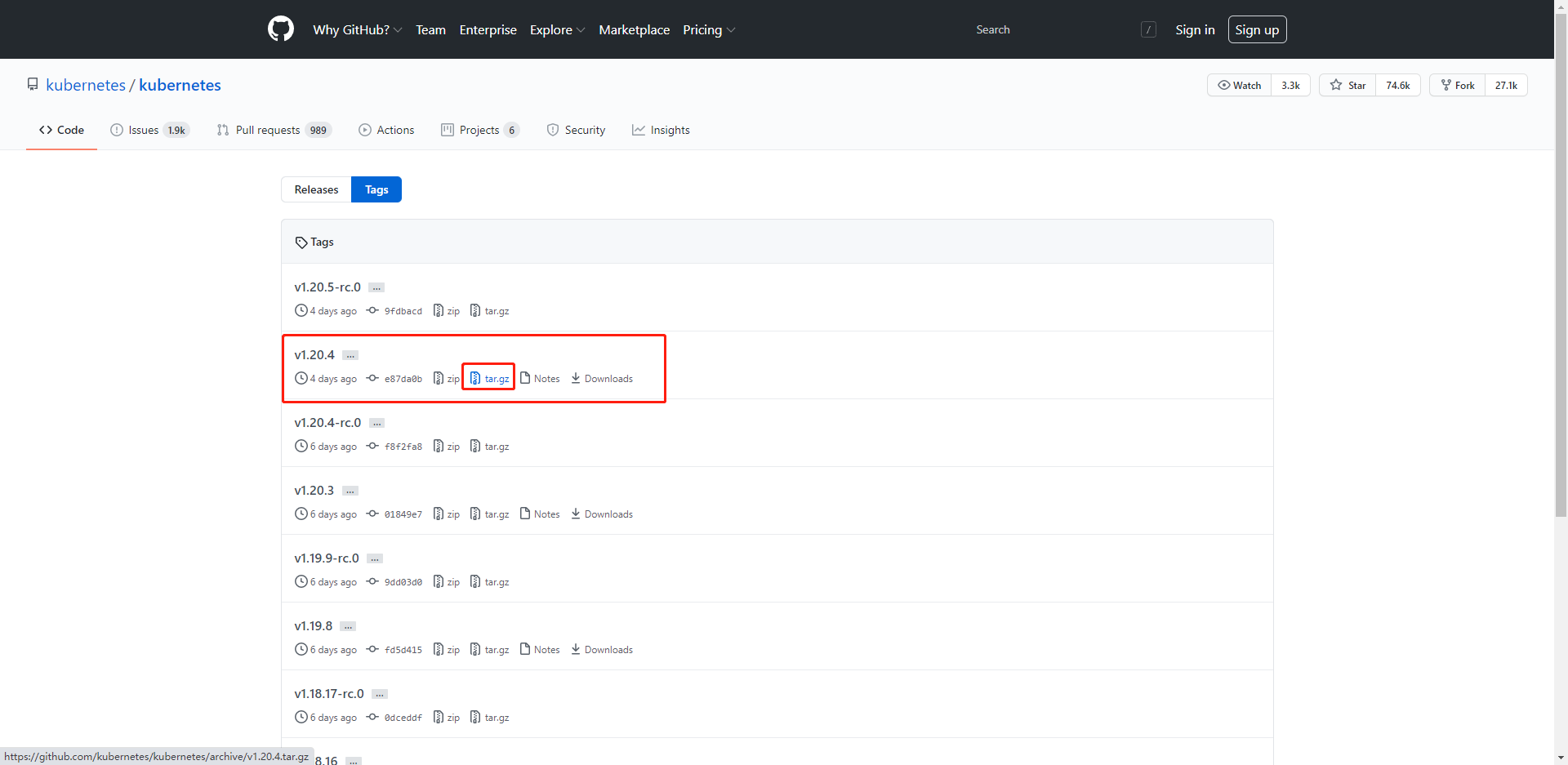

7.2.2 Downloading the Kubernetes source and changing the certificate policy

The source archive must be the same version as the one currently in use.

[root@master01 ~]# kubectl version

Client Version: version.Info{Major:"1", Minor:"20", GitVersion:"v1.20.4", GitCommit:"e87da0bd6e03ec3fea7933c4b5263d151aafd07c", GitTreeState:"clean", BuildDate:"2021-02-18T16:12:00Z", GoVersion:"go1.15.8", Compiler:"gc", Platform:"linux/amd64"}

Server Version: version.Info{Major:"1", Minor:"20", GitVersion:"v1.20.4", GitCommit:"e87da0bd6e03ec3fea7933c4b5263d151aafd07c", GitTreeState:"clean", BuildDate:"2021-02-18T16:03:00Z", GoVersion:"go1.15.8", Compiler:"gc", Platform:"linux/amd64"}

[root@master01 ~]# wget -O kubernetes-1.20.4.tar.gz https://github.com/kubernetes/kubernetes/archive/v1.20.4.tar.gz

[root@master01 ~]# tar zxf kubernetes-1.20.4.tar.gz

[root@master01 ~]# cd kubernetes-1.20.4/

[root@master01 kubernetes-1.20.4]# vim cmd/kubeadm/app/util/pkiutil/pki_helpers.go

# ... (some content omitted)

func NewSignedCert(cfg *CertConfig, key crypto.Signer, caCert *x509.Certificate, caKey crypto.Signer) (*x509.Certificate, error) {

const effectyear = time.Hour * 24 * 365 * 100 // add this line

serial, err := cryptorand.Int(cryptorand.Reader, new(big.Int).SetInt64(math.MaxInt64))

if err != nil {

return nil, err

}

# ... (some content omitted)

DNSNames: cfg.AltNames.DNSNames,

IPAddresses: cfg.AltNames.IPs,

SerialNumber: serial,

NotBefore: caCert.NotBefore,

// NotAfter: time.Now().Add(kubeadmconstants.CertificateValidity).UTC(), // original line, commented out

NotAfter: time.Now().Add(effectyear).UTC(), // add this line

KeyUsage: x509.KeyUsageKeyEncipherment | x509.KeyUsageDigitalSignature,

ExtKeyUsage: cfg.Usages,

[root@master01 kubernetes-1.20.4]# pwd

/root/kubernetes-1.20.4

[root@master01 kubernetes-1.20.4]# make WHAT=cmd/kubeadm GOFLAGS=-v

[root@master01 kubernetes-1.20.4]# echo $? # confirm that the build succeeded

0

# Copy the rebuilt kubeadm to a staging location

[root@master01 kubernetes-1.20.4]# cp -a _output/bin/kubeadm /root/kubeadm-new

7.2.3 Replacing kubeadm and backing up the existing certificates

[root@master01 kubernetes-1.20.4]# mv /usr/bin/kubeadm /usr/bin/kubeadm_`date +%F`

[root@master01 kubernetes-1.20.4]# mv /root/kubeadm-new /usr/bin/kubeadm

[root@master01 kubernetes-1.20.4]# chmod 755 /usr/bin/kubeadm

[root@master01 kubernetes-1.20.4]# cp -a /etc/kubernetes/pki/ /etc/kubernetes/pki_`date +%F`

7.2.4 Renewing the certificates

[root@master01 kubernetes-1.20.4]# cd

[root@master01 ~]# kubeadm alpha certs renew all

[root@master01 ~]# cp -f /etc/kubernetes/admin.conf ~/.kube/config

[root@master01 ~]# cd /etc/kubernetes/pki

[root@master01 pki]# for i in $(ls *.crt); do echo "===== $i ====="; openssl x509 -in $i -text -noout | grep -A 3 'Validity' ; done

===== apiserver.crt =====

Validity

Not Before: Feb 23 02:05:22 2021 GMT

Not After : Jan 30 03:24:41 2121 GMT # now expires in about 100 years

Subject: CN=kube-apiserver

===== apiserver-etcd-client.crt =====

Validity

Not Before: Feb 23 02:05:22 2021 GMT

Not After : Jan 30 03:24:42 2121 GMT

Subject: O=system:masters, CN=kube-apiserver-etcd-client

===== apiserver-kubelet-client.crt =====

Validity

Not Before: Feb 23 02:05:22 2021 GMT

Not After : Jan 30 03:24:42 2121 GMT

Subject: O=system:masters, CN=kube-apiserver-kubelet-client

===== ca.crt =====

Validity

Not Before: Feb 23 02:05:22 2021 GMT

Not After : Feb 21 02:05:22 2031 GMT

Subject: CN=kubernetes

===== front-proxy-ca.crt =====

Validity

Not Before: Feb 23 02:05:22 2021 GMT

Not After : Feb 21 02:05:22 2031 GMT

Subject: CN=front-proxy-ca

===== front-proxy-client.crt =====

Validity

Not Before: Feb 23 02:05:22 2021 GMT

Not After : Jan 30 03:24:43 2121 GMT

Subject: CN=front-proxy-client
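
The new expiry falls on Jan 30 rather than Feb 23 because effectyear is 100 x 365 days = 36500 days, which is 24 leap days short of 100 calendar years. The sketch below reproduces the arithmetic with GNU date, assuming the renewal ran at 03:24:41 GMT on Feb 23 2021 (inferred from the output above, not stated in the notes); the function name is illustrative.

```shell
# Print the NotAfter timestamp of a certificate issued at $1 (UTC) and valid for $2 days.
# Requires GNU date.
not_after() {
    local issued="$1" days="$2" start
    start=$(date -u -d "$issued" +%s)
    # One day is 86400 seconds; format the result the way openssl prints it
    LC_ALL=C date -u -d "@$(( start + days * 86400 ))" '+%b %d %H:%M:%S %Y GMT'
}

not_after '2021-02-23 03:24:41' 36500   # prints: Jan 30 03:24:41 2121 GMT
```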

7.2.5 Restarting the affected services

After renewing the certificates, you must restart kube-apiserver, kube-controller-manager, kube-scheduler, and etcd so that they pick up the new certificates.

kubectl delete po kube-apiserver-master01 -n kube-system

kubectl delete po kube-controller-manager-master01 -n kube-system

kubectl delete po kube-scheduler-master01 -n kube-system

kubectl delete po etcd-master01 -n kube-system

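# Question: why do these pods start again automatically right after being deleted?

## From a senior colleague's explanation and some searching on Baidu: the Pods discussed most often are created and managed through a Deployment, DaemonSet, StatefulSet, and so on. The Pods here are a special kind, called static Pods.

### A static Pod is managed by kubelet and exists only on one specific node. It cannot be managed through the API server and cannot be associated with a ReplicationController, Deployment, or DaemonSet.

### kubelet scans staticPodPath and creates a Pod for every yaml file it finds in that directory. To delete such a Pod, remove its manifest file.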

[root@master01 ~]# cat /var/lib/kubelet/config.yaml | grep staticPodPath

staticPodPath: /etc/kubernetes/manifests

[root@master01 ~]# ll /etc/kubernetes/manifests

total 16

-rw------- 1 root root 2192 Feb 23 10:05 etcd.yaml

-rw------- 1 root root 3309 Feb 23 10:05 kube-apiserver.yaml

-rw------- 1 root root 2811 Feb 23 10:36 kube-controller-manager.yaml

-rw------- 1 root root 1398 Feb 23 10:36 kube-scheduler.yaml
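
Since kubelet owns these Pods through their manifest files, one way to force a real restart is to move a manifest out of staticPodPath for a short time and then move it back. The sketch below assumes kubelet rescans the directory within about 20 seconds (its default file check interval); the function name is illustrative.

```shell
# Restart a static Pod by moving its manifest out of staticPodPath and back again.
# $1: manifest directory, $2: manifest file name, $3: seconds to wait (default 20)
bounce_static_pod() {
    local dir="$1" name="$2" delay="${3:-20}" stash
    stash=$(mktemp -d)
    mv "$dir/$name" "$stash/$name"   # kubelet stops the Pod once the manifest is gone
    sleep "$delay"                   # give kubelet time to notice the change
    mv "$stash/$name" "$dir/$name"   # kubelet recreates the Pod from the manifest
    rmdir "$stash"
}

# Example (as root on the master node, one component at a time):
#   bounce_static_pod /etc/kubernetes/manifests kube-scheduler.yaml
```

Bouncing etcd and kube-apiserver briefly interrupts the control plane, so on a single-master cluster it is safer to handle them one after another.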

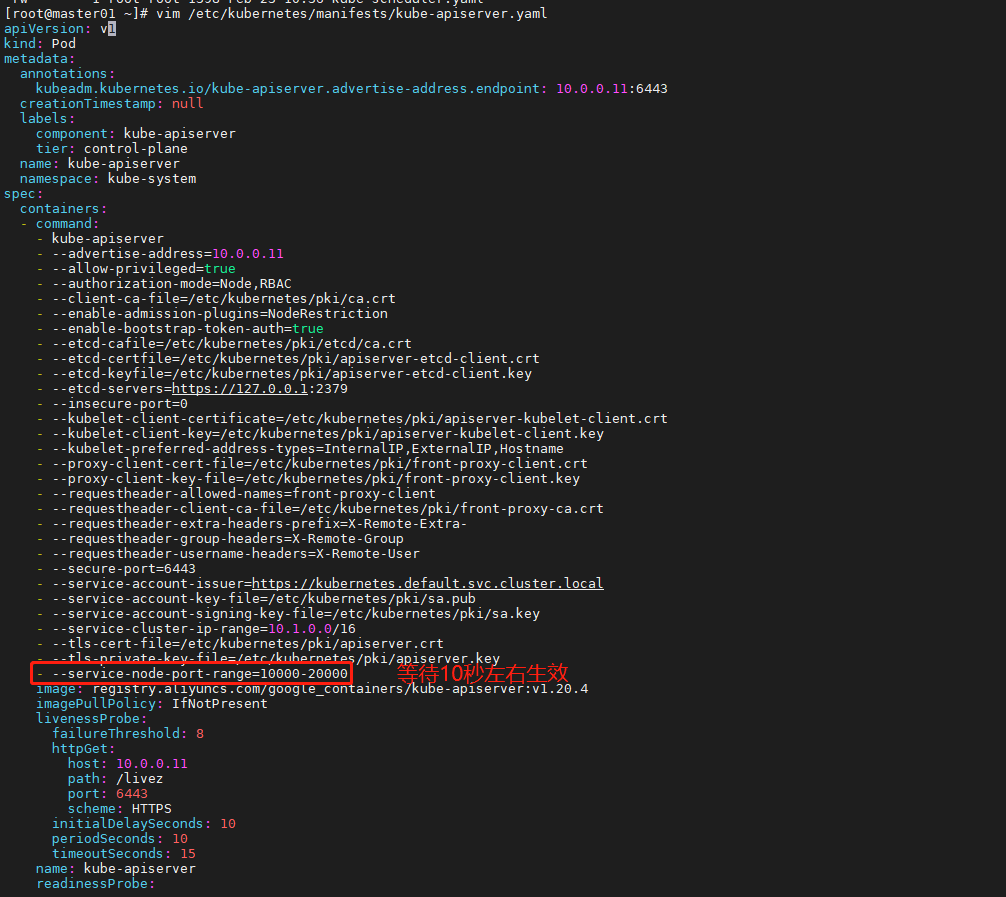

8. Changing the default port range

The default NodePort range in kubernetes is 30000-32767.

[root@master01 ~]# vim /etc/kubernetes/manifests/kube-apiserver.yaml

... (some content omitted)

- --service-node-port-range=1000-50000 # add this line

... (some content omitted)
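
Before assigning a fixed nodePort to a Service, it can be handy to check that the port falls inside the configured range. This is only an illustrative helper and not part of kubernetes; it expects the range in the same LOW-HIGH form as the flag.

```shell
# Return success if a port lies inside a LOW-HIGH range (both ends inclusive).
# $1: port number, $2: range such as 1000-50000
in_node_port_range() {
    local port="$1" range="$2" low high
    low=${range%-*}     # text before the dash
    high=${range#*-}    # text after the dash
    [ "$port" -ge "$low" ] && [ "$port" -le "$high" ]
}

# Example:
#   in_node_port_range 8080 1000-50000 && echo "8080 can be used as a nodePort"
```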