Reference: https://www.cnblogs.com/jiangxinyang/p/10210813.html

Code source: https://github.com/jiangxinyang227/textClassifier

1. Parameter configuration

```python
import os
import csv
import time
import datetime
import random
import json
import warnings
from collections import Counter
from math import sqrt

import gensim
import pandas as pd
import numpy as np
import tensorflow as tf  # TensorFlow 1.x
from sklearn.metrics import roc_auc_score, accuracy_score, precision_score, recall_score

warnings.filterwarnings("ignore")


# Configuration
class TrainingConfig(object):
    epoches = 10
    evaluateEvery = 100
    checkpointEvery = 100
    learningRate = 0.001


class ModelConfig(object):
    embeddingSize = 200

    # Number of kernels in the inner 1-D convolution. The outer convolution's kernel count must equal the
    # model width (embeddingSize + sequenceLength here, because one-hot positions are concatenated) so that
    # every layer's output has the same dimension as its input.
    filters = 128
    numHeads = 8  # number of attention heads
    numBlocks = 1  # number of transformer blocks
    epsilon = 1e-8  # small constant added to the variance in LayerNorm
    keepProp = 0.9  # keep probability of the dropout inside multi-head attention
    dropoutKeepProb = 0.5  # keep probability of the dropout before the fully connected layer
    l2RegLambda = 0.0


class Config(object):
    sequenceLength = 200  # roughly the mean length of all sequences
    batchSize = 128

    dataSource = "../data/preProcess/labeledTrain.csv"

    stopWordSource = "../data/english"

    numClasses = 1  # 1 for binary classification, otherwise the number of classes

    rate = 0.8  # fraction of the data used for training

    training = TrainingConfig()

    model = ModelConfig()


# Instantiate the configuration
config = Config()
```
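With numClasses = 1 the model produces a single logit per review: the loss is computed with tf.nn.sigmoid_cross_entropy_with_logits and the prediction is 1 whenever the logit is >= 0. The NumPy sketch below reproduces that computation using the numerically stable formula given in the TensorFlow documentation; the function names are illustrative, not part of the original code.

```python
import numpy as np

def sigmoid_cross_entropy_with_logits(logits, labels):
    """Element-wise loss in the stable form max(x, 0) - x * z + log(1 + exp(-|x|))."""
    x = np.asarray(logits, dtype=np.float64)
    z = np.asarray(labels, dtype=np.float64)
    return np.maximum(x, 0) - x * z + np.log1p(np.exp(-np.abs(x)))

def binary_predictions(logits):
    """logit >= 0 is the same as sigmoid(logit) >= 0.5, so predict class 1."""
    return (np.asarray(logits) >= 0.0).astype(np.float32)

logits = np.array([[2.0], [-1.0], [0.0]])    # shape [batch, numClasses] with numClasses = 1
labels = np.array([1, 0, 1]).reshape(-1, 1)  # reshaped to [batch, 1], like inputY in the model
loss = sigmoid_cross_entropy_with_logits(logits, labels)
print(loss.mean())
print(binary_predictions(logits).ravel())    # [1. 0. 1.]
```

The stable form avoids overflow in exp() for large negative logits, which a direct -z*log(sigmoid(x)) - (1-z)*log(1-sigmoid(x)) would hit.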

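2. Generating the training data

1) Load the data, split each sentence into words, and remove low-frequency words and stop words.

2) Map words to indices: build a word-to-index table and save it as JSON so it can be reused at inference time. (Some words may be missing from the pre-trained word2vec vectors; such words are represented by UNK.)

3) Read the word vectors from the pre-trained word2vec model and use them to initialize the model's embedding matrix.

4) Split the dataset into a training set and an evaluation set.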

```python
# Data preprocessing: builds the training and evaluation sets
class Dataset(object):
    def __init__(self, config):
        self.config = config
        self._dataSource = config.dataSource
        self._stopWordSource = config.stopWordSource

        self._sequenceLength = config.sequenceLength  # every input is cut or padded to this fixed length
        self._embeddingSize = config.model.embeddingSize
        self._batchSize = config.batchSize
        self._rate = config.rate

        self.stopWordDict = {}

        self.trainReviews = []
        self.trainLabels = []

        self.evalReviews = []
        self.evalLabels = []

        self.wordEmbedding = None

        self.labelList = []

    def _readData(self, filePath):
        """Read the dataset from a CSV file."""
        df = pd.read_csv(filePath)

        if self.config.numClasses == 1:
            labels = df["sentiment"].tolist()
        elif self.config.numClasses > 1:
            labels = df["rate"].tolist()

        review = df["review"].tolist()
        reviews = [line.strip().split() for line in review]

        return reviews, labels

    def _labelToIndex(self, labels, label2idx):
        """Convert labels to indices."""
        labelIds = [label2idx[label] for label in labels]
        return labelIds

    def _wordToIndex(self, reviews, word2idx):
        """Convert words to indices; words not in the vocabulary map to UNK."""
        reviewIds = [[word2idx.get(item, word2idx["UNK"]) for item in review] for review in reviews]
        return reviewIds

    def _genTrainEvalData(self, x, y, word2idx, rate):
        """Build the training and evaluation sets."""
        reviews = []
        for review in x:
            if len(review) >= self._sequenceLength:
                reviews.append(review[:self._sequenceLength])
            else:
                reviews.append(review + [word2idx["PAD"]] * (self._sequenceLength - len(review)))

        trainIndex = int(len(x) * rate)

        trainReviews = np.asarray(reviews[:trainIndex], dtype="int64")
        trainLabels = np.array(y[:trainIndex], dtype="float32")

        evalReviews = np.asarray(reviews[trainIndex:], dtype="int64")
        evalLabels = np.array(y[trainIndex:], dtype="float32")

        return trainReviews, trainLabels, evalReviews, evalLabels

    def _genVocabulary(self, reviews, labels):
        """Build the embedding matrix and the word-to-index mapping (the full dataset can be used)."""
        allWords = [word for review in reviews for word in review]

        # Remove stop words
        subWords = [word for word in allWords if word not in self.stopWordDict]

        wordCount = Counter(subWords)  # word frequencies
        sortWordCount = sorted(wordCount.items(), key=lambda x: x[1], reverse=True)

        # Remove low-frequency words
        words = [item[0] for item in sortWordCount if item[1] >= 5]

        vocab, wordEmbedding = self._getWordEmbedding(words)
        self.wordEmbedding = wordEmbedding

        word2idx = dict(zip(vocab, list(range(len(vocab)))))

        uniqueLabel = list(set(labels))
        label2idx = dict(zip(uniqueLabel, list(range(len(uniqueLabel)))))
        self.labelList = list(range(len(uniqueLabel)))

        # Save the mappings as JSON so that inference can load them directly
        os.makedirs("../data/wordJson", exist_ok=True)
        with open("../data/wordJson/word2idx.json", "w", encoding="utf-8") as f:
            json.dump(word2idx, f)

        with open("../data/wordJson/label2idx.json", "w", encoding="utf-8") as f:
            json.dump(label2idx, f)

        return word2idx, label2idx

    def _getWordEmbedding(self, words):
        """Look up the pre-trained word2vec vector of every word in our vocabulary."""
        wordVec = gensim.models.KeyedVectors.load_word2vec_format("../word2vec/word2Vec.bin", binary=True)
        vocab = []
        wordEmbedding = []

        # Add "PAD" (all-zero vector) and "UNK" (random vector)
        vocab.append("PAD")
        vocab.append("UNK")
        wordEmbedding.append(np.zeros(self._embeddingSize))
        wordEmbedding.append(np.random.randn(self._embeddingSize))

        for word in words:
            try:
                # Index KeyedVectors directly; wordVec.wv[...] relied on a deprecated alias
                vector = wordVec[word]
                vocab.append(word)
                wordEmbedding.append(vector)
            except KeyError:
                print(word + " is not in the word2vec vocabulary")

        return vocab, np.array(wordEmbedding)

    def _readStopWord(self, stopWordPath):
        """Read the stop words."""
        with open(stopWordPath, "r", encoding="utf-8") as f:
            stopWords = f.read()
            stopWordList = stopWords.splitlines()
            # Store the stop words as dict keys so that lookups are fast
            self.stopWordDict = dict(zip(stopWordList, list(range(len(stopWordList)))))

    def dataGen(self):
        """Initialize the training and evaluation sets."""
        # Load the stop words
        self._readStopWord(self._stopWordSource)

        # Load the dataset
        reviews, labels = self._readData(self._dataSource)

        # Build the word-to-index mapping and the embedding matrix
        word2idx, label2idx = self._genVocabulary(reviews, labels)

        # Convert labels and sentences to indices
        labelIds = self._labelToIndex(labels, label2idx)
        reviewIds = self._wordToIndex(reviews, word2idx)

        # Build the training and evaluation sets
        trainReviews, trainLabels, evalReviews, evalLabels = self._genTrainEvalData(reviewIds, labelIds, word2idx,
                                                                                    self._rate)
        self.trainReviews = trainReviews
        self.trainLabels = trainLabels

        self.evalReviews = evalReviews
        self.evalLabels = evalLabels


data = Dataset(config)
data.dataGen()
```

Let's go through it step by step:

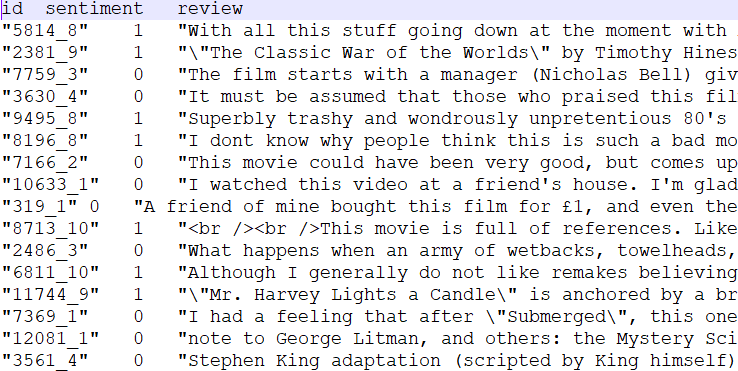

Part of the data in labeledTrain.csv:

The first column is the id, the second the label, and the third the review.

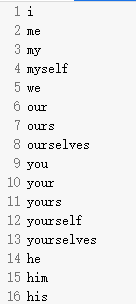

Part of the stop-word file english:

Part of what _readData(filePath) returns, a tokenized review (a list of words) and its label:

['with', 'all', 'this', 'stuff', 'going', 'down', 'at', 'the', 'moment', 'with', 'mj', 'ive', 'started', 'listening', 'to', 'his', 'music', 'watching', 'the', 'odd', 'documentary', 'here', 'and', 'there', 'watched', 'the', 'wiz', 'and', 'watched', 'moonwalker', 'again', 'maybe', 'i', 'just', 'want', 'to', 'get', 'a', 'certain', 'insight', 'into', 'this', 'guy', 'who', 'i', 'thought', 'was', 'really', 'cool', 'in', 'the', 'eighties', 'just', 'to', 'maybe', 'make', 'up', 'my', 'mind', 'whether', 'he', 'is', 'guilty', 'or', 'innocent', 'moonwalker', 'is', 'part', 'biography', 'part', 'feature', 'film', 'which', 'i', 'remember', 'going', 'to', 'see', 'at', 'the', 'cinema', 'when', 'it', 'was', 'originally', 'released', 'some', 'of', 'it', 'has', 'subtle', 'messages', 'about', 'mjs', 'feeling', 'towards', 'the', 'press', 'and', 'also', 'the', 'obvious', 'message', 'of', 'drugs', 'are', 'bad', 'mkayvisually', 'impressive', 'but', 'of', 'course', 'this', 'is', 'all', 'about', 'michael', 'jackson', 'so', 'unless', 'you', 'remotely', 'like', 'mj', 'in', 'anyway', 'then', 'you', 'are', 'going', 'to', 'hate', 'this', 'and', 'find', 'it', 'boring', 'some', 'may', 'call', 'mj', 'an', 'egotist', 'for', 'consenting', 'to', 'the', 'making', 'of', 'this', 'movie', 'but', 'mj', 'and', 'most', 'of', 'his', 'fans', 'would', 'say', 'that', 'he', 'made', 'it', 'for', 'the', 'fans', 'which', 'if', 'true', 'is', 'really', 'nice', 'of', 'himthe', 'actual', 'feature', 'film', 'bit', 'when', 'it', 'finally', 'starts', 'is', 'only', 'on', 'for', '20', 'minutes', 'or', 'so', 'excluding', 'the', 'smooth', 'criminal', 'sequence', 'and', 'joe', 'pesci', 'is', 'convincing', 'as', 'a', 'psychopathic', 'all', 'powerful', 'drug', 'lord', 'why', 'he', 'wants', 'mj', 'dead', 'so', 'bad', 'is', 'beyond', 'me', 'because', 'mj', 'overheard', 'his', 'plans', 'nah', 'joe', 'pescis', 'character', 'ranted', 'that', 'he', 'wanted', 'people', 'to', 'know', 'it', 'is', 'he', 'who', 'is', 'supplying', 'drugs', 
'etc', 'so', 'i', 'dunno', 'maybe', 'he', 'just', 'hates', 'mjs', 'musiclots', 'of', 'cool', 'things', 'in', 'this', 'like', 'mj', 'turning', 'into', 'a', 'car', 'and', 'a', 'robot', 'and', 'the', 'whole', 'speed', 'demon', 'sequence', 'also', 'the', 'director', 'must', 'have', 'had', 'the', 'patience', 'of', 'a', 'saint', 'when', 'it', 'came', 'to', 'filming', 'the', 'kiddy', 'bad', 'sequence', 'as', 'usually', 'directors', 'hate', 'working', 'with', 'one', 'kid', 'let', 'alone', 'a', 'whole', 'bunch', 'of', 'them', 'performing', 'a', 'complex', 'dance', 'scenebottom', 'line', 'this', 'movie', 'is', 'for', 'people', 'who', 'like', 'mj', 'on', 'one', 'level', 'or', 'another', 'which', 'i', 'think', 'is', 'most', 'people', 'if', 'not', 'then', 'stay', 'away', 'it', 'does', 'try', 'and', 'give', 'off', 'a', 'wholesome', 'message', 'and', 'ironically', 'mjs', 'bestest', 'buddy', 'in', 'this', 'movie', 'is', 'a', 'girl!', 'michael', 'jackson', 'is', 'truly', 'one', 'of', 'the', 'most', 'talented', 'people', 'ever', 'to', 'grace', 'this', 'planet', 'but', 'is', 'he', 'guilty', 'well', 'with', 'all', 'the', 'attention', 'ive', 'gave', 'this', 'subjecthmmm', 'well', 'i', 'dont', 'know', 'because', 'people', 'can', 'be', 'different', 'behind', 'closed', 'doors', 'i', 'know', 'this', 'for', 'a', 'fact', 'he', 'is', 'either', 'an', 'extremely', 'nice', 'but', 'stupid', 'guy', 'or', 'one', 'of', 'the', 'most', 'sickest', 'liars', 'i', 'hope', 'he', 'is', 'not', 'the', 'latter'] 1
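_readData keeps the sentiment column for binary classification (the rate column for multi-class) and splits each review on whitespace. Below is a dependency-free sketch of the same logic; it swaps pandas for the standard csv module and reads a made-up two-row sample in place of labeledTrain.csv (the column names follow the code, the rows are invented for illustration).

```python
import csv
import io

# Hypothetical two-row sample with the same columns as labeledTrain.csv
sample = io.StringIO(
    "id,sentiment,review\n"
    '"1",1,"with all this stuff going down at the moment"\n'
    '"2",0,"the movie was boring and too long"\n'
)

def read_data(file_obj, num_classes=1):
    """Mirror of Dataset._readData: returns (tokenized reviews, labels)."""
    label_column = "sentiment" if num_classes == 1 else "rate"
    reviews, labels = [], []
    for row in csv.DictReader(file_obj):
        labels.append(int(row[label_column]))
        reviews.append(row["review"].strip().split())  # whitespace tokenization
    return reviews, labels

reviews, labels = read_data(sample)
print(reviews[0][:4], labels)  # ['with', 'all', 'this', 'stuff'] [1, 0]
```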

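The _genVocabulary(self, reviews, labels) method:

It takes the list of tokenized reviews and the label of each review.

1) Collect all words from all reviews.

2) Remove stop words.

3) Remove low-frequency words (those occurring fewer than 5 times).

4) Call _getWordEmbedding(words) with the filtered word list:

- The resulting vocab and wordEmbedding have shapes (31983,) and (31983, 200).

5) word2idx maps every word to an integer index:

{'PAD': 0, 'UNK': 1, 'movie': 2, 'film': 3,... Note that padding is represented by 'PAD' and words that are not in the vocabulary by 'UNK'.

6) label2idx likewise maps each label to an integer:

{0: 0, 1: 1}. The list of label indices is also stored in self.labelList.

7) word2idx and label2idx are saved to JSON files.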
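The vocabulary steps above can be sketched in plain Python. This is a simplified stand-in, not the original method: instead of loading word2Vec.bin through gensim it takes a hypothetical pretrained dict from word to vector, and the tiny corpus, the stop-word set and min_count=2 are made up for the example (the original keeps words occurring at least 5 times).

```python
from collections import Counter
import numpy as np

def build_vocabulary(reviews, labels, stop_words, pretrained, embedding_size, min_count=5):
    """Simplified _genVocabulary + _getWordEmbedding; `pretrained` is a dict standing in for word2vec."""
    counts = Counter(w for review in reviews for w in review if w not in stop_words)
    # Most frequent first; drop words below min_count
    words = [w for w, c in counts.most_common() if c >= min_count]

    vocab = ["PAD", "UNK"]
    embedding = [np.zeros(embedding_size), np.random.randn(embedding_size)]  # PAD = zeros, UNK = random
    for w in words:
        if w in pretrained:  # words without a pretrained vector are left out and later map to UNK
            vocab.append(w)
            embedding.append(pretrained[w])
    word2idx = {w: i for i, w in enumerate(vocab)}

    # sorted() gives a deterministic mapping; the original uses list(set(labels))
    label2idx = {label: i for i, label in enumerate(sorted(set(labels)))}
    return word2idx, label2idx, np.array(embedding)

reviews = [["a", "great", "movie"], ["a", "bad", "movie"], ["great", "great", "film"]]
labels = [1, 0, 1]
pretrained = {"movie": np.ones(4), "great": np.full(4, 0.5)}  # no vectors for "bad" and "film"
word2idx, label2idx, emb = build_vocabulary(reviews, labels, {"a"}, pretrained, embedding_size=4, min_count=2)
print(word2idx)   # {'PAD': 0, 'UNK': 1, 'great': 2, 'movie': 3}
print(emb.shape)  # (4, 4)
```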

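The _labelToIndex(labels, label2idx) and _wordToIndex(reviews, word2idx) methods:

Replace every word in reviews and every label in labels with its integer index.

_genTrainEvalData(x, y, word2idx, rate): cut or pad every review to sequenceLength, then split the data into a training set and an evaluation (test) set.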
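A compact sketch of these two steps, with a made-up four-word vocabulary and sequence_length=3 so the truncation, UNK lookup and padding are all visible; the function names are illustrative.

```python
import numpy as np

def to_padded_ids(reviews, word2idx, sequence_length):
    """_wordToIndex followed by the truncate/pad step of _genTrainEvalData."""
    rows = []
    for review in reviews:
        ids = [word2idx.get(w, word2idx["UNK"]) for w in review]  # unknown words -> UNK
        ids = ids[:sequence_length]                                 # cut long reviews
        ids += [word2idx["PAD"]] * (sequence_length - len(ids))    # pad short ones
        rows.append(ids)
    return np.asarray(rows, dtype="int64")

def split_train_eval(x, y, rate):
    """The first `rate` fraction becomes the training set, the rest the evaluation set (no shuffling)."""
    train_index = int(len(x) * rate)
    y = np.asarray(y, dtype="float32")
    return x[:train_index], y[:train_index], x[train_index:], y[train_index:]

word2idx = {"PAD": 0, "UNK": 1, "great": 2, "movie": 3}
x = to_padded_ids([["great", "plot", "movie", "ever"], ["movie"]], word2idx, sequence_length=3)
print(x.tolist())  # [[2, 1, 3], [3, 0, 0]]
trainX, trainY, evalX, evalY = split_train_eval(x, [1, 0], rate=0.5)
print(trainX.shape, evalX.shape)  # (1, 3) (1, 3)
```

Because the split simply takes the first 80% of rows, it is worth shuffling the data beforehand if the CSV happens to be ordered by label.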

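3. Generating batches

Batches are fed to the model through a generator, which produces one batch at a time instead of materializing all batches in memory at once. (In this code the full training arrays are already loaded, so the generator only avoids holding every batch copy at the same time.)

```python
# Output batches
def nextBatch(x, y, batchSize):
    """
    Yield shuffled batches from a generator
    """
    perm = np.arange(len(x))
    np.random.shuffle(perm)
    x = x[perm]
    y = y[perm]

    numBatches = len(x) // batchSize  # the last incomplete batch is dropped

    for i in range(numBatches):
        start = i * batchSize
        end = start + batchSize
        batchX = np.array(x[start: end], dtype="int64")
        batchY = np.array(y[start: end], dtype="float32")

        yield batchX, batchY
```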
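A quick check of the generator's behavior, using a re-declared copy so the snippet runs on its own; the seed argument is an addition for reproducibility. It shows that x and y stay aligned after shuffling and that the last len(x) % batchSize samples of each pass are skipped.

```python
import numpy as np

def next_batch(x, y, batch_size, seed=None):
    """Same logic as nextBatch: shuffle once per call, yield only full batches."""
    perm = np.random.default_rng(seed).permutation(len(x))
    x, y = x[perm], y[perm]
    for i in range(len(x) // batch_size):
        start = i * batch_size
        yield x[start:start + batch_size], y[start:start + batch_size]

x = np.arange(10).reshape(10, 1)   # 10 samples of one "token" each
y = np.arange(10, dtype="float32")  # label i belongs to sample i
batches = list(next_batch(x, y, batch_size=4, seed=0))
print(len(batches))                  # 2: the 10 % 4 = 2 leftover samples are dropped
print([b[0].shape for b in batches])  # [(4, 1), (4, 1)]
```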

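4. Building the Transformer model

Some lessons learned from using the Transformer:

1) Here, a fixed one-hot position embedding worked better than the sine/cosine position embedding proposed in the paper. One possible reason is the added model complexity, which hurts on a small dataset (IMDB training set size: 20,000). Note that the sinusoidal encoding in the paper is itself fixed rather than trained, and so is _positionEmbedding below; the remaining difference is that the one-hot vectors are concatenated to the word vectors, while the sinusoidal ones are added to them.

2) A mask may not be necessary: adding or removing it made essentially no difference to the results. It may help on other tasks or datasets, but the paper does not require a mask in the encoder; it describes masking mainly for the decoder.

3) The number of Transformer layers can be adjusted to the size of the dataset; on a small dataset one layer is basically enough.

4) Add dropout to the sublayers, mainly to the multi-head attention layer. Since the feed-forward layer is implemented with convolutions here, leaving dropout out of it should be fine; if the feed-forward layer is implemented with fully connected layers, add dropout there too. (A convolution with kernel size 1 is equivalent to a fully connected layer applied at every position, so this is a judgment call rather than a rule.)

5) On small datasets the Transformer is not necessarily better than Bi-LSTM + Attention; on IMDB it actually performed worse.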
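To make note 1 concrete: fixedPositionEmbedding gives every sample an identity matrix (a one-hot vector over sequenceLength positions) that is concatenated to the word vectors, so the model width grows from embeddingSize to embeddingSize + sequenceLength (200 + 200 = 400 with the defaults, which 8 heads divide evenly into 50 each). The paper's sinusoidal encoding has width embeddingSize and is added instead. A NumPy sketch of both, with illustrative function names and small sizes:

```python
import numpy as np

def one_hot_positions(batch_size, seq_len):
    """Equivalent to fixedPositionEmbedding: one identity matrix per sample."""
    return np.tile(np.eye(seq_len, dtype="float32"), (batch_size, 1, 1))

def sinusoidal_positions(seq_len, d_model):
    """PE[pos, 2i] = sin(pos / 10000^(2i/d)), PE[pos, 2i+1] = cos(pos / 10000^(2i/d))."""
    pos = np.arange(seq_len)[:, None]
    i = np.arange(d_model)[None, :]
    angles = pos / np.power(10000, (i - i % 2) / d_model)
    pe = np.zeros((seq_len, d_model))
    pe[:, 0::2] = np.sin(angles[:, 0::2])
    pe[:, 1::2] = np.cos(angles[:, 1::2])
    return pe

batch, seq_len, emb = 2, 5, 8
words = np.random.randn(batch, seq_len, emb)
concat = np.concatenate([words, one_hot_positions(batch, seq_len)], axis=-1)  # this article
added = words + sinusoidal_positions(seq_len, emb)                             # the paper
print(concat.shape, added.shape)  # (2, 5, 13) (2, 5, 8)
```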

```python
# Generate the fixed one-hot position embedding
def fixedPositionEmbedding(batchSize, sequenceLen):
    embeddedPosition = []
    for batch in range(batchSize):
        x = []
        for step in range(sequenceLen):
            a = np.zeros(sequenceLen)
            a[step] = 1
            x.append(a)
        embeddedPosition.append(x)

    return np.array(embeddedPosition, dtype="float32")


# Model definition
class Transformer(object):
    """
    Transformer encoder for text classification
    """
    def __init__(self, config, wordEmbedding):

        # Model inputs
        self.inputX = tf.placeholder(tf.int32, [None, config.sequenceLength], name="inputX")
        self.inputY = tf.placeholder(tf.int32, [None], name="inputY")

        self.dropoutKeepProb = tf.placeholder(tf.float32, name="dropoutKeepProb")
        self.embeddedPosition = tf.placeholder(tf.float32, [None, config.sequenceLength, config.sequenceLength],
                                               name="embeddedPosition")

        self.config = config

        # L2 loss
        l2Loss = tf.constant(0.0)

        # Embedding layer. Position vectors can be defined in two ways: pass fixed one-hot vectors and concatenate
        # them to the word vectors (better on this dataset), or follow the paper, which did worse here, possibly
        # because of the added model complexity on a small dataset.
        with tf.name_scope("embedding"):

            # Initialize the embedding matrix with the pre-trained word vectors
            self.W = tf.Variable(tf.cast(wordEmbedding, dtype=tf.float32, name="word2vec"), name="W")
            # Look up the word vectors, shape [batch_size, sequence_length, embedding_size]
            self.embedded = tf.nn.embedding_lookup(self.W, self.inputX)
            # Concatenate the one-hot positions: [batch_size, sequence_length, embedding_size + sequence_length]
            self.embeddedWords = tf.concat([self.embedded, self.embeddedPosition], -1)

        with tf.name_scope("transformer"):
            for i in range(config.model.numBlocks):
                with tf.name_scope("transformer-{}".format(i + 1)):

                    # Shape [batch_size, sequence_length, embedding_size + sequence_length]
                    multiHeadAtt = self._multiheadAttention(rawKeys=self.inputX, queries=self.embeddedWords,
                                                            keys=self.embeddedWords)
                    # Shape [batch_size, sequence_length, embedding_size + sequence_length]
                    self.embeddedWords = self._feedForward(multiHeadAtt,
                                                           [config.model.filters,
                                                            config.model.embeddingSize + config.sequenceLength])

            outputs = tf.reshape(self.embeddedWords,
                                 [-1, config.sequenceLength * (config.model.embeddingSize + config.sequenceLength)])

        outputSize = outputs.get_shape()[-1].value

        # Alternative following the paper (sinusoidal position embedding added to the word vectors):
        # with tf.name_scope("wordEmbedding"):
        #     self.W = tf.Variable(tf.cast(wordEmbedding, dtype=tf.float32, name="word2vec"), name="W")
        #     self.wordEmbedded = tf.nn.embedding_lookup(self.W, self.inputX)
        #
        # with tf.name_scope("positionEmbedding"):
        #     print(self.wordEmbedded)
        #     self.positionEmbedded = self._positionEmbedding()
        #
        # self.embeddedWords = self.wordEmbedded + self.positionEmbedded
        #
        # with tf.name_scope("transformer"):
        #     for i in range(config.model.numBlocks):
        #         with tf.name_scope("transformer-{}".format(i + 1)):
        #
        #             # Shape [batch_size, sequence_length, embedding_size]
        #             # rawKeys must be the raw token ids (inputX), since the mask compares them with 0
        #             multiHeadAtt = self._multiheadAttention(rawKeys=self.inputX, queries=self.embeddedWords,
        #                                                     keys=self.embeddedWords)
        #             # Shape [batch_size, sequence_length, embedding_size]
        #             self.embeddedWords = self._feedForward(multiHeadAtt, [config.model.filters, config.model.embeddingSize])
        #
        #     outputs = tf.reshape(self.embeddedWords, [-1, config.sequenceLength * (config.model.embeddingSize)])
        #
        # outputSize = outputs.get_shape()[-1].value

        with tf.name_scope("dropout"):
            outputs = tf.nn.dropout(outputs, keep_prob=self.dropoutKeepProb)

        # Fully connected output layer
        with tf.name_scope("output"):
            outputW = tf.get_variable(
                "outputW",
                shape=[outputSize, config.numClasses],
                initializer=tf.contrib.layers.xavier_initializer())

            outputB = tf.Variable(tf.constant(0.1, shape=[config.numClasses]), name="outputB")
            l2Loss += tf.nn.l2_loss(outputW)
            l2Loss += tf.nn.l2_loss(outputB)
            self.logits = tf.nn.xw_plus_b(outputs, outputW, outputB, name="logits")

            if config.numClasses == 1:
                self.predictions = tf.cast(tf.greater_equal(self.logits, 0.0), tf.float32, name="predictions")
            elif config.numClasses > 1:
                self.predictions = tf.argmax(self.logits, axis=-1, name="predictions")

        # Loss: sigmoid cross-entropy (binary) or softmax cross-entropy (multi-class)
        with tf.name_scope("loss"):

            if config.numClasses == 1:
                losses = tf.nn.sigmoid_cross_entropy_with_logits(
                    logits=self.logits,
                    labels=tf.cast(tf.reshape(self.inputY, [-1, 1]), dtype=tf.float32))
            elif config.numClasses > 1:
                losses = tf.nn.sparse_softmax_cross_entropy_with_logits(logits=self.logits, labels=self.inputY)

            self.loss = tf.reduce_mean(losses) + config.model.l2RegLambda * l2Loss

    def _layerNormalization(self, inputs, scope="layerNorm"):
        # LayerNorm differs from batch normalization (BN)
        epsilon = self.config.model.epsilon

        inputsShape = inputs.get_shape()  # [batch_size, sequence_length, embedding_size]

        paramsShape = inputsShape[-1:]

        # LayerNorm computes the mean and variance over the last dimension of each position,
        # whereas BN normalizes each feature over the batch.
        # mean and variance have shape [batch_size, sequence_len, 1]
        mean, variance = tf.nn.moments(inputs, [-1], keep_dims=True)

        beta = tf.Variable(tf.zeros(paramsShape))

        gamma = tf.Variable(tf.ones(paramsShape))
        normalized = (inputs - mean) / ((variance + epsilon) ** .5)

        outputs = gamma * normalized + beta

        return outputs

    def _multiheadAttention(self, rawKeys, queries, keys, numUnits=None, causality=False, scope="multiheadAttention"):
        # rawKeys (the token ids) are only used to build the mask: keys already include the position embedding,
        # so padded positions are no longer 0 there

        numHeads = self.config.model.numHeads
        keepProp = self.config.model.keepProp

        if numUnits is None:  # if not given, use the last dimension of the input (the model width)
            numUnits = queries.get_shape().as_list()[-1]

        # tf.layers.dense projects a multi-dimensional tensor along its last axis. This is the per-head projection
        # step of Multi-Head Attention in the paper; here we project first and split afterwards, which is
        # equivalent. The paper uses linear projections; this implementation adds a ReLU.
        # Q, K and V have shape [batch_size, sequence_length, embedding_size]
        Q = tf.layers.dense(queries, numUnits, activation=tf.nn.relu)
        K = tf.layers.dense(keys, numUnits, activation=tf.nn.relu)
        V = tf.layers.dense(keys, numUnits, activation=tf.nn.relu)

        # Split the last dimension into numHeads pieces and stack them along the first dimension
        # Q_, K_ and V_ have shape [batch_size * numHeads, sequence_length, embedding_size / numHeads]
        Q_ = tf.concat(tf.split(Q, numHeads, axis=-1), axis=0)
        K_ = tf.concat(tf.split(K, numHeads, axis=-1), axis=0)
        V_ = tf.concat(tf.split(V, numHeads, axis=-1), axis=0)

        # Dot products of queries and keys, shape [batch_size * numHeads, queries_len, key_len]
        similary = tf.matmul(Q_, tf.transpose(K_, [0, 2, 1]))

        # Scale by the square root of the per-head dimension
        scaledSimilary = similary / (K_.get_shape().as_list()[-1] ** 0.5)

        # Padding tokens carry no information and should get zero weight. If padded inputs were all zeros the
        # weights would be zero anyway, but after the position vectors are added they are no longer zero, so the
        # mask must be built from the raw ids. Queries also contain padding, but the result only depends on the
        # inputs, and in self-attention queries = keys, so masking the keys is enough.
        # More on key masking: https://github.com/Kyubyong/transformer/issues/3

        # Tile the raw ids: [batch_size * numHeads, keys_len], where keys_len is the key sequence length
        keyMasks = tf.tile(rawKeys, [numHeads, 1])

        # Add a dimension and tile again: [batch_size * numHeads, queries_len, keys_len]
        keyMasks = tf.tile(tf.expand_dims(keyMasks, 1), [1, tf.shape(queries)[1], 1])

        # A tensor shaped like scaledSimilary filled with a very large negative value (stand-in for -inf)
        paddings = tf.ones_like(scaledSimilary) * (-2 ** (32 + 1))

        # tf.where(condition, x, y) takes x where condition is True and y elsewhere; all three share one shape.
        # Here, scores at positions where keyMasks is 0 (PAD) are replaced by paddings.
        maskedSimilary = tf.where(tf.equal(keyMasks, 0), paddings, scaledSimilary)  # [batch_size * numHeads, queries_len, key_len]

        # Causal masking (attend only to earlier positions) is used in the Transformer decoder, a generative model
        # for language generation. Text classification only needs the encoder.
        if causality:
            diagVals = tf.ones_like(maskedSimilary[0, :, :])  # [queries_len, keys_len]
            tril = tf.linalg.band_part(diagVals, -1, 0)  # lower-triangular [queries_len, keys_len]
            masks = tf.tile(tf.expand_dims(tril, 0), [tf.shape(maskedSimilary)[0], 1, 1])  # [batch_size * numHeads, queries_len, keys_len]

            paddings = tf.ones_like(masks) * (-2 ** (32 + 1))
            maskedSimilary = tf.where(tf.equal(masks, 0), paddings, maskedSimilary)  # [batch_size * numHeads, queries_len, keys_len]

        # Attention weights via softmax, shape [batch_size * numHeads, queries_len, keys_len]
        weights = tf.nn.softmax(maskedSimilary)

        # Weighted sum of the values, shape [batch_size * numHeads, sequence_length, embedding_size / numHeads]
        outputs = tf.matmul(weights, V_)

        # Merge the heads back into [batch_size, sequence_length, embedding_size]
        outputs = tf.concat(tf.split(outputs, numHeads, axis=0), axis=2)

        # keepProp is a Python constant, so this dropout is also active when evaluating
        outputs = tf.nn.dropout(outputs, keep_prob=keepProp)

        # Residual connection around the sublayer: H(x) = F(x) + x
        outputs += queries
        # Layer normalization
        outputs = self._layerNormalization(outputs)
        return outputs

    def _feedForward(self, inputs, filters, scope="multiheadAttention"):
        # The position-wise feed-forward network is implemented with 1-D convolutions
        # Inner layer
        params = {"inputs": inputs, "filters": filters[0], "kernel_size": 1,
                  "activation": tf.nn.relu, "use_bias": True}
        outputs = tf.layers.conv1d(**params)

        # Outer layer
        params = {"inputs": outputs, "filters": filters[1], "kernel_size": 1,
                  "activation": None, "use_bias": True}

        # A 1-D convolution: the kernel is still two-dimensional, but only its height (kernel_size) is given;
        # its width always equals the input's last dimension
        # Shape [batch_size, sequence_length, embedding_size]
        outputs = tf.layers.conv1d(**params)

        # Residual connection
        outputs += inputs

        # Layer normalization
        outputs = self._layerNormalization(outputs)

        return outputs

    def _positionEmbedding(self, scope="positionEmbedding"):
        # Sinusoidal position embedding from the paper (fixed, not trainable)
        batchSize = self.config.batchSize
        sequenceLen = self.config.sequenceLength
        embeddingSize = self.config.model.embeddingSize

        # Position indices, tiled over every sample in the batch
        positionIndex = tf.tile(tf.expand_dims(tf.range(sequenceLen), 0), [batchSize, 1])

        # First compute pos / 10000^(2i / embeddingSize) for every position and dimension
        positionEmbedding = np.array([[pos / np.power(10000, (i - i % 2) / embeddingSize) for i in range(embeddingSize)]
                                      for pos in range(sequenceLen)])

        # Then apply sin to the even dimensions and cos to the odd ones
        positionEmbedding[:, 0::2] = np.sin(positionEmbedding[:, 0::2])
        positionEmbedding[:, 1::2] = np.cos(positionEmbedding[:, 1::2])

        # Convert positionEmbedding to a tensor
        positionEmbedding_ = tf.cast(positionEmbedding, dtype=tf.float32)

        # Result has shape [batchSize, sequenceLen, embeddingSize]
        positionEmbedded = tf.nn.embedding_lookup(positionEmbedding_, positionIndex)

        return positionEmbedded
```
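The core of _multiheadAttention can be followed step by step in NumPy: project, split the last axis into heads and stack them on the batch axis, scale the dot products by the square root of the per-head size, replace the scores of PAD keys (token id 0) with a large negative number before the softmax, then merge the heads. This is a sketch under simplifying assumptions: random linear projection matrices stand in for the tf.layers.dense layers, and the ReLU, dropout, residual connection and layer normalization are left out.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract the row max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def multihead_attention(token_ids, inputs, num_heads, rng):
    """token_ids: [B, T] raw ids with 0 = PAD; inputs: [B, T, D] embedded inputs (queries = keys)."""
    D = inputs.shape[-1]
    # Random linear projections stand in for the tf.layers.dense layers
    Wq, Wk, Wv = (rng.standard_normal((D, D)) / np.sqrt(D) for _ in range(3))
    Q, K, V = inputs @ Wq, inputs @ Wk, inputs @ Wv              # [B, T, D]

    def split_heads(t):
        # [B, T, D] -> [B * H, T, D / H], heads stacked on axis 0 as in the TF code
        return np.concatenate(np.split(t, num_heads, axis=-1), axis=0)

    Q_, K_, V_ = split_heads(Q), split_heads(K), split_heads(V)
    scores = Q_ @ K_.transpose(0, 2, 1) / np.sqrt(K_.shape[-1])  # [B * H, T, T]

    # Key mask from the raw ids, tiled like tf.tile(rawKeys, [numHeads, 1]);
    # the extra axis broadcasts over the query positions
    key_mask = np.tile(token_ids, (num_heads, 1))[:, None, :]     # [B * H, 1, T]
    scores = np.where(key_mask == 0, -2.0 ** 33, scores)
    weights = softmax(scores)                                     # each row sums to 1

    out = weights @ V_                                            # [B * H, T, D / H]
    merged = np.concatenate(np.split(out, num_heads, axis=0), axis=-1)  # back to [B, T, D]
    return merged, weights

rng = np.random.default_rng(0)
ids = np.array([[5, 7, 9, 0, 0],
                [3, 4, 0, 0, 0]])  # two right-padded sequences (PAD = 0)
x = rng.standard_normal((2, 5, 8))
out, w = multihead_attention(ids, x, num_heads=2, rng=rng)
print(out.shape, w.shape)  # (2, 5, 8) (4, 5, 5)
print(w[1, 0, 2:])         # [0. 0. 0.]: no weight on the PAD keys of sequence 2
```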

5. Functions for computing the metrics

```python
"""
Performance metrics
"""


def mean(item: list) -> float:
    """
    Mean of the elements of a list
    :param item: list
    :return: the mean (0 for an empty list)
    """
    res = sum(item) / len(item) if len(item) > 0 else 0
    return res


def accuracy(pred_y, true_y):
    """
    Accuracy for binary and multi-class classification
    :param pred_y: predictions
    :param true_y: ground truth
    :return:
    """
    # Binary predictions come back with shape [batch, 1]; flatten them
    if isinstance(pred_y[0], (list, np.ndarray)):
        pred_y = [item[0] for item in pred_y]
    corr = 0
    for i in range(len(pred_y)):
        if pred_y[i] == true_y[i]:
            corr += 1
    acc = corr / len(pred_y) if len(pred_y) > 0 else 0
    return acc


def binary_precision(pred_y, true_y, positive=1):
    """
    Precision for binary classification
    :param pred_y: predictions
    :param true_y: ground truth
    :param positive: index of the positive class
    :return:
    """
    corr = 0
    pred_corr = 0
    for i in range(len(pred_y)):
        if pred_y[i] == positive:
            pred_corr += 1
            if pred_y[i] == true_y[i]:
                corr += 1

    prec = corr / pred_corr if pred_corr > 0 else 0
    return prec


def binary_recall(pred_y, true_y, positive=1):
    """
    Recall for binary classification
    :param pred_y: predictions
    :param true_y: ground truth
    :param positive: index of the positive class
    :return:
    """
    corr = 0
    true_corr = 0
    for i in range(len(pred_y)):
        if true_y[i] == positive:
            true_corr += 1
            if pred_y[i] == true_y[i]:
                corr += 1

    rec = corr / true_corr if true_corr > 0 else 0
    return rec


def binary_f_beta(pred_y, true_y, beta=1.0, positive=1):
    """
    F-beta score for binary classification
    :param pred_y: predictions
    :param true_y: ground truth
    :param beta: beta value
    :param positive: index of the positive class
    :return:
    """
    precision = binary_precision(pred_y, true_y, positive)
    recall = binary_recall(pred_y, true_y, positive)
    try:
        f_b = (1 + beta * beta) * precision * recall / (beta * beta * precision + recall)
    except ZeroDivisionError:
        f_b = 0
    return f_b


def multi_precision(pred_y, true_y, labels):
    """
    Macro-averaged precision for multi-class classification
    :param pred_y: predictions
    :param true_y: ground truth
    :param labels: list of labels
    :return:
    """
    if isinstance(pred_y[0], (list, np.ndarray)):
        pred_y = [item[0] for item in pred_y]

    precisions = [binary_precision(pred_y, true_y, label) for label in labels]
    prec = mean(precisions)
    return prec


def multi_recall(pred_y, true_y, labels):
    """
    Macro-averaged recall for multi-class classification
    :param pred_y: predictions
    :param true_y: ground truth
    :param labels: list of labels
    :return:
    """
    if isinstance(pred_y[0], (list, np.ndarray)):
        pred_y = [item[0] for item in pred_y]

    recalls = [binary_recall(pred_y, true_y, label) for label in labels]
    rec = mean(recalls)
    return rec


def multi_f_beta(pred_y, true_y, labels, beta=1.0):
    """
    Macro-averaged F-beta for multi-class classification
    :param pred_y: predictions
    :param true_y: ground truth
    :param labels: list of labels
    :param beta: beta value
    :return:
    """
    if isinstance(pred_y[0], (list, np.ndarray)):
        pred_y = [item[0] for item in pred_y]

    f_betas = [binary_f_beta(pred_y, true_y, beta, label) for label in labels]
    f_beta = mean(f_betas)
    return f_beta


def get_binary_metrics(pred_y, true_y, f_beta=1.0):
    """
    Metrics for binary classification
    :param pred_y:
    :param true_y:
    :param f_beta:
    :return: (acc, recall, precision, f_beta)
    """
    # Flatten [batch, 1] predictions before the element-wise comparisons
    if isinstance(pred_y[0], (list, np.ndarray)):
        pred_y = [item[0] for item in pred_y]

    acc = accuracy(pred_y, true_y)
    recall = binary_recall(pred_y, true_y)
    precision = binary_precision(pred_y, true_y)
    f_beta = binary_f_beta(pred_y, true_y, f_beta)
    return acc, recall, precision, f_beta


def get_multi_metrics(pred_y, true_y, labels, f_beta=1.0):
    """
    Metrics for multi-class classification
    :param pred_y:
    :param true_y:
    :param labels:
    :param f_beta:
    :return: (acc, recall, precision, f_beta)
    """
    acc = accuracy(pred_y, true_y)
    recall = multi_recall(pred_y, true_y, labels)
    precision = multi_precision(pred_y, true_y, labels)
    f_beta = multi_f_beta(pred_y, true_y, labels, f_beta)
    return acc, recall, precision, f_beta
```
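For a binary task these helpers reduce to the usual definitions: precision = TP / predicted positives, recall = TP / actual positives, and F-beta = (1 + beta^2) * P * R / (beta^2 * P + R). A vectorized NumPy sketch with a hand-checkable example; the function name is illustrative, and predictions of shape [batch, 1] (as returned by the model) are flattened first.

```python
import numpy as np

def binary_metrics(pred_y, true_y, beta=1.0, positive=1):
    """Returns (acc, recall, precision, f_beta), the same order as get_binary_metrics."""
    pred = np.asarray(pred_y).reshape(-1)
    true = np.asarray(true_y).reshape(-1)
    acc = float(np.mean(pred == true)) if pred.size else 0.0
    tp = np.sum((pred == positive) & (true == positive))  # true positives
    pred_pos = np.sum(pred == positive)                    # predicted positives
    true_pos = np.sum(true == positive)                    # actual positives
    precision = tp / pred_pos if pred_pos else 0.0
    recall = tp / true_pos if true_pos else 0.0
    denom = beta ** 2 * precision + recall
    f_beta = (1 + beta ** 2) * precision * recall / denom if denom else 0.0
    return acc, recall, precision, f_beta

# 3 of 4 correct; both predicted positives are right, one actual positive is missed
acc, rec, prec, f1 = binary_metrics(np.array([[1], [1], [0], [0]]), [1, 1, 1, 0])
print(acc, prec)  # 0.75 1.0
```

Here recall is 2/3 and F1 = 2 * 1.0 * (2/3) / (1.0 + 2/3) = 0.8.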

6. Training the model

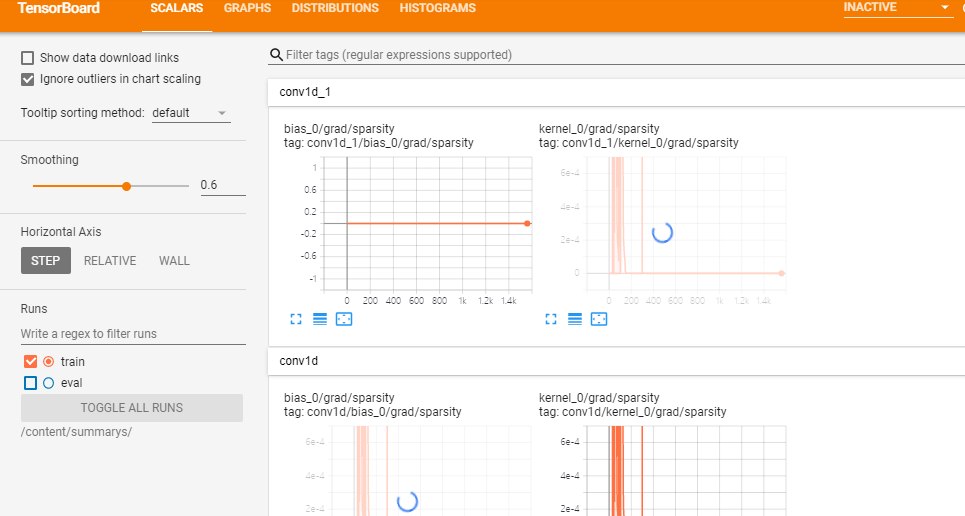

During training we write TensorBoard summaries and save the model in two ways: as checkpoint files and as a SavedModel (pb file).

```python
# Train the model
import shutil

# Training and evaluation sets
trainReviews = data.trainReviews
trainLabels = data.trainLabels
evalReviews = data.evalReviews
evalLabels = data.evalLabels

wordEmbedding = data.wordEmbedding
labelList = data.labelList

embeddedPosition = fixedPositionEmbedding(config.batchSize, config.sequenceLength)

# Define the computation graph
with tf.Graph().as_default():

    session_conf = tf.ConfigProto(allow_soft_placement=True, log_device_placement=False)
    session_conf.gpu_options.allow_growth = True
    session_conf.gpu_options.per_process_gpu_memory_fraction = 0.9  # cap on GPU memory usage

    sess = tf.Session(config=session_conf)

    # Define the session
    with sess.as_default():
        transformer = Transformer(config, wordEmbedding)

        globalStep = tf.Variable(0, name="globalStep", trainable=False)
        # Optimizer with the configured learning rate
        optimizer = tf.train.AdamOptimizer(config.training.learningRate)
        # Compute the gradients as (gradient, variable) pairs
        gradsAndVars = optimizer.compute_gradients(transformer.loss)
        # Apply the gradients to the variables to get the training op
        trainOp = optimizer.apply_gradients(gradsAndVars, global_step=globalStep)

        # Summaries for TensorBoard
        gradSummaries = []
        for g, v in gradsAndVars:
            if g is not None:
                tf.summary.histogram("{}/grad/hist".format(v.name), g)
                tf.summary.scalar("{}/grad/sparsity".format(v.name), tf.nn.zero_fraction(g))

        outDir = os.path.abspath(os.path.join(os.path.curdir, "summarys"))
        print("Writing to {}\n".format(outDir))

        lossSummary = tf.summary.scalar("loss", transformer.loss)
        summaryOp = tf.summary.merge_all()

        trainSummaryDir = os.path.join(outDir, "train")
        trainSummaryWriter = tf.summary.FileWriter(trainSummaryDir, sess.graph)

        evalSummaryDir = os.path.join(outDir, "eval")
        evalSummaryWriter = tf.summary.FileWriter(evalSummaryDir, sess.graph)

        # One way to save the model: checkpoint files
        saver = tf.train.Saver(tf.global_variables(), max_to_keep=5)

        # The other way to save the model: a SavedModel (pb file)
        savedModelPath = "../model/transformer/savedModel"
        if os.path.exists(savedModelPath):
            shutil.rmtree(savedModelPath)  # os.rmdir would fail on a non-empty directory
        builder = tf.saved_model.builder.SavedModelBuilder(savedModelPath)

        # Initialize all variables
        sess.run(tf.global_variables_initializer())

        def trainStep(batchX, batchY):
            """
            Training step
            """
            feed_dict = {
                transformer.inputX: batchX,
                transformer.inputY: batchY,
                transformer.dropoutKeepProb: config.model.dropoutKeepProb,
                transformer.embeddedPosition: embeddedPosition
            }
            _, summary, step, loss, predictions = sess.run(
                [trainOp, summaryOp, globalStep, transformer.loss, transformer.predictions],
                feed_dict)

            if config.numClasses == 1:
                acc, recall, prec, f_beta = get_binary_metrics(pred_y=predictions, true_y=batchY)
            elif config.numClasses > 1:
                acc, recall, prec, f_beta = get_multi_metrics(pred_y=predictions, true_y=batchY,
                                                              labels=labelList)

            trainSummaryWriter.add_summary(summary, step)

            return loss, acc, prec, recall, f_beta

        def devStep(batchX, batchY):
            """
            Evaluation step
            """
            feed_dict = {
                transformer.inputX: batchX,
                transformer.inputY: batchY,
                transformer.dropoutKeepProb: 1.0,
                transformer.embeddedPosition: embeddedPosition
            }
            summary, step, loss, predictions = sess.run(
                [summaryOp, globalStep, transformer.loss, transformer.predictions],
                feed_dict)

            if config.numClasses == 1:
                acc, recall, prec, f_beta = get_binary_metrics(pred_y=predictions, true_y=batchY)
            elif config.numClasses > 1:
                acc, recall, prec, f_beta = get_multi_metrics(pred_y=predictions, true_y=batchY, labels=labelList)

            evalSummaryWriter.add_summary(summary, step)

            return loss, acc, prec, recall, f_beta

        for i in range(config.training.epoches):
            # Train the model
            print("start training model")
            for batchTrain in nextBatch(trainReviews, trainLabels, config.batchSize):
                loss, acc, prec, recall, f_beta = trainStep(batchTrain[0], batchTrain[1])

                currentStep = tf.train.global_step(sess, globalStep)
                print("train: step: {}, loss: {}, acc: {}, recall: {}, precision: {}, f_beta: {}".format(
                    currentStep, loss, acc, recall, prec, f_beta))
                if currentStep % config.training.evaluateEvery == 0:
                    print("\nEvaluation:")

                    losses = []
                    accs = []
                    f_betas = []
                    precisions = []
                    recalls = []

                    for batchEval in nextBatch(evalReviews, evalLabels, config.batchSize):
                        loss, acc, precision, recall, f_beta = devStep(batchEval[0], batchEval[1])
                        losses.append(loss)
                        accs.append(acc)
                        f_betas.append(f_beta)
                        precisions.append(precision)
                        recalls.append(recall)

                    time_str = datetime.datetime.now().isoformat()
                    print("{}, step: {}, loss: {}, acc: {},precision: {}, recall: {}, f_beta: {}".format(
                        time_str, currentStep, mean(losses), mean(accs), mean(precisions),
                        mean(recalls), mean(f_betas)))

                if currentStep % config.training.checkpointEvery == 0:
                    # Save a checkpoint
                    path = saver.save(sess, "../model/Transformer/model/my-model", global_step=currentStep)
                    print("Saved model checkpoint to {}\n".format(path))

        # The SavedModel signature must list every placeholder the graph needs, including embeddedPosition
        inputs = {"inputX": tf.saved_model.utils.build_tensor_info(transformer.inputX),
                  "keepProb": tf.saved_model.utils.build_tensor_info(transformer.dropoutKeepProb),
                  "embeddedPosition": tf.saved_model.utils.build_tensor_info(transformer.embeddedPosition)}

        outputs = {"predictions": tf.saved_model.utils.build_tensor_info(transformer.predictions)}

        prediction_signature = tf.saved_model.signature_def_utils.build_signature_def(
            inputs=inputs, outputs=outputs,
            method_name=tf.saved_model.signature_constants.PREDICT_METHOD_NAME)
        legacy_init_op = tf.group(tf.tables_initializer(), name="legacy_init_op")
        builder.add_meta_graph_and_variables(sess, [tf.saved_model.tag_constants.SERVING],
                                             signature_def_map={"predict": prediction_signature},
                                             legacy_init_op=legacy_init_op)

        builder.save()
```
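At inference time, the saved word2idx.json is what turns a raw review into model input. A minimal sketch, assuming the review has already been cleaned the same way as the text in labeledTrain.csv and is tokenized on whitespace as in _readData; for the demo, a tiny made-up vocabulary is written to a temporary file in place of ../data/wordJson/word2idx.json. Keep in mind that the graph also expects the one-hot position matrix (fixedPositionEmbedding) to be fed with every batch.

```python
import json
import os
import tempfile

def load_word2idx(path):
    with open(path, encoding="utf-8") as f:
        return json.load(f)

def encode_review(text, word2idx, sequence_length):
    """Tokenize on whitespace, map to ids (UNK for unknown words), then truncate or pad with PAD."""
    ids = [word2idx.get(w, word2idx["UNK"]) for w in text.strip().split()]
    ids = ids[:sequence_length]
    return ids + [word2idx["PAD"]] * (sequence_length - len(ids))

# Stand-in vocabulary written to a temporary file for the demo
path = os.path.join(tempfile.mkdtemp(), "word2idx.json")
with open(path, "w", encoding="utf-8") as f:
    json.dump({"PAD": 0, "UNK": 1, "movie": 2, "film": 3}, f)

word2idx = load_word2idx(path)
print(encode_review("a great movie", word2idx, sequence_length=5))  # [1, 1, 2, 0, 0]
```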

Note that the code above targets TensorFlow 1.x. Part of the output from one run:

train: step: 1453, loss: 0.0010375125566497445, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1454, loss: 0.0006778845563530922, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1455, loss: 0.007209389470517635, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1456, loss: 0.0059194001369178295, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1457, loss: 0.0002592140226624906, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1458, loss: 0.0020833390299230814, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1459, loss: 0.002238483401015401, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1460, loss: 0.0002154896064894274, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1461, loss: 0.0005287806270644069, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1462, loss: 0.0021261998917907476, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1463, loss: 0.003387441160157323, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1464, loss: 0.039518121629953384, acc: 0.9921875, recall: 1.0, precision: 0.9827586206896551, f_beta: 0.9913043478260869
train: step: 1465, loss: 0.017118675634264946, acc: 0.9921875, recall: 0.9848484848484849, precision: 1.0, f_beta: 0.9923664122137404
train: step: 1466, loss: 0.002999877789989114, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1467, loss: 0.00025316851679235697, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1468, loss: 0.0018627031240612268, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1469, loss: 0.00041201969725079834, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1470, loss: 0.0018406400922685862, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1471, loss: 0.0008799995994195342, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1472, loss: 0.004898811690509319, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1473, loss: 0.0004434642905835062, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1474, loss: 0.0011488802265375853, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1475, loss: 0.00015377056843135506, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1476, loss: 0.0103827565908432, acc: 0.9921875, recall: 0.9836065573770492, precision: 1.0, f_beta: 0.9917355371900827
train: step: 1477, loss: 0.0007278787670657039, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1478, loss: 0.0003133404243271798, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1479, loss: 0.00027148338267579675, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1480, loss: 0.0003839871205855161, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1481, loss: 0.0024200205225497484, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1482, loss: 0.0005372267332859337, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1483, loss: 0.0003639731730800122, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1484, loss: 0.0006208484992384911, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1485, loss: 0.006886144168674946, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1486, loss: 0.003239469835534692, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1487, loss: 0.0007233020151033998, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1488, loss: 0.0009364052675664425, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1489, loss: 0.0012875663815066218, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1490, loss: 0.00010077921615447849, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1491, loss: 0.0003866801271215081, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1492, loss: 0.006166142877191305, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1493, loss: 0.0022950763814151287, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1494, loss: 0.00023041354143060744, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1495, loss: 0.004388233181089163, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1496, loss: 0.00047737392014823854, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1497, loss: 0.0025870269164443016, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1498, loss: 0.002695532515645027, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1499, loss: 0.013221409171819687, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1500, loss: 0.00033678440377116203, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
Evaluation: 2020-07-29T03:37:24.417831, step: 1500, loss: 0.8976672161848117, acc: 0.8693910256410257,precision: 0.8834109059648474, recall: 0.8552037062592709, f_beta: 0.8682522378163627
Saved model checkpoint to /content/drive/My Drive/textClassifier/model/transformer/savedModel/-1500
train: step: 1501, loss: 0.0007042207289487123, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1502, loss: 0.0014643988106399775, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1503, loss: 0.0010233941720798612, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1504, loss: 0.02987544611096382, acc: 0.9921875, recall: 0.9852941176470589, precision: 1.0, f_beta: 0.9925925925925926
train: step: 1505, loss: 0.012284890748560429, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1506, loss: 0.0018061138689517975, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1507, loss: 0.00026604655431583524, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1508, loss: 0.003529384732246399, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1509, loss: 0.02039383351802826, acc: 0.9921875, recall: 1.0, precision: 0.9857142857142858, f_beta: 0.9928057553956835
train: step: 1510, loss: 0.0014776198659092188, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1511, loss: 0.0029119777027517557, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1512, loss: 0.004221439827233553, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1513, loss: 0.0045303404331207275, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1514, loss: 0.00019781164883170277, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1515, loss: 4.5770495489705354e-05, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1516, loss: 0.00016127500566653907, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1517, loss: 0.0008834836189635098, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1518, loss: 0.0012142667546868324, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1519, loss: 0.009638940915465355, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1520, loss: 0.004639771766960621, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1521, loss: 0.0028490747790783644, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1522, loss: 0.01262330450117588, acc: 0.9921875, recall: 0.9838709677419355, precision: 1.0, f_beta: 0.991869918699187
train: step: 1523, loss: 0.0011988620972260833, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1524, loss: 0.00021289248252287507, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1525, loss: 0.00034694239730015397, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1526, loss: 0.0029277384746819735, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1527, loss: 0.02414204739034176, acc: 0.984375, recall: 1.0, precision: 0.971830985915493, f_beta: 0.9857142857142858
train: step: 1528, loss: 0.013479265384376049, acc: 0.9921875, recall: 1.0, precision: 0.9838709677419355, f_beta: 0.991869918699187
train: step: 1529, loss: 0.05391649901866913, acc: 0.9921875, recall: 1.0, precision: 0.9830508474576272, f_beta: 0.9914529914529915
train: step: 1530, loss: 0.00015737551439087838, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1531, loss: 0.0007785416673868895, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1532, loss: 0.004696957301348448, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1533, loss: 0.024878593161702156, acc: 0.984375, recall: 0.9666666666666667, precision: 1.0, f_beta: 0.983050847457627
train: step: 1534, loss: 0.04452724754810333, acc: 0.984375, recall: 0.967741935483871, precision: 1.0, f_beta: 0.9836065573770492
train: step: 1535, loss: 0.00039763524546287954, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1536, loss: 0.000782095710746944, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1537, loss: 0.006687487475574017, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1538, loss: 0.00039404688868671656, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1539, loss: 0.014523430727422237, acc: 0.9921875, recall: 1.0, precision: 0.9855072463768116, f_beta: 0.9927007299270074
train: step: 1540, loss: 0.009221377782523632, acc: 0.9921875, recall: 1.0, precision: 0.9824561403508771, f_beta: 0.9911504424778761
train: step: 1541, loss: 0.005190445575863123, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1542, loss: 0.004177240654826164, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1543, loss: 0.0017567630857229233, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1544, loss: 0.0006568725220859051, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1545, loss: 0.002588574541732669, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1546, loss: 0.0001910639984998852, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1547, loss: 0.002887105569243431, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1548, loss: 0.03052239492535591, acc: 0.984375, recall: 0.967741935483871, precision: 1.0, f_beta: 0.9836065573770492
train: step: 1549, loss: 0.0018153332639485598, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1550, loss: 0.004794051870703697, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1551, loss: 0.0012018438428640366, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1552, loss: 0.00037542261998169124, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1553, loss: 0.002573814708739519, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1554, loss: 0.011914141476154327, acc: 0.9921875, recall: 1.0, precision: 0.9846153846153847, f_beta: 0.9922480620155039
train: step: 1555, loss: 0.014577670954167843, acc: 0.9921875, recall: 1.0, precision: 0.984375, f_beta: 0.9921259842519685
train: step: 1556, loss: 0.001725346315652132, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1557, loss: 0.0006375688826665282, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1558, loss: 0.000332293682731688, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1559, loss: 0.0018419102998450398, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
train: step: 1560, loss: 0.0025918420869857073, acc: 1.0, recall: 1.0, precision: 1.0, f_beta: 1.0
WARNING:tensorflow:From <ipython-input-16-f881542e6f65>:154: build_tensor_info (from tensorflow.python.saved_model.utils_impl) is deprecated and will be removed in a future version.
Instructions for updating:
This function will only be available through the v1 compatibility library as tf.compat.v1.saved_model.utils.build_tensor_info or tf.compat.v1.saved_model.build_tensor_info.
WARNING:tensorflow:From <ipython-input-16-f881542e6f65>:163: calling SavedModelBuilder.add_meta_graph_and_variables (from tensorflow.python.saved_model.builder_impl) with legacy_init_op is deprecated and will be removed in a future version.
Instructions for updating:
Pass your op to the equivalent parameter main_op instead.
INFO:tensorflow:No assets to save.
INFO:tensorflow:No assets to write.
INFO:tensorflow:SavedModel written to: /content/drive/My Drive/textClassifier//model/transformer/savedModel/saved_model.pb
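The `f_beta` column in the log matches an F1 score (β = 1), and the other columns are ordinary per-batch binary metrics. The plain-Python sketch below recomputes them from 0/1 labels. The helper name `binary_metrics` and the batch contents are hypothetical: the batch is chosen so that 128 reviews with 57 true positives, 1 false positive and 70 true negatives reproduce the step-1464 line (acc 0.9921875, recall 1.0, precision 0.9827586206896551, f_beta 0.9913043478260869). This is not the repository's own metric code, which this section does not show.

```python
def binary_metrics(y_true, y_pred, beta=1.0):
    """Return (accuracy, recall, precision, f_beta) for 0/1 labels.

    Hypothetical helper that mirrors the metric names in the training log;
    it is not necessarily how the original repository computes them.
    """
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives
    correct = sum(1 for t, p in pairs if t == p)

    acc = correct / len(pairs)
    recall = tp / (tp + fn) if tp + fn else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    b2 = beta * beta
    denom = b2 * precision + recall
    # F-beta; with beta = 1 this is the harmonic mean of precision and recall (F1).
    f_beta = (1 + b2) * precision * recall / denom if denom else 0.0
    return acc, recall, precision, f_beta


# Hypothetical batch of 128 built to match the step-1464 log line:
# 57 true positives, 1 false positive, 0 false negatives, 70 true negatives.
y_true = [1] * 57 + [0] * 1 + [0] * 70
y_pred = [1] * 57 + [1] * 1 + [0] * 70

acc, recall, precision, f_beta = binary_metrics(y_true, y_pred)
print(f"acc: {acc}, recall: {recall}, precision: {precision}, f_beta: {f_beta}")
```

Read this way, the log shows a clear gap: training batches from step 1453 to 1560 sit at or near 1.0 on every metric with losses around 1e-3, while the step-1500 evaluation reports acc 0.869 and loss 0.898, which suggests the model is overfitting the training set by this point.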

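The step counter can be cross-checked against the configuration (`epoches = 10`, `batchSize = 128`): training stops at step 1560, i.e. 156 batches per epoch. The sketch below does that arithmetic. Because the batching and evaluation code is not part of this section, it assumes that each epoch runs only full batches (the incomplete last batch is dropped) and that the evaluation accuracy is the mean of per-batch accuracies over 39 full batches, which is what a 5,000-review evaluation split (for example 20% of a 25,000-review CSV, another assumption) would give at 128 reviews per batch. Under those assumptions the step-1500 accuracy of 0.8693910256410257 corresponds to about 4,340 correct predictions out of 39 × 128 = 4,992.

```python
# Values copied from the configuration and the training log above.
EPOCHS = 10        # TrainingConfig.epoches
BATCH_SIZE = 128   # Config.batchSize
FINAL_STEP = 1560  # last "train: step" line in the log

# Assumption: every epoch runs the same number of full batches and the
# incomplete last batch is dropped.
steps_per_epoch = FINAL_STEP // EPOCHS
min_train_size = steps_per_epoch * BATCH_SIZE
max_train_size = (steps_per_epoch + 1) * BATCH_SIZE - 1
print(f"{steps_per_epoch} batches/epoch -> "
      f"{min_train_size}..{max_train_size} training reviews")

# Assumption: evaluation averages per-batch accuracies over full batches
# of a 5,000-review held-out split (5,000 // 128 = 39 batches).
EVAL_BATCHES = 5000 // BATCH_SIZE
eval_acc = 0.8693910256410257  # "Evaluation ... step: 1500, acc" in the log
evaluated = EVAL_BATCHES * BATCH_SIZE
correct = eval_acc * evaluated
print(f"{correct:.6f} correct out of {evaluated} evaluated reviews")
```

If the recovered count were far from a whole number, that would be a hint that the evaluation loop averages over a different number of examples than assumed here.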
Finally, load TensorBoard in the notebook:

%load_ext tensorboard
%tensorboard --logdir "/content/summarys/"
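`%tensorboard` only has something to display if the training run wrote summary event files under the directory passed to `--logdir`; TensorBoard picks up files whose names contain `tfevents` (summary writers name them like `events.out.tfevents.<timestamp>.<hostname>`). The standard-library sketch below checks for such files before launching TensorBoard. The helper name `find_event_files` is hypothetical, and `/content/summarys/` is simply the directory used in the command above.

```python
import os


def find_event_files(logdir):
    """Return sorted paths of TensorBoard event files under `logdir`.

    Hypothetical helper: walks the directory tree (a missing directory
    simply yields no files) and keeps names containing "tfevents".
    """
    matches = []
    for root, _dirs, files in os.walk(logdir):
        for name in files:
            if "tfevents" in name:
                matches.append(os.path.join(root, name))
    return sorted(matches)


if __name__ == "__main__":
    logdir = "/content/summarys/"  # same directory as --logdir above
    found = find_event_files(logdir)
    if found:
        print(f"{len(found)} event file(s) found; TensorBoard should have data.")
    else:
        print("No event files found; check where the summary writer saves them.")
```

Searching recursively matters because training and evaluation summaries are often written to separate subdirectories, and TensorBoard lists each subdirectory as its own run.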

Open the TensorBoard visualization: