论文地址:https://arxiv.org/abs/1710.10903

代码地址: https://github.com/Diego999/pyGAT

之前非稀疏矩阵版的解读:https://www.cnblogs.com/xiximayou/p/13622283.html

我们知道图的邻接矩阵可能是稀疏的,将整个图加载到内存中是十分耗费资源的,因此对邻接矩阵进行存储和计算是很有必要的。

我们已经讲解了图注意力网络的非稀疏矩阵版本,再来弄清其稀疏矩阵版本就轻松了,接下来我们将来看不同之处。

主运行代码在:execute_cora_sparse.py中

同样的,先加载数据:

adj, features, y_train, y_val, y_test, train_mask, val_mask, test_mask = process.load_data(dataset)

其中adj是coo_matrix类型,features是lil_matrix类型。

对于features,我们最终还是:

def preprocess_features(features): """Row-normalize feature matrix and convert to tuple representation""" rowsum = np.array(features.sum(1)) r_inv = np.power(rowsum, -1).flatten() r_inv[np.isinf(r_inv)] = 0. r_mat_inv = sp.diags(r_inv) features = r_mat_inv.dot(features) return features.todense(), sparse_to_tuple(features)

将其:

features, spars = process.preprocess_features(features)

转换为原始矩阵。

对于biases:

if sparse: biases = process.preprocess_adj_bias(adj) else: adj = adj.todense() adj = adj[np.newaxis] biases = process.adj_to_bias(adj, [nb_nodes], nhood=1)

如果是稀疏格式的,就调用biases = process.preprocess_adj_bias(adj):

def preprocess_adj_bias(adj): num_nodes = adj.shape[0] #2708 adj = adj + sp.eye(num_nodes) # self-loop 给对角上+1 adj[adj > 0.0] = 1.0 #大于0的值置为1 if not sp.isspmatrix_coo(adj): adj = adj.tocoo() adj = adj.astype(np.float32) #类型转换 indices = np.vstack((adj.col, adj.row)).transpose() # This is where I made a mistake, I used (adj.row, adj.col) instead # return tf.SparseTensor(indices=indices, values=adj.data, dense_shape=adj.shape) return indices, adj.data, adj.shape

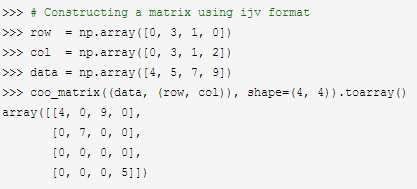

这里看两个例子:

我们可以通过indices,data,shape来构造一个coo_matrix。

在定义计算图中的占位符时:

if sparse: #bias_idx = tf.placeholder(tf.int64) #bias_val = tf.placeholder(tf.float32) #bias_shape = tf.placeholder(tf.int64) bias_in = tf.sparse_placeholder(dtype=tf.float32) else: bias_in = tf.placeholder(dtype=tf.float32, shape=(batch_size, nb_nodes, nb_nodes))

使用bias_in = tf.sparse_placeholder(dtype=tf.float32)。

再接着就是模型中了,在utils文件夹下的layers.py中:

# Experimental sparse attention head (for running on datasets such as Pubmed) # N.B. Because of limitations of current TF implementation, will work _only_ if batch_size = 1! def sp_attn_head(seq, out_sz, adj_mat, activation, nb_nodes, in_drop=0.0, coef_drop=0.0, residual=False): with tf.name_scope('sp_attn'): if in_drop != 0.0: seq = tf.nn.dropout(seq, 1.0 - in_drop) seq_fts = tf.layers.conv1d(seq, out_sz, 1, use_bias=False) # simplest self-attention possible f_1 = tf.layers.conv1d(seq_fts, 1, 1) f_2 = tf.layers.conv1d(seq_fts, 1, 1) f_1 = tf.reshape(f_1, (nb_nodes, 1)) f_2 = tf.reshape(f_2, (nb_nodes, 1)) f_1 = adj_mat*f_1 f_2 = adj_mat * tf.transpose(f_2, [1,0]) logits = tf.sparse_add(f_1, f_2) lrelu = tf.SparseTensor(indices=logits.indices, values=tf.nn.leaky_relu(logits.values), dense_shape=logits.dense_shape) coefs = tf.sparse_softmax(lrelu) if coef_drop != 0.0: coefs = tf.SparseTensor(indices=coefs.indices, values=tf.nn.dropout(coefs.values, 1.0 - coef_drop), dense_shape=coefs.dense_shape) if in_drop != 0.0: seq_fts = tf.nn.dropout(seq_fts, 1.0 - in_drop) # As tf.sparse_tensor_dense_matmul expects its arguments to have rank-2, # here we make an assumption that our input is of batch size 1, and reshape appropriately. # The method will fail in all other cases! coefs = tf.sparse_reshape(coefs, [nb_nodes, nb_nodes]) seq_fts = tf.squeeze(seq_fts) vals = tf.sparse_tensor_dense_matmul(coefs, seq_fts) vals = tf.expand_dims(vals, axis=0) vals.set_shape([1, nb_nodes, out_sz]) ret = tf.contrib.layers.bias_add(vals) # residual connection if residual: if seq.shape[-1] != ret.shape[-1]: ret = ret + conv1d(seq, ret.shape[-1], 1) # activation else: ret = ret + seq return activation(ret) # activation

相应的位置都要使用稀疏的方式。