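Definition of Flume

- Flume is a distributed, highly reliable, and highly available system for collecting, aggregating, and moving large volumes of log data from many different sources into a central data store (HDFS). In short, it is a tool for log collection and aggregation.

- Logstash and FileBeat are the log-shipping tools of the Elastic (ES) stack and are very similar to Flume (FileBeat is the lightweight one, Logstash the heavyweight one). If a project team already uses the ES stack, Logstash can fully replace Flume.

Versions

- NG: the 1.x releases (NG = Next Generation)

- OG: the 0.9.x releases, which can be ignored (OG = Old Generation)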

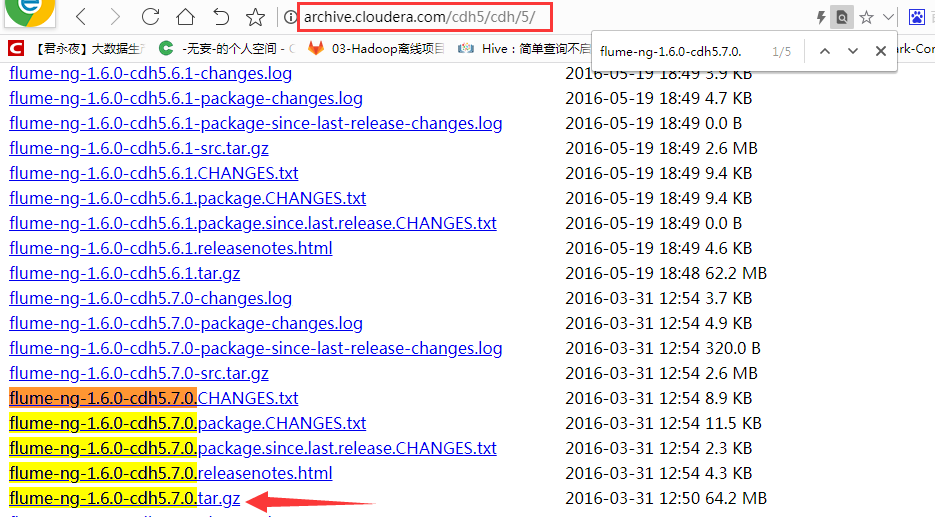

- Since I am using CDH 5.7.0, I chose flume-ng-1.6.0-cdh5.7.0. Note that this 1.6 build differs from the community (Apache) 1.6 release.


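Advantages of Flume:

- It collects data at high speed, and the collected data can be stored on HDFS in the desired file format and compression codec.

- Its transaction mechanism ensures that data is not lost during collection.

- Some sources can resume from the last collection point after Flume crashes and restarts, achieving true zero data loss.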


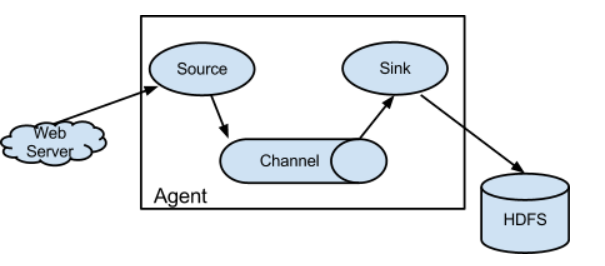

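Components of Flume

- Flume has three core components:

- Source (collects data at the origin): Flume ships with a wide variety of sources and also supports custom sources.

- Channel (temporarily buffers and aggregates data): the most commonly used are the memory channel and the file channel (the latter is the most common in production). In production, channel usage must be monitored so that a failed sink does not let the channel fill up.

- Sink (moves data to the destination): for example HDFS, Kafka, a database, or a custom sink.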

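The division of labor among the three components can be illustrated with a toy Python model. This is a hypothetical sketch (the class names `MemoryChannel`, `ListSource`, and `CollectingSink` are made up, and this is not Flume's Java API). It also shows why the channel must be monitored: once the sink stops draining a bounded channel, new events are rejected.

```python
from collections import deque


class MemoryChannel:
    """Bounded FIFO buffer between a source and a sink (toy model)."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.queue = deque()

    def put(self, event):
        if len(self.queue) >= self.capacity:
            raise OverflowError("channel full: the sink is not keeping up")
        self.queue.append(event)

    def take(self):
        return self.queue.popleft() if self.queue else None


class ListSource:
    """Source that turns each line of an in-memory list into a byte event."""

    def __init__(self, lines, channel):
        self.lines = list(lines)
        self.channel = channel

    def poll(self):
        while self.lines:
            self.channel.put(self.lines[0].encode("utf-8"))
            self.lines.pop(0)  # drop a line only after the channel accepted it


class CollectingSink:
    """Sink that drains the channel into a list standing in for HDFS or Kafka."""

    def __init__(self, channel):
        self.channel = channel
        self.delivered = []

    def process(self):
        event = self.channel.take()
        while event is not None:
            self.delivered.append(event)
            event = self.channel.take()
```

With a capacity of 2 and no sink running, a source that tries to push three lines fails on the third, which is the "sink died, channel filled up" situation described above.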

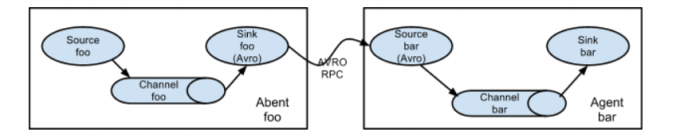

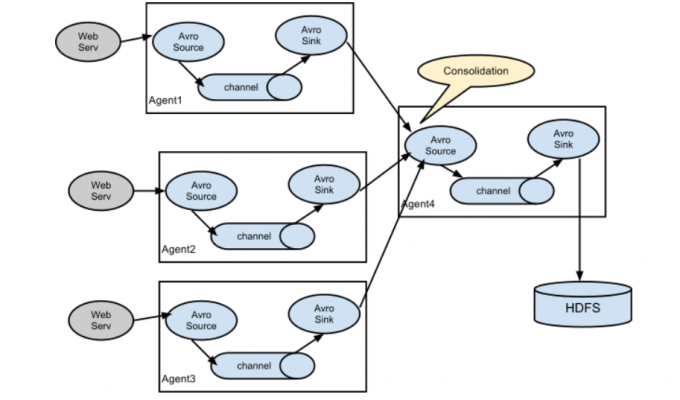

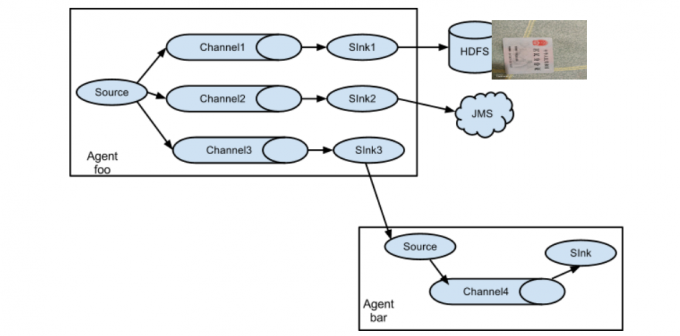

Flume architecture

- Flume agent topologies:

- Single agent:

- Chained (serial) agents:

- Parallel agents (the most common in production):

- Multi-sink agent (also very common):
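For the chained and parallel topologies, agents are normally linked by pairing an Avro sink on one agent with an Avro source on the next. The helper functions below are a hypothetical sketch that renders such a pair of configurations as text. The agent names, log path, host, and port are illustrative assumptions, and "parallel" is read here as several first-tier agents feeding one collector (the consolidation pattern in the Flume User Guide).

```python
def upstream_config(name, log_file, collector_host, collector_port):
    """First-tier agent: tail a log file and forward events over Avro."""
    p = name
    return "\n".join([
        f"{p}.sources = r1",
        f"{p}.channels = c1",
        f"{p}.sinks = k1",
        f"{p}.sources.r1.type = exec",
        f"{p}.sources.r1.command = tail -F {log_file}",
        f"{p}.sources.r1.channels = c1",
        f"{p}.channels.c1.type = memory",
        # Avro sink: sends events via RPC to the next agent in the chain
        f"{p}.sinks.k1.type = avro",
        f"{p}.sinks.k1.hostname = {collector_host}",
        f"{p}.sinks.k1.port = {collector_port}",
        f"{p}.sinks.k1.channel = c1",
    ])


def collector_config(name, port, hdfs_path):
    """Second-tier agent: receive events over Avro and write them to HDFS."""
    p = name
    return "\n".join([
        f"{p}.sources = r1",
        f"{p}.channels = c1",
        f"{p}.sinks = k1",
        # Avro source: listens for events from the upstream Avro sinks
        f"{p}.sources.r1.type = avro",
        f"{p}.sources.r1.bind = 0.0.0.0",
        f"{p}.sources.r1.port = {port}",
        f"{p}.sources.r1.channels = c1",
        f"{p}.channels.c1.type = memory",
        f"{p}.sinks.k1.type = hdfs",
        f"{p}.sinks.k1.hdfs.path = {hdfs_path}",
        f"{p}.sinks.k1.channel = c1",
    ])
```

Each upstream agent (for example a hypothetical `web1` and `web2`) gets its own file pointing at the same collector host and port, and each is started with `flume-ng agent --name <agent name>`. One upstream agent gives the chained layout; several give the parallel one.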

Flume deployment

- Open the official download site: http://archive.cloudera.com/cdh5/cdh/5/

- Upload and extract the package:

```shell
[hadoop@hadoop001 app]$ rz flume-ng-1.6.0-cdh5.7.0.tar.gz
[hadoop@hadoop001 app]$ tar -xzvf flume-ng-1.6.0-cdh5.7.0.tar.gz
```

Edit the configuration file and set JAVA_HOME:

```shell
[hadoop@hadoop001 app]$ cd ~/app/apache-flume-1.6.0-cdh5.7.0-bin
[hadoop@hadoop001 apache-flume-1.6.0-cdh5.7.0-bin]$ cp ~/app/apache-flume-1.6.0-cdh5.7.0-bin/conf/flume-env.sh.template ~/app/apache-flume-1.6.0-cdh5.7.0-bin/conf/flume-env.sh
[hadoop@hadoop001 apache-flume-1.6.0-cdh5.7.0-bin]$ vim ~/app/apache-flume-1.6.0-cdh5.7.0-bin/conf/flume-env.sh
# add this line to flume-env.sh:
export JAVA_HOME=/usr/java/jdk1.8.0_45
```

Add the environment variables:

```shell
[hadoop@hadoop001 bin]$ vim ~/.bash_profile
# append these lines to ~/.bash_profile:
export FLUME_HOME=/home/hadoop/app/apache-flume-1.6.0-cdh5.7.0-bin
export PATH=$FLUME_HOME/bin:$PATH
[hadoop@hadoop001 bin]$ source ~/.bash_profile
[hadoop@hadoop001 bin]$ which flume-ng
~/app/apache-flume-1.6.0-cdh5.7.0-bin/bin/flume-ng
```


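Agent configuration examples

- Using Flume essentially comes down to configuring Sources, Channels, and Sinks.

- Agent = Source + Channel + Sink; in practice, an agent is defined in a Flume configuration file.

- One configuration file can define multiple agents.

- Event: the smallest unit of data transfer in Flume. One event is one record and consists of two parts, headers and body: the headers hold key-value metadata (string keys and string values), and the body holds the payload as a byte array.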

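An event can be pictured as the small structure below. The Python `Event` dataclass and `event_from_line` helper are hypothetical; in Flume's Java API the corresponding interface is `org.apache.flume.Event`, whose `getHeaders()` returns a `Map<String, String>` and whose `getBody()` returns a `byte[]`.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class Event:
    """Toy mirror of a Flume event: string headers plus a raw byte body."""

    body: bytes
    headers: Dict[str, str] = field(default_factory=dict)


def event_from_line(line: str, **headers: str) -> Event:
    # One record (here, one log line) becomes one event; the body is just bytes,
    # while the headers carry metadata such as a host name or a timestamp
    return Event(body=line.encode("utf-8"), headers=dict(headers))
```

For example, `event_from_line("GET /index.html 200", host="hadoop001")` yields an event whose body is the encoded line and whose headers are `{"host": "hadoop001"}`.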

Flume configuration file

```shell
[hadoop@hadoop001 conf]$ vim /home/hadoop/app/apache-flume-1.6.0-cdh5.7.0-bin/conf/example.conf
```

```properties
# example.conf: A single-node Flume configuration

# Name the components on this agent
a1.sources = r1
a1.sinks = k1
a1.channels = c1

# Describe/configure the source
a1.sources.r1.type = netcat
a1.sources.r1.bind = localhost
a1.sources.r1.port = 44444

# Describe the sink
a1.sinks.k1.type = logger

# Use a channel which buffers events in memory
a1.channels.c1.type = memory
a1.channels.c1.capacity = 1000
a1.channels.c1.transactionCapacity = 100

# Bind the source and sink to the channel
a1.sources.r1.channels = c1
a1.sinks.k1.channel = c1
```
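Binding mistakes in a file like this are easy to make. The sketch below parses simple `key = value` lines and checks two rules: every source lists at least one declared channel under `channels`, and every sink names exactly one declared channel under `channel`. It is a hypothetical helper, not part of Flume, and its parser is simpler than the `java.util.Properties` format Flume actually reads (no `:` separators, escapes, or line continuations).

```python
def parse_properties(text):
    """Parse 'key = value' lines, skipping blank lines and # comments."""
    props = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition("=")
        props[key.strip()] = value.strip()
    return props


def check_bindings(props, agent):
    """Return a list of binding problems for one agent (empty list = OK)."""
    problems = []
    channels = set(props.get(f"{agent}.channels", "").split())
    for source in props.get(f"{agent}.sources", "").split():
        # A source may fan out to several space-separated channels
        bound = props.get(f"{agent}.sources.{source}.channels", "").split()
        if not bound:
            problems.append(f"source {source} is not bound to any channel")
        for ch in bound:
            if ch not in channels:
                problems.append(f"source {source} uses undeclared channel {ch}")
    for sink in props.get(f"{agent}.sinks", "").split():
        # A sink reads from exactly one channel
        bound = props.get(f"{agent}.sinks.{sink}.channel", "").split()
        if len(bound) != 1:
            problems.append(f"sink {sink} must be bound to exactly one channel")
        elif bound[0] not in channels:
            problems.append(f"sink {sink} uses undeclared channel {bound[0]}")
    return problems
```

Running `check_bindings(parse_properties(text), "a1")` on the `example.conf` text above should return an empty list.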

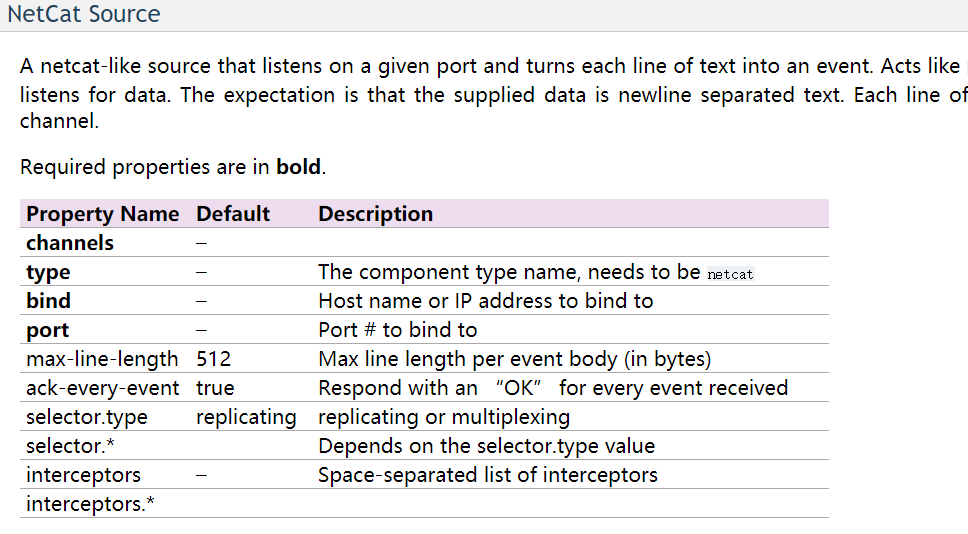

- Netcat source: the properties shown in bold in the table above are required.

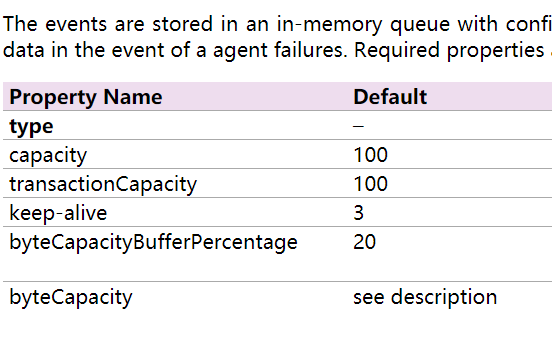

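- Memory channel: `capacity` is the maximum number of events (messages) the channel can hold; in production, use at least 100,000.

- `transactionCapacity`: the maximum number of events the channel takes from a source or gives to a sink in one transaction. This must also be increased in production.

- Logger: a sink that logs events to the console.

- Note: a source can be bound to multiple channels, but a sink can be bound to only one channel (hence `a1.sources.r1.channels` versus `a1.sinks.k1.channel` in the configuration).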

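The roles of `capacity` and `transactionCapacity` can be sketched with a toy channel. The `ToyMemoryChannel` class and `ChannelError` exception are hypothetical and simplify Flume's real memory channel: a put batch commits all-or-nothing, a batch larger than `transactionCapacity` is refused, and a failed delivery on the sink side rolls the taken events back into the channel, which is how the transaction mechanism avoids losing data.

```python
from collections import deque


class ChannelError(Exception):
    """Raised when a batch does not fit (toy stand-in for Flume's ChannelException)."""


class ToyMemoryChannel:
    def __init__(self, capacity, transaction_capacity):
        self.capacity = capacity                          # max events held at once
        self.transaction_capacity = transaction_capacity  # max events per transaction
        self.events = deque()

    def put_batch(self, batch):
        """Source side: commit the whole batch or nothing."""
        if len(batch) > self.transaction_capacity:
            raise ChannelError("batch larger than transactionCapacity")
        if len(self.events) + len(batch) > self.capacity:
            raise ChannelError("not enough free capacity in the channel")
        self.events.extend(batch)

    def take_batch(self, n, deliver):
        """Sink side: take up to n events, deliver them, roll back on failure."""
        if n > self.transaction_capacity:
            raise ChannelError("batch larger than transactionCapacity")
        taken = [self.events.popleft() for _ in range(min(n, len(self.events)))]
        try:
            deliver(taken)
        except Exception:
            # Rollback: return the events to the head of the queue, in order
            self.events.extendleft(reversed(taken))
            raise
        return len(taken)
```

In this model a sink whose destination is down keeps rolling back, so events pile up until `put_batch` starts failing, which is why production channels need both larger limits and monitoring.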

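Starting and testing the agent

- Start the agent:

```shell
# The final -Dflume.root.logger=INFO,console option prints INFO logs to the console for easy observation; it can be removed
flume-ng agent \
  --name a1 \
  --conf $FLUME_HOME/conf \
  --conf-file $FLUME_HOME/conf/example.conf \
  -Dflume.root.logger=INFO,console
```

Test with telnet:

```
[hadoop@hadoop001 ~]$ telnet localhost 44444
Trying 127.0.0.1...
Connected to localhost.
Escape character is '^]'.
hello
OK
```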
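Instead of telnet, the netcat source can also be exercised from a script. This is a minimal sketch using Python's standard `socket` module; the function name `send_lines` is hypothetical, and it assumes the source's default behavior of replying `OK` to each received line (`ack-every-event = true`).

```python
import socket


def send_lines(host, port, lines, timeout=5.0):
    """Send each line to a netcat-style source and collect one reply per line."""
    replies = []
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with sock.makefile("r", encoding="utf-8", newline="\n") as reader:
            for line in lines:
                # The netcat source treats each newline-terminated line as one event
                sock.sendall((line + "\n").encode("utf-8"))
                replies.append(reader.readline().rstrip("\r\n"))
    return replies
```

With the `a1` agent from this section running, `send_lines("localhost", 44444, ["hello"])` should return `["OK"]`, and the logger sink prints the event on the agent's console.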

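Collecting file data into HDFS with Exec Source

The production pipeline is: log data => Flume => HDFS. Here we use the simple Exec Source, which collects data by running `tail -F` on the data file.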

```properties
# example.conf: A single-node Flume configuration

# Name the components on this agent
exec-hdfs-agent.sources = exec-source
exec-hdfs-agent.sinks = hdfs-sink
exec-hdfs-agent.channels = memory-channel

# Describe/configure the source
exec-hdfs-agent.sources.exec-source.type = exec
exec-hdfs-agent.sources.exec-source.command = tail -F /home/hadoop/data/access_10000.log
exec-hdfs-agent.sources.exec-source.shell = /bin/sh -c

# Describe the sink
exec-hdfs-agent.sinks.hdfs-sink.type = hdfs
exec-hdfs-agent.sinks.hdfs-sink.hdfs.path = hdfs://hadoop001:9000/flume/exec
exec-hdfs-agent.sinks.hdfs-sink.hdfs.fileType = DataStream
exec-hdfs-agent.sinks.hdfs-sink.hdfs.writeFormat = Text

# Use a channel which buffers events in memory
exec-hdfs-agent.channels.memory-channel.type = memory
exec-hdfs-agent.channels.memory-channel.capacity = 1000
exec-hdfs-agent.channels.memory-channel.transactionCapacity = 100

# Bind the source and sink to the channel
exec-hdfs-agent.sources.exec-source.channels = memory-channel
exec-hdfs-agent.sinks.hdfs-sink.channel = memory-channel
```

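- When writing to HDFS, the sink first creates a file with a `.tmp` suffix and removes the `.tmp` suffix once the file has been completely written.

- Drawbacks:

- Although this `tail` approach does get log data into HDFS, data is still lost if the `tail -F` process dies. This does not work in production because it cannot provide high availability.

- Second, this pipeline does not address the large number of small files it produces (the roll settings are left at their small defaults), so it is not highly reliable either.

- `tail` can monitor only one file, while production usually needs to monitor an entire directory, so it does not meet the requirements.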

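The `.tmp` behavior can be mimicked on a local filesystem. The `ToyBucketWriter` class is a hypothetical sketch, not the HDFS sink's code: it borrows the sink's defaults `hdfs.filePrefix = FlumeData` and `hdfs.inUseSuffix = .tmp`, names the file roughly as `<prefix>.<millisecond timestamp>`, writes into the in-use file, and renames it to its final name on close. Downstream jobs should therefore only pick up files without the `.tmp` suffix.

```python
import os
import time


class ToyBucketWriter:
    """Write to '<prefix>.<timestamp>.tmp', then rename to drop '.tmp' on close."""

    def __init__(self, directory, prefix="FlumeData", in_use_suffix=".tmp"):
        stamp = int(time.time() * 1000)
        self.final_path = os.path.join(directory, f"{prefix}.{stamp}")
        self.tmp_path = self.final_path + in_use_suffix
        self._fh = open(self.tmp_path, "wb")

    def append(self, body: bytes):
        # One event body per line, like fileType = DataStream with text bodies
        self._fh.write(body + b"\n")

    def close(self):
        self._fh.close()
        # The rename is what makes the finished file visible under its final name
        os.rename(self.tmp_path, self.final_path)
        return self.final_path
```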

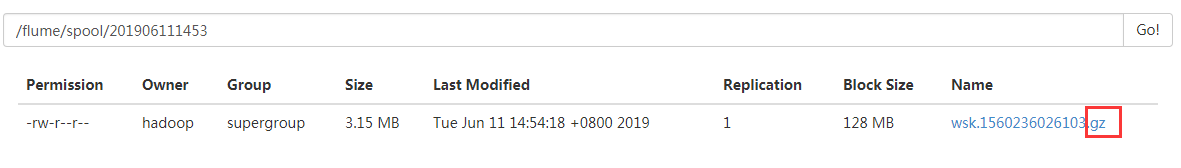

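Collecting directory data into HDFS with Spooling Directory Source

- Files written to HDFS should ideally be around 100 MB, slightly below the block size (128 MB).

- File rolling is usually controlled with `rollInterval` (time) and `rollSize` (size): whichever triggers first closes the current file and starts a new HDFS file. Count-based rolling is turned off (`rollCount = 0`).

- `rollSize` refers to the size before compression, so if the HDFS files are compressed, `rollSize` needs to be raised accordingly.

- Once a file in the directory has been collected into HDFS, it is renamed with a `.COMPLETED` suffix.

- With Spooling Directory Source, modifying a file after it has been placed in the spool directory (for example, after it has already been collected) causes an error and the source stops; likewise, dropping in a file with the same name as one that has already been collected also causes an error and stops the source.

- Data written to HDFS can be partitioned by time; note that if no data arrives within a time interval, no directory is created for that interval.

- Generated file names default to a prefix plus a timestamp; the prefix can be changed with `hdfs.filePrefix` (default `FlumeData`).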
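The "whichever fires first" rule can be written as a small decision function. `RollPolicy` is a hypothetical sketch whose defaults are the roll values used in this section's configuration (`rollInterval = 30`, `rollSize = 100000000`, `rollCount = 0`), not Flume's built-in defaults. A value of 0 disables that trigger, and the byte count is measured on raw event bodies, which is why compression calls for a larger `rollSize`. In Flume the interval roll is driven by a timer rather than checked per event; the sketch folds all three checks into one function for clarity.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class RollPolicy:
    roll_interval: int = 30           # seconds, like hdfs.rollInterval (0 = off)
    roll_size: int = 100_000_000      # uncompressed bytes, like hdfs.rollSize (0 = off)
    roll_count: int = 0               # events, like hdfs.rollCount (0 = off)

    def should_roll(self, opened_at, now, bytes_written, events_written) -> Optional[str]:
        """Return which trigger fired for the current file, or None to keep writing."""
        if self.roll_interval > 0 and now - opened_at >= self.roll_interval:
            return "interval"
        if self.roll_size > 0 and bytes_written >= self.roll_size:
            return "size"
        if self.roll_count > 0 and events_written >= self.roll_count:
            return "count"
        return None
```

With `rollCount = 0`, even a million small events do not close the file; only 30 seconds elapsing or about 100 MB of raw data does.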

```properties
# example.conf: A single-node Flume configuration

# Name the components on this agent
spool-hdfs-agent.sources = spool-source
spool-hdfs-agent.sinks = hdfs-sink
spool-hdfs-agent.channels = memory-channel

# Describe/configure the source
spool-hdfs-agent.sources.spool-source.type = spooldir
spool-hdfs-agent.sources.spool-source.spoolDir = /home/hadoop/data/flume/spool/input

# Describe the sink
spool-hdfs-agent.sinks.hdfs-sink.type = hdfs
spool-hdfs-agent.sinks.hdfs-sink.hdfs.path = hdfs://hadoop001:9000/flume/spool/%Y%m%d%H%M
spool-hdfs-agent.sinks.hdfs-sink.hdfs.useLocalTimeStamp = true
spool-hdfs-agent.sinks.hdfs-sink.hdfs.fileType = CompressedStream
spool-hdfs-agent.sinks.hdfs-sink.hdfs.writeFormat = Text
spool-hdfs-agent.sinks.hdfs-sink.hdfs.codeC = gzip
spool-hdfs-agent.sinks.hdfs-sink.hdfs.filePrefix = wsk
spool-hdfs-agent.sinks.hdfs-sink.hdfs.rollInterval = 30
spool-hdfs-agent.sinks.hdfs-sink.hdfs.rollSize = 100000000
spool-hdfs-agent.sinks.hdfs-sink.hdfs.rollCount = 0

# Use a channel which buffers events in memory
spool-hdfs-agent.channels.memory-channel.type = memory
spool-hdfs-agent.channels.memory-channel.capacity = 1000
spool-hdfs-agent.channels.memory-channel.transactionCapacity = 100

# Bind the source and sink to the channel
spool-hdfs-agent.sources.spool-source.channels = memory-channel
spool-hdfs-agent.sinks.hdfs-sink.channel = memory-channel
```
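The time-partitioned `hdfs.path` above is expanded per event. With `hdfs.useLocalTimeStamp = true` the sink uses the local time; otherwise the event needs a `timestamp` header. The sketch below is hypothetical and only handles escapes such as `%Y%m%d%H%M`, which Python's `strftime` interprets the same way (Flume's other escapes, such as `%{header}`, are not covered). It shows that each minute with data gets its own directory and a minute without data gets none.

```python
from collections import Counter
from datetime import datetime


def bucket_path(template: str, ts: datetime) -> str:
    # %Y %m %d %H %M mean the same in Python's strftime as in the HDFS sink's escapes
    return ts.strftime(template)


def events_per_bucket(template, timestamps):
    """Count events per expanded directory; minutes without events never appear."""
    return dict(Counter(bucket_path(template, ts) for ts in timestamps))
```

For three events at 10:00:05, 10:00:40, and 10:02:01, this yields two directories (`...1000` with two events and `...1002` with one) and nothing for 10:01.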

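Although the Spooling Directory Source configuration above solves the problems of too many small files and of monitoring multiple files, the following problems remain:

- Problem 1: although it can monitor a directory, it cannot recursively monitor data in nested subdirectories.

- Problem 2: if Flume crashes during collection, there is no guarantee that after a restart it resumes from the line where it stopped reading.

Because of these two problems, this approach is not acceptable in production either.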


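(Production version) Collecting directory data into HDFS with Taildir Source

- Taildir Source was introduced in Apache Flume 1.7, but CDH's Flume 1.6 already includes it.

- Taildir Source is a reliable source: it regularly (every `writePosInterval` milliseconds, 3000 by default) writes the read offset of each file to a JSON position file on disk. When Flume restarts, it reads this JSON file to get each file's offset and continues from the previous position, ensuring zero data loss.

- Taildir Source can monitor multiple directories and files at the same time, even while the files are still being written to.

- Taildir Source also cannot collect data from nested subdirectories; that requires modifying the source code.

- To monitor every file in a directory, Taildir Source must be given the regular expression `.*` as the file-name part of the path.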
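Taildir's resume behavior boils down to persisting a byte offset per file. The sketch below is hypothetical: it reads and writes a JSON position file in the same shape Flume uses (a list of objects with `inode`, `pos`, and `file`), but keys offsets by path for simplicity, whereas Flume tracks files by inode so that renamed or rotated files are handled correctly. Only complete lines are consumed, so a partially written last line is picked up once it is finished.

```python
import json
import os


def load_positions(position_file):
    """Map file path -> byte offset, from a Taildir-style JSON position file."""
    if not os.path.exists(position_file):
        return {}
    with open(position_file) as fh:
        return {entry["file"]: entry["pos"] for entry in json.load(fh)}


def save_positions(position_file, positions):
    entries = [
        {"inode": os.stat(path).st_ino, "pos": pos, "file": path}
        for path, pos in positions.items()
    ]
    # This sketch writes to a temp file and renames it, so a crash never leaves half a JSON file
    tmp = position_file + ".tmp"
    with open(tmp, "w") as fh:
        json.dump(entries, fh)
    os.replace(tmp, position_file)


def read_new_lines(path, positions):
    """Return complete lines appended since the saved offset and advance the offset."""
    start = positions.get(path, 0)
    with open(path, "rb") as fh:
        fh.seek(start)
        data = fh.read()
    end = data.rfind(b"\n") + 1  # stop before a trailing partial line
    positions[path] = start + end
    return data[:end].splitlines()
```

After a simulated crash, calling `load_positions` again restores the saved offsets, and `read_new_lines` continues exactly where the previous run stopped instead of rereading the file.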

```properties
# example.conf: A single-node Flume configuration

# Name the components on this agent
taildir-hdfs-agent.sources = taildir-source
taildir-hdfs-agent.sinks = hdfs-sink
taildir-hdfs-agent.channels = memory-channel

# Describe/configure the source
taildir-hdfs-agent.sources.taildir-source.type = TAILDIR
taildir-hdfs-agent.sources.taildir-source.filegroups = f1
taildir-hdfs-agent.sources.taildir-source.filegroups.f1 = /home/hadoop/data/flume/taildir/input/.*
taildir-hdfs-agent.sources.taildir-source.positionFile = /home/hadoop/data/flume/taildir/taildir_position/taildir_position.json

# Describe the sink
taildir-hdfs-agent.sinks.hdfs-sink.type = hdfs
taildir-hdfs-agent.sinks.hdfs-sink.hdfs.path = hdfs://hadoop001:9000/flume/taildir/%Y%m%d%H%M
taildir-hdfs-agent.sinks.hdfs-sink.hdfs.useLocalTimeStamp = true
taildir-hdfs-agent.sinks.hdfs-sink.hdfs.fileType = CompressedStream
taildir-hdfs-agent.sinks.hdfs-sink.hdfs.writeFormat = Text
taildir-hdfs-agent.sinks.hdfs-sink.hdfs.codeC = gzip
taildir-hdfs-agent.sinks.hdfs-sink.hdfs.filePrefix = wsk
taildir-hdfs-agent.sinks.hdfs-sink.hdfs.rollInterval = 30
taildir-hdfs-agent.sinks.hdfs-sink.hdfs.rollSize = 100000000
taildir-hdfs-agent.sinks.hdfs-sink.hdfs.rollCount = 0

# Use a channel which buffers events in memory
taildir-hdfs-agent.channels.memory-channel.type = memory
taildir-hdfs-agent.channels.memory-channel.capacity = 1000
taildir-hdfs-agent.channels.memory-channel.transactionCapacity = 100

# Bind the source and sink to the channel
taildir-hdfs-agent.sources.taildir-source.channels = memory-channel
taildir-hdfs-agent.sinks.hdfs-sink.channel = memory-channel
```
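The `.*` requirement follows from the filegroup path being interpreted as a regular expression on the file name, not as a shell glob; a glob-style `*` is not even a valid regular expression. The helper below is a hypothetical sketch of that matching, not Flume's own implementation: it matches the whole file name against the last path component and skips subdirectories, consistent with the no-recursion limitation noted above.

```python
import os
import re


def match_filegroup(pattern_path):
    """List regular files matched by a Taildir-style filegroup path.

    Only the last path component is treated as a regular expression and it must
    match the whole file name; subdirectories are not descended into.
    """
    directory, name_regex = os.path.split(pattern_path)
    regex = re.compile(name_regex)  # raises re.error for a glob such as "*"
    return sorted(
        os.path.join(directory, name)
        for name in os.listdir(directory)
        if regex.fullmatch(name) and os.path.isfile(os.path.join(directory, name))
    )
```

For a directory holding `a.log`, `b.txt`, and a subdirectory `sub/c.log`, the pattern `.*` matches `a.log` and `b.txt` only, `.*\.log` matches just `a.log`, and `*` is rejected outright.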