Configuring the NFS (Network File System) Service

1 Preparation

Three Linux virtual machines:

One: the NFS server

The other two: NFS clients

2 Installation and Configuration

2.1 Check the System Version

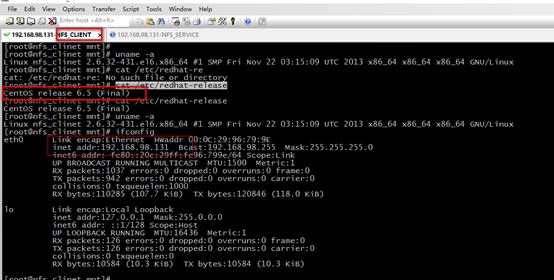

Client:

cat /etc/redhat-release

uname -a

ifconfig

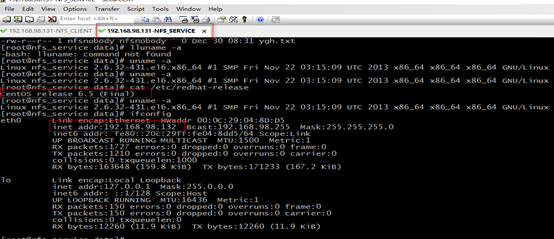

Server:

cat /etc/redhat-release

uname -a

ifconfig

It is best to make sure all three Linux systems run the same release.

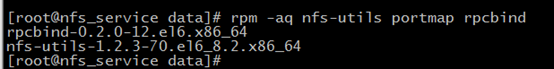

2.2 Check Whether RPC and NFS Are Installed on the Server

Server:

rpm -aq nfs-utils portmap rpcbind

(portmap is the RPC package on CentOS 5; CentOS 6 uses rpcbind.)

If RPC and NFS are not installed, install them with “yum install nfs-utils rpcbind -y”.

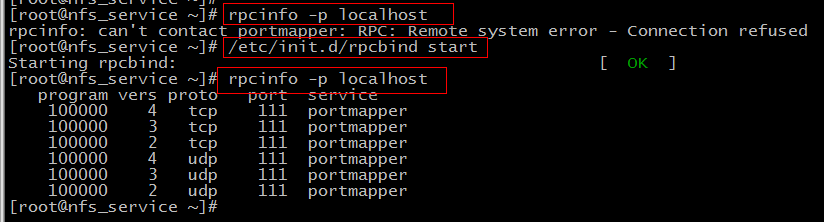

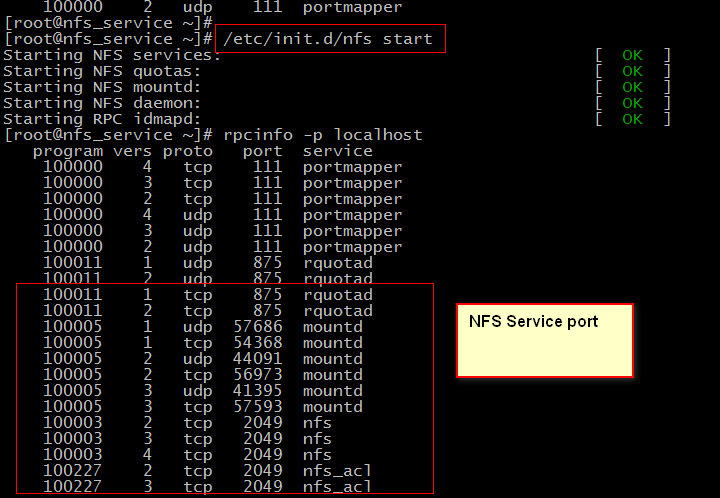

2.3 Start the RPC and NFS Services on the Server

Switch to the NFS server.

First, start the RPC service on the server.

rpcinfo -p localhost: lists the RPC programs and ports registered on the local host.

“rpcinfo: can't contact portmapper: RPC: Remote system error - Connection refused”: this means the RPC service has not started.

Second, start the NFS service.

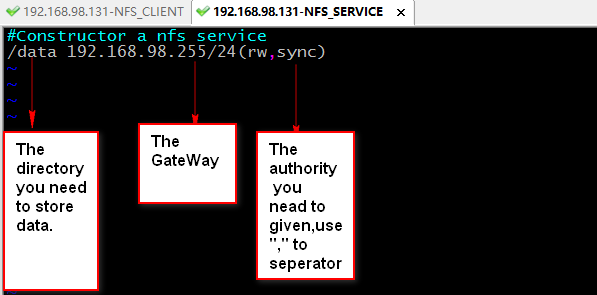

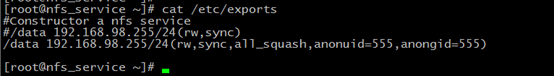
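Whether the services are registered can also be checked from a script instead of by eye. The sketch below is a minimal example, assuming the usual `rpcinfo -p` column layout (program, vers, proto, port, service); the function name rpc_registered is hypothetical, not part of nfs-utils.

```shell
#!/bin/sh
# rpc_registered NAME: succeed if NAME appears in the service column (5th field)
# of `rpcinfo -p` output read from stdin.
rpc_registered() {
  awk -v svc="$1" '$5 == svc { found = 1 } END { exit (found ? 0 : 1) }'
}

# Example use on the server (rpcbind must be running for rpcinfo to answer):
#   rpcinfo -p localhost | rpc_registered portmapper && echo "RPC is up"
#   rpcinfo -p localhost | rpc_registered nfs        && echo "NFS is registered"
```

When rpcbind is down, rpcinfo writes the "can't contact portmapper" error to stderr and prints nothing on stdout, so the check simply fails.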

2.4 Setting NSF at Service

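/etc/exports is the NFS server's configuration file; edit it with vim.

The client field can be a single IP address, which restricts /data to that one client, or a network segment such as 192.168.98.0/24, which opens it to every host in that subnet. We usually use a network segment.

The options include:

ro: read-only

rw: read-write

sync: the server commits writes to disk before replying to the client

Separate multiple options with commas, e.g. rw,sync.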

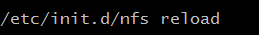

Then reload the NFS service so the new exports take effect.

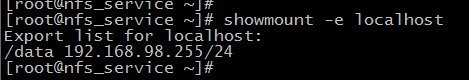

Next, check the export list of the NFS server (e.g. showmount -e localhost).

At this point, the server is ready.

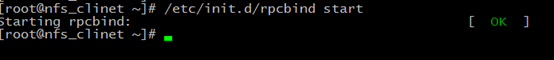
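A small helper makes the /etc/exports line format explicit. This is a sketch only; export_line is a hypothetical name, and the network 192.168.98.0/24 is an assumed example based on the addresses used in this guide.

```shell
#!/bin/sh
# export_line DIR CLIENT OPTIONS: print one /etc/exports entry as DIR CLIENT(OPTIONS).
# There must be NO space between CLIENT and "(": "/data host (rw)" would export
# /data to host with the default options and read-write to every other host.
export_line() {
  dir=$1      # exported directory, e.g. /data
  client=$2   # a single IP or a network segment, e.g. 192.168.98.0/24
  opts=$3     # comma-separated options, e.g. rw,sync
  printf '%s %s(%s)\n' "$dir" "$client" "$opts"
}

export_line /data 192.168.98.0/24 rw,sync   # prints: /data 192.168.98.0/24(rw,sync)

# To apply on the server (as root):
#   export_line /data 192.168.98.0/24 rw,sync >> /etc/exports
#   exportfs -rv
```

`exportfs -rv` re-exports every entry in /etc/exports and prints what is exported, which is an alternative to reloading the nfs service.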

2.5 Setting RPC in Client

switch to NFS Client.

Firstly: start RPC Service.

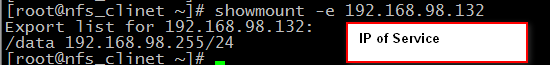

Check NFS information of NFS Server from Client, If show flowing it success.

If it don’t success ,you use ping SERVICE_IP or telnet SERVICE_IP 111(RPC port) to check where error occurs.

you are better make sure two client Linux System is same.

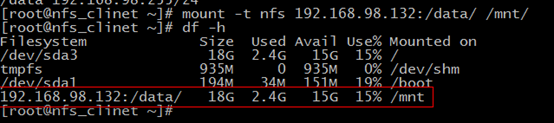

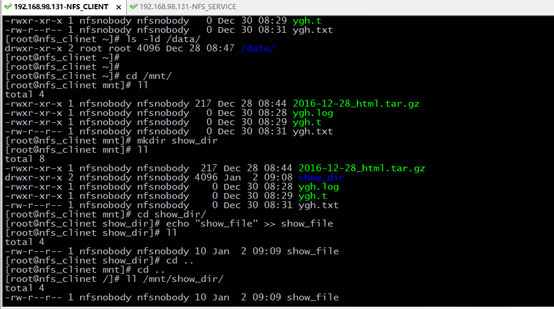
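telnet is often missing on minimal installs, so the port 111 check can also be done with bash's built-in /dev/tcp redirection. A minimal sketch, assuming bash and GNU coreutils `timeout` are installed; port_open is a hypothetical helper name and SERVER_IP is a placeholder.

```shell
#!/bin/sh
# port_open HOST PORT: succeed if a TCP connection to HOST:PORT opens within 3 seconds.
# The connection attempt runs in bash because /dev/tcp is a bash feature.
port_open() {
  timeout 3 bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$1" "$2" 2>/dev/null
}

# Example on a client:
#   ping -c 1 SERVER_IP       || echo "network problem"
#   port_open SERVER_IP 111   || echo "rpcbind unreachable (service down or firewall)"
```

If ping works but port 111 is closed, the problem is usually rpcbind not running on the server or a firewall rule, not the network itself.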

2.6 Mount the Server's Data and Update the Directory Owner

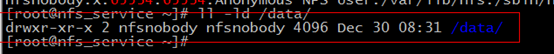

The you should update owner or group of /data at Service, or client will has authority errors.

If you set “w” authority, you need set “nfsnobody” to owner or group of /data and give writable authority.

“nfsnobody” is the default user of NFS.

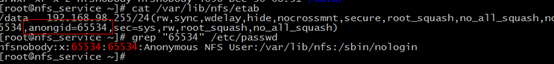

you can see it’s UID in /var/lib/nfs/etab

If show red information, it is OK.

The another client operations is same as first one.

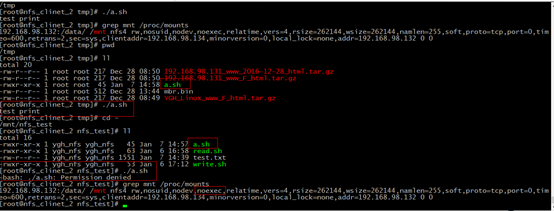
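The UID lookup can be scripted. A minimal sketch, assuming /var/lib/nfs/etab lists each export's options as comma-separated key=value pairs inside parentheses; etab_opt is a hypothetical helper name.

```shell
#!/bin/sh
# etab_opt NAME: print the numeric value of option NAME (e.g. anonuid, anongid)
# from the first etab entry on stdin that contains it.
etab_opt() {
  sed -n "s/.*[(,]$1=\([0-9][0-9]*\).*/\1/p" | head -n 1
}

# Example on the server (as root):
#   etab_opt anonuid < /var/lib/nfs/etab     # UID that squashed users get
#   chown -R nfsnobody:nfsnobody /data       # let that user write to /data
```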

3 run and test

switch to Client.

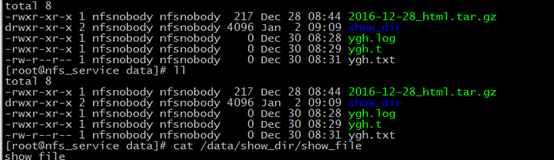

Then switch to Service

If the /date of Service is same as the /mnt of Client, indicates it success.
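The same check can be reduced to "write on the client, look on the server". A sketch only; write_probe and probe_exists are hypothetical helper names, and the file name pattern is an arbitrary choice.

```shell
#!/bin/sh
# write_probe DIR: create a uniquely named file in DIR and print its name.
write_probe() {
  name="nfs_probe_$(uname -n)_$$"
  echo "written at $(date)" > "$1/$name" && printf '%s\n' "$name"
}

# probe_exists DIR NAME: succeed if NAME is a regular file in DIR.
probe_exists() {
  [ -f "$1/$2" ]
}

# On a client:   write_probe /mnt            (note the printed name)
# On the server: probe_exists /data NAME && echo "NFS share works"
```

If the write on the client fails with "Permission denied", revisit the owner of /data on the server.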

4 Optimization

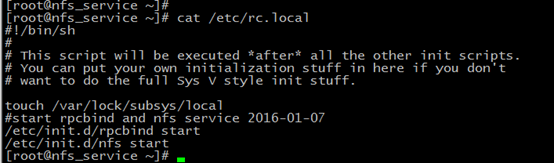

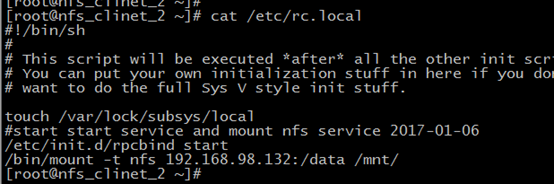

4.1 Start Services at Boot

Put all the RPC and NFS start commands in /etc/rc.local so they are easy to manage.

On the server:

On the clients (it is best to keep both clients identical):
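A minimal sketch of the /etc/rc.local additions, assuming the CentOS 6 init scripts /etc/init.d/rpcbind and /etc/init.d/nfs and the server address 192.168.98.132 used elsewhere in this guide:

```shell
# --- appended to /etc/rc.local on the server ---
/etc/init.d/rpcbind start    # RPC must be up before NFS registers with it
/etc/init.d/nfs start

# --- appended to /etc/rc.local on each client ---
/etc/init.d/rpcbind start
/bin/mount -t nfs 192.168.98.132:/data /mnt
```

On CentOS 6, `chkconfig rpcbind on` and `chkconfig nfs on` achieve the same boot-time start; rc.local simply keeps every NFS-related command in one file.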

4.2 Tune /etc/exports

We want every client to be able to create, view, modify, and delete files, so all client access should be performed as the same user. The following options do this:

all_squash: map every client user (including root) to the anonymous UID/GID; if anonuid is not given, the default nfsnobody is used.

anonuid: the UID that squashed users are mapped to

anongid: the GID that squashed users are mapped to

This also improves security, because no client user keeps its own identity on the server.

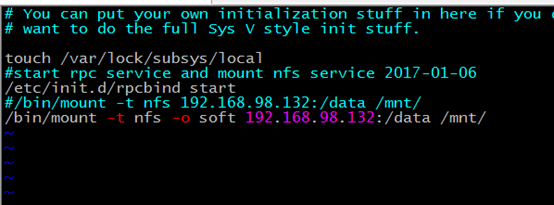
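An /etc/exports entry using these options might look like the sketch below. The network 192.168.98.0/24 is an assumed example, and 65534 is assumed to be nfsnobody's UID/GID (true on typical CentOS 6 installs; check with `id nfsnobody`).

```shell
# On the server, as root. Edit /etc/exports so the /data entry reads:
#
#   /data 192.168.98.0/24(rw,sync,all_squash,anonuid=65534,anongid=65534)
#
vi /etc/exports
exportfs -rv                      # re-export and list the active exports
grep '^/data' /var/lib/nfs/etab   # confirm all_squash and anonuid/anongid took effect
```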

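4.3 Use soft Instead of hard

hard: the client retries an NFS request indefinitely until the server responds

soft: the client gives up after a limited number of retries and returns an error to the application

Both are client-side mount options, not export options.

Using soft reduces the coupling between the NFS server and its clients: if the server goes down, processes on the clients are not blocked forever.

So mount the export with the following command and add it to /etc/rc.local:

/bin/mount -t nfs -o soft 192.168.98.132:/data /mnt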
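To confirm the client really mounted with soft, read the options back from /proc/mounts. A sketch; mount_opts and has_opt are hypothetical helper names, and they assume the standard /proc/mounts field order (device, mount point, type, options, ...).

```shell
#!/bin/sh
# mount_opts MOUNTPOINT: print the option string of MOUNTPOINT from /proc/mounts-style input.
mount_opts() {
  awk -v mp="$1" '$2 == mp { print $4; exit }'
}

# has_opt OPT: succeed if OPT (e.g. soft, rsize=262144) is one of the comma-separated options on stdin.
has_opt() {
  tr ',' '\n' | grep -qx -- "$1"
}

# Example on a client:
#   mount_opts /mnt < /proc/mounts | has_opt soft && echo "/mnt is mounted soft"
```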

4.4 rsize, wsize

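rsize: the maximum number of bytes the client reads from the server in a single request

wsize: the maximum number of bytes the client writes to the server in a single request

Test data (read and write speed with different rsize and wsize values):

With rsize=1024,wsize=1024: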

time sh read.sh

real 0m43.792s

user 0m11.669s

sys 0m21.960s

time sh write.sh

20000+0 records in

20000+0 records out

184320000 bytes (184 MB) copied, 64.963 s, 2.8 MB/s

real 1m5.058s

user 0m0.006s

sys 0m6.103s

[root@nfs_clinet_2 nfs_test]# grep mnt /proc/mounts

192.168.98.132:/data/ /mnt nfs4 rw,relatime,vers=4,rsize=1024,wsize=1024,namlen=255,soft,proto=tcp,port=0,timeo=600,retrans=2,sec=sys,clientaddr=192.168.98.134,minorversion=0,local_lock=none,addr=192.168.98.132 0 0

With rsize=262144,wsize=262144:

time sh read.sh

real 0m37.112s

user 0m4.690s

sys 0m25.867s

time sh write.sh

20000+0 records in

20000+0 records out

184320000 bytes (184 MB) copied, 6.63189 s, 27.8 MB/s

real 0m6.645s

user 0m0.002s

sys 0m1.366s

grep mnt /proc/mounts

192.168.98.132:/data/ /mnt nfs4 rw,relatime,vers=4,rsize=262144,wsize=262144,namlen=255,soft,proto=tcp,port=0,timeo=600,retrans=2,sec=sys,clientaddr=192.168.98.134,minorversion=0,local_lock=none,addr=192.168.98.132 0 0

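Clearly, the larger rsize and wsize are, the faster the transfer: write throughput rose from 2.8 MB/s to 27.8 MB/s, and the read script finished in 37.1 s instead of 43.8 s.

On CentOS 6.5 the default rsize and wsize are already good, so we usually do not need to change them.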
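The MB/s figures dd printed can be reproduced from the byte counts and elapsed times above. A sketch; throughput_mb is a hypothetical helper, and it uses 1 MB = 10^6 bytes, as dd does.

```shell
#!/bin/sh
# throughput_mb BYTES SECONDS: print throughput in MB/s with one decimal (1 MB = 10^6 bytes).
throughput_mb() {
  awk -v b="$1" -v s="$2" 'BEGIN { printf "%.1f\n", b / s / 1000000 }'
}

throughput_mb 184320000 64.963    # rsize=wsize=1024   -> 2.8
throughput_mb 184320000 6.63189   # rsize=wsize=262144 -> 27.8
```

For the write test this is a speedup of roughly 9.8 times (64.963 s / 6.63189 s).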

4.5 noatime, nodiratime

noatime: do not update a file's access time (atime) when the file is accessed

nodiratime: do not update a directory's access time when the directory is accessed

If you want faster NFS reads and writes, add these two mount options on the client.

4.6 nosuid, noexec, nodev

nosuid: ignore set-user-ID and set-group-ID bits on the file system

noexec: do not allow binaries or scripts (including shell scripts) on the file system to be executed directly

nodev: do not interpret character or block special devices on the file system

These three are security options that help protect the system from intrusion.

The picture shows the effect of the noexec option.
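Combining the performance options of 4.5 with the security options of 4.6, a client mount could look like the sketch below (server address taken from this guide; the exact option set is an example, not a requirement).

```shell
# On a client, as root: remount /data with soft, performance and security options.
umount /mnt
/bin/mount -t nfs -o soft,noatime,nodiratime,nosuid,noexec,nodev 192.168.98.132:/data /mnt
grep ' /mnt ' /proc/mounts   # check which options the kernel actually applied
```

With noexec in effect, running a script as ./script.sh from /mnt fails with "Permission denied", while sh script.sh still works, because the shell only reads the file.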

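4.7 Kernel Optimization Options

net.core.wmem_default 8388608: default size of the socket send buffer (bytes)

net.core.rmem_default 8388608: default size of the socket receive buffer (bytes)

net.core.wmem_max 16777216: maximum size of the socket send buffer (bytes)

net.core.rmem_max 16777216: maximum size of the socket receive buffer (bytes)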
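One way to make the values above persistent is to add them to /etc/sysctl.conf and reload it. A sketch, run as root on the NFS machines:

```shell
# Persist the socket buffer sizes listed above and load them immediately.
cat >> /etc/sysctl.conf <<'EOF'
net.core.wmem_default = 8388608
net.core.rmem_default = 8388608
net.core.wmem_max = 16777216
net.core.rmem_max = 16777216
EOF
sysctl -p                                    # apply /etc/sysctl.conf
sysctl net.core.rmem_max net.core.wmem_max   # print the current values to verify
```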

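4.8 Advantages and Disadvantages of NFS

Advantages:

1. Easy to get started; the data lives on an ordinary file system.

2. Convenient: quick to deploy and simple to maintain.

3. From a software point of view, the data is reliable and durable, because it lives on an ordinary file system.

Disadvantages:

1. Single point of failure: if the NFS server goes down, no client can access the data.

2. Performance is limited under high concurrency, although it is still adequate at about 20 million per day.

3. Clients are authenticated only by hostname and IP address, so security is modest (usually fine on an internal network).

4. NFS data is transferred in plain text, and data integrity is not verified.

5. Management becomes cumbersome when many clients mount the same NFS export.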

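4.9 Optimization Plan for Production

1. Use SAS or SSD disks with RAID 0 or RAID 10.

2. Server side: export with all_squash,async for the best performance.

3. Client side: reasonable rsize and wsize values, plus noatime,nodiratime.

4. Kernel tuning: apply the net.core.wmem_default, net.core.rmem_default, net.core.wmem_max and net.core.rmem_max settings from 4.7.

The best optimization, however, is to put a cache in front of NFS rather than relying only on system tuning.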