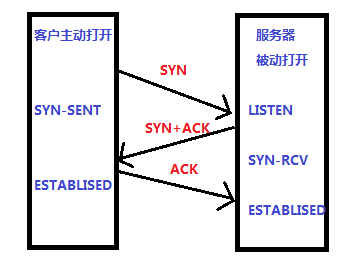

1. The TCP three-way handshake for connection setup

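First, the overall flow. For a client to set up reliable communication with a server, it must go through a three-way handshake. The server sits in the LISTEN state, waiting for client requests. When the client wants to communicate, it actively initiates the connection (the three-way handshake), which goes as follows.

Step 1: the client sends a SYN packet (seq=j) to the server and enters the SYN_SENT state, waiting for the server to confirm.

Step 2: the server receives the SYN and must acknowledge it (ack=j+1). At the same time it sends its own SYN (seq=k), so the reply is a combined SYN+ACK packet, and the server enters the SYN_RECV state.

Step 3: the client receives the SYN+ACK and sends an acknowledgment ACK (ack=k+1) to the server. After sending it, the client is in the established (ESTABLISHED) state.

Note: at this point the server is not yet in the established state. Only after it receives the client's ACK does the server move to ESTABLISHED (TCP connection established), and that completes the three-way handshake.

In short, during the three-way handshake the client sends two packets and the server sends one. Why it takes exactly three is not explained in detail here.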
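The state changes above can be made concrete with a small user-space model of the two endpoints. This is only a sketch of the successful path. The enum names imitate the TCP state names used in the text, and `next_state`/`reply_for` are hypothetical helpers. RST, retransmission and simultaneous open are deliberately left out.

```c
/* Simplified subset of TCP states, named after the ones in the text. */
enum demo_state { ST_CLOSED, ST_LISTEN, ST_SYN_SENT, ST_SYN_RECV, ST_ESTABLISHED };

/* Events: the application calling connect(), or a segment arriving. */
enum demo_event { EV_CONNECT, EV_SYN, EV_SYNACK, EV_ACK };

/* Segment sent in response (SEG_NONE = nothing is sent). */
enum demo_seg { SEG_NONE, SEG_SYN, SEG_SYNACK, SEG_ACK };

/* State after handling `ev` in state `s` (successful handshake only). */
enum demo_state next_state(enum demo_state s, enum demo_event ev)
{
    if (s == ST_CLOSED   && ev == EV_CONNECT) return ST_SYN_SENT;    /* client, step 1 */
    if (s == ST_LISTEN   && ev == EV_SYN)     return ST_SYN_RECV;    /* server, step 2 */
    if (s == ST_SYN_SENT && ev == EV_SYNACK)  return ST_ESTABLISHED; /* client, step 3 */
    if (s == ST_SYN_RECV && ev == EV_ACK)     return ST_ESTABLISHED; /* server done */
    return s;   /* anything else is out of scope for this sketch */
}

/* Segment the endpoint sends while handling `ev` in state `s`. */
enum demo_seg reply_for(enum demo_state s, enum demo_event ev)
{
    if (s == ST_CLOSED   && ev == EV_CONNECT) return SEG_SYN;
    if (s == ST_LISTEN   && ev == EV_SYN)     return SEG_SYNACK;
    if (s == ST_SYN_SENT && ev == EV_SYNACK)  return SEG_ACK;
    return SEG_NONE;    /* the server's final transition sends nothing */
}
```

Feeding the events in handshake order reproduces the transitions listed above. The client emits SYN and then ACK, while the server emits only the SYN+ACK, which is the "two packets vs. one packet" split.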

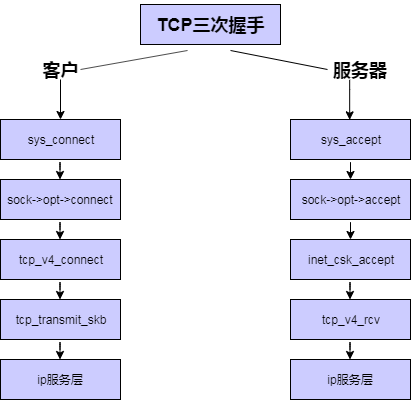

2. How the TCP three-way handshake executes in the Linux kernel

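From what we covered in class, the three-way handshake, seen from the user program, is the work done behind the scenes when the client's connect and the server's accept establish a connection. At the kernel socket-interface layer, these two socket APIs correspond to sys_connect and sys_accept. Those functions in turn go through the function pointers sock->ops->connect and sock->ops->accept. For TCP, these pointers lead to tcp_v4_connect and inet_csk_accept (via inet_stream_connect and inet_accept).

tcp_v4_connect triggers the TCP send path, which ends in tcp_transmit_skb, the function that hands packets down to the IP layer. inet_csk_accept waits on the receive side. There, incoming data is handled by tcp_v4_rcv, the TCP entry point: packets passed up from the IP layer enter TCP through this function.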
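The two-level dispatch described above (the socket layer calls through `sock->ops`, then the protocol layer through `sk->sk_prot`) is just tables of function pointers. Below is a toy user-space model of it. The `demo_` structs are heavily simplified stand-ins that only imitate the roles of the kernel's `struct proto_ops` and `struct proto`; they are not the real definitions.

```c
#include <string.h>

struct demo_sock;
struct demo_proto {                     /* imitates struct proto (sk->sk_prot) */
    int (*connect)(struct demo_sock *sk);
};
struct demo_sock {
    const struct demo_proto *sk_prot;
    char trace[128];                    /* records which layers were visited */
};

struct demo_socket;
struct demo_proto_ops {                 /* imitates struct proto_ops (sock->ops) */
    int (*connect)(struct demo_socket *sock);
};
struct demo_socket {
    const struct demo_proto_ops *ops;
    struct demo_sock *sk;
};

/* Protocol layer: plays the role of tcp_v4_connect. */
static int demo_tcp_v4_connect(struct demo_sock *sk)
{
    strcat(sk->trace, "tcp_v4_connect");
    return 0;
}

/* Socket layer: plays the role of inet_stream_connect, which forwards
 * to the protocol through sk->sk_prot->connect. */
static int demo_inet_stream_connect(struct demo_socket *sock)
{
    strcat(sock->sk->trace, "inet_stream_connect>");
    return sock->sk->sk_prot->connect(sock->sk);
}

const struct demo_proto demo_tcp_prot = { .connect = demo_tcp_v4_connect };
const struct demo_proto_ops demo_inet_stream_ops = { .connect = demo_inet_stream_connect };

/* Plays the role of __sys_connect: it only knows about sock->ops. */
int demo_sys_connect(struct demo_socket *sock)
{
    return sock->ops->connect(sock);
}
```

Suppose `demo_sys_connect` is called on a socket whose `ops` is `&demo_inet_stream_ops` and whose `sk` has `sk_prot` set to `&demo_tcp_prot` and an empty `trace`. The call returns 0 and leaves `trace` as `inet_stream_connect>tcp_v4_connect`. The generic layer never names TCP directly, which is what lets the same `connect()` path serve other protocols.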

Roughly, it looks like the figure below.

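From the analysis above, we now know the rough flow of TCP sending and receiving on the client and the server:

- The client's TCP layer calls tcp_transmit_skb to send the server a TCP segment with SYN set.
- The server's TCP layer processes the incoming SYN in tcp_v4_rcv and calls the relevant functions to send a SYN+ACK back to the client.
- The client's TCP layer likewise receives that packet in tcp_v4_rcv, processes it, and calls the relevant functions to send the ACK to the server.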

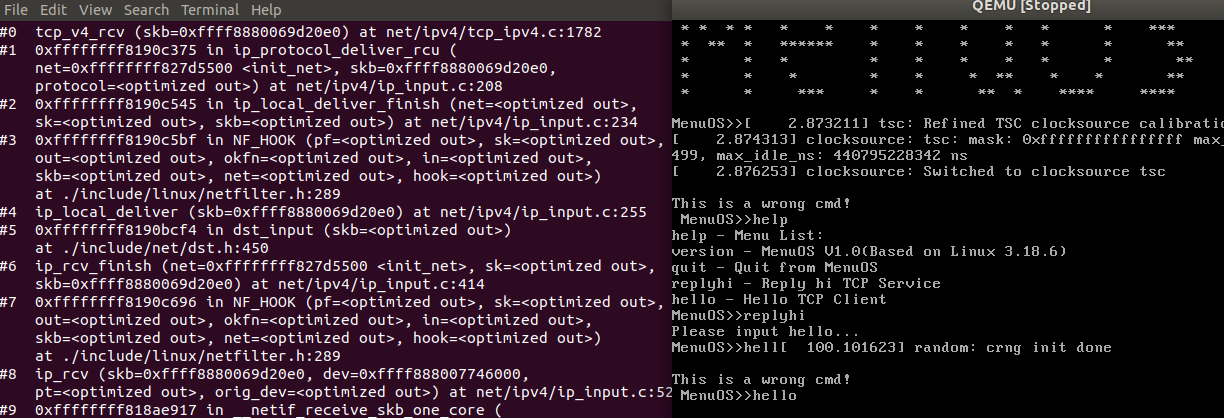

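So how exactly does the three-way handshake execute in the kernel, and which kernel functions does it call? Next we use gdb to watch the kernel call stack and see what the process looks like.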

3. Using gdb to examine the kernel call stack of the three-way handshake

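As usual, open a terminal and enter the command below to start MenuOS. We will run our network program (replyhi/hello) on it to analyze the TCP three-way handshake in the Linux kernel.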

```shell
$ qemu -kernel ~/kernel/linux-5.0.1/arch/x86/boot/bzImage -initrd ~/kernel/linuxnet/rootfs.img -append nokaslr -s
```

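Open another terminal, start the kernel debugger gdb, load the kernel image's symbol table, connect to QEMU's gdb server, and set a breakpoint. Here the breakpoint goes on tcp_v4_rcv. From the analysis above, the first time tcp_v4_rcv runs should be during the first handshake, when the server receives the client's SYN. Let's check whether that is the case and what happens inside.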

```
(gdb) b tcp_v4_rcv
Breakpoint 1 at 0xffffffff81936870: file net/ipv4/tcp_ipv4.c, line 1782.
(gdb) c
Continuing.

Breakpoint 1, tcp_v4_rcv (skb=0xffff8880069d20e0) at net/ipv4/tcp_ipv4.c:1782
1782 {
(gdb) bt
#0 tcp_v4_rcv (skb=0xffff8880069d20e0) at net/ipv4/tcp_ipv4.c:1782
#1 0xffffffff8190c375 in ip_protocol_deliver_rcu (
    net=0xffffffff827d5500 <init_net>, skb=0xffff8880069d20e0,
    protocol=<optimized out>) at net/ipv4/ip_input.c:208
#2 0xffffffff8190c545 in ip_local_deliver_finish (net=<optimized out>,
    sk=<optimized out>, skb=<optimized out>) at net/ipv4/ip_input.c:234
#3 0xffffffff8190c5bf in NF_HOOK (pf=<optimized out>, sk=<optimized out>,
    out=<optimized out>, okfn=<optimized out>, in=<optimized out>,
    skb=<optimized out>, net=<optimized out>, hook=<optimized out>)
    at ./include/linux/netfilter.h:289
#4 ip_local_deliver (skb=0xffff8880069d20e0) at net/ipv4/ip_input.c:255
#5 0xffffffff8190bcf4 in dst_input (skb=<optimized out>)
    at ./include/net/dst.h:450
#6 ip_rcv_finish (net=0xffffffff827d5500 <init_net>, sk=<optimized out>,
    skb=0xffff8880069d20e0) at net/ipv4/ip_input.c:414
#7 0xffffffff8190c696 in NF_HOOK (pf=<optimized out>, sk=<optimized out>,
    out=<optimized out>, okfn=<optimized out>, in=<optimized out>,
    skb=<optimized out>, net=<optimized out>, hook=<optimized out>)
    at ./include/linux/netfilter.h:289
#8 ip_rcv (skb=0xffff8880069d20e0, dev=0xffff888007746000,
    pt=<optimized out>, orig_dev=<optimized out>) at net/ipv4/ip_input.c:524
#9 0xffffffff818ae917 in __netif_receive_skb_one_core (
    skb=0xffff8880069d20e0, pfmemalloc=<optimized out>) at net/core/dev.c:4973
#10 0xffffffff818ae978 in __netif_receive_skb (skb=<optimized out>)
    at net/core/dev.c:5083
#11 0xffffffff818aea64 in process_backlog (napi=0xffff888007823d50, quota=64)
    at net/core/dev.c:5923
#12 0xffffffff818af110 in napi_poll (repoll=<optimized out>, n=<optimized out>)
    at net/core/dev.c:6346
#13 net_rx_action (h=<optimized out>) at net/core/dev.c:6412
#14 0xffffffff81e000e4 in __do_softirq () at kernel/softirq.c:292
#15 0xffffffff81c00dba in do_softirq_own_stack ()
    at arch/x86/entry/entry_64.S:1027
#16 0xffffffff8109b9c4 in do_softirq () at kernel/softirq.c:337
#17 0xffffffff8109ba35 in do_softirq () at kernel/softirq.c:329
#18 __local_bh_enable_ip (ip=<optimized out>, cnt=<optimized out>)
    at kernel/softirq.c:189
#19 0xffffffff819100a3 in local_bh_enable ()
    at ./include/linux/bottom_half.h:32
#20 rcu_read_unlock_bh () at ./include/linux/rcupdate.h:696
#21 ip_finish_output2 (net=<optimized out>, sk=<optimized out>,
    skb=0xffff8880069d20e0) at net/ipv4/ip_output.c:231
#22 0xffffffff81910dfe in ip_finish_output (net=0xffffffff827d5500 <init_net>,
    sk=0xffff88800619f700, skb=0xffff8880069d20e0) at net/ipv4/ip_output.c:317
#23 0xffffffff819125dd in NF_HOOK_COND (pf=<optimized out>,
    hook=<optimized out>, in=<optimized out>, okfn=<optimized out>,
    cond=<optimized out>, out=<optimized out>, skb=<optimized out>,
    sk=<optimized out>, net=<optimized out>) at ./include/linux/netfilter.h:278
#24 ip_output (net=0xffff8880069d20e0, sk=0x6 <irq_stack_union+6>,
    skb=0xffff8880069d20e0) at net/ipv4/ip_output.c:405
#25 0xffffffff81911d2b in dst_output (skb=<optimized out>, sk=<optimized out>,
    net=<optimized out>) at ./include/net/dst.h:444
#26 ip_local_out (net=0xffffffff827d5500 <init_net>, sk=0xffff88800619f700,
    skb=0xffff8880069d20e0) at net/ipv4/ip_output.c:124
#27 0xffffffff81912075 in __ip_queue_xmit (sk=0xffff8880069d20e0,
    skb=0x6 <irq_stack_union+6>, fl=<optimized out>, tos=<optimized out>)
    at net/ipv4/ip_output.c:505
#28 0xffffffff81932f80 in ip_queue_xmit (sk=<optimized out>,
    skb=<optimized out>, fl=<optimized out>) at ./include/net/ip.h:198
#29 0xffffffff8192cca3 in __tcp_transmit_skb (sk=0xffff88800619f700,
    skb=0xffff8880069d20e0, clone_it=<optimized out>,
    gfp_mask=<optimized out>, rcv_nxt=<optimized out>)
    at net/ipv4/tcp_output.c:1160
#30 0xffffffff8192ed7d in tcp_transmit_skb (gfp_mask=<optimized out>,
    clone_it=<optimized out>, skb=<optimized out>, sk=<optimized out>)
    at net/ipv4/tcp_output.c:1176
#31 tcp_connect (sk=0xffff88800619f700) at net/ipv4/tcp_output.c:3535
#32 0xffffffff819351f9 in tcp_v4_connect (sk=0xffff88800619f700,
    uaddr=0xffffc90000043df8, addr_len=<optimized out>)
    at net/ipv4/tcp_ipv4.c:315
#33 0xffffffff8194eac9 in __inet_stream_connect (sock=0xffff888006462500,
    uaddr=0x6 <irq_stack_union+6>, addr_len=6, flags=<optimized out>,
    is_sendmsg=<optimized out>) at net/ipv4/af_inet.c:655
#34 0xffffffff8194ec4b in inet_stream_connect (sock=0xffff888006462500,
    uaddr=0xffffc90000043df8, addr_len=16, flags=2) at net/ipv4/af_inet.c:719
#35 0xffffffff8188b673 in __sys_connect (fd=<optimized out>,
    uservaddr=0xffd431ec, addrlen=16) at net/socket.c:1663
#36 0xffffffff818eb977 in __do_compat_sys_socketcall (args=<optimized out>,
    call=<optimized out>) at net/compat.c:869
#37 __se_compat_sys_socketcall (args=<optimized out>, call=<optimized out>)
    at net/compat.c:838
#38 __ia32_compat_sys_socketcall (regs=<optimized out>) at net/compat.c:838
#39 0xffffffff8100450b in do_syscall_32_irqs_on (regs=<optimized out>)
    at arch/x86/entry/common.c:326
#40 do_fast_syscall_32 (regs=0xffff8880069d20e0) at arch/x86/entry/common.c:397
#41 0xffffffff81c01631 in entry_SYSCALL_compat ()
    at arch/x86/entry/entry_64_compat.S:257
#42 0x0000000000000000 in ?? ()
```

Emm, that's way too much, and it looks a bit complicated. Let's sort it out a little. The main stages are these:

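First, the system call goes through the socket multiplexer sys_socketcall and reaches __sys_connect. Here it is the 32-bit compat variant, __ia32_compat_sys_socketcall, because the test program is a 32-bit binary. We analyzed this part in the previous lab.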

Next:

inet_stream_connect-> __inet_stream_connect-> tcp_v4_connect-> tcp_connect-> tcp_transmit_skb-> __tcp_transmit_skb

What does this stage do? We'll go through the source in a moment.

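Right after that:

ip_queue_xmit-> __ip_queue_xmit-> ip_local_out-> NF_HOOK_COND-> ip_finish_output-> rcu_read_unlock_bh-> local_bh_enable

-> __local_bh_enable_ip-> ...(too many frames in between, omitted)-> ip_rcv_finish

This stage is mostly IP-layer work: adding headers, choosing the route, checking the packet, the transmit mechanism, protocol information, fragmentation, and handing the data to the NIC or up to TCP. We'll skip it for now.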

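Finally:

ip_local_deliver-> NF_HOOK-> ip_local_deliver_finish-> ip_protocol_deliver_rcu-> tcp_v4_rcv

After the IP layer has received and processed the data, it passes the data up to TCP through TCP's entry point, tcp_v4_rcv.

Why do the send path and the receive path appear in a single stack? Client and server run in the same MenuOS instance, so the SYN goes over the loopback device. The loopback driver only queues the packet and raises the receive softirq. When ip_finish_output2 re-enables bottom halves (local_bh_enable, frame #19), the pending softirq runs right away (process_backlog, frame #11) and delivers the SYN to the receive path on the same stack.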

4. Source analysis of the call path

Continuing from the above, let's analyze the TCP-layer part of the first handshake:

inet_stream_connect-> __inet_stream_connect-> tcp_v4_connect-> tcp_connect-> tcp_transmit_skb-> __tcp_transmit_skb

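The client first calls connect to try to establish a connection with the server. The system call leads to the kernel implementation __sys_connect, whose source is shown below. Here sock->ops points to inet_stream_ops, so sock->ops->connect calls inet_stream_connect(). inet_stream_connect() is a thin wrapper around __inet_stream_connect().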

```c
// net/socket.c
int __sys_connect(int fd, struct sockaddr __user *uservaddr, int addrlen)
{
    ...
    /* The main work of __sys_connect:
     * this function pointer points to inet_stream_connect().
     */
    err = sock->ops->connect(sock, (struct sockaddr *)&address, addrlen,
                             sock->file->f_flags);
    ...
}
```

inet_stream_connect() is a thin wrapper around __inet_stream_connect():

```c
// net/ipv4/af_inet.c
int inet_stream_connect(struct socket *sock, struct sockaddr *uaddr,
                        int addr_len, int flags)
{
    int err;

    lock_sock(sock->sk);
    err = __inet_stream_connect(sock, uaddr, addr_len, flags, 0);
    release_sock(sock->sk);
    return err;
}
EXPORT_SYMBOL(inet_stream_connect);
```

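__inet_stream_connect mainly does two things:

- calls tcp_v4_connect to start the connection to the server and send the SYN;

- gets the connect timeout timeo; if timeo is non-zero, it calls inet_wait_for_connect and waits until the connection succeeds or times out.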

```c
// net/ipv4/af_inet.c
int __inet_stream_connect(struct socket *sock, struct sockaddr *uaddr,
                          int addr_len, int flags, int is_sendmsg)
{
    struct sock *sk = sock->sk;
    int err;
    long timeo;
    ...
    switch (sock->state) {
    default:
        err = -EINVAL;
        goto out;
    case SS_CONNECTED:      /* already connected to the peer */
        err = -EISCONN;
        goto out;
    case SS_CONNECTING:     /* a connection attempt is in progress */
        if (inet_sk(sk)->defer_connect)
            err = is_sendmsg ? -EINPROGRESS : -EISCONN;
        else
            err = -EALREADY;
        /* Fall out of switch with err, set for this state */
        break;
    case SS_UNCONNECTED:    /* connect is only allowed in this state */
        err = -EISCONN;
        if (sk->sk_state != TCP_CLOSE)
            goto out;

        if (BPF_CGROUP_PRE_CONNECT_ENABLED(sk)) {
            err = sk->sk_prot->pre_connect(sk, uaddr, addr_len);
            if (err)
                goto out;
        }

        /* Call the transport-layer connect, tcp_v4_connect, which starts
         * the connection to the server and sends the SYN */
        err = sk->sk_prot->connect(sk, uaddr, addr_len);
        if (err < 0)
            goto out;

        /* SYN sent: mark the socket SS_CONNECTING */
        sock->state = SS_CONNECTING;

        if (!err && inet_sk(sk)->defer_connect)
            goto out;

        /* For a non-blocking connect the default return value is
         * EINPROGRESS, meaning the connection is in progress */
        err = -EINPROGRESS;
        break;
    }

    /* Get the connect timeout; in non-blocking mode it is 0 and we return without waiting */
    timeo = sock_sndtimeo(sk, flags & O_NONBLOCK);
    ...
    /* After the SYN is sent the state is usually one of these two, but a
     * very fast connection may already have moved past them */
    if ((1 << sk->sk_state) & (TCPF_SYN_SENT | TCPF_SYN_RECV)) {
        int writebias = (sk->sk_protocol == IPPROTO_TCP) &&
                        tcp_sk(sk)->fastopen_req &&
                        tcp_sk(sk)->fastopen_req->data ? 1 : 0;

        /* Error code is set above */
        /* inet_wait_for_connect waits until the connection completes or times out */
        if (!timeo || !inet_wait_for_connect(sk, timeo, writebias))
            goto out;

        err = sock_intr_errno(timeo);
        if (signal_pending(current))
            goto out;
    }
    ...
}
```
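The `switch (sock->state)` above decides what `connect()` starts out returning before anything is waited on. Below is a user-space sketch of just that decision. It assumes a simplified `demo_` enum and a hypothetical function name, and it ignores `defer_connect`, the BPF pre-connect hook and the waiting logic.

```c
#include <errno.h>
#include <stdbool.h>

enum demo_ss { DEMO_SS_UNCONNECTED, DEMO_SS_CONNECTING, DEMO_SS_CONNECTED };

/* Mirrors the switch in __inet_stream_connect (simplified).
 *   tcp_closed: whether sk->sk_state == TCP_CLOSE
 *   proto_err:  what sk->sk_prot->connect() would return (0 or -errno)
 * Returns the error code after the switch; -EINPROGRESS means "SYN sent". */
int demo_connect_state(enum demo_ss *state, bool tcp_closed, int proto_err)
{
    switch (*state) {
    case DEMO_SS_CONNECTED:
        return -EISCONN;                /* already connected */
    case DEMO_SS_CONNECTING:
        return -EALREADY;               /* an attempt is already in progress */
    case DEMO_SS_UNCONNECTED:
        if (!tcp_closed)
            return -EISCONN;
        if (proto_err < 0)
            return proto_err;           /* e.g. no route in tcp_v4_connect */
        *state = DEMO_SS_CONNECTING;    /* the SYN has been sent */
        return -EINPROGRESS;
    }
    return -EINVAL;                     /* the switch's default case */
}
```

A non-blocking caller sees this `-EINPROGRESS` directly, because `timeo` is 0 and the function jumps to `out`. A second `connect()` on the same socket then gets `-EALREADY`. A blocking caller instead sleeps in `inet_wait_for_connect` first.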

Next comes tcp_v4_connect, whose main job is to start the connection to the server and send the SYN. The code is:

```c
// net/ipv4/tcp_ipv4.c
int tcp_v4_connect(struct sock *sk, struct sockaddr *uaddr, int addr_len)
{
    struct sockaddr_in *usin = (struct sockaddr_in *)uaddr;
    struct inet_sock *inet = inet_sk(sk);
    struct tcp_sock *tp = tcp_sk(sk);
    __be16 orig_sport, orig_dport;
    __be32 daddr, nexthop;
    struct flowi4 *fl4;
    struct rtable *rt;
    int err;
    struct ip_options_rcu *inet_opt;
    struct inet_timewait_death_row *tcp_death_row = &sock_net(sk)->ipv4.tcp_death_row;

    /* Validate the destination address length and address family */
    if (addr_len < sizeof(struct sockaddr_in))
        return -EINVAL;

    if (usin->sin_family != AF_INET)
        return -EAFNOSUPPORT;

    /* Set both the next hop and the destination to the address passed in by the user */
    nexthop = daddr = usin->sin_addr.s_addr;
    inet_opt = rcu_dereference_protected(inet->inet_opt,
                                         lockdep_sock_is_held(sk));
    if (inet_opt && inet_opt->opt.srr) {
        if (!daddr)
            return -EINVAL;
        nexthop = inet_opt->opt.faddr;
    }

    orig_sport = inet->inet_sport;
    orig_dport = usin->sin_port;
    fl4 = &inet->cork.fl.u.ip4;
    /* Look up the route to the destination based on the next hop and related info */
    rt = ip_route_connect(fl4, nexthop, inet->inet_saddr,
                          RT_CONN_FLAGS(sk), sk->sk_bound_dev_if,
                          IPPROTO_TCP,
                          orig_sport, orig_dport, sk);
    if (IS_ERR(rt)) {
        err = PTR_ERR(rt);
        if (err == -ENETUNREACH)
            IP_INC_STATS(sock_net(sk), IPSTATS_MIB_OUTNOROUTES);
        return err;
    }

    /* TCP cannot use multicast or broadcast routes */
    if (rt->rt_flags & (RTCF_MULTICAST | RTCF_BROADCAST)) {
        ip_rt_put(rt);
        return -ENETUNREACH;
    }
    ...
    tcp_set_state(sk, TCP_SYN_SENT);
    /* Bind the source address and port, and add the socket to the connection hash table */
    err = inet_hash_connect(tcp_death_row, sk);
    ...
    if (likely(!tp->repair)) {
        /* Generate the initial sequence number with a keyed (cryptographic) hash */
        if (!tp->write_seq)
            tp->write_seq = secure_tcp_seq(inet->inet_saddr,
                                           inet->inet_daddr,
                                           inet->inet_sport,
                                           usin->sin_port);
        tp->tsoffset = secure_tcp_ts_off(sock_net(sk),
                                         inet->inet_saddr,
                                         inet->inet_daddr);
    }
    ...
    err = tcp_connect(sk);      /* send the SYN */
    if (err)
        goto failure;

    return 0;
    ...
}
EXPORT_SYMBOL(tcp_v4_connect);
```

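- The first part of the function determines the socket's source port and the destination IP and port. The destination IP and port come from the arguments of the connect system call.

- secure_tcp_seq generates the initial sequence number (ISN) with a keyed hash that also depends on the time. This makes a connection's ISN hard to guess, and even repeated connections with the same source and destination ports get different ISNs.

- tcp_connect builds and sends the SYN.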
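The idea behind `secure_tcp_seq` can be sketched as "keyed hash of the 4-tuple plus a clock". In the sketch below the hash is FNV-1a, a non-cryptographic stand-in chosen only so the example is self-contained. The kernel uses a keyed SipHash with a boot-time secret, in `net/core/secure_seq.c`. The clock term assumes the kernel's choice of one tick every 64 ns (`ktime_get_real_ns() >> 6`). `demo_isn` is a hypothetical name.

```c
#include <stdint.h>

/* FNV-1a over a byte buffer. NOT cryptographic: a stand-in for the
 * keyed SipHash used by the kernel. */
static uint32_t fnv1a(const uint8_t *p, unsigned n, uint32_t h)
{
    for (unsigned i = 0; i < n; i++) {
        h ^= p[i];
        h *= 16777619u;
    }
    return h;
}

/* Hypothetical ISN generator: hash(secret, saddr, daddr, ports) + clock.
 * now_ns >> 6 makes the ISN advance by one every 64 ns (wrapping at 2^32). */
uint32_t demo_isn(uint32_t secret, uint32_t saddr, uint32_t daddr,
                  uint16_t sport, uint16_t dport, uint64_t now_ns)
{
    uint32_t words[4] = { secret, saddr, daddr,
                          ((uint32_t)sport << 16) | dport };
    uint32_t h = fnv1a((const uint8_t *)words, sizeof(words), 2166136261u);
    return h + (uint32_t)(now_ns >> 6);
}
```

The hash term keeps an outsider who does not know the secret from predicting the ISN. The clock term makes the same 4-tuple, reused a little later, start from a different sequence number: 6400 ns later it is exactly 100 higher.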

Let's continue with the tcp_connect function it calls:

```c
// net/ipv4/tcp_output.c
int tcp_connect(struct sock *sk)
{
    struct tcp_sock *tp = tcp_sk(sk);
    struct sk_buff *buff;
    int err;

    tcp_call_bpf(sk, BPF_SOCK_OPS_TCP_CONNECT_CB, 0, NULL);

    if (inet_csk(sk)->icsk_af_ops->rebuild_header(sk))
        return -EHOSTUNREACH; /* Routing failure or similar. */

    tcp_connect_init(sk);       /* initialize the TCP connection parameters */

    if (unlikely(tp->repair)) {
        tcp_finish_connect(sk, NULL);
        return 0;
    }

    /* Allocate an skb to carry the TCP segment */
    buff = sk_stream_alloc_skb(sk, 0, sk->sk_allocation, true);
    if (unlikely(!buff))
        return -ENOBUFS;

    /* Initialize a data-less SYN; its seq is tp->write_seq, which is then
     * incremented because the SYN consumes one sequence number */
    tcp_init_nondata_skb(buff, tp->write_seq++, TCPHDR_SYN);
    tcp_mstamp_refresh(tp);
    tp->retrans_stamp = tcp_time_stamp(tp);
    tcp_connect_queue_skb(sk, buff);    /* put the segment on the send queue */
    tcp_ecn_send_syn(sk, buff);
    tcp_rbtree_insert(&sk->tcp_rtx_queue, buff);

    /* Send off SYN; include data in Fast Open. */
    /* With TCP Fast Open (TFO), tcp_send_syn_data sends a SYN carrying data;
     * otherwise tcp_transmit_skb sends the data-less SYN built above */
    err = tp->fastopen_req ? tcp_send_syn_data(sk, buff) :
          tcp_transmit_skb(sk, buff, 1, sk->sk_allocation);
    if (err == -ECONNREFUSED)
        return err;

    /* We change tp->snd_nxt after the tcp_transmit_skb() call
     * in order to make this packet get counted in tcpOutSegs.
     */
    tp->snd_nxt = tp->write_seq;
    tp->pushed_seq = tp->write_seq;
    buff = tcp_send_head(sk);
    if (unlikely(buff)) {
        tp->snd_nxt = TCP_SKB_CB(buff)->seq;
        tp->pushed_seq = TCP_SKB_CB(buff)->seq;
    }
    TCP_INC_STATS(sock_net(sk), TCP_MIB_ACTIVEOPENS);

    /* Timer for repeating the SYN until an answer. */
    /* Arm the retransmission timer: if no SYN+ACK arrives before it
     * expires, the SYN is retransmitted */
    inet_csk_reset_xmit_timer(sk, ICSK_TIME_RETRANS,
                              inet_csk(sk)->icsk_rto, TCP_RTO_MAX);
    return 0;
}
EXPORT_SYMBOL(tcp_connect);
```
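The `tp->write_seq++` passed to `tcp_init_nondata_skb` reflects the rule that SYN (and FIN) occupy one sequence number even though they carry no payload. Below is a sketch of that bookkeeping. It assumes a cut-down `demo_skb_cb` holding only what matters here; the kernel keeps `seq`/`end_seq` in `TCP_SKB_CB(skb)`. The flag values match the kernel's `TCPHDR_*` constants.

```c
#include <stdint.h>

#define TCPHDR_FIN 0x01
#define TCPHDR_SYN 0x02
#define TCPHDR_ACK 0x10

struct demo_skb_cb {
    uint32_t seq;       /* first sequence number of the segment */
    uint32_t end_seq;   /* seq + payload length + (SYN or FIN ? 1 : 0) */
    uint8_t  tcp_flags;
};

/* Sketch of what tcp_init_nondata_skb() does with the sequence numbers. */
void demo_init_nondata(struct demo_skb_cb *cb, uint32_t seq, uint8_t flags)
{
    cb->tcp_flags = flags;
    cb->seq = seq;
    if (flags & (TCPHDR_SYN | TCPHDR_FIN))
        seq++;          /* SYN and FIN each consume one sequence number */
    cb->end_seq = seq;  /* no payload, so nothing else to add */
}
```

This is why the server's SYN+ACK acknowledges `j+1` rather than `j`. It is also why `tcp_connect` can set `tp->snd_nxt = tp->write_seq` right after sending: `write_seq` already points one past the SYN.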

To sum up, tcp_connect:

- builds a TCP header with the SYN flag set (tcp_init_nondata_skb);

- sends the SYN segment (tcp_transmit_skb);

- arms the retransmission timer so the SYN is resent on timeout (inet_csk_reset_xmit_timer).
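Each time the SYN retransmission timer fires, the RTO doubles, up to `TCP_RTO_MAX`. The sketch below adds up how long `connect()` keeps trying before giving up. It assumes the usual defaults: an initial RTO of 1 s, `TCP_RTO_MAX` of 120 s and `net.ipv4.tcp_syn_retries` = 6. It is an approximation of the kernel's timeout logic, not a copy of it, and `demo_syn_timeout` is a hypothetical name.

```c
/* Total seconds spent waiting for a SYN+ACK: the original SYN plus
 * `retries` retransmissions, each wait doubling the previous one and
 * capped at rto_max. */
unsigned demo_syn_timeout(unsigned retries, unsigned rto_init, unsigned rto_max)
{
    unsigned total = 0, rto = rto_init;

    for (unsigned i = 0; i <= retries; i++) {
        total += rto;                                   /* wait one RTO */
        rto = (2 * rto > rto_max) ? rto_max : 2 * rto;  /* exponential backoff */
    }
    return total;
}
```

With the defaults this gives 1+2+4+8+16+32+64 = 127 s. That is roughly the two minutes a `connect()` to a silent host takes on Linux before failing with a timeout.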

tcp_transmit_skb is a wrapper around __tcp_transmit_skb. The call continues into __tcp_transmit_skb, which sends the SYN segment:

```c
// net/ipv4/tcp_output.c
static int tcp_transmit_skb(struct sock *sk, struct sk_buff *skb, int clone_it,
                            gfp_t gfp_mask)
{
    return __tcp_transmit_skb(sk, skb, clone_it, gfp_mask,
                              tcp_sk(sk)->rcv_nxt);
}
```

The __tcp_transmit_skb function:

```c
// net/ipv4/tcp_output.c
static int __tcp_transmit_skb(struct sock *sk, struct sk_buff *skb,
                              int clone_it, gfp_t gfp_mask, u32 rcv_nxt)
{
    const struct inet_connection_sock *icsk = inet_csk(sk);
    struct inet_sock *inet;
    struct tcp_sock *tp;
    struct tcp_skb_cb *tcb;
    struct tcp_out_options opts;
    unsigned int tcp_options_size, tcp_header_size;
    struct sk_buff *oskb = NULL;
    struct tcp_md5sig_key *md5;
    struct tcphdr *th;
    u64 prior_wstamp;
    int err;

    BUG_ON(!skb || !tcp_skb_pcount(skb));
    tp = tcp_sk(sk);

    /* The original skb stays on the retransmit queue, so transmit a clone
     * (or a private copy if it has already been cloned) */
    if (clone_it) {
        TCP_SKB_CB(skb)->tx.in_flight = TCP_SKB_CB(skb)->end_seq
            - tp->snd_una;
        oskb = skb;

        tcp_skb_tsorted_save(oskb) {
            if (unlikely(skb_cloned(oskb)))
                skb = pskb_copy(oskb, gfp_mask);
            else
                skb = skb_clone(oskb, gfp_mask);
        } tcp_skb_tsorted_restore(oskb);

        if (unlikely(!skb))
            return -ENOBUFS;
    }

    prior_wstamp = tp->tcp_wstamp_ns;
    tp->tcp_wstamp_ns = max(tp->tcp_wstamp_ns, tp->tcp_clock_cache);
    skb->skb_mstamp_ns = tp->tcp_wstamp_ns;

    inet = inet_sk(sk);         /* the AF_INET view of the socket */
    tcb = TCP_SKB_CB(skb);      /* TCP control block kept in skb->cb */
    memset(&opts, 0, sizeof(opts));

    if (unlikely(tcb->tcp_flags & TCPHDR_SYN))     /* is this a SYN? */
        /* SYN options: MSS, timestamps, window scale, SACK-permitted, ... */
        tcp_options_size = tcp_syn_options(sk, skb, &opts, &md5);
    else
        /* options of a regular segment */
        tcp_options_size = tcp_established_options(sk, skb, &opts,
                                                   &md5);
    /* TCP header length = options length + fixed TCP header */
    tcp_header_size = tcp_options_size + sizeof(struct tcphdr);

    /* if no packet is in qdisc/device queue, then allow XPS to select
     * another queue. We can be called from tcp_tsq_handler()
     * which holds one reference to sk.
     *
     * TODO: Ideally, in-flight pure ACK packets should not matter here.
     * One way to get this would be to set skb->truesize = 2 on them.
     */
    skb->ooo_okay = sk_wmem_alloc_get(sk) < SKB_TRUESIZE(1);

    /* If we had to use memory reserve to allocate this skb,
     * this might cause drops if packet is looped back :
     * Other socket might not have SOCK_MEMALLOC.
     * Packets not looped back do not care about pfmemalloc.
     */
    skb->pfmemalloc = 0;

    skb_push(skb, tcp_header_size);
    skb_reset_transport_header(skb);

    skb_orphan(skb);
    skb->sk = sk;
    skb->destructor = skb_is_tcp_pure_ack(skb) ? __sock_wfree : tcp_wfree;
    skb_set_hash_from_sk(skb, sk);
    refcount_add(skb->truesize, &sk->sk_wmem_alloc);

    skb_set_dst_pending_confirm(skb, sk->sk_dst_pending_confirm);

    /* Build TCP header and checksum it. */
    /* (th->check is zeroed here; send_check below computes the checksum) */
    th = (struct tcphdr *)skb->data;
    th->source      = inet->inet_sport;
    th->dest        = inet->inet_dport;
    th->seq         = htonl(tcb->seq);
    th->ack_seq     = htonl(rcv_nxt);
    *(((__be16 *)th) + 6) = htons(((tcp_header_size >> 2) << 12) |
                                  tcb->tcp_flags);

    th->check       = 0;
    th->urg_ptr     = 0;

    /* The urg_mode check is necessary during a below snd_una win probe */
    if (unlikely(tcp_urg_mode(tp) && before(tcb->seq, tp->snd_up))) {
        if (before(tp->snd_up, tcb->seq + 0x10000)) {
            th->urg_ptr = htons(tp->snd_up - tcb->seq);
            th->urg = 1;
        } else if (after(tcb->seq + 0xFFFF, tp->snd_nxt)) {
            th->urg_ptr = htons(0xFFFF);
            th->urg = 1;
        }
    }

    tcp_options_write((__be32 *)(th + 1), tp, &opts);  /* write the options into the segment */
    skb_shinfo(skb)->gso_type = sk->sk_gso_type;
    if (likely(!(tcb->tcp_flags & TCPHDR_SYN))) {
        th->window = htons(tcp_select_window(sk));
        tcp_ecn_send(sk, skb, th, tcp_header_size);
    } else {
        /* RFC1323: The window in SYN & SYN/ACK segments
         * is never scaled.
         */
        th->window = htons(min(tp->rcv_wnd, 65535U));
    }
#ifdef CONFIG_TCP_MD5SIG
    /* Calculate the MD5 hash, as we have all we need now */
    if (md5) {
        sk_nocaps_add(sk, NETIF_F_GSO_MASK);
        tp->af_specific->calc_md5_hash(opts.hash_location,
                                       md5, sk, skb);
    }
#endif

    icsk->icsk_af_ops->send_check(sk, skb);    /* tcp_v4_send_check computes the checksum */

    if (likely(tcb->tcp_flags & TCPHDR_ACK))
        tcp_event_ack_sent(sk, tcp_skb_pcount(skb), rcv_nxt);

    if (skb->len != tcp_header_size) {
        tcp_event_data_sent(tp, sk);
        tp->data_segs_out += tcp_skb_pcount(skb);
        tp->bytes_sent += skb->len - tcp_header_size;
    }

    if (after(tcb->end_seq, tp->snd_nxt) || tcb->seq == tcb->end_seq)
        TCP_ADD_STATS(sock_net(sk), TCP_MIB_OUTSEGS,
                      tcp_skb_pcount(skb));

    tp->segs_out += tcp_skb_pcount(skb);
    /* OK, its time to fill skb_shinfo(skb)->gso_{segs|size} */
    skb_shinfo(skb)->gso_segs = tcp_skb_pcount(skb);
    skb_shinfo(skb)->gso_size = tcp_skb_mss(skb);

    /* Leave earliest departure time in skb->tstamp (skb->skb_mstamp_ns) */

    /* Cleanup our debris for IP stacks */
    memset(skb->cb, 0, max(sizeof(struct inet_skb_parm),
                           sizeof(struct inet6_skb_parm)));

    /* Hand the segment to the lower layer: for IPv4 queue_xmit is ip_queue_xmit */
    err = icsk->icsk_af_ops->queue_xmit(sk, skb, &inet->cork.fl);

    /* A positive value means local congestion (e.g. a qdisc drop): enter CWR,
     * as when an explicit congestion notification is received */
    if (unlikely(err > 0)) {
        tcp_enter_cwr(sk);
        err = net_xmit_eval(err);
    }
    if (!err && oskb) {
        tcp_update_skb_after_send(sk, oskb, prior_wstamp);
        tcp_rate_skb_sent(sk, oskb);
    }
    return err;
}
```
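Two lines in `__tcp_transmit_skb` pack several fields at once.

- `*(((__be16 *)th) + 6) = htons(((tcp_header_size >> 2) << 12) | tcb->tcp_flags)` writes bytes 12-13 of the header: the data offset (header length in 32-bit words) goes in the top 4 bits and the flags below it.
- A SYN advertises `min(rcv_wnd, 65535)` because window scaling is never applied to SYN segments.

Below is a user-space sketch of both. The helper names are hypothetical, and the flag values follow the kernel's `TCPHDR_*` constants.

```c
#include <stdint.h>
#include <string.h>
#include <arpa/inet.h>  /* htons */

#define TCPHDR_SYN 0x02
#define TCPHDR_ACK 0x10

/* Host-order value of header bytes 12-13: doff (4 bits) | reserved | flags. */
uint16_t demo_doff_flags(unsigned tcp_header_size, uint8_t tcp_flags)
{
    return (uint16_t)(((tcp_header_size >> 2) << 12) | tcp_flags);
}

/* Window field of a SYN or SYN+ACK: never scaled, so clamp to 16 bits. */
uint16_t demo_syn_window(uint32_t rcv_wnd)
{
    return (uint16_t)(rcv_wnd < 65535u ? rcv_wnd : 65535u);
}

/* Write both fields into a raw TCP header in network byte order. */
void demo_fill(uint8_t *hdr, unsigned tcp_header_size, uint8_t flags,
               uint32_t rcv_wnd)
{
    uint16_t w12 = htons(demo_doff_flags(tcp_header_size, flags));
    uint16_t w14 = htons(demo_syn_window(rcv_wnd));

    memcpy(hdr + 12, &w12, sizeof(w12));    /* data offset + flags */
    memcpy(hdr + 14, &w14, sizeof(w14));    /* window */
}
```

For example, a 40-byte SYN header (20 fixed bytes plus 20 bytes of options) gives `0xA002`: a data offset of 10 words with only the SYN bit set. A plain 20-byte ACK gives `0x5010`.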

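The main job of __tcp_transmit_skb is to pass the packet to the IP layer. Along the way it:

- initializes the TCP header and related data structures;

- checks clone_it to decide whether to clone the socket buffer: the original stays on the retransmission queue in case it has to be resent, so a clone (or a copy, if it is already cloned) is what goes down the stack;

- builds the TCP options;

- does congestion-control bookkeeping, i.e. how many packets the network should best have in flight (visible here as entering CWR when the lower layer reports local congestion);

- fills in the main TCP header fields: source port, destination port, sequence number, and the window. For a SYN the window is not computed by tcp_select_window; it is simply min(rcv_wnd, 65535), because it is never scaled;

- sends the packet to the IP layer. Note that the state switch to SYN_SENT does not happen here: tcp_v4_connect already called tcp_set_state(sk, TCP_SYN_SENT) before the SYN was built.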
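`icsk->icsk_af_ops->send_check` (tcp_v4_send_check for IPv4) fills in `th->check`, which is why the header code sets it to 0 first. The TCP checksum is the 16-bit one's-complement Internet checksum (RFC 1071). It is computed over a pseudo-header (source address, destination address, protocol, TCP length) followed by the TCP segment.

The sketch below shows only the arithmetic, with hypothetical helper names. The kernel often computes just the pseudo-header part and leaves the rest to checksum offload in the NIC; this sketch ignores that.

```c
#include <stddef.h>
#include <stdint.h>

/* RFC 1071 Internet checksum: one's-complement sum of 16-bit big-endian
 * words (an odd trailing byte is padded with zero), then complemented.
 * `sum` lets a pseudo-header sum be folded in. */
uint16_t demo_inet_csum(const uint8_t *data, size_t len, uint32_t sum)
{
    uint64_t acc = sum;

    while (len > 1) {
        acc += ((uint32_t)data[0] << 8) | data[1];
        data += 2;
        len -= 2;
    }
    if (len)                        /* odd length: pad the last byte */
        acc += (uint32_t)data[0] << 8;
    while (acc >> 16)               /* fold carries back into 16 bits */
        acc = (acc & 0xFFFF) + (acc >> 16);
    return (uint16_t)~acc;
}

/* Sum of the IPv4 pseudo-header (addresses in host order), to be passed
 * as `sum` when checksumming the TCP header plus payload. */
uint32_t demo_pseudo_sum(uint32_t saddr, uint32_t daddr, uint16_t tcp_len)
{
    return (saddr >> 16) + (saddr & 0xFFFF) +
           (daddr >> 16) + (daddr & 0xFFFF) +
           6u /* IPPROTO_TCP */ + tcp_len;
}
```

A receiver checks a segment by running the same sum over the data with the checksum field included. A correct segment yields 0.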

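Emm, at this point the client's TCP layer has finished sending the SYN. The packet travels down through the lower layers to the server's NIC.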

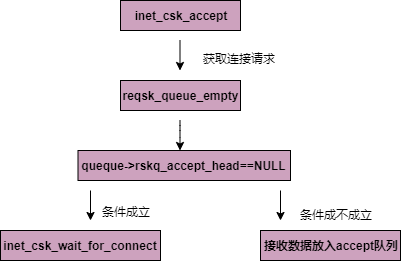

Meanwhile, the server's TCP layer is in inet_csk_accept, waiting for connection requests; in other words, it keeps listening. It runs as follows:

```c
// net/ipv4/inet_connection_sock.c
struct sock *inet_csk_accept(struct sock *sk, int flags, int *err, bool kern)
{
    ...
    if (sk->sk_state != TCP_LISTEN)     /* must be in the LISTEN state */
        goto out_err;

    /* Find already established connection */
    if (reqsk_queue_empty(queue)) {     /* the accept queue is empty */
        long timeo = sock_rcvtimeo(sk, flags & O_NONBLOCK);

        /* If this is a non blocking socket don't sleep */
        error = -EAGAIN;
        if (!timeo)
            goto out_err;

        error = inet_csk_wait_for_connect(sk, timeo);  /* sleep until a connection arrives */
        if (error)
            goto out_err;
    }
    ...
}
EXPORT_SYMBOL(inet_csk_accept);
```

The reqsk_queue_empty function:

```c
// include/net/request_sock.h
static inline bool reqsk_queue_empty(const struct request_sock_queue *queue)
{
    return queue->rskq_accept_head == NULL;    /* no completed connection is queued */
}
```
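`reqsk_queue_empty` only checks whether the accept queue's head pointer is NULL. The queue itself is a singly linked FIFO: besides `rskq_accept_head`, the kernel's `struct request_sock_queue` also keeps a tail pointer, `rskq_accept_tail`, and links entries through `dl_next`.

Below is a user-space sketch of that structure with hypothetical `demo_` names. Enqueueing corresponds to a connection finishing the handshake, and dequeueing to accept() taking it.

```c
#include <stdbool.h>
#include <stddef.h>

struct demo_req {
    int id;                     /* stands in for the established child socket */
    struct demo_req *dl_next;   /* next entry in the FIFO */
};

struct demo_accept_queue {
    struct demo_req *head, *tail;
};

bool demo_queue_empty(const struct demo_accept_queue *q)
{
    return q->head == NULL;     /* same test as reqsk_queue_empty() */
}

/* Handshake completed: append to the tail. */
void demo_queue_add(struct demo_accept_queue *q, struct demo_req *r)
{
    r->dl_next = NULL;
    if (q->head == NULL)
        q->head = r;
    else
        q->tail->dl_next = r;
    q->tail = r;
}

/* accept(): take the oldest completed connection, or NULL if there is none. */
struct demo_req *demo_queue_remove(struct demo_accept_queue *q)
{
    struct demo_req *r = q->head;

    if (r) {
        q->head = r->dl_next;
        if (q->head == NULL)
            q->tail = NULL;
    }
    return r;
}
```

Because connections are taken from the head and added at the tail, accept() returns connections in the order their handshakes completed.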

inet_csk_wait_for_connect waits for a connection request until reqsk_queue_empty returns false, i.e. until icsk->icsk_accept_queue is no longer empty:

```c
// net/ipv4/inet_connection_sock.c
static int inet_csk_wait_for_connect(struct sock *sk, long timeo)
{
    struct inet_connection_sock *icsk = inet_csk(sk);
    DEFINE_WAIT(wait);
    int err;

    for (;;) {      /* keep waiting */
        prepare_to_wait_exclusive(sk_sleep(sk), &wait,
                                  TASK_INTERRUPTIBLE);
        release_sock(sk);
        if (reqsk_queue_empty(&icsk->icsk_accept_queue))
            timeo = schedule_timeout(timeo);
        sched_annotate_sleep();
        lock_sock(sk);
        err = 0;
        if (!reqsk_queue_empty(&icsk->icsk_accept_queue))  /* a connection is queued: leave the loop */
            break;
        err = -EINVAL;
        if (sk->sk_state != TCP_LISTEN)     /* no longer listening: leave as well */
            break;
        err = sock_intr_errno(timeo);       /* interrupted by a signal */
        if (signal_pending(current))
            break;
        err = -EAGAIN;
        if (!timeo)                         /* the timeout expired */
            break;
    }
    finish_wait(sk_sleep(sk), &wait);       /* leave the wait queue */
    return err;
}
```

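inet_csk_accept implements the accept operation for TCP. If the request queue holds no connection that has completed the handshake and the socket is in blocking mode, the caller must sleep on a wait queue until a connection arrives. inet_csk_wait_for_connect does exactly that.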
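The order of the checks inside the `for (;;)` loop of `inet_csk_wait_for_connect` decides what accept() reports after each wake-up. Below, those checks are reduced to a pure function with a hypothetical name, evaluated once per wake-up.

- `DEMO_ERESTARTSYS` stands in for the kernel-internal `ERESTARTSYS` (512), which is not in user-space `<errno.h>`. `sock_intr_errno` returns it when the timeout is infinite (`MAX_SCHEDULE_TIMEOUT`, i.e. `LONG_MAX`) so the syscall can be restarted; with a finite timeout it returns `-EINTR`.
- `DEMO_KEEP_WAITING` is an invented marker for "go around the loop again".

```c
#include <errno.h>
#include <limits.h>
#include <stdbool.h>

#define DEMO_ERESTARTSYS  512       /* kernel-internal errno value */
#define DEMO_MAX_TIMEOUT  LONG_MAX  /* plays MAX_SCHEDULE_TIMEOUT */
#define DEMO_KEEP_WAITING 1         /* hypothetical "sleep again" marker */

/* Evaluate the loop's exit checks in the kernel's order.
 * timeo is the remaining timeout after schedule_timeout() returned. */
int demo_accept_wakeup(bool queue_nonempty, bool listening,
                       bool signal_pending, long timeo)
{
    if (queue_nonempty)
        return 0;                           /* a connection is ready */
    if (!listening)
        return -EINVAL;                     /* the socket left LISTEN */
    if (signal_pending)                     /* what sock_intr_errno() picks */
        return timeo == DEMO_MAX_TIMEOUT ? -DEMO_ERESTARTSYS : -EINTR;
    if (timeo == 0)
        return -EAGAIN;                     /* timed out */
    return DEMO_KEEP_WAITING;               /* loop and sleep again */
}
```

A ready connection wins over everything else, and a pending signal is reported before a timeout. So a blocking accept() with no timeout that is hit by a signal gets restarted transparently instead of failing with EINTR (when the signal handler uses SA_RESTART).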

The waiting state is shown in the figure.

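Now we understand what the server's waiting state is about. Back to the first handshake: as analyzed earlier, the packet goes through a series of steps to reach the server's IP layer. After IP processing, it enters TCP through the TCPv4 entry function tcp_v4_rcv. Emm, I won't analyze that source in detail here; maybe next time. The rough flow is: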

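tcp_v4_rcv calls the main handler tcp_v4_do_rcv, which calls tcp_rcv_state_process. In the LISTEN state, that function passes the SYN to icsk->icsk_af_ops->conn_request (tcp_v4_conn_request for IPv4), which records the request and sends the SYN+ACK. This is the second handshake. The tcp_v4_hnd_req step described in some older write-ups no longer exists in the 5.0 kernel.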
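On the listener, the SYN does not create a full socket yet. It creates a small request sock that remembers both ISNs and answers with a SYN+ACK (seq = server ISN k, ack = j+1). Only a third segment whose ACK number is k+1 promotes the request to an established connection.

The kernel keeps the two ISNs as `rcv_isn`/`snt_isn` in `struct tcp_request_sock`. The check below is assumed to correspond to the `ack_seq != tcp_rsk(req)->snt_isn + 1` test in `tcp_check_req()` (net/ipv4/tcp_minisocks.c). The struct and function names are hypothetical, and the real code checks much more (sequence window, RST, timestamps).

```c
#include <stdbool.h>
#include <stdint.h>

struct demo_request {
    uint32_t rcv_isn;   /* client's ISN j, taken from the SYN */
    uint32_t snt_isn;   /* server's ISN k, sent in the SYN+ACK */
};

struct demo_synack {
    uint32_t seq, ack;
};

/* First segment arrives: remember both ISNs and build the SYN+ACK. */
struct demo_synack demo_on_syn(struct demo_request *req,
                               uint32_t syn_seq, uint32_t server_isn)
{
    req->rcv_isn = syn_seq;
    req->snt_isn = server_isn;
    struct demo_synack r = { .seq = server_isn, .ack = syn_seq + 1 };
    return r;
}

/* Third segment arrives: it completes the handshake only if it
 * acknowledges the server's SYN, i.e. ack == k + 1. */
bool demo_on_ack(const struct demo_request *req, uint32_t ack)
{
    return ack == req->snt_isn + 1;
}
```

Keeping only this small record until the final ACK arrives is what lets a listener hold many half-open connections cheaply.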

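Likewise, when the SYN+ACK reaches the client, the client's TCP layer passes it from tcp_v4_rcv to the main handler tcp_v4_do_rcv. In the SYN_SENT state, tcp_rcv_state_process calls tcp_rcv_synsent_state_process, which processes the SYN+ACK and sends the ACK. That is the third handshake. Once the server receives the ACK, the three-way handshake is complete and the connection is established.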
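When the SYN+ACK reaches the client, tcp_rcv_synsent_state_process rejects it unless its ACK number lies in (snd_una, snd_nxt], i.e. it acknowledges exactly the SYN that was sent. Sequence numbers wrap at 2^32, so the kernel compares them with `before()`/`after()`, which look at the sign of the 32-bit difference. Below is a sketch using the same comparison trick; the `demo_` prefix marks the functions as illustrations.

```c
#include <stdbool.h>
#include <stdint.h>

/* Wrap-around-safe comparisons, same idea as the kernel's before()/after(). */
bool demo_before(uint32_t seq1, uint32_t seq2)
{
    return (int32_t)(seq1 - seq2) < 0;
}

bool demo_after(uint32_t seq1, uint32_t seq2)
{
    return demo_before(seq2, seq1);
}

/* The ACK in a SYN+ACK is acceptable only if snd_una < ack <= snd_nxt. */
bool demo_synack_ack_ok(uint32_t ack, uint32_t snd_una, uint32_t snd_nxt)
{
    return demo_after(ack, snd_una) && !demo_after(ack, snd_nxt);
}
```

Consider an ISN of 0xFFFFFFFF. Then snd_una = 0xFFFFFFFF and snd_nxt wraps to 0, and an ACK of 0 is still correctly accepted. A plain `<` comparison would get this case wrong.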

That's as far as this analysis goes. Sockets in the Linux kernel are really sprawling; there is so much in there, and it goes so deep, emm.