一 准备实验数据

1.1.下载数据

wget http://snap.stanford.edu/data/amazon/all.txt.gz

1.2.数据分析

1.2.1.数据格式

product/productId: B00006HAXW product/title: Rock Rhythm & Doo Wop: Greatest Early Rock product/price: unknown review/userId: A1RSDE90N6RSZF review/profileName: Joseph M. Kotow review/helpfulness: 9/9 review/score: 5.0 review/time: 1042502400 review/summary: Pittsburgh - Home of the OLDIES review/text: I have all of the doo wop DVD's and this one is as good or better than the 1st ones. Remember once these performers are gone, we'll never get to see them again. Rhino did an excellent job and if you like or love doo wop and Rock n Roll you'll LOVE this DVD !!

而,

- product/productId: asin, e.g. amazon.com/dp/B00006HAXW #亚马逊标准识别号码(英语:Amazon Standard Identification Number),简称ASIN(productId),是一个由十个字符(字母或数字)组成的唯一识别号码。由亚马逊及其伙伴分配,并用于亚马逊上的产品标识。

- product/title: title of the product

- product/price: price of the product

- review/userId: id of the user, e.g. A1RSDE90N6RSZF

- review/profileName: name of the user

- review/helpfulness: fraction of users who found the review helpful

- review/score: rating of the product

- review/time: time of the review (unix time)

- review/summary: review summary

- review/text: text of the review

1.2.2.数据格式转换

首先,我们需要把原始数据格式转换成dictionary

import pandas as pd import numpy as np import datetime import gzip import json from sklearn.decomposition import PCA from myria import * import simplejson def parse(filename): f = gzip.open(filename, 'r') entry = {} for l in f: l = l.strip() colonPos = l.find(':') if colonPos == -1: yield entry entry = {} continue eName = l[:colonPos] rest = l[colonPos+2:] entry[eName] = rest yield entry f = gzip.open('somefile.gz', 'w') #review_data = parse('kcore_5.json.gz') for e in parse("kcore_5.json.gz"): f.write(str(e)) f.close()

py文件执行时报错: string indices must be intergers

分析原因:

在.py文件中写的data={"a":"123","b":"456"},data类型为dict

而在.py文件中通过data= arcpy.GetParameter(0) 获取在GP中传过来的参数{"a":"123","b":"456"},data类型为字符串!!!

所以在后续的.py中用到的data['a']就会报如上错误!!!

解决方案:

data= arcpy.GetParameter(0)

data=json.loads(data) //将字符串转成json格式

或

data=eval(data) #本程序中我们采用eval()的方式,将字符串转成dict格式

二.数据预处理

思路:

#import libraries

# Helper functions

# Prepare the review data for training and testing the algorithms

# Preprocess product data for Content-based Recommender System

# Upload the data to the MySQL Database on an Amazon Web Services ( AWS) EC2 instance

2.1创建DataFrame

f parse(path): f = gzip.open(path, 'r') for l in f: yield eval(l) review_data = parse('/kcore_5.json.gz') productID = [] userID = [] score = [] reviewTime = [] rowCount = 0 while True: try: entry = next(review_data) productID.append(entry['asin']) userID.append(entry['reviewerID']) score.append(entry['overall']) reviewTime.append(entry['reviewTime']) rowCount += 1 if rowCount % 1000000 == 0: print 'Already read %s observations' % rowCount except StopIteration, e: print 'Read %s observations in total' % rowCount entry_list = pd.DataFrame({'productID': productID, 'userID': userID, 'score': score, 'reviewTime': reviewTime}) filename = 'review_data.csv' entry_list.to_csv(filename, index=False) print 'Save the data in the file %s' % filename break entry_list = pd.read_csv('review_data.csv')

2.2数据过滤

def filterReviewsByField(reviews, field, minNumReviews): reviewsCountByField = reviews.groupby(field).size() fieldIDWithNumReviewsPlus = reviewsCountByField[reviewsCountByField >= minNumReviews].index #print 'The number of qualified %s: ' % field, fieldIDWithNumReviewsPlus.shape[0] if len(fieldIDWithNumReviewsPlus) == 0: print 'The filtered reviews have become empty' return None else: return reviews[reviews[field].isin(fieldIDWithNumReviewsPlus)] def checkField(reviews, field, minNumReviews): return np.mean(reviews.groupby(field).size() >= minNumReviews) == 1 def filterReviews(reviews, minItemNumReviews, minUserNumReviews): filteredReviews = filterReviewsByField(reviews, 'productID', minItemNumReviews) if filteredReviews is None: return None if checkField(filteredReviews, 'userID', minUserNumReviews): return filteredReviews filteredReviews = filterReviewsByField(filteredReviews, 'userID', minUserNumReviews) if filteredReviews is None: return None if checkField(filteredReviews, 'productID', minItemNumReviews): return filteredReviews else: return filterReviews(filteredReviews, minItemNumReviews, minUserNumReviews) def filteredReviewsInfo(reviews, minItemNumReviews, minUserNumReviews): t1 = datetime.datetime.now() filteredReviews = filterReviews(reviews, minItemNumReviews, minUserNumReviews) print 'Mininum num of reviews in each item: ', minItemNumReviews print 'Mininum num of reviews in each user: ', minUserNumReviews print 'Dimension of filteredReviews: ', filteredReviews.shape if filteredReviews is not None else '(0, 4)' print 'Num of unique Users: ', filteredReviews['userID'].unique().shape[0] print 'Num of unique Product: ', filteredReviews['productID'].unique().shape[0] t2 = datetime.datetime.now() print 'Time elapsed: ', t2 - t1 return filteredReviews allReviewData = filteredReviewsInfo(entry_list, 100, 10) smallReviewData = filteredReviewsInfo(allReviewData, 150, 15)

理论知识

1. Combining predictions for accurate recommender systems

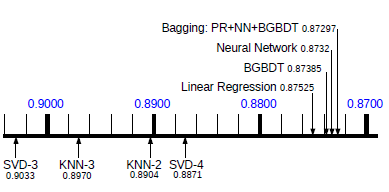

So, for practical applications we recommend to use a neural network in combination with bagging due to the fast prediction speed.

Collaborative ltering(协同过滤,筛选相似的推荐):电子商务推荐系统的主要算法,利用某兴趣相投、拥有共同经验之群体的喜好来推荐用户感兴趣的信息