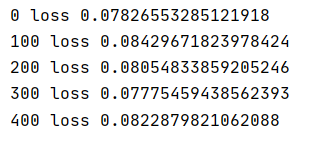

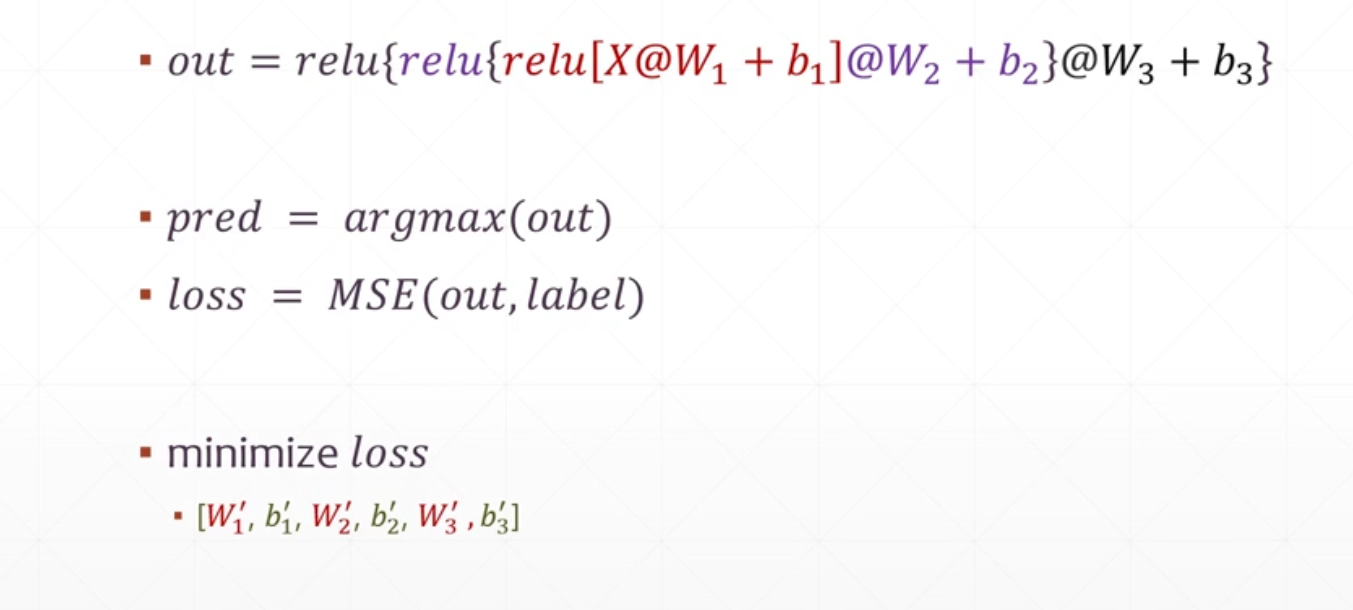

    import os

    # Must be set before importing TensorFlow to silence its C++ info/warning logs.
    os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'

    import tensorflow as tf
    from tensorflow import keras
    from tensorflow.keras import datasets

    # x: [60k, 28, 28]
    # y: [60k]
    (x, y), _ = datasets.mnist.load_data()
    # x: [0~255] => [0~1.]
    x = tf.convert_to_tensor(x, dtype=tf.float32) / 255.
    y = tf.convert_to_tensor(y, dtype=tf.int32)

    print(x.shape, y.shape, x.dtype, y.dtype)
    print(tf.reduce_max(x), tf.reduce_min(x))  # check the max and min values
    print(tf.reduce_max(y), tf.reduce_min(y))

    train_db = tf.data.Dataset.from_tensor_slices((x, y)).batch(128)  # 128 samples per batch
    train_iter = iter(train_db)
    sample = next(train_iter)
    print('batch:', sample[0].shape, sample[1].shape)
    # Dataset loading is done.

    # [b, 784] => [b, 256] => [b, 128] => [b, 10]
    # weights: [dim_in, dim_out], biases: [dim_out]
    # Without a small stddev the gradients explode.
    w1 = tf.Variable(tf.random.truncated_normal([784, 256], stddev=0.1))
    b1 = tf.Variable(tf.zeros([256]))
    w2 = tf.Variable(tf.random.truncated_normal([256, 128], stddev=0.1))
    b2 = tf.Variable(tf.zeros([128]))
    w3 = tf.Variable(tf.random.truncated_normal([128, 10], stddev=0.1))
    b3 = tf.Variable(tf.zeros([10]))

    lr = 1e-3

    for epoch in range(10):  # iterate over the whole dataset 10 times
        # Each step processes one batch of 128 images; an epoch covers all 60k images.
        for step, (x, y) in enumerate(train_db):
            # x: [128, 28, 28]
            # y: [128]

            # [b, 28, 28] => [b, 28*28]
            x = tf.reshape(x, [-1, 28 * 28])

            # Record operations for differentiation; tf.Variable objects are tracked by default.
            with tf.GradientTape() as tape:
                # x: [b, 28*28]
                # [b, 784] @ [784, 256] + [256] => [b, 256] + [256]
                h1 = x @ w1 + b1  # the bias is broadcast automatically
                h1 = tf.nn.relu(h1)  # add non-linearity
                h2 = h1 @ w2 + b2
                h2 = tf.nn.relu(h2)
                out = h2 @ w3 + b3

                # Compute the loss.
                # out: [b, 10]
                # y: [b] => [b, 10]
                y_onehot = tf.one_hot(y, depth=10)

                # mse = mean((y - out)^2)
                loss = tf.square(y_onehot - out)
                # mean: scalar
                loss = tf.reduce_mean(loss)

            # Compute the gradients; they come back as a list in the same order as the variables.
            grads = tape.gradient(loss, [w1, b1, w2, b2, w3, b3])
            # w1 = w1 - lr * w1_grad
            w1.assign_sub(lr * grads[0])  # in-place update; w1 stays a tf.Variable
            b1.assign_sub(lr * grads[1])
            w2.assign_sub(lr * grads[2])
            b2.assign_sub(lr * grads[3])
            w3.assign_sub(lr * grads[4])
            b3.assign_sub(lr * grads[5])

            if step % 100 == 0:
                print(step, 'loss', float(loss))
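To make the loss in the script above concrete, here is a minimal plain-Python sketch of the same arithmetic: one-hot encode each label, square the difference against the network output, and average over every element (batch size times number of classes). The helper names `one_hot` and `mse_loss` are hypothetical and exist only for this illustration; the script itself relies on `tf.one_hot`, `tf.square`, and `tf.reduce_mean`.

```python
def one_hot(label, depth):
    """Return a list of length `depth` with 1.0 at index `label` and 0.0 elsewhere."""
    return [1.0 if i == label else 0.0 for i in range(depth)]


def mse_loss(outputs, labels, depth):
    """Mean of squared differences over all batch * depth elements.

    outputs: list of per-sample output lists, shape [b, depth]
    labels:  list of integer class labels, shape [b]
    """
    total = 0.0
    count = 0
    for out, label in zip(outputs, labels):
        target = one_hot(label, depth)
        for o, t in zip(out, target):
            total += (t - o) ** 2
            count += 1
    return total / count


# One sample, three classes, true class 0:
# squared errors are 0.25, 0.25 and 0.0, so the mean is 0.5 / 3.
example = mse_loss([[0.5, 0.5, 0.0]], [0], depth=3)
```

Because the mean runs over all `b * 10` elements rather than over the batch alone, the loss values are a factor of 10 smaller than a per-sample sum of squared errors would be.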

Training output: