爬取目标

1.本次代码是在python2上运行通过的,python3的最需改2行代码,用到其它python模块

- selenium 2.53.6 +firefox 44

- BeautifulSoup

- requests

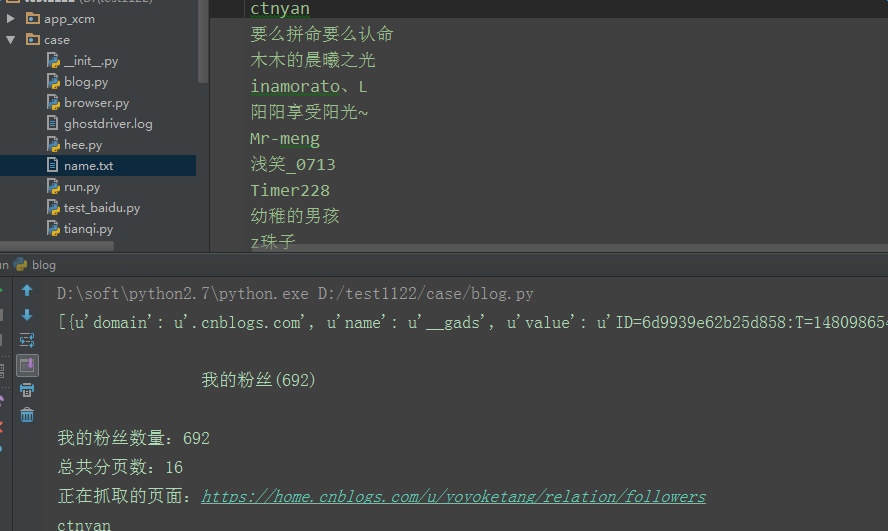

2.爬取目标网站,我的博客:https://home.cnblogs.com/u/yoyoketang

爬取内容:爬我的博客的所有粉丝的名称,并保存到txt

3.由于博客园的登录是需要人机验证的,所以是无法直接用账号密码登录,需借助selenium登录

selenium获取cookies

1.大前提:先手工操作浏览器,登录我的博客,并记住密码

(保证关掉浏览器后,下次打开浏览器访问我的博客时候是登录状态)

2.selenium默认启动浏览器是一个空的配置,默认不加载配置缓存文件,这里先得找到对应浏览器的配置文件地址,以火狐浏览器为例

3.使用driver.get_cookies()方法获取浏览器的cookies

# coding:utf-8

import requests

from selenium import webdriver

from bs4 import BeautifulSoup

import re

import time

# firefox浏览器配置文件地址

profile_directory = r'C:UsersadminAppDataRoamingMozillaFirefoxProfilesyn80ouvt.default'

# 加载配置

profile = webdriver.FirefoxProfile(profile_directory)

# 启动浏览器配置

driver = webdriver.Firefox(profile)

driver.get("https://home.cnblogs.com/u/yoyoketang/followers/")

time.sleep(3)

cookies = driver.get_cookies() # 获取浏览器cookies

print(cookies)

driver.quit()

(注:要是这里脚本启动浏览器后,打开的博客页面是未登录的,后面内容都不用看了,先检查配置文件是不是写错了)

requests添加登录的cookies

1.浏览器的cookies获取到后,接下来用requests去建一个session,在session里添加登录成功后的cookies

s = requests.session() # 新建session

# 添加cookies到CookieJar

c = requests.cookies.RequestsCookieJar()

for i in cookies:

c.set(i["name"], i['value'])

s.cookies.update(c) # 更新session里cookies

计算粉丝数和分页总数

1.由于我的粉丝的数据是分页展示的,这里一次只能请求到45个,所以先获取粉丝总数,然后计算出总的页数

# 发请求

r1 = s.get("https://home.cnblogs.com/u/yoyoketang/relation/followers")

soup = BeautifulSoup(r1.content, "html.parser")

# 抓取我的粉丝数

fensinub = soup.find_all(class_="current_nav")

print fensinub[0].string

num = re.findall(u"我的粉丝((.+?))", fensinub[0].string)

print u"我的粉丝数量:%s"%str(num[0])

# 计算有多少页,每页45条

ye = int(int(num[0])/45)+1

print u"总共分页数:%s"%str(ye)

保存粉丝名到txt

# 抓取第一页的数据

fensi = soup.find_all(class_="avatar_name")

for i in fensi:

name = i.string.replace("

", "").replace(" ","")

print name

with open("name.txt", "a") as f: # 追加写入

f.write(name.encode("utf-8")+"

")

# 抓第二页后的数据

for i in range(2, ye+1):

r2 = s.get("https://home.cnblogs.com/u/yoyoketang/relation/followers?page=%s"%str(i))

soup = BeautifulSoup(r1.content, "html.parser")

# 抓取我的粉丝数

fensi = soup.find_all(class_="avatar_name")

for i in fensi:

name = i.string.replace("

", "").replace(" ","")

print name

with open("name.txt", "a") as f: # 追加写入

f.write(name.encode("utf-8")+"

")

参考代码:

# coding:utf-8

import requests

from selenium import webdriver

from bs4 import BeautifulSoup

import re

import time

# firefox浏览器配置文件地址

profile_directory = r'C:UsersadminAppDataRoamingMozillaFirefoxProfilesyn80ouvt.default'

s = requests.session() # 新建session

url = "https://home.cnblogs.com/u/yoyoketang"

def get_cookies(url):

'''启动selenium获取登录的cookies'''

try:

# 加载配置

profile = webdriver.FirefoxProfile(profile_directory)

# 启动浏览器配置

driver = webdriver.Firefox(profile)

driver.get(url+"/followers")

time.sleep(3)

cookies = driver.get_cookies() # 获取浏览器cookies

print(cookies)

driver.quit()

return cookies

except Exception as msg:

print(u"启动浏览器报错了:%s" %str(msg))

def add_cookies(cookies):

'''往session添加cookies'''

try:

# 添加cookies到CookieJar

c = requests.cookies.RequestsCookieJar()

for i in cookies:

c.set(i["name"], i['value'])

s.cookies.update(c) # 更新session里cookies

except Exception as msg:

print(u"添加cookies的时候报错了:%s" % str(msg))

def get_ye_nub(url):

'''获取粉丝的页面数量'''

try:

# 发请求

r1 = s.get(url+"/relation/followers")

soup = BeautifulSoup(r1.content, "html.parser")

# 抓取我的粉丝数

fensinub = soup.find_all(class_="current_nav")

print(fensinub[0].string)

num = re.findall(u"我的粉丝((.+?))", fensinub[0].string)

print(u"我的粉丝数量:%s"%str(num[0]))

# 计算有多少页,每页45条

ye = int(int(num[0])/45)+1

print(u"总共分页数:%s"%str(ye))

return ye

except Exception as msg:

print(u"获取粉丝页数报错了,默认返回数量1 :%s"%str(msg))

return 1

def save_name(nub):

'''抓取页面的粉丝名称'''

try:

# 抓取第一页的数据

if nub <= 1:

url_page = url+"/relation/followers"

else:

url_page = url+"/relation/followers?page=%s" % str(nub)

print(u"正在抓取的页面:%s" %url_page)

r2 = s.get(url_page, verify=False)

soup = BeautifulSoup(r2.content, "html.parser")

fensi = soup.find_all(class_="avatar_name")

for i in fensi:

name = i.string.replace("

", "").replace(" ","")

print(name)

with open("name.txt", "a") as f: # 追加写入

f.write(name.encode("utf-8")+"

")

# python3的改成下面这两行

# with open("name.txt", "a", encoding="utf-8") as f: # 追加写入

# f.write(name+"

")

except Exception as msg:

print(u"抓取粉丝名称过程中报错了 :%s"%str(msg))

if __name__ == "__main__":

cookies = get_cookies(url)

add_cookies(cookies)

n = get_ye_nub(url)

for i in list(range(1, n+1)):

save_name(i)

---------------------------------python接口自动化完整版-------------------------

全书购买地址 https://yuedu.baidu.com/ebook/585ab168302b3169a45177232f60ddccda38e695

作者:上海-悠悠 QQ交流群:588402570

也可以关注下我的个人公众号: