Running RGBDSLAMv2 on Ubuntu 14.04 (ROS Indigo): .bag datasets and a real-time Kinect v2

I. Running RGBDSLAMv2 on a .bag dataset

RGBDSLAMv2 is the SLAM system for RGB-D cameras that Felix Endres published in a 2014 paper. It builds 3D point clouds or octree maps.

The installation steps mainly follow the original GitHub repository: https://github.com/felixendres/rgbdslam_v2

Hardware of my desktop:

CPU: Intel(R) Core(TM) i5-6500 @ 3.20GHz;

RAM: 16.0GB;

GPU: NVIDIA GeForce GTX 1060 6GB.

1. Install ROS Indigo on Ubuntu 14.04. Reference: http://wiki.ros.org/cn/indigo/Installation/Ubuntu

2. Install OpenCV 2.4.9. References: http://www.samontab.com/web/2014/06/installing-opencv-2-4-9-in-ubuntu-14-04-lts/

http://blog.csdn.net/baoke485800/article/details/51236198

Update the system:

sudo apt-get update
sudo apt-get upgrade

Install the dependencies:

sudo apt-get install build-essential libgtk2.0-dev libjpeg-dev libtiff4-dev libjasper-dev libopenexr-dev cmake python-dev python-numpy python-tk libtbb-dev libeigen3-dev yasm libfaac-dev libopencore-amrnb-dev libopencore-amrwb-dev libtheora-dev libvorbis-dev libxvidcore-dev libx264-dev libqt4-dev libqt4-opengl-dev sphinx-common texlive-latex-extra libv4l-dev libdc1394-22-dev libavcodec-dev libavformat-dev libswscale-dev default-jdk ant libvtk5-qt4-dev

Download the OpenCV 2.4.9 source with wget, then unzip it once the download finishes:

wget http://sourceforge.net/projects/opencvlibrary/files/opencv-unix/2.4.9/opencv-2.4.9.zip

unzip opencv-2.4.9.zip
cd opencv-2.4.9

Configure, build and install OpenCV with CMake:

mkdir build
cd build

cmake -D WITH_TBB=ON -D BUILD_NEW_PYTHON_SUPPORT=ON -D WITH_V4L=ON -D INSTALL_C_EXAMPLES=ON -D INSTALL_PYTHON_EXAMPLES=ON -D BUILD_EXAMPLES=ON -D WITH_QT=ON -D WITH_OPENGL=ON -D WITH_VTK=ON ..

make -j4

sudo make install

Configure the OpenCV library paths:

sudo gedit /etc/ld.so.conf.d/opencv.conf

Add the following line to the opened file (it may be empty), then save it:

/usr/local/lib

Then run:

sudo ldconfig

Open a second file:

sudo gedit /etc/bash.bashrc

Append the following at the end of the file, then save and exit:

PKG_CONFIG_PATH=$PKG_CONFIG_PATH:/usr/local/lib/pkgconfig

export PKG_CONFIG_PATH
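Editing these two files by hand in gedit is easy to repeat by accident on a second install attempt, which leaves duplicate entries. Below is a minimal bash sketch of an idempotent alternative. `append_line_once` is a hypothetical helper name and not an Ubuntu or OpenCV tool. Both target files live under /etc, so the example calls in the comments assume a root shell, for example inside `sudo bash`.

```shell
#!/usr/bin/env bash
# append_line_once is a hypothetical helper (not an Ubuntu or OpenCV tool).
# It appends LINE to FILE only if FILE does not already contain that exact line,
# so re-running the setup does not duplicate entries.
append_line_once() {
  local file="$1" line="$2"
  touch "$file"                               # create the file if it does not exist yet
  if ! grep -qxF -- "$line" "$file"; then     # -x whole line, -F literal string, -q quiet
    printf '%s\n' "$line" >> "$file"
  fi
}

# Example use (needs root, e.g. inside `sudo bash`):
#   append_line_once /etc/ld.so.conf.d/opencv.conf /usr/local/lib
#   ldconfig
#   append_line_once /etc/bash.bashrc 'PKG_CONFIG_PATH=$PKG_CONFIG_PATH:/usr/local/lib/pkgconfig'
#   append_line_once /etc/bash.bashrc 'export PKG_CONFIG_PATH'
```

The single quotes in the bashrc lines keep `$PKG_CONFIG_PATH` literal, so the variable is expanded when the shell starts rather than at the moment the line is written.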

Check that OpenCV was installed correctly:

cd ~/opencv-2.4.9/samples/c
chmod +x build_all.sh
./build_all.sh

Old C interface:

./facedetect --cascade="/usr/local/share/OpenCV/haarcascades/haarcascade_frontalface_alt.xml" --scale=1.5 lena.jpg

./facedetect --cascade="/usr/local/share/OpenCV/haarcascades/haarcascade_frontalface_alt.xml" --nested-cascade="/usr/local/share/OpenCV/haarcascades/haarcascade_eye.xml" --scale=1.5 lena.jpg

New C++ interface:

~/opencv-2.4.9/build/bin/cpp-example-grabcut ~/opencv-2.4.9/samples/cpp/lena.jpg

If these samples run, the installation works. (More tests are described in the references above.)

Official OpenCV download page for Unix releases: https://sourceforge.net/projects/opencvlibrary/files/opencv-unix/

3. Install PCL 1.7.2 from the GitHub source: https://github.com/PointCloudLibrary/pcl

4. Create a catkin workspace:

# Create a separate catkin workspace for rgbdslam

mkdir ~/rgbdslam_catkin_ws

cd ~/rgbdslam_catkin_ws

mkdir src

cd ~/rgbdslam_catkin_ws/src

# Initialize this folder as the source folder of the catkin workspace

catkin_init_workspace

# Go to the workspace root

cd ~/rgbdslam_catkin_ws/

# Build the new workspace; this creates the build and devel folders and completes the catkin workspace

catkin_make

# Source the workspace's setup script in the current terminal

source devel/setup.bash
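To confirm that both steps took effect, a small check can look for the files they leave behind. `catkin_init_workspace` creates `src/CMakeLists.txt`, and `catkin_make` generates `devel/setup.bash`. This is a minimal bash sketch; `check_catkin_ws` is a hypothetical helper name and not a catkin command.

```shell
#!/usr/bin/env bash
# check_catkin_ws is a hypothetical helper, not a catkin command.
# It returns 0 if the workspace at $1 looks initialised and built, 1 otherwise.
check_catkin_ws() {
  local ws="$1" status=0
  # catkin_init_workspace leaves src/CMakeLists.txt (a symlink to catkin's toplevel.cmake)
  if [ ! -e "$ws/src/CMakeLists.txt" ]; then
    echo "not initialised: run catkin_init_workspace in $ws/src" >&2
    status=1
  fi
  # catkin_make generates devel/setup.bash
  if [ ! -f "$ws/devel/setup.bash" ]; then
    echo "not built: run catkin_make in $ws" >&2
    status=1
  fi
  return "$status"
}

# Example: check_catkin_ws ~/rgbdslam_catkin_ws && source ~/rgbdslam_catkin_ws/devel/setup.bash
```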

5. Install g2o from source, following the original GitHub repository: https://github.com/felixendres/rgbdslam_v2

6. Build and install RGBDSLAMv2

# Go to the workspace's source folder

cd ~/rgbdslam_catkin_ws/src

# Download the rgbdslam source for ROS Indigo from GitHub

wget -q http://github.com/felixendres/rgbdslam_v2/archive/indigo.zip

# Unzip

unzip -q indigo.zip

# Go to the workspace root

cd ~/rgbdslam_catkin_ws/

# Update the rosdep database

rosdep update

yuanlibin@yuanlibin:~/rgbdslam_catkin_ws$ rosdep update
reading in sources list data from /etc/ros/rosdep/sources.list.d
Hit https://raw.githubusercontent.com/ros/rosdistro/master/rosdep/osx-homebrew.yaml
Hit https://raw.githubusercontent.com/ros/rosdistro/master/rosdep/base.yaml
Hit https://raw.githubusercontent.com/ros/rosdistro/master/rosdep/python.yaml
Hit https://raw.githubusercontent.com/ros/rosdistro/master/rosdep/ruby.yaml
Hit https://raw.githubusercontent.com/ros/rosdistro/master/releases/fuerte.yaml
Query rosdistro index https://raw.githubusercontent.com/ros/rosdistro/master/index.yaml
Add distro "groovy"
Add distro "hydro"
Add distro "indigo"
Add distro "jade"
Add distro "kinetic"
Add distro "lunar"
updated cache in /home/yuanlibin/.ros/rosdep/sources.cache

# Install rgbdslam's dependencies

rosdep install rgbdslam

On success it prints: #All required rosdeps installed successfully

# Build rgbdslam

catkin_make

On success it prints: [100%] Built target rgbdslam

source devel/setup.bash

Finally, run:

roslaunch rgbdslam rgbdslam.launch

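In a terminal where devel/setup.bash has not been sourced (for example a newly opened one), this fails with the following error: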

NODES
  /
    rgbdslam (rgbdslam/rgbdslam)

ROS_MASTER_URI=http://localhost:11311

core service [/rosout] found
ERROR: cannot launch node of type [rgbdslam/rgbdslam]: rgbdslam
ROS path [0]=/opt/ros/indigo/share/ros
ROS path [1]=/opt/ros/indigo/share
ROS path [2]=/opt/ros/indigo/stacks
No processes to monitor
shutting down processing monitor...
... shutting down processing monitor complete

The fix is to source the workspace's setup script from ~/.bashrc. On my machine:

echo "source /home/yuanlibin/rgbdslam_catkin_ws/devel/setup.bash" >> ~/.bashrc
source ~/.bashrc
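The error occurs because roslaunch looks for packages along ROS_PACKAGE_PATH. The "ROS path" lines in the log contain only /opt/ros/indigo entries. After devel/setup.bash is sourced, the workspace's src folder is typically added to that variable. The sketch below checks for this before launching. It assumes bash, and `ws_on_package_path` is a hypothetical helper name, not a ROS tool.

```shell
#!/usr/bin/env bash
# ws_on_package_path is a hypothetical helper, not a ROS tool.
# It returns 0 if directory $1 is one of the colon-separated entries of ROS_PACKAGE_PATH.
ws_on_package_path() {
  local dir="$1"
  # Wrap both sides in colons so only whole entries match (not prefixes of other paths).
  case ":${ROS_PACKAGE_PATH}:" in
    *":${dir}:"*) return 0 ;;
    *) return 1 ;;
  esac
}

# Example, in a new terminal:
#   ws_on_package_path "$HOME/rgbdslam_catkin_ws/src" || echo "source devel/setup.bash first"
```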

RGBDSLAMv2 is now built and installed.

7. Download a TUM RGB-D dataset .bag file from: https://vision.in.tum.de/data/datasets/rgbd-dataset/download

For example: rgbd_dataset_freiburg1_xyz.bag

Inspect the topics contained in the .bag file:

Terminal 1

roscore

Terminal 2

rosbag play rgbd_dataset_freiburg1_xyz.bag

Terminal 3

rostopic info

For the last command, do not press Enter. Press Tab instead, and shell completion lists the available topics:

yuanlibin@yuanlibin:~$ rostopic info /
/camera/depth/camera_info
/cortex_marker_array
/camera/depth/image
/imu
/camera/rgb/camera_info
/rosout
/camera/rgb/image_color
/rosout_agg
/clock
/tf
yuanlibin@yuanlibin:~$
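Instead of Tab completion, `rostopic list` prints one topic per line. `rosbag info <file>` shows a bag's topics without playing it. The sketch below checks such a list for the topics that rgbdslam.launch will subscribe to. It assumes bash, and `require_topics` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# require_topics is a hypothetical helper. It reads a topic list (one per line) on stdin
# and returns 1, naming each missing topic on stderr, if any argument is not in the list.
require_topics() {
  local topics t missing=0
  topics=$(cat)                      # the whole list, e.g. the output of `rostopic list`
  for t in "$@"; do
    if ! printf '%s\n' "$topics" | grep -qxF -- "$t"; then
      echo "missing topic: $t" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Example, while the bag is playing:
#   rostopic list | require_topics /camera/rgb/image_color /camera/depth/image
```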

Then edit rgbdslam.launch in /home/yuanlibin/rgbdslam_catkin_ws/src/rgbdslam_v2-indigo/launch.

Lines 8 and 9 of this file set the input topics:

<param name="config/topic_image_mono" value="/camera/rgb/image_color"/>
<param name="config/topic_image_depth" value="/camera/depth_registered/sw_registered/image_rect_raw"/>

Change them to match the topics of the dataset listed above:

<param name="config/topic_image_mono" value="/camera/rgb/image_color"/>
<param name="config/topic_image_depth" value="/camera/depth/image"/>
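The same edit can be made without opening an editor. This is a minimal sketch that assumes GNU sed (the default on Ubuntu), a parameter name with no regex metacharacters, and a new value containing no `|`, `&` or backslash characters. `set_launch_param` is a hypothetical helper name. The pattern tolerates the extra spaces that the stock launch file uses to align its `value` attributes.

```shell
#!/usr/bin/env bash
# set_launch_param is a hypothetical helper: it rewrites the value="..." attribute of the
# <param name="NAME" .../> entry in a roslaunch file, in place (GNU sed -i).
# Assumes NAME has no regex metacharacters and VALUE contains no '|', '&' or '\'.
set_launch_param() {
  local file="$1" name="$2" value="$3"
  sed -i "s|\(<param name=\"${name}\"[[:space:]]*value=\"\)[^\"]*\"|\1${value}\"|" "$file"
}

# Example:
#   set_launch_param ~/rgbdslam_catkin_ws/src/rgbdslam_v2-indigo/launch/rgbdslam.launch \
#       config/topic_image_depth /camera/depth/image
```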

The same file also selects the features the system uses:

SIFT, SIFTGPU, SURF, SURF128 (extended SURF), ORB.

8. Run RGBDSLAMv2 on the dataset

Terminal 1

roscore

Terminal 2

rosbag play rgbd_dataset_freiburg1_xyz.bag

Terminal 3

roslaunch rgbdslam rgbdslam.launch

RGBDSLAMv2 then displays the 3D point cloud reconstructed from the dataset.

II. Running RGBDSLAMv2 in real time with a Kinect v1

1. Install and test the Kinect v1 driver under ROS Indigo. Reference: http://www.cnblogs.com/yuanlibin/p/8608190.html

2. Run the following commands:

Terminal 1

roscore

Terminal 2

roslaunch rgbdslam openni+rgbdslam.launch

3. Move the Kinect v1 around to watch the 3D point cloud being reconstructed in real time.

III. Running RGBDSLAMv2 in real time with a Kinect v2

1. Start the Kinect v2 and inspect the topics it publishes:

Terminal 1

roslaunch kinect2_bridge kinect2_bridge.launch

Terminal 2 (type rostopic info, then press Tab instead of Enter to list the topics):

yuanlibin@yuanlibin:~$ rostopic info /
/kinect2/bond
/kinect2/hd/camera_info
/kinect2/hd/image_color
/kinect2/hd/image_color/compressed
/kinect2/hd/image_color_rect
/kinect2/hd/image_color_rect/compressed
/kinect2/hd/image_depth_rect
/kinect2/hd/image_depth_rect/compressed
/kinect2/hd/image_mono
/kinect2/hd/image_mono/compressed
/kinect2/hd/image_mono_rect
/kinect2/hd/image_mono_rect/compressed
/kinect2/hd/points
/kinect2/qhd/camera_info
/kinect2/qhd/image_color
/kinect2/qhd/image_color/compressed
/kinect2/qhd/image_color_rect
/kinect2/qhd/image_color_rect/compressed
/kinect2/qhd/image_depth_rect
/kinect2/qhd/image_depth_rect/compressed
/kinect2/qhd/image_mono
/kinect2/qhd/image_mono/compressed
/kinect2/qhd/image_mono_rect
--More--

2. Create a new file rgbdslam_kinect2.launch in /home/yuanlibin/rgbdslam_catkin_ws/src/rgbdslam_v2-indigo/launch with the following content:

<launch>
  <node pkg="rgbdslam" type="rgbdslam" name="rgbdslam" cwd="node" required="true" output="screen">
    <!-- Input data settings-->
    <param name="config/topic_image_mono" value="/kinect2/qhd/image_color_rect"/>
    <param name="config/camera_info_topic" value="/kinect2/qhd/camera_info"/>
    <param name="config/topic_image_depth" value="/kinect2/qhd/image_depth_rect"/>
    <param name="config/topic_points" value=""/> <!--if empty, pointcloud will be reconstructed from image and depth -->

    <!-- These are the default values of some important parameters -->
    <param name="config/feature_extractor_type" value="ORB"/><!-- also available: SIFT, SIFTGPU, SURF, SURF128 (extended SURF), ORB. -->
    <param name="config/feature_detector_type" value="ORB"/><!-- also available: SIFT, SURF, GFTT (good features to track), ORB. -->
    <param name="config/detector_grid_resolution" value="3"/><!-- detect on a 3x3 grid (to spread ORB keypoints and parallelize SIFT and SURF) -->
    <param name="config/optimizer_skip_step" value="15"/><!-- optimize only every n-th frame -->
    <param name="config/cloud_creation_skip_step" value="2"/><!-- subsample the images' pixels (in both, width and height), when creating the cloud (and therefore reduce memory consumption) -->
    <param name="config/backend_solver" value="csparse"/><!-- pcg is faster and good for continuous online optimization, cholmod and csparse are better for offline optimization (without good initial guess)-->
    <param name="config/pose_relative_to" value="first"/><!-- optimize only a subset of the graph: "largest_loop" = Everything from the earliest matched frame to the current one. Use "first" to optimize the full graph, "inaffected" to optimize only the frames that were matched (not those in between for loops) -->
    <param name="config/maximum_depth" value="2"/>
    <param name="config/subscriber_queue_size" value="20"/>
    <param name="config/min_sampled_candidates" value="30"/><!-- Frame-to-frame comparisons to random frames (big loop closures) -->
    <param name="config/predecessor_candidates" value="20"/><!-- Frame-to-frame comparisons to sequential frames-->
    <param name="config/neighbor_candidates" value="20"/><!-- Frame-to-frame comparisons to graph neighbor frames-->
    <param name="config/ransac_iterations" value="140"/>
    <param name="config/g2o_transformation_refinement" value="1"/>
    <param name="config/icp_method" value="gicp"/> <!-- icp, gicp ... -->

    <!--
    <param name="config/max_rotation_degree" value="20"/>
    <param name="config/max_translation_meter" value="0.5"/>
    <param name="config/min_matches" value="30"/>
    <param name="config/min_translation_meter" value="0.05"/>
    <param name="config/min_rotation_degree" value="3"/>
    <param name="config/g2o_transformation_refinement" value="2"/>
    <param name="config/min_rotation_degree" value="10"/>
    <param name="config/matcher_type" value="ORB"/>
    -->
  </node>
</launch>

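Note that the input topic settings (config/topic_image_mono, config/camera_info_topic and config/topic_image_depth) must match the topics listed above. config/topic_points is left empty so that the point cloud is reconstructed from the image and depth.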
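kinect2_bridge publishes the same topic names under several resolution prefixes. The listing above shows hd and qhd, and the iai_kinect2 documentation also lists sd. As a result, the three input topics differ only in that prefix. The sketch below prints the matching `<param>` lines for a chosen prefix so they can be pasted into rgbdslam_kinect2.launch. It assumes bash, and `kinect2_input_params` is a hypothetical helper name, not part of rgbdslam or iai_kinect2.

```shell
#!/usr/bin/env bash
# kinect2_input_params is a hypothetical helper, not part of rgbdslam or iai_kinect2.
# It prints the three input <param> lines of rgbdslam_kinect2.launch for one
# kinect2_bridge resolution prefix (hd, qhd or sd).
kinect2_input_params() {
  local res="$1"
  case "$res" in
    hd|qhd|sd) ;;
    *) echo "unknown resolution: $res (expected hd, qhd or sd)" >&2; return 1 ;;
  esac
  local base="/kinect2/${res}"
  printf '<param name="config/topic_image_mono" value="%s/image_color_rect"/>\n' "$base"
  printf '<param name="config/camera_info_topic" value="%s/camera_info"/>\n' "$base"
  printf '<param name="config/topic_image_depth" value="%s/image_depth_rect"/>\n' "$base"
}

# Example: kinect2_input_params qhd   # prints the qhd lines used in the launch file above
```

The launch file above uses qhd (960x540). This is a reasonable middle ground: hd (1920x1080) gives more detail but means more pixels to process for every frame.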

The features used by the system can also be changed in this file:

SIFT, SIFTGPU, SURF, SURF128 (extended SURF), ORB.

3. Finally, run RGBDSLAMv2 in real time with the Kinect v2:

Terminal 1

roslaunch rgbdslam rgbdslam_kinect2.launch

Terminal 2

roslaunch kinect2_bridge kinect2_bridge.launch

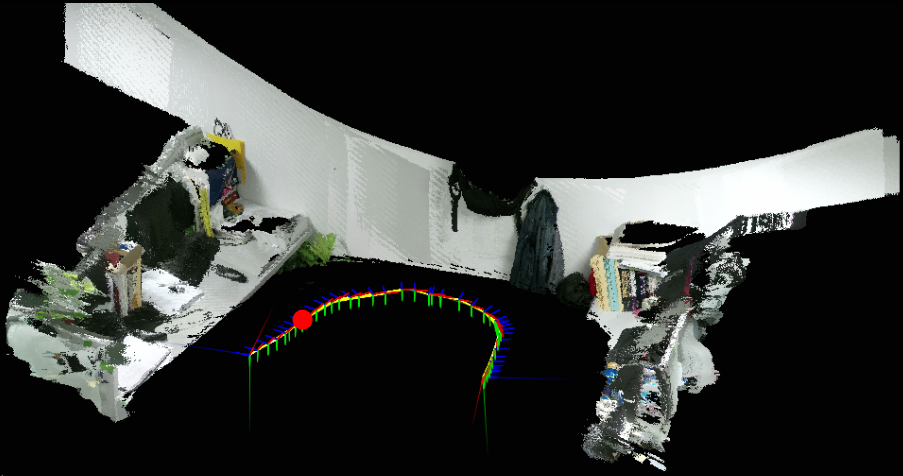

Move the Kinect v2 slowly to watch the 3D point cloud being reconstructed in real time. Screenshots of my own reconstruction:

Figure 1 shows a panoramic 3D point cloud of a lab workstation;

Figure 2 shows a side view at the red dot in the panorama.

Figure 1. Panoramic 3D point cloud of a lab workstation

Figure 2. Side view at the red dot in the panorama