Before the holidays, I plan to use WebMagic, a Java crawler framework, to crawl sites such as cnblogs (博客园), Zhihu, CSDN, Juejin, and GitHub.

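First, an introduction to WebMagic:

WebMagic is a simple, flexible Java crawler framework. With it, you can quickly build a crawler that is efficient and easy to maintain.

Features:

- A simple API that is quick to pick up

- A modular structure that is easy to extend

- Support for multithreading and distributed crawling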

WebMagic's flow diagram:

Crawling cnblogs with WebMagic

The test project is set up as a Maven project.

Dependencies configured in pom.xml:

    <?xml version="1.0" encoding="UTF-8"?>
    <project xmlns="http://maven.apache.org/POM/4.0.0"
             xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
             xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
      <modelVersion>4.0.0</modelVersion>

      <groupId>com.zyk</groupId>
      <artifactId>CnBlog</artifactId>
      <version>1.0-SNAPSHOT</version>

      <name>CnBlog</name>
      <!-- FIXME change it to the project's website -->
      <url>http://www.example.com</url>

      <properties>
        <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
        <maven.compiler.source>1.7</maven.compiler.source>
        <maven.compiler.target>1.7</maven.compiler.target>
      </properties>

      <dependencies>
        <dependency>
          <groupId>junit</groupId>
          <artifactId>junit</artifactId>
          <version>4.11</version>
          <scope>test</scope>
        </dependency>
        <dependency>
          <groupId>us.codecraft</groupId>
          <artifactId>webmagic-core</artifactId>
          <version>0.6.1</version>
        </dependency>
        <dependency>
          <groupId>us.codecraft</groupId>
          <artifactId>webmagic-extension</artifactId>
          <version>0.6.1</version>
        </dependency>
        <dependency>
          <groupId>mysql</groupId>
          <artifactId>mysql-connector-java</artifactId>
          <version>8.0.13</version>
        </dependency>
      </dependencies>

      <build>
        <pluginManagement><!-- lock down plugins versions to avoid using Maven defaults (may be moved to parent pom) -->
          <plugins>
            <!-- clean lifecycle, see https://maven.apache.org/ref/current/maven-core/lifecycles.html#clean_Lifecycle -->
            <plugin>
              <artifactId>maven-clean-plugin</artifactId>
              <version>3.1.0</version>
            </plugin>
            <!-- default lifecycle, jar packaging: see https://maven.apache.org/ref/current/maven-core/default-bindings.html#Plugin_bindings_for_jar_packaging -->
            <plugin>
              <artifactId>maven-resources-plugin</artifactId>
              <version>3.0.2</version>
            </plugin>
            <plugin>
              <artifactId>maven-compiler-plugin</artifactId>
              <version>3.8.0</version>
            </plugin>
            <plugin>
              <artifactId>maven-surefire-plugin</artifactId>
              <version>2.22.1</version>
            </plugin>
            <plugin>
              <artifactId>maven-jar-plugin</artifactId>
              <version>3.0.2</version>
            </plugin>
            <plugin>
              <artifactId>maven-install-plugin</artifactId>
              <version>2.5.2</version>
            </plugin>
            <plugin>
              <artifactId>maven-deploy-plugin</artifactId>
              <version>2.8.2</version>
            </plugin>
            <!-- site lifecycle, see https://maven.apache.org/ref/current/maven-core/lifecycles.html#site_Lifecycle -->
            <plugin>
              <artifactId>maven-site-plugin</artifactId>
              <version>3.7.1</version>
            </plugin>
            <plugin>
              <artifactId>maven-project-info-reports-plugin</artifactId>
              <version>3.0.0</version>
            </plugin>
          </plugins>
        </pluginManagement>
      </build>
    </project>

Core code:

    package com.zyk.test;

    import com.zyk.dao.CnblogsDao;
    import com.zyk.dao.CnblogsDaoImpl;
    import com.zyk.entity.Cnblogs;
    import us.codecraft.webmagic.Page;
    import us.codecraft.webmagic.Site;
    import us.codecraft.webmagic.Spider;
    import us.codecraft.webmagic.processor.PageProcessor;

    import java.util.concurrent.atomic.AtomicInteger;

    public class BlogPageProcessor implements PageProcessor {

        private Site site = Site.me().setRetryTimes(10).setSleepTime(1000);

        // process() runs on many crawler threads, so the counter must be atomic.
        private static final AtomicInteger num = new AtomicInteger();

        private final CnblogsDao cnblogsDao = new CnblogsDaoImpl();

        public static void main(String[] args) throws Exception {
            System.out.println("======== zyk's crawler [started] ========");
            long startTime = System.currentTimeMillis();
            Spider.create(new BlogPageProcessor())
                    .addUrl("https://www.cnblogs.com/cate/108698/")
                    .thread(200)
                    .run();
            long endTime = System.currentTimeMillis();
            System.out.println("======== zyk's crawler [finished] ========");
            System.out.println("Crawled " + num.get() + " blog posts in "
                    + (endTime - startTime) / 1000 + "s");
        }

        @Override
        public void process(Page page) {
            if (page.getUrl().regex("https://www.cnblogs.com/cate/108698/.*").match()) {
                // List page: queue every post link (*.html) and every pager link.
                page.addTargetRequests(page.getHtml().xpath("//div[@id='post_list']")
                        .links().regex("^(.*\\.html)$").all());
                page.addTargetRequests(page.getHtml().xpath("//div[@class='pager']")
                        .links().all());
            } else {
                // Post page: extract the fields and save them.
                try {
                    Cnblogs cnblogs = new Cnblogs();
                    // URL
                    String url = page.getHtml().xpath("//a[@id='cb_post_title_url']/@href").get();
                    // Title
                    String title = page.getHtml().xpath("//a[@id='cb_post_title_url']/text()").toString();
                    // Author
                    String author = page.getHtml().xpath("//a[@id='Header1_HeaderTitle']/text()").toString();
                    // Publication time
                    String time = page.getHtml().xpath("//span[@id='post-date']/text()").toString();
                    // Comment count
                    String comment = page.getHtml().xpath("//span[@id='stats_comment_count']/text()").get();
                    // View count
                    String view = page.getHtml()
                            .xpath("//div[@class='postDesc']/span[@id='post_view_count']/text()").toString();

                    System.out.println(comment);
                    cnblogs.setUrl(url);
                    cnblogs.setTitle(title);
                    cnblogs.setAuthor(author);
                    cnblogs.setTime(time);
                    // Fall back to a zero count when the page has no comment counter.
                    // (The original called setComment(comment) again after this
                    // check, which overwrote the default with null.)
                    if (comment == null) {
                        cnblogs.setComment("评论 - 0");
                    } else {
                        cnblogs.setComment(comment);
                    }
                    cnblogs.setView(view);
                    num.incrementAndGet();
                    cnblogsDao.saveBlog(cnblogs);
                } catch (Exception e) {
                    e.printStackTrace();
                }
            }
        }

        @Override
        public Site getSite() {
            return this.site;
        }
    }
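The branching in process() depends on two regular expressions: one recognises category (list) pages, the other keeps only links ending in .html. The sketch below reproduces that routing with plain java.util.regex so it can be tried without WebMagic. It is only an approximation: the class name UrlRoutingSketch, its method names, and the sample URLs are made up for illustration, and it assumes WebMagic's regex() behaves like Matcher.find(). Note that in Java source the literal dot has to be written as `\\.`.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.regex.Pattern;

// Hypothetical helper, not part of the original project or of WebMagic.
public class UrlRoutingSketch {

    // Same list-page pattern as in process().
    private static final Pattern LIST_PAGE =
            Pattern.compile("https://www.cnblogs.com/cate/108698/.*");

    // Same post-link pattern as in process(): the whole link must end in ".html".
    private static final Pattern POST_LINK = Pattern.compile("^(.*\\.html)$");

    // True when the URL should be treated as a category (list) page.
    public static boolean isListPage(String url) {
        return LIST_PAGE.matcher(url).find();
    }

    // Keeps only the links that look like individual posts.
    public static List<String> postLinks(List<String> links) {
        List<String> result = new ArrayList<String>();
        for (String link : links) {
            if (POST_LINK.matcher(link).find()) {
                result.add(link);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        // Sample URLs are invented for the demonstration.
        List<String> links = Arrays.asList(
                "https://www.cnblogs.com/someuser/p/12345.html",
                "https://www.cnblogs.com/cate/108698/2");
        for (String link : links) {
            System.out.println(link + " -> list page? " + isListPage(link));
        }
        System.out.println("post links: " + postLinks(links));
    }
}
```

Keeping the two patterns in one place like this makes it easier to check which URLs will be followed before starting a real crawl.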

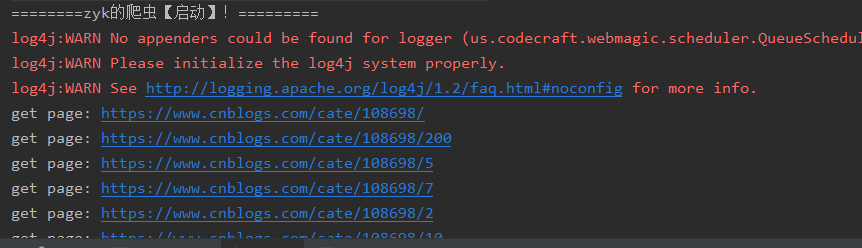

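Console output from the test run of the Java crawler:

You can see that pages are being fetched.

The red warnings above appear because log4j has not been configured to output logs.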
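One way to get rid of that warning is to add a log4j configuration file to the classpath. The fragment below is a minimal sketch, assuming the project logs through log4j 1.x (the post does not show the dependency tree, so this is an assumption) and that the file is saved as src/main/resources/log4j.properties. The log level and output pattern are illustrative choices.

```properties
# Send INFO and above to the console.
log4j.rootLogger=INFO, stdout

log4j.appender.stdout=org.apache.log4j.ConsoleAppender
log4j.appender.stdout.layout=org.apache.log4j.PatternLayout
log4j.appender.stdout.layout.ConversionPattern=%d{yyyy-MM-dd HH:mm:ss} %-5p %c{1}:%L - %m%n
```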