1. MSE (Mean Squared Error)

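MSE is the expected value of the squared difference between the true value and the predicted (estimated) value. Over m samples it is computed as: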

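$$\mathrm{MSE} = \frac{1}{m}\sum_{i=1}^{m}\left(y_i - y'_i\right)^2$$

The larger the result, the worse the prediction, i.e. the further the predictions y' are from the true values y.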
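To make the formula concrete, here is a minimal plain-Python sketch (no TensorFlow) that computes MSE directly from the definition. The function name `mse` and the sample values are hypothetical and chosen only so the arithmetic is easy to check by hand.

```python
def mse(y_true, y_pred):
    """Mean squared error: average of (y_i - y'_i)^2 over m samples."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    m = len(y_true)
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / m

# Squared errors are 0, 0 and 4, so MSE = 4/3
print(mse([1.0, 2.0, 3.0], [1.0, 2.0, 5.0]))  # 1.3333333333333333
# Every prediction is off by 2, so each squared error is 4 and MSE = 4.0
print(mse([1.0, 2.0, 3.0], [3.0, 0.0, 5.0]))  # 4.0
```

The second call has larger errors and therefore a larger MSE, matching the statement that a larger result means a worse prediction.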

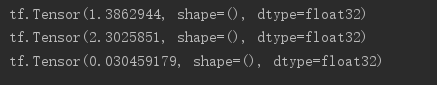

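```python
import tensorflow as tf

# Labels for 5 samples over 4 classes
y = tf.constant([1, 2, 3, 0, 2])
y = tf.one_hot(y, depth=4)          # shape [5, 4]
y = tf.cast(y, dtype=tf.float32)
out = tf.random.normal([5, 4])      # stand-in for model predictions

# Standard MSE definition: mean of the squared differences
loss1 = tf.reduce_mean(tf.square(y - out))
# Same value via the L2 norm: ||y - out||^2 divided by the number of elements
loss2 = tf.square(tf.norm(y - out)) / (5 * 4)
# Built-in MSE (averages over the last axis), then mean over the batch
loss3 = tf.reduce_mean(tf.losses.MSE(y, out))

print(loss1)
print(loss2)
print(loss3)
```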
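Why do the three TensorFlow losses print the same value? The following plain-Python sketch mirrors the three formulations on a small fixed matrix (the values of `y` and `out` are hypothetical stand-ins for the one-hot labels and the random predictions). The per-sample mean followed by a batch mean equals the overall mean only because every row has the same number of elements.

```python
import math

# Hypothetical fixed data standing in for one-hot labels and predictions
y = [[0, 1, 0, 0],
     [0, 0, 1, 0]]
out = [[0.1, 0.7, 0.1, 0.1],
       [0.5, 0.2, 0.2, 0.1]]

diffs = [[a - b for a, b in zip(row_y, row_o)] for row_y, row_o in zip(y, out)]
n = sum(len(row) for row in diffs)

# 1) Mean of squared differences over all elements (like loss1)
loss1 = sum(d * d for row in diffs for d in row) / n

# 2) Squared L2 norm divided by the element count (like loss2)
l2 = math.sqrt(sum(d * d for row in diffs for d in row))
loss2 = l2 ** 2 / n

# 3) Per-sample MSE over the last axis, then mean over samples (like loss3)
per_sample = [sum(d * d for d in row) / len(row) for row in diffs]
loss3 = sum(per_sample) / len(per_sample)

print(loss1, loss2, loss3)  # equal up to floating-point rounding
```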

2. Cross Entropy Loss

Before looking at cross entropy, let's first introduce entropy, which is computed as:

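$$H(P) = -\sum_i P(i)\log P(i)$$

Entropy measures the uncertainty of a distribution. The smaller the entropy, the more concentrated the probability mass and the less uncertain (less surprising) the outcome; a uniform distribution has the largest entropy.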

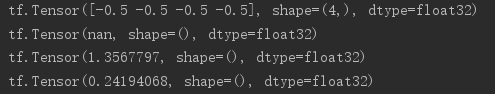

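```python
import tensorflow as tf

# Entropy (in bits) of a uniform distribution over 4 outcomes
a = tf.fill([4], 0.25)
print(a * tf.math.log(a) / tf.math.log(2.))   # per-term p*log2(p)
entropy = -tf.reduce_sum(a * tf.math.log(a) / tf.math.log(2.))
print(entropy)   # 2 bits, the maximum for 4 outcomes

# More concentrated distributions have lower entropy
a = tf.constant([0.1, 0.1, 0.1, 0.7])
entropy = -tf.reduce_sum(a * tf.math.log(a) / tf.math.log(2.))
print(entropy)

a = tf.constant([0.01, 0.01, 0.01, 0.97])
entropy = -tf.reduce_sum(a * tf.math.log(a) / tf.math.log(2.))
print(entropy)
```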
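The TensorFlow snippet computes `p * log(p)` for every entry, so a distribution containing a zero probability (such as a one-hot vector) would produce `0 * -inf = NaN`. A minimal plain-Python sketch that uses the usual convention 0 · log 0 = 0 looks like this; the helper name `entropy_bits` is hypothetical.

```python
import math

def entropy_bits(p):
    """Shannon entropy -sum p_i * log2(p_i), treating 0 * log 0 as 0."""
    return sum(-x * math.log2(x) for x in p if x > 0)

for dist in ([0.25, 0.25, 0.25, 0.25],
             [0.1, 0.1, 0.1, 0.7],
             [0.01, 0.01, 0.01, 0.97],
             [0.0, 1.0, 0.0, 0.0]):
    print(dist, entropy_bits(dist))
```

The printed values fall from 2 bits (uniform) to roughly 1.36 and 0.24 bits, and reach 0 for the one-hot distribution, which has no uncertainty at all.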

Cross entropy measures how much two probability distributions differ. It is computed as:

$$H(p,q) = -\sum_x p(x)\log q(x)$$
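A direct plain-Python transcription of this formula is sketched below, using the natural log (the choice of log base only changes the unit, nats instead of bits). The helper name `cross_entropy` and the example distributions are hypothetical; terms where p(x) = 0 are skipped because they contribute nothing.

```python
import math

def cross_entropy(p, q):
    """H(p, q) = -sum p(x) * log q(x), natural log; terms with p(x) == 0 are skipped."""
    return sum(-px * math.log(qx) for px, qx in zip(p, q) if px > 0)

p = [0.5, 0.5]
ce_same = cross_entropy(p, [0.5, 0.5])   # q equals p: ln 2, about 0.693
ce_diff = cross_entropy(p, [0.9, 0.1])   # q differs from p: about 1.204
print(ce_same, ce_diff)
```

The cross entropy grows as q moves away from p, which is what makes it usable as a loss.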

It can also be written as:

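$$H(p,q) = H(p) + D_{KL}(p\,\|\,q)$$

where $D_{KL}(p\,\|\,q)$ is the KL divergence, which measures how far q is from p. It is often described as a distance, but it is not a true metric because it is not symmetric.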

When p = q, $D_{KL}(p\,\|\,q) = 0$, so $H(p,q) = H(p)$.
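The decomposition can be checked numerically. The sketch below implements entropy, cross entropy and KL divergence from their definitions (natural log; the helper names and the distributions `p` and `q` are hypothetical) and confirms that H(p, q) = H(p) + D_KL(p || q), that D_KL(p || p) = 0, and that D_KL is not symmetric.

```python
import math

def entropy(p):
    return sum(-x * math.log(x) for x in p if x > 0)

def cross_entropy(p, q):
    return sum(-px * math.log(qx) for px, qx in zip(p, q) if px > 0)

def kl_divergence(p, q):
    """D_KL(p || q) = sum p(x) * log(p(x) / q(x))."""
    return sum(px * math.log(px / qx) for px, qx in zip(p, q) if px > 0)

p = [0.2, 0.5, 0.3]
q = [0.1, 0.6, 0.3]

lhs = cross_entropy(p, q)
rhs = entropy(p) + kl_divergence(p, q)
print(lhs, rhs)                                   # equal up to rounding
print(kl_divergence(p, p))                        # 0.0, so H(p, p) == H(p)
print(kl_divergence(p, q), kl_divergence(q, p))   # different values
```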

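When p is one-hot encoded, its entropy is zero, e.g. $H([0,1,0]) = -1\cdot\log 1 = 0$. Therefore $H([0,1,0],[q_0,q_1,q_2]) = 0 + D_{KL}(p\,\|\,q) = -\log q_1$: only the predicted probability of the true class matters.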
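A quick numeric check of the one-hot shortcut, assuming a hypothetical predicted distribution `q`: the full cross-entropy sum and `-log(q[1])` give the same number, because every term with a zero target drops out.

```python
import math

def cross_entropy(p, q):
    return sum(-px * math.log(qx) for px, qx in zip(p, q) if px > 0)

p = [0.0, 1.0, 0.0]          # one-hot target, true class index 1
q = [0.2, 0.7, 0.1]          # hypothetical predicted distribution

full = cross_entropy(p, q)
shortcut = -math.log(q[1])   # only the true-class probability is used
print(full, shortcut)        # both about 0.357
```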

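```python
import tensorflow as tf

# True class is index 1; compare three predicted distributions
loss1 = tf.losses.categorical_crossentropy([0., 1., 0., 0.], [0.25, 0.25, 0.25, 0.25])  # uncertain
loss2 = tf.losses.categorical_crossentropy([0., 1., 0., 0.], [0.1, 0.1, 0.7, 0.1])      # confident but wrong
loss3 = tf.losses.categorical_crossentropy([0., 1., 0., 0.], [0.01, 0.97, 0.01, 0.01])  # confident and right
print(loss1)
print(loss2)
print(loss3)
```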
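Because the target is one-hot, each of these losses reduces to `-ln(q[1])`, so the TensorFlow results can be reproduced by hand. The plain-Python sketch below does exactly that. As far as I know, Keras uses the natural log here and also rescales predictions to sum to 1 and clips them away from 0 and 1 by a small epsilon; for these inputs that should not change the result noticeably, so the printed values should closely match the TensorFlow output (about 1.386, 2.303 and 0.030).

```python
import math

target = [0, 1, 0, 0]   # one-hot label, true class index 1
predictions = [
    [0.25, 0.25, 0.25, 0.25],
    [0.1, 0.1, 0.7, 0.1],
    [0.01, 0.97, 0.01, 0.01],
]

k = target.index(1)                                # index of the true class
losses = [-math.log(q[k]) for q in predictions]    # -ln(probability of true class)
for q, loss in zip(predictions, losses):
    print(q, loss)
```

The ordering is the point: putting high probability on the wrong class costs the most, and a confident correct prediction costs almost nothing.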