import re
import urllib.error
import urllib.request

import xlwt
from bs4 import BeautifulSoup

baseurl = "https://movie.douban.com/top250?start="
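
# Sketch (illustrative, not part of the original scraper): the list is paginated
# through the "start" query parameter, 25 movies per page, so the 10 page URLs that
# getData() requests end in start=0, 25, ..., 225.
# page_urls is a hypothetical helper name used only for this illustration.
def page_urls(base, pages=10, per_page=25):
    """Return the URL of every list page by appending start offsets to base."""
    return [base + str(i * per_page) for i in range(pages)]
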

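# Regex for the movie detail link: starts with <a href=", captures a group (.*?), ends with ">
findLink = re.compile(r'<a href="(.*?)">')

# Regex for the poster image URL: <img, then any characters (.*), then src=", capturing (.*?)
# up to the closing quote; re.S lets "." also match newlines
findImgSrc = re.compile(r'<img.*src="(.*?)"', re.S)

# Regex for the movie title: <span class="title"> + (.*?) + </span>
findTitle = re.compile(r'<span class="title">(.*?)</span>')

# Regex for the rating: <span class="rating_num" property="v:average"> + (.*?) + </span>
findRating = re.compile(r'<span class="rating_num" property="v:average">(.*?)</span>')

# Regex for the number of ratings, version 1 (superseded): <span> + (.*?) + </span>
# findJudge = re.compile(r'<span>(.*?)</span>')

# Regex for the number of ratings, version 2: <span> + one or more digits (\d+) + 人评价</span>
# ("人评价", "people rated", is literal text on the Chinese page, so it stays in the pattern)
findJudge = re.compile(r'<span>(\d+)人评价</span>')

# Regex for the one-line summary: <span class="inq"> + (.*?) + </span>
findInq = re.compile(r'<span class="inq">(.*?)</span>')

# Regex for the details paragraph (director, cast, year, ...): <p class=""> + (.*?) + </p>,
# matched across newlines
findBd = re.compile(r'<p class="">(.*?)</p>', re.S)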
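
# Sketch (illustrative, not part of the original scraper): applying the field
# patterns above to a small hand-written snippet. SAMPLE_ITEM is hypothetical,
# simplified markup shaped like what the patterns expect, not a copy of the live
# page, and extract_fields is a hypothetical helper name. The last check in the
# usage shows why the digit class must be written \d: without the backslash,
# (d*) only matches the letter "d", so findall returns nothing and [0] would fail.
import re

SAMPLE_ITEM = (
    '<span class="title">示例电影</span>'
    '<span class="title">\xa0/\xa0Example Movie</span>'
    '<span class="rating_num" property="v:average">9.0</span>'
    '<span>123456人评价</span>'
    '<span class="inq">示例概况。</span>'
)


def extract_fields(item):
    """Return (titles, rating, rating count, summary or None) from one item string."""
    titles = re.findall(r'<span class="title">(.*?)</span>', item)
    rating = re.findall(r'<span class="rating_num" property="v:average">(.*?)</span>', item)[0]
    judge = re.findall(r'<span>(\d+)人评价</span>', item)[0]
    inq = re.findall(r'<span class="inq">(.*?)</span>', item)
    return titles, rating, judge, (inq[0] if inq else None)
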

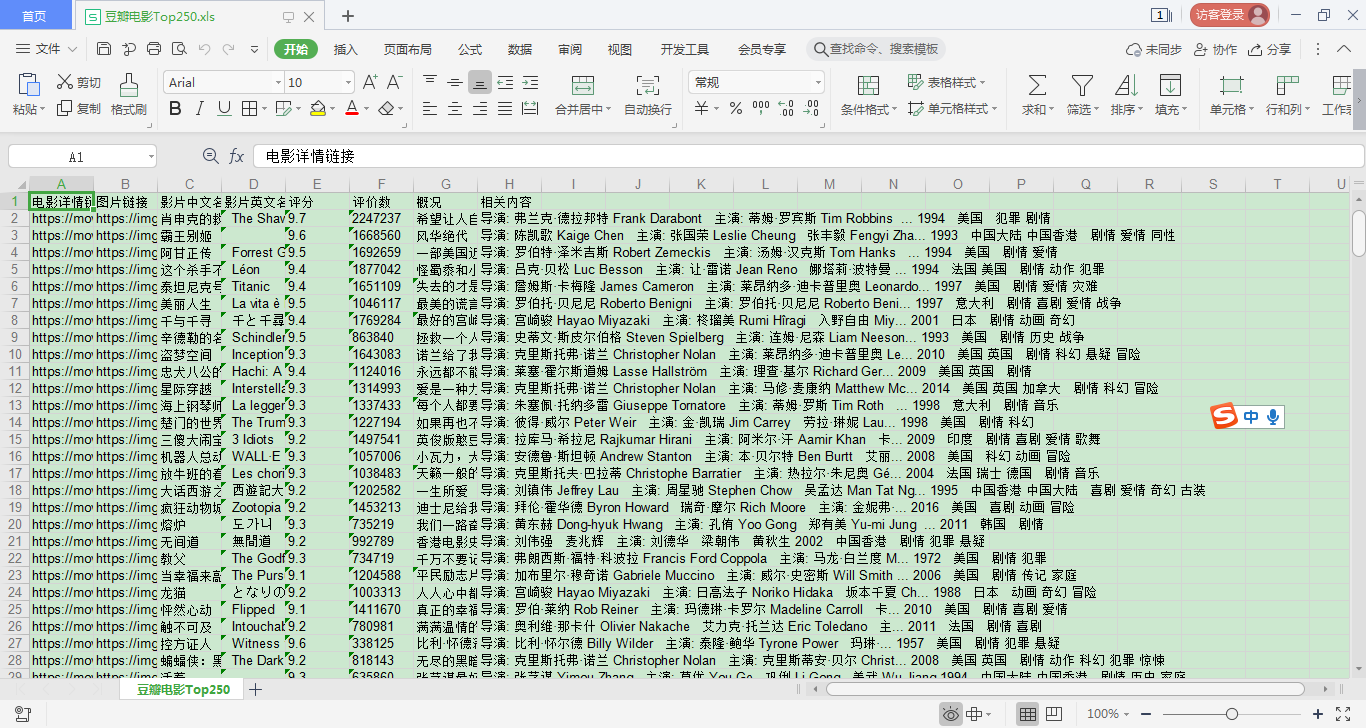

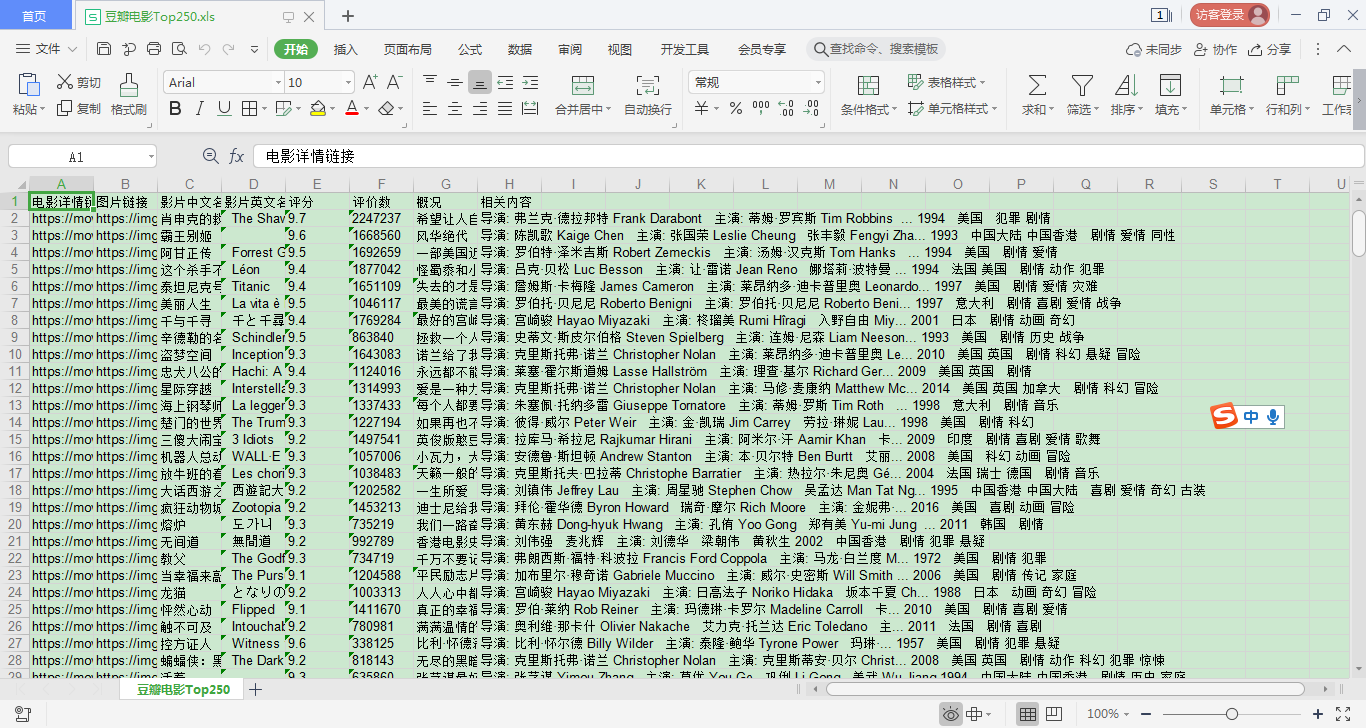

def main():
    # Fetch and parse the web pages
    datalist = getData(baseurl)
    # Save the data
    savepath = "douban_movie_top250.xls"
    saveData(datalist, savepath)

def getData(baseurl):
    """Fetch and parse all 10 pages of the Top 250 list (25 movies per page)."""
    datalist = []
    for i in range(0, 10):
        url = baseurl + str(i * 25)
        html = askURL(url)
        # Parse the page
        soup = BeautifulSoup(html, "html.parser")
        # Find every <div> whose class attribute is "item" (one per movie)
        for item in soup.find_all("div", class_="item"):
            # Fields of one movie
            data = []
            # Convert the whole tag to a string so the regexes can run on it
            item = str(item)

            # Detail page link
            link = re.findall(findLink, item)[0]
            data.append(link)
            # Poster image URL
            img = re.findall(findImgSrc, item)[0]
            data.append(img)

            # Titles: the first is the Chinese title; a second one, if present,
            # is the foreign-language title
            title = re.findall(findTitle, item)
            if len(title) == 2:
                ctitle = title[0]
                data.append(ctitle)
                # Drop the "/" separator and any surrounding whitespace
                otitle = title[1].replace("/", "").strip()
                data.append(otitle)
            else:
                data.append(title[0])
                # No foreign title: fill the column with a blank
                data.append(" ")

            # Rating
            rating = re.findall(findRating, item)[0]
            data.append(rating)
            # Number of ratings
            judge = re.findall(findJudge, item)[0]
            data.append(judge)

            # One-line summary; some movies have none
            inq = re.findall(findInq, item)
            if len(inq) != 0:
                # Remove the Chinese full stop "。"
                inq = inq[0].replace("。", "")
                data.append(inq)
            else:
                data.append(" ")

            # Details (director, cast, year, ...)
            bd = re.findall(findBd, item)[0]
            # Replace each <br/> (optional whitespace before "/>" and after the tag) with one space
            bd = re.sub(r'<br(\s+)?/>(\s+)?', ' ', bd)
            # Replace the "/" separators with spaces
            bd = re.sub('/', ' ', bd)
            data.append(bd.strip())

            datalist.append(data)
    return datalist
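
# Sketch (illustrative, not part of the original scraper): the two string
# clean-ups getData() performs, pulled out into hypothetical helpers so they can be
# tried on sample strings. The inputs are assumptions about how the fields look
# after str(item): BeautifulSoup serialises <br> as <br/>, and &nbsp; is decoded
# to the non-breaking space character "\xa0".
import re


def clean_other_title(raw):
    """Drop the '/' separator, then strip surrounding whitespace (including \\xa0)."""
    return raw.replace("/", "").strip()


def clean_bd(raw):
    """Turn each <br/> (plus following whitespace) and each '/' into a space."""
    text = re.sub(r'<br(\s+)?/>(\s+)?', ' ', raw)
    text = re.sub('/', ' ', text)
    return text.strip()
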

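def saveData(datalist, savepath):
    print("Saving...")
    # Create the workbook; style_compression=0 turns style compression off
    book = xlwt.Workbook(encoding="utf-8", style_compression=0)
    # Add a worksheet; cell_overwrite_ok=True allows a cell to be written more than once
    sheet = book.add_sheet("Douban Movie Top250", cell_overwrite_ok=True)
    # Column headers
    col = ("Detail link", "Image link", "Chinese title", "Foreign title",
           "Rating", "Number of ratings", "Summary", "Details")
    for i, name in enumerate(col):
        sheet.write(0, i, name)
    # One row per movie below the header row; iterate over what was actually
    # scraped instead of assuming exactly 250 rows
    for i, data in enumerate(datalist):
        print("Row %d" % (i + 1))
        for j, value in enumerate(data):
            sheet.write(i + 1, j, value)
    book.save(savepath)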
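
# Sketch (illustrative alternative, not part of the original scraper): xlwt writes
# only the legacy Excel .xls format. If that is not wanted, the same header and rows
# could be written with the standard-library csv module instead. save_csv is a
# hypothetical helper name; the header follows the same column order as saveData().
# For a real file, open it with open(path, "w", newline="", encoding="utf-8-sig").
import csv


def save_csv(datalist, fileobj):
    """Write the header row, then one row per movie, to an open text file object."""
    writer = csv.writer(fileobj)
    writer.writerow(["Detail link", "Image link", "Chinese title", "Foreign title",
                     "Rating", "Number of ratings", "Summary", "Details"])
    writer.writerows(datalist)
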

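# Fetch the HTML of the given URL
def askURL(url):
    # Pretend to be a browser: send a browser User-Agent header
    # (requests carrying urllib's default User-Agent may be refused)
    headers = {
        "user-agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/76.0.3809.132 Safari/537.36"}
    # Wrap the URL and headers in a Request object
    request = urllib.request.Request(url, headers=headers)
    html = ""
    try:
        response = urllib.request.urlopen(request)
        html = response.read().decode("utf-8")
    except urllib.error.URLError as e:
        # HTTPError (a subclass of URLError) carries an HTTP status code
        if hasattr(e, "code"):
            print(e.code)
        if hasattr(e, "reason"):
            print(e.reason)
    return html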
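
# Sketch (illustrative, not part of the original scraper): what askURL() builds
# before any network access. urllib.request.Request stores header names via
# str.capitalize(), so "user-agent" is kept as "User-agent". build_request is a
# hypothetical helper name; no request is sent here.
import urllib.request


def build_request(url, user_agent):
    """Return a GET Request for url carrying the given User-Agent header."""
    return urllib.request.Request(url, headers={"user-agent": user_agent})
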

if __name__ == '__main__':
    main()
    print("Saved successfully")