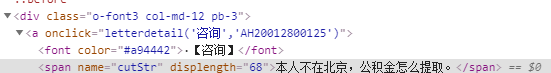

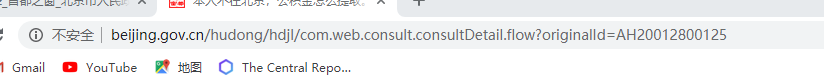

通过这几天的学习,发现有些网页的爬取比较简单,比如小说,但是其他爬取北京市政百姓信件这个网页并没有想象中那么简单,在翻页的时候,网址并没有发生改变,后来通过询问同学,了解了ajax技术,通过scrapy框架和json来进行爬取,首先信件列表网页找到详细页面的url地址,发现网页中并没有完整的url地址,但是地址的区别主要是在后面,后面的数字是在网页中a标签的onclick属性中,获取了这串数字就可以找到具体的网页地址,之后在利用xpath进行信件相关内容的爬取。

# -*- coding: utf-8 -*-

import json

import random

import string

import scrapy

class XinjianSpider(scrapy.Spider):

name = 'xinjian'

allowed_domains = ['www.beijing.gov.cn']

# custome_setting可用于自定义每个spider的设置,而setting.py中的都是全局属性的,当你的

# scrapy工程里有多个spider的时候这个custom_setting就显得很有用了

custom_settings = {

"DEFAULT_REQUEST_HEADERS": {

'authority': 'www.beijing.gov.cn',

# 请求报文可通过一个“Accept”报文头属性告诉服务端 客户端接受什么类型的响应。

'accept': 'application/json, text/javascript, */*; q=0.01',

# 指定客户端可接受的内容编码

'accept-encoding': 'gzip, deflate',

# 指定客户端可接受的语言类型

'accept-language': 'zh-CN,zh;q=0.9',

'Connection': 'keep-alive', # 就是告诉服务器我参数内容的类型,该项会影响传递是from data还是payload传递

'Content-Type': 'text/json',

# 跨域的时候get,post都会显示origin,同域的时候get不显示origin,post显示origin,说明请求从哪发起,仅仅包括协议和域名

'origin': 'http://www.beijing.gov.cn',

# 表示这个请求是从哪个URL过来的,原始资源的URI

'referer': 'http://www.beijing.gov.cn/hudong/hdjl/com.web.search.mailList.flow',

# 设置请求头信息User-Agent来模拟浏览器

'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/79.0.3945.130 Safari/537.36',

'x-requested-with': 'XMLHttpRequest',

# cookie也是报文属性,传输过去

'cookie': 'HDJLJSID=39DBD6D5E12B9F0F8834E297FAFC973B; __jsluid_h=e6e550159f01ae9aceff30d191b09911; sensorsdata2015jssdkcross=%7B%22distinct_id%22%3A%2216f9edc47471cb-0059c45dfa78d6-c383f64-1049088-16f9edc474895%22%7D; _gscu_564121711=80128103kc5dx617; X-LB=1.1.44.637df82f; _va_ref=%5B%22%22%2C%22%22%2C1580462724%2C%22https%3A%2F%2Fwww.baidu.com%2Flink%3Furl%3DM-f5ankfbAnnYIH43aTQ0bvcFij9-hVxwm64pCc6rhCu5DYwg6xEVis-OVjqGinh%26wd%3D%26eqid%3Dd6b151bf000cfb36000000025e1c5d84%22%5D; _va_ses=*; route=74cee48a71a9ef78636a55b3fa493f67; _va_id=b24752d801da28d7.1578917255.10.1580462811.1580450943.',

}

}

# 需要重写start_requests方法

def start_requests(self):

# 网页里ajax链接

url = "http://www.beijing.gov.cn/hudong/hdjl/com.web.search.mailList.mailList.biz.ext"

# 所有请求集合

requests = []

for i in range(0, 33750, 1000):

random_random = random.random()

# 封装post请求体参数

my_data = {'PageCond/begin': i, 'PageCond/length': 1000, 'PageCond/isCount': 'true', 'keywords': '',

'orgids': '', 'startDate': '', 'endDate': '', 'letterType': '', 'letterStatue': ''}

# 模拟ajax发送post请求

request = scrapy.http.Request(url, method='POST',

callback=self.parse_model,

body=json.dumps(my_data),

encoding='utf-8')

requests.append(request)

return requests

def parse_model(self, response):

# 可以利用json库解析返回来得数据

jsonBody = json.loads(response.body)

print(jsonBody)

size = jsonBody['PageCond']['size']

data = jsonBody['mailList']

listdata = {}

fb1 = open('suggest.txt' , 'a')

fb2 = open('consult.txt' , 'a')

fb3 = open('complain.txt', 'a')

for i in range(size):

print(i)

listdata['letter_type'] = data[i]['letter_type']

listdata['original_id'] = data[i]['original_id']

if(listdata['letter_type']=="咨询"):

fb2.write(listdata['original_id'])

fb2.write('

')

if (listdata['letter_type'] == "建议"):

fb1.write(listdata['original_id'])

fb1.write('

')

else:

fb3.write(listdata['original_id'])

fb3.write('

')

#listdata['catalog_id'] = str(data[i]['catalog_id'])

#listdata['letter_title'] = data[i]['letter_title']

#listdata['create_date'] = data[i]['create_date']

#listdata['org_id'] = data[i]['org_id']

#listdata['letter_status'] = data[i]['letter_status']

print(listdata)

import random

import re

import requests

from lxml import etree

header = {

'User-Agent':'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/79.0.3945.117 Safari/537.36'

}

def read():

f=open('D://consult.txt','r')

for id in f.readlines():

id = id.strip()

url2 = "http://www.beijing.gov.cn/hudong/hdjl/com.web.consult.consultDetail.flow?originalId="+id

parser(url2)

f.close()

def write(contents):

f2 = open('D://zx3.txt', 'a+')

f2.write(contents.encode("gbk", 'ignore').decode("gbk", "ignore"))

print(contents, '写入成功')

f2.close()

def parser(url):

try:

response=requests.get(url,headers=header,timeout=15)

html=etree.HTML(response.text)

def process_num(num):

num =[re.sub(r"

|

| |xa0| ","",i) for i in num]

num=[i for i in num if len(i)>0]

return num

def process_content(content):

content=[re.sub(r"

|

| |xa0| ","",i)for i in content]

content=[i for i in content if len(i)>0]

return content

def process_jigou(jigou):

jigou=[re.sub(r"

|

| | ","",i)for i in jigou]

jigou=[i for i in jigou if len(i)>0]

return jigou

def process_hfcontent(hfcontent):

hfcontent=[re.sub(r"

|

| |xa0| ","",i)for i in hfcontent]

hfcontent=[i for i in hfcontent if len(i)>0]

return hfcontent

def process_person(person):

person=[re.sub(r"

|

| | ","",i)for i in person]

person=[i for i in person if len(i)>0]

return person

data_list={ }

data_list['type']="咨询"

data_list['title']=html.xpath("//div[@class='col-xs-10 col-sm-10 col-md-10 o-font4 my-2']//text()")

data_list['person']=html.xpath("//div[@class='col-xs-10 col-lg-3 col-sm-3 col-md-4 text-muted ']/text()")[0].lstrip('来信人:')

data_list['person']=process_person(data_list['person'])

data_list['date']=html.xpath("//div[@class='col-xs-5 col-lg-3 col-sm-3 col-md-3 text-muted ']/text()")[0].lstrip('时间:')

data_list['num']=html.xpath("//div[@class='col-xs-4 col-lg-3 col-sm-3 col-md-3 text-muted ']/label/text()")

data_list['num']=process_num(data_list['num'])[0]

data_list['content']=html.xpath("//div[@class='col-xs-12 col-md-12 column p-2 text-muted mx-2']//text()")

data_list['content']=process_content(data_list['content'])

data_list['jigou']=html.xpath("//div[@class='col-xs-9 col-sm-7 col-md-5 o-font4 my-2']/text()")

data_list['jigou']=process_jigou(data_list['jigou'])[0]

data_list['date2']=html.xpath("//div[@class='col-xs-12 col-sm-3 col-md-3 my-2 ']/text()")[0].lstrip('答复时间:')

data_list['hfcontent']=html.xpath("//div[@class='col-xs-12 col-md-12 column p-4 text-muted my-3']//text()")

data_list['hfcontent']=process_hfcontent(data_list['hfcontent'])

print(data_list)

write(data_list['type']+"||")

for i in data_list['title']:

write(i)

write("||")

for i in data_list['person']:

write(i)

write("||"+data_list['date'] + "||")

write(data_list['num'] + "||")

for i in data_list['content']:

write(i)

write("||"+data_list['jigou'] + "||")

write(data_list['date2'] + "||")

for i in data_list['hfcontent']:

write(i)

write("

")

except:

print("投诉爬取失败!")

if __name__=="__main__":

read()

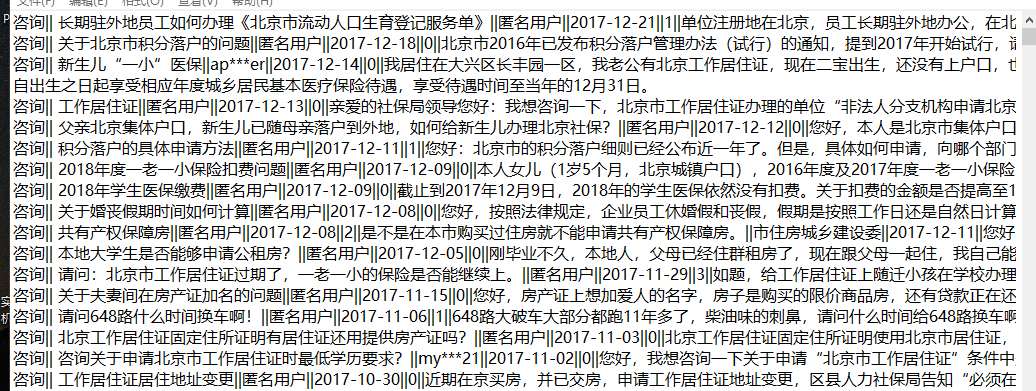

获取的数据: