Container Resource Requests, Resource Limits, and Heapster

Container resource requests and resource limits

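requests: the resource demand, i.e. the guaranteed minimum; the scheduler only places a Pod on a node that can satisfy its requests;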

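limits: the hard upper bound; a container that exceeds its CPU limit is throttled, and one that exceeds its memory limit is OOM-killed;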

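CPU:

1 = one logical CPU

500m = 0.5 CPU (m means millicores, one thousandth of a CPU)

Memory:

Decimal suffixes (powers of 1000): E, P, T, G, M, k

Binary suffixes (powers of 1024): Ei, Pi, Ti, Gi, Mi, Ki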

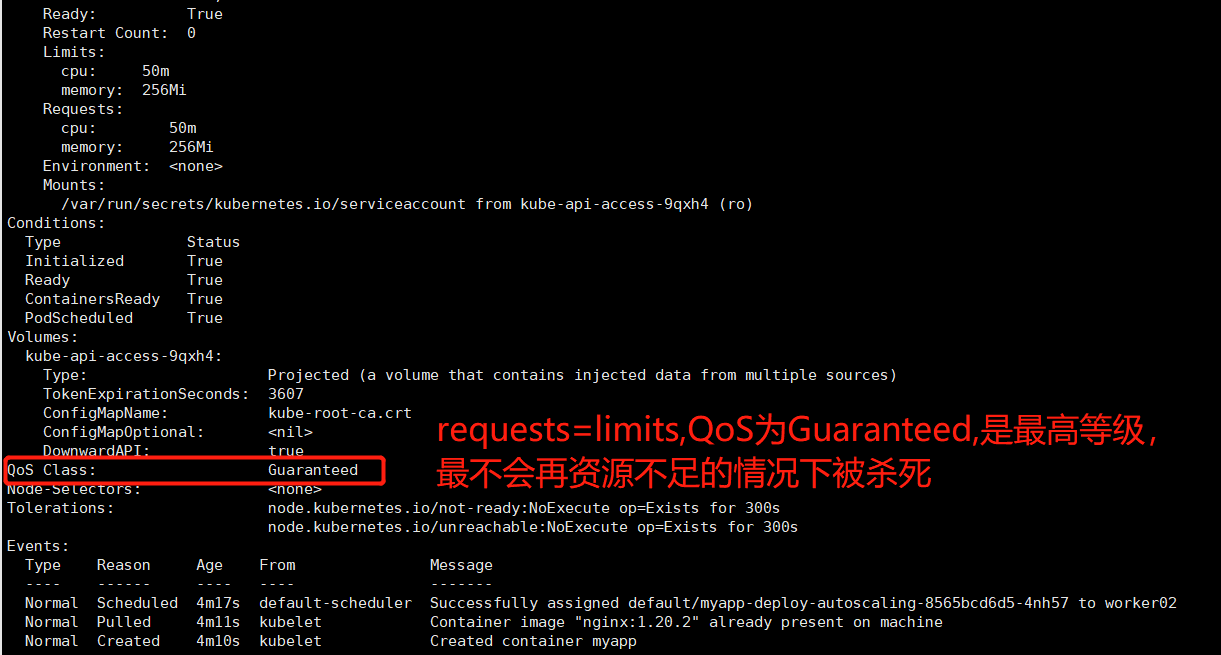
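The following Python sketch makes the unit rules concrete by converting quantity strings to plain numbers. `parse_quantity` is a hypothetical helper, not a Kubernetes API. It does not implement every corner of the real quantity grammar and does not validate input.

```python
from decimal import Decimal

# Multipliers for Kubernetes quantity suffixes (hypothetical helper table).
SUFFIXES = {
    # binary suffixes: powers of 1024
    "Ki": 1024, "Mi": 1024**2, "Gi": 1024**3,
    "Ti": 1024**4, "Pi": 1024**5, "Ei": 1024**6,
    # milli, mostly used for CPU: 500m = 0.5 CPU
    "m": Decimal("0.001"),
    # decimal suffixes: powers of 1000 (kilo is lowercase k)
    "k": 1000, "M": 1000**2, "G": 1000**3,
    "T": 1000**4, "P": 1000**5, "E": 1000**6,
}


def parse_quantity(q: str) -> Decimal:
    """Convert a quantity string such as '500m' or '256Mi' to a plain number."""
    # Check two-character (binary) suffixes before one-character ones,
    # so that '256Mi' is not read as '256M' followed by a stray 'i'.
    for length in (2, 1):
        suffix = q[-length:]
        if len(q) > length and suffix in SUFFIXES:
            return Decimal(q[:-length]) * SUFFIXES[suffix]
    return Decimal(q)  # no suffix: plain number of cores or bytes


if __name__ == "__main__":
    for q in ("500m", "1", "0.15", "256Mi", "256M"):
        print(q, parse_quantity(q))
```

The last two values show that the suffixes are not interchangeable: 256Mi is 268,435,456 bytes, while 256M is 256,000,000 bytes.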

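QoS classes:

Guaranteed: every container in the Pod sets both requests and limits for CPU and memory, and the values are equal:

cpu.limits = cpu.requests

memory.limits = memory.requests

Burstable: the Pod does not qualify as Guaranteed, but at least one container sets a CPU or memory request (or limit).

BestEffort: no container sets any request or limit. This is the lowest priority. When a node runs short of resources, the node reclaims some of them to protect itself, and the lowest-priority Pods are killed first.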

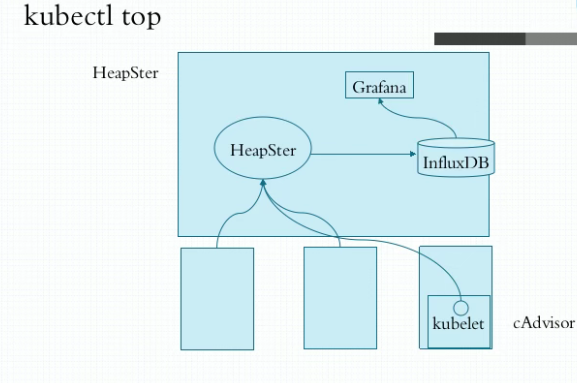
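The three QoS rules can be written as a short function. This is a simplified sketch with several assumptions:

- Containers are plain dicts, a hypothetical shape rather than the Kubernetes API object.
- Only cpu and memory are considered.
- Quantities are compared as strings, so "0.5" and "500m" would wrongly count as different.

```python
RESOURCES = ("cpu", "memory")


def qos_class(containers):
    """Simplified QoS classification for a list of hypothetical container dicts,
    e.g. {"requests": {"cpu": "50m"}, "limits": {"cpu": "50m", "memory": "256Mi"}}."""
    any_set = False
    all_guaranteed = bool(containers)
    for c in containers:
        limits = {r: v for r, v in c.get("limits", {}).items() if r in RESOURCES}
        requests = {r: v for r, v in c.get("requests", {}).items() if r in RESOURCES}
        # A limit without a matching request makes the request default to the limit.
        for r, v in limits.items():
            requests.setdefault(r, v)
        if requests or limits:
            any_set = True
        # Guaranteed needs both a CPU and a memory limit, each equal to its request.
        for r in RESOURCES:
            if r not in limits or requests[r] != limits[r]:
                all_guaranteed = False
    if all_guaranteed:
        return "Guaranteed"
    return "Burstable" if any_set else "BestEffort"
```

For example, the myapp container used later (requests and limits both cpu=50m, memory=256Mi) is classified as Guaranteed.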

Heapster

Up to Kubernetes 1.11, Heapster collected resource usage data inside the cluster. From 1.12 on, Heapster was abandoned and metrics-server is used instead.

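InfluxDB is a time-series database. Here it stores the data collected by cAdvisor (gathered through Heapster) so that the metrics can be displayed continuously over time. Grafana uses InfluxDB as a data source to display the data.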

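Pod resource monitoring broadly covers three categories of metrics:

1. Kubernetes system metrics

2. Container metrics (CPU and memory usage)

3. Application metrics (business metrics, e.g. how many user requests were received, or how many child processes the container started to handle requests)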

Deploy InfluxDB

cat influxDB.yaml

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: monitoring-influxdb
  namespace: kube-system
spec:
  replicas: 1
  selector:
    matchLabels:
      task: monitoring
      k8s-app: influxdb
  template:
    metadata:
      labels:
        task: monitoring
        k8s-app: influxdb
    spec:
      containers:
      - name: influxdb
        image: k8s.gcr.io/heapster-influxdb-amd64:v1.5.2
        volumeMounts:
        - mountPath: /data
          name: influxdb-storage
      volumes:
      - name: influxdb-storage
        emptyDir: {}   # in production, replace with persistent storage
---
apiVersion: v1
kind: Service
metadata:
  labels:
    task: monitoring
    # For use as a Cluster add-on (https://github.com/kubernetes/kubernetes/tree/master/cluster/addons)
    # If you are NOT using this as an addon, you should comment out this line.
    kubernetes.io/cluster-service: 'true'
    kubernetes.io/name: monitoring-influxdb
  # Heapster's sink and Grafana's INFLUXDB_HOST refer to this Service by name
  name: monitoring-influxdb
  namespace: kube-system
spec:
  ports:
  - port: 8086
    targetPort: 8086
  selector:
    k8s-app: influxdb
```

cat rbac.yaml

```yaml
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1   # v1beta1 is no longer served from Kubernetes 1.22
metadata:
  name: heapster
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:heapster
subjects:
- kind: ServiceAccount
  name: heapster
  namespace: kube-system
```

cat heapster.yaml

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: heapster
  namespace: kube-system
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: heapster
  namespace: kube-system
spec:
  replicas: 1
  selector:
    matchLabels:
      task: monitoring
      k8s-app: heapster        # must match the Pod template labels below
  template:
    metadata:
      labels:
        task: monitoring
        k8s-app: heapster
    spec:
      serviceAccountName: heapster
      containers:
      - name: heapster
        image: k8s.gcr.io/heapster-amd64:v1.5.4
        imagePullPolicy: IfNotPresent
        command:
        - /heapster
        - --source=kubernetes:https://kubernetes.default
        - --sink=influxdb:http://monitoring-influxdb.kube-system.svc:8086
---
apiVersion: v1
kind: Service
metadata:
  labels:
    task: monitoring
    # For use as a Cluster add-on (https://github.com/kubernetes/kubernetes/tree/master/cluster/addons)
    # If you are NOT using this as an addon, you should comment out this line.
    kubernetes.io/cluster-service: 'true'
    kubernetes.io/name: Heapster
  name: heapster
  namespace: kube-system
spec:
  ports:
  - port: 80
    targetPort: 8082
  selector:
    k8s-app: heapster
  type: NodePort
```

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: monitoring-grafana
  namespace: kube-system
spec:
  replicas: 1
  selector:
    matchLabels:
      task: monitoring
      k8s-app: grafana         # must match the Pod template labels below
  template:
    metadata:
      labels:
        task: monitoring
        k8s-app: grafana
    spec:
      containers:
      - name: grafana
        image: k8s.gcr.io/heapster-grafana-amd64:v5.0.4
        ports:
        - containerPort: 3000
          protocol: TCP
        volumeMounts:
        - mountPath: /etc/ssl/certs
          name: ca-certificates
          readOnly: true
        - mountPath: /var
          name: grafana-storage
        env:
        - name: INFLUXDB_HOST
          value: monitoring-influxdb
        - name: GF_SERVER_HTTP_PORT
          value: "3000"
        # The following env variables are required to make Grafana accessible via
        # the kubernetes api-server proxy. On production clusters, we recommend
        # removing these env variables, setting up auth for grafana, and exposing the grafana
        # service using a LoadBalancer or a public IP.
        - name: GF_AUTH_BASIC_ENABLED
          value: "false"
        - name: GF_AUTH_ANONYMOUS_ORG_ROLE
          value: Admin
        - name: GF_AUTH_ANONYMOUS_ENABLED
          value: "true"
        - name: GF_SERVER_ROOT_URL
          # If you're only using the API Server proxy, set this value instead:
          # value: /api/v1/namespaces/kube-system/services/monitoring-grafana/proxy
          value: /
      volumes:
      - name: ca-certificates
        hostPath:
          path: /etc/ssl/certs
      - name: grafana-storage
        emptyDir: {}
---
apiVersion: v1
kind: Service
metadata:
  labels:
    # For use as a Cluster add-on (https://github.com/kubernetes/kubernetes/tree/master/cluster/addons)
    # If you are NOT using this as an addon, you should comment out this line.
    kubernetes.io/cluster-service: 'true'
    kubernetes.io/name: monitoring-grafana
  name: monitoring-grafana
  namespace: kube-system
spec:
  # In a production setup, we recommend accessing Grafana through an external LoadBalancer
  # or through a public IP.
  # type: LoadBalancer
  # You could also use NodePort to expose the service at a randomly generated port
  # type: NodePort
  ports:
  - port: 80
    targetPort: 3000
  selector:
    k8s-app: grafana
  type: NodePort
```

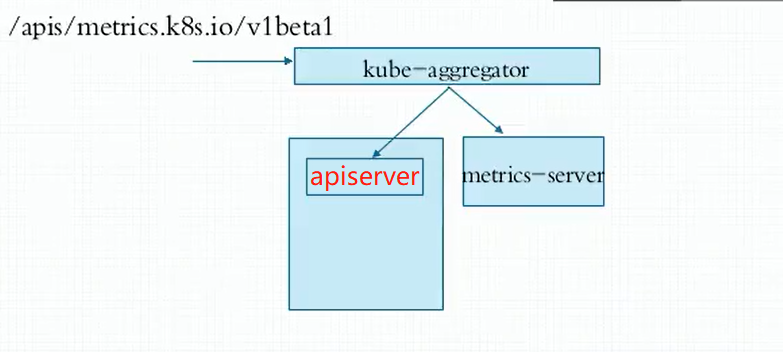

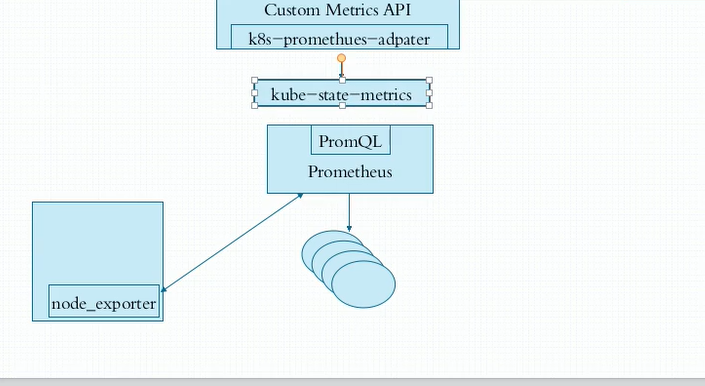

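Resource Metrics API and Custom Metrics API

Resource metrics: metrics-server

Custom metrics: Prometheus, k8s-prometheus-adapter

The new-generation architecture:

Core metrics pipeline: made up of the kubelet, metrics-server, and the API exposed through the API server. It covers cumulative CPU usage, Pod resource usage, and container disk usage.

Monitoring pipeline: collects all kinds of metrics from the system and provides them to end users, storage systems, and the HPA. It carries the core metrics plus many non-core metrics; Kubernetes itself cannot interpret the non-core metrics.

metrics-server: an API server that serves the resource metrics API. It is not the Kubernetes kube-apiserver; kube-apiserver forwards requests for metrics.k8s.io to it through the aggregation layer, using an APIService registration.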

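About the metrics-server images: Kubernetes ships a stable version of the metrics-server add-on among its core add-ons (under cluster/addons in the kubernetes repository). You can download the YAML files for your Kubernetes version from there and install them, or install using the files below. This walkthrough uses Kubernetes 1.22. The matching metrics-server add-on runs two containers with these images: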

k8s.gcr.io/metrics-server/metrics-server:v0.5.2

k8s.gcr.io/autoscaling/addon-resizer:1.8.14

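Copies of these images have been uploaded to a Docker Hub account (the Deployment below pulls v5cn/metrics-server:v0.5.2 instead of the k8s.gcr.io image).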

In add-on versions before this one, the metrics-server Deployment YAML did not define liveness or readiness probes. The metrics-server deployment YAML files for Kubernetes 1.22 follow:

[root@master01 addons_metrics-server]# cat auth-delegator.yaml

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: metrics-server:system:auth-delegator
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:auth-delegator
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
```

[root@master01 addons_metrics-server]# cat auth-reader.yaml

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: metrics-server-auth-reader
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: extension-apiserver-authentication-reader
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
```

[root@master01 addons_metrics-server]# cat metrics-apiserver.yaml

```yaml
apiVersion: apiregistration.k8s.io/v1
kind: APIService
metadata:
  name: v1beta1.metrics.k8s.io
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  service:
    name: metrics-server
    namespace: kube-system
  group: metrics.k8s.io
  version: v1beta1
  insecureSkipTLSVerify: true
  groupPriorityMinimum: 100
  versionPriority: 100
```

cat metrics-server-deployment.yaml

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: metrics-server
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: metrics-server-config
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: EnsureExists
data:
  NannyConfiguration: |-
    apiVersion: nannyconfig/v1alpha1
    kind: NannyConfiguration
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: metrics-server-v0.5.2
  namespace: kube-system
  labels:
    k8s-app: metrics-server
    addonmanager.kubernetes.io/mode: Reconcile
    version: v0.5.2
spec:
  selector:
    matchLabels:
      k8s-app: metrics-server
      version: v0.5.2
  template:
    metadata:
      name: metrics-server
      labels:
        k8s-app: metrics-server
        version: v0.5.2
    spec:
      securityContext:
        seccompProfile:
          type: RuntimeDefault
      priorityClassName: system-cluster-critical
      serviceAccountName: metrics-server
      nodeSelector:
        kubernetes.io/os: linux
      containers:
      - name: metrics-server
        #image: k8s.gcr.io/metrics-server/metrics-server:v0.5.2
        image: v5cn/metrics-server:v0.5.2
        command:
        - /metrics-server
        - --metric-resolution=30s
        - --kubelet-use-node-status-port
        - --kubelet-insecure-tls   # do not verify kubelet serving certificates (test clusters only)
        - --kubelet-preferred-address-types=InternalIP,Hostname,InternalDNS,ExternalDNS,ExternalIP
        - --cert-dir=/tmp
        - --secure-port=10250
        ports:
        - containerPort: 10250
          name: https
          protocol: TCP
        readinessProbe:
          httpGet:
            path: /readyz
            port: https
            scheme: HTTPS
          periodSeconds: 10
          failureThreshold: 3
        livenessProbe:
          httpGet:
            path: /livez
            port: https
            scheme: HTTPS
          periodSeconds: 10
          failureThreshold: 3
        volumeMounts:
        - mountPath: /tmp
          name: tmp-dir
      - name: metrics-server-nanny
        image: k8s.gcr.io/autoscaling/addon-resizer:1.8.14
        resources:
          limits:
            cpu: 100m
            memory: 300Mi
          requests:
            cpu: 5m
            memory: 50Mi
        env:
        - name: MY_POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: MY_POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: metrics-server-config-volume
          mountPath: /etc/config
        command:
        - /pod_nanny
        - --config-dir=/etc/config
        # - --cpu={{ base_metrics_server_cpu }}
        - --cpu=300m
        - --extra-cpu=20m   # upstream add-on value: 0.5m
        # - --memory={{ base_metrics_server_memory }}
        - --memory=200Mi
        # - --extra-memory={{ metrics_server_memory_per_node }}Mi
        - --extra-memory=10Mi
        - --threshold=5
        - --deployment=metrics-server-v0.5.2   # must equal metadata.name of this Deployment
        - --container=metrics-server
        - --poll-period=30000
        - --estimator=exponential
        # Specifies the smallest cluster (defined in number of nodes)
        # resources will be scaled to.
        #- --minClusterSize={{ metrics_server_min_cluster_size }}
        - --minClusterSize=2
        # Use kube-apiserver metrics to avoid periodically listing nodes.
        - --use-metrics=true
      volumes:
      - name: metrics-server-config-volume
        configMap:
          name: metrics-server-config
      - emptyDir: {}
        name: tmp-dir
      tolerations:
      - key: "CriticalAddonsOnly"
        operator: "Exists"
```
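The metrics-server-nanny container (addon-resizer) resizes metrics-server as the cluster grows. As far as I understand addon-resizer, both estimators compute a base amount plus a per-node amount times the node count. The exponential estimator selected here additionally rounds the node count up in geometric steps so the Deployment is not rewritten on every node change. The Python sketch below shows only the simpler linear form plus the --threshold check, using the --cpu/--extra-cpu and --memory/--extra-memory values above. The function names are hypothetical, and treating --minClusterSize as a floor on the node count is an assumption.

```python
# Hypothetical helpers approximating addon-resizer (pod_nanny) behavior.
# Units: CPU in millicores, memory in MiB.


def nanny_estimate(nodes, base, extra_per_node, min_cluster_size=2):
    """Linear estimate: base + extra_per_node * node count.

    Assumption: the node count is floored at min_cluster_size,
    mirroring --minClusterSize=2 in the Deployment.
    """
    counted_nodes = max(nodes, min_cluster_size)
    return base + extra_per_node * counted_nodes


def needs_update(current, expected, threshold_percent=5):
    """True when current deviates from expected by more than threshold_percent."""
    return abs(current - expected) * 100 > expected * threshold_percent


if __name__ == "__main__":
    for n in (1, 3, 10):
        cpu = nanny_estimate(n, base=300, extra_per_node=20)   # --cpu=300m, --extra-cpu=20m
        mem = nanny_estimate(n, base=200, extra_per_node=10)   # --memory=200Mi, --extra-memory=10Mi
        print(f"{n} nodes -> cpu {cpu}m, memory {mem}Mi")
```

With 3 nodes this gives 360m CPU and 230Mi memory. A container still running with 300m would be more than 5% off, so the nanny would update it.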

[root@master01 addons_metrics-server]# cat metrics-server-service.yaml

```yaml
apiVersion: v1
kind: Service
metadata:
  name: metrics-server
  namespace: kube-system
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/cluster-service: "true"
    kubernetes.io/name: "Metrics-server"
spec:
  selector:
    k8s-app: metrics-server
  ports:
  - port: 443
    protocol: TCP
    targetPort: https
```

[root@master01 addons_metrics-server]# cat resource-reader.yaml

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: system:metrics-server
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
rules:
- apiGroups:
  - ""
  resources:
  - pods
  - nodes
  - nodes/stats
  - namespaces
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - "apps"
  resources:
  - deployments
  verbs:
  - get
  - list
  - update
  - watch
  - patch
- nonResourceURLs:
  - /metrics
  verbs:
  - get
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: system:metrics-server
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:metrics-server
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
```

kubectl apply -f ./ # deploy metrics-server

kubectl get po -n kube-system

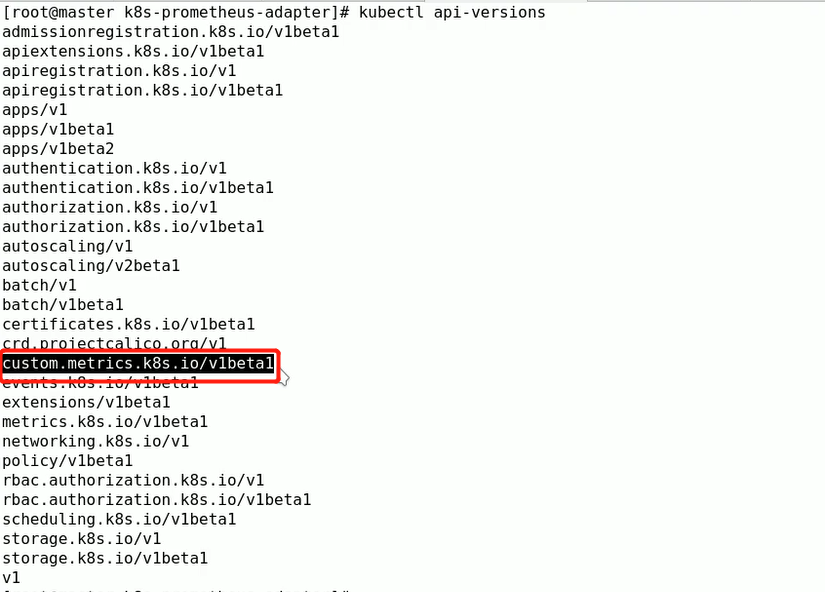

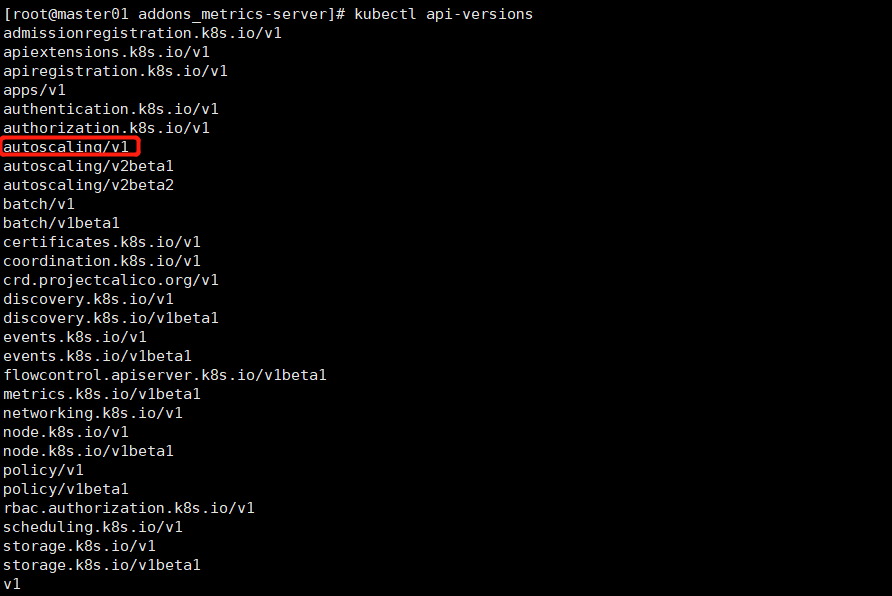

kubectl api-versions # after deploying metrics-server, a new API appears: metrics.k8s.io/v1beta1

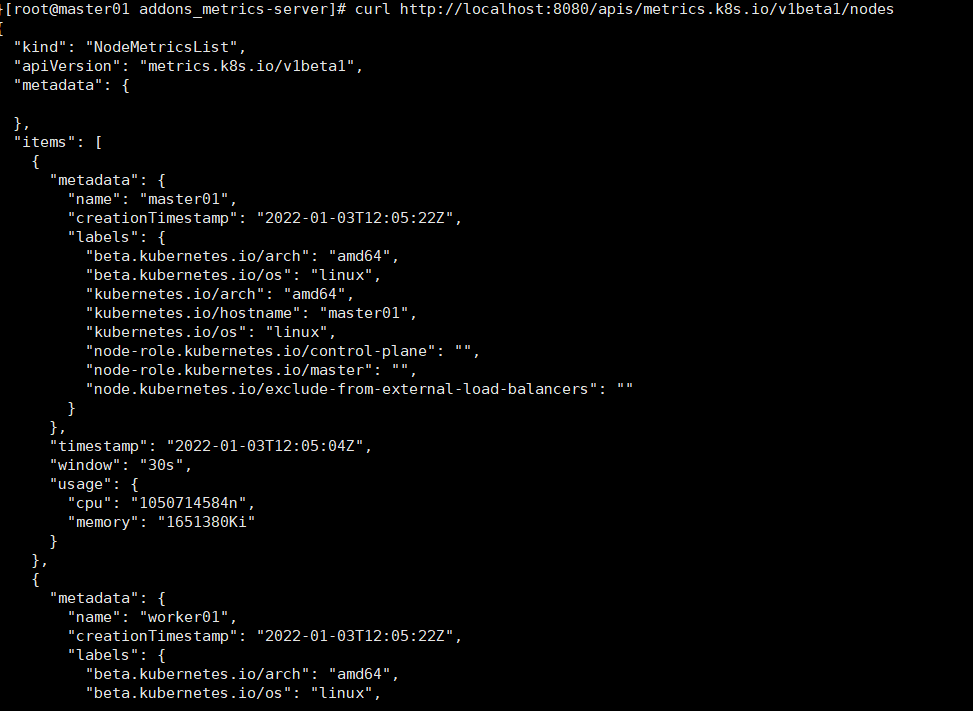

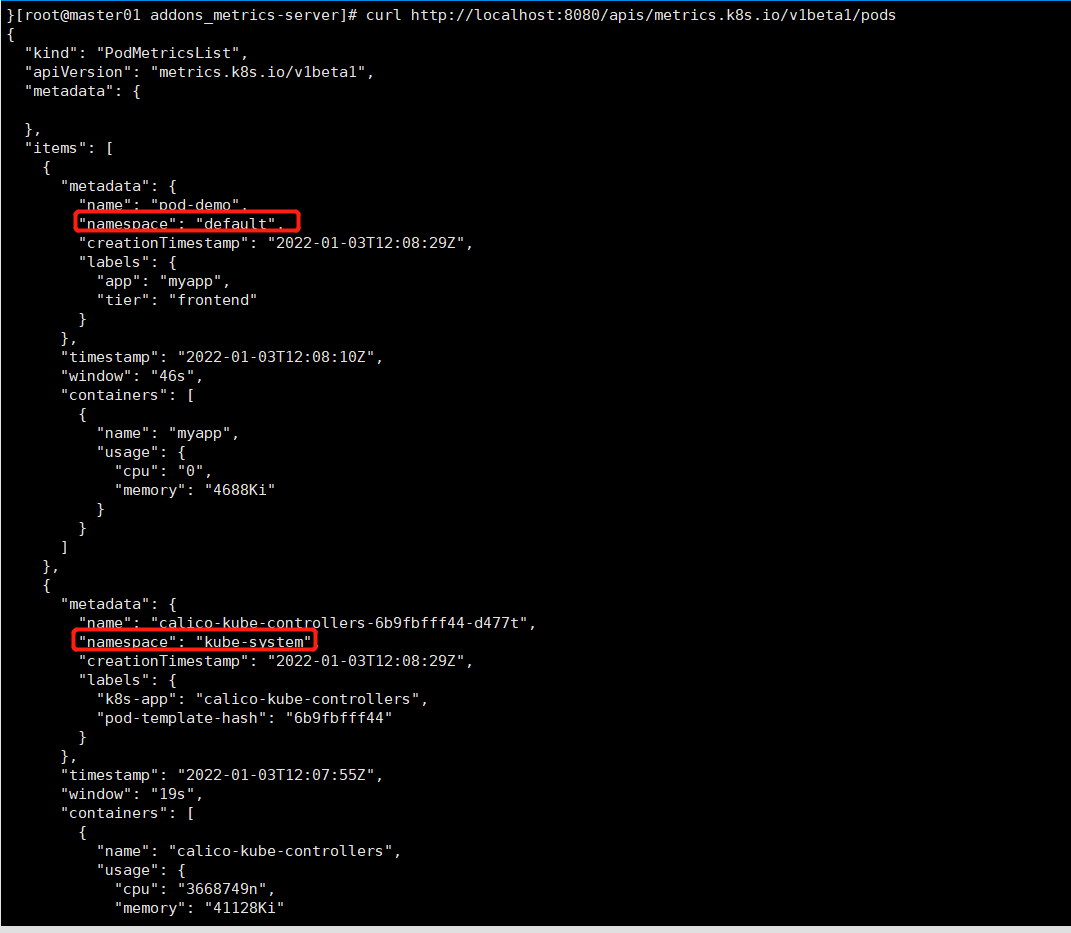

Open a local proxy to kube-apiserver and fetch the API data with curl:

kubectl proxy --port=8080

curl http://localhost:8080/apis/metrics.k8s.io/v1beta1

```json
{
  "kind": "APIResourceList",
  "apiVersion": "v1",
  "groupVersion": "metrics.k8s.io/v1beta1",
  "resources": [
    {
      "name": "nodes",
      "singularName": "",
      "namespaced": false,
      "kind": "NodeMetrics",
      "verbs": [
        "get",
        "list"
      ]
    },
    {
      "name": "pods",
      "singularName": "",
      "namespaced": true,
      "kind": "PodMetrics",
      "verbs": [
        "get",
        "list"
      ]
    }
  ]
}
```

curl http://localhost:8080/apis/metrics.k8s.io/v1beta1/nodes

curl http://localhost:8080/apis/metrics.k8s.io/v1beta1/pods

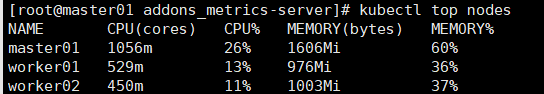

kubectl top nodes

kubectl top pods -n kube-system
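kubectl top reads the same metrics.k8s.io API. As a rough illustration, this Python sketch turns a NodeMetricsList payload (the kind of response /apis/metrics.k8s.io/v1beta1/nodes returns) into the CPU (millicores) and memory (MiB) figures that kubectl top nodes prints. Some caveats:

- The sample payload is made up.
- The parsers handle only the suffixes it contains: n, u, m for CPU and Ki, Mi, Gi for memory.
- kubectl top also shows percentages against each node's allocatable resources, which the sketch skips.

```python
import json

# Hypothetical sample shaped like a NodeMetricsList response (values are made up).
SAMPLE = '''
{"kind": "NodeMetricsList", "apiVersion": "metrics.k8s.io/v1beta1",
 "items": [
  {"metadata": {"name": "master01"}, "window": "30s",
   "usage": {"cpu": "250000000n", "memory": "1048576Ki"}},
  {"metadata": {"name": "node1"}, "window": "30s",
   "usage": {"cpu": "1500m", "memory": "2Gi"}}
 ]}
'''

CPU_UNITS = {"n": 1e-9, "u": 1e-6, "m": 1e-3}           # nano-, micro-, millicores
MEM_UNITS = {"Ki": 1024, "Mi": 1024**2, "Gi": 1024**3}


def cpu_cores(q):
    """'250000000n' -> 0.25 cores; a bare number is already in cores."""
    if q[-1] in CPU_UNITS:
        return float(q[:-1]) * CPU_UNITS[q[-1]]
    return float(q)


def mem_bytes(q):
    """'1048576Ki' -> bytes; a bare number is already in bytes."""
    if q[-2:] in MEM_UNITS:
        return int(q[:-2]) * MEM_UNITS[q[-2:]]
    return int(q)


def node_usage(payload):
    """Return {node: (cpu_millicores, memory_MiB)} from a NodeMetricsList JSON string."""
    result = {}
    for item in json.loads(payload)["items"]:
        usage = item["usage"]
        result[item["metadata"]["name"]] = (
            round(cpu_cores(usage["cpu"]) * 1000),
            mem_bytes(usage["memory"]) // 1024**2,
        )
    return result


if __name__ == "__main__":
    for node, (cpu_m, mem_mi) in node_usage(SAMPLE).items():
        print(f"{node}\t{cpu_m}m\t{mem_mi}Mi")
```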

Deploying k8s-prometheus monitoring

node-exporter

cat node-exporter-ds.yaml

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: prom
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: prometheus-node-exporter
  namespace: prom
  labels:
    app: prometheus
    component: node-exporter
spec:
  selector:
    matchLabels:
      app: prometheus
      component: node-exporter
  template:
    metadata:
      labels:                    # must match spec.selector and the Service selector
        app: prometheus
        component: node-exporter
    spec:
      hostNetwork: true
      hostPID: true
      tolerations:
      - key: node-role.kubernetes.io/master
        operator: "Exists"
        effect: NoSchedule
      containers:
      - image: prom/node-exporter:v0.16.0
        name: prometheus-node-exporter
        ports:
        - name: prom-node-exp
          containerPort: 9100
          hostPort: 9100
        resources:
          requests:
            cpu: 0.15
        securityContext:
          privileged: true
        args:
        - --path.procfs
        - /host/proc
        - --path.sysfs
        - /host/sys
        - --collector.filesystem.ignored-mount-points
        - '^/(sys|proc|dev|host|etc)($|/)'   # no inner double quotes: they would become part of the regex
        volumeMounts:
        - name: dev
          mountPath: /host/dev
        - name: proc
          mountPath: /host/proc
        - name: sys
          mountPath: /host/sys
        - name: rootfs
          mountPath: /rootfs
      volumes:
      - name: proc
        hostPath:
          path: /proc
      - name: dev
        hostPath:
          path: /dev
      - name: sys
        hostPath:
          path: /sys
      - name: rootfs
        hostPath:
          path: /
---
apiVersion: v1
kind: Service
metadata:
  name: prometheus-node-exporter
  namespace: prom
spec:
  ports:
  - port: 9100
    targetPort: 9100
  type: NodePort
  selector:
    app: prometheus
    component: node-exporter
```
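node-exporter skips any mount point that matches the --collector.filesystem.ignored-mount-points regular expression. The match is unanchored, and only the leading ^ pins it to the start. The Python sketch below uses Python's re as a stand-in for Go's regexp; the two agree on this simple pattern. It shows which mount points are skipped, and why wrapping the pattern in an extra pair of double quotes ('"^/(...)"') breaks it: the literal quote in front of ^ makes the anchor impossible to satisfy, so nothing is ever ignored.

```python
import re

# The intended pattern.
IGNORED = re.compile(r"^/(sys|proc|dev|host|etc)($|/)")
# The same pattern with literal double quotes kept by a '"..."' YAML value.
QUOTED = re.compile(r'"^/(sys|proc|dev|host|etc)($|/)"')


def ignored(mount_point, pattern=IGNORED):
    """True if node-exporter would skip this mount point (unanchored search, like Go's MatchString)."""
    return pattern.search(mount_point) is not None


if __name__ == "__main__":
    for mp in ("/", "/proc", "/sys/fs/cgroup", "/data", "/system"):
        print(mp, ignored(mp), ignored(mp, QUOTED))
```

The ($|/) group is what keeps /system from being treated like /sys.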

[root@master node_exporter]# kubectl apply -f ./

prometheus

cd /root/manifests/k8s-prom/prometheus && ls

prometheus-cfg.yaml prometheus-deploy.yaml prometheus-rbac.yaml prometheus-svc.yaml

cat prometheus-rbac.yaml

```yaml
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: prometheus
rules:
- apiGroups: [""]
  resources:
  - nodes
  - nodes/proxy
  - services
  - endpoints
  - pods
  verbs: ["get", "list", "watch"]
- apiGroups:
  - extensions
  - networking.k8s.io   # Ingress is served only from this group in Kubernetes 1.22
  resources:
  - ingresses
  verbs: ["get", "list", "watch"]
- nonResourceURLs: ["/metrics"]
  verbs: ["get"]
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: prometheus
  namespace: prom
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: prometheus
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: prometheus
subjects:
- kind: ServiceAccount
  name: prometheus
  namespace: prom
```

cat prometheus-deploy.yaml

```yaml
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: prometheus-server
  namespace: prom
  labels:
    app: prometheus
spec:
  replicas: 1
  selector:
    matchLabels:
      app: prometheus
      component: server
    #matchExpressions:
    #- {key: app, operator: In, values: [prometheus]}
    #- {key: component, operator: In, values: [server]}
  template:
    metadata:
      labels:
        app: prometheus
        component: server
      annotations:
        prometheus.io/scrape: 'false'
    spec:
      nodeName: node1            # pins the Pod to node1; change to a node in your cluster
      serviceAccountName: prometheus
      containers:
      - name: prometheus
        image: prom/prometheus:v2.2.1
        imagePullPolicy: IfNotPresent
        command:
        - prometheus
        - --config.file=/etc/prometheus/prometheus.yml
        - --storage.tsdb.path=/prometheus
        - --storage.tsdb.retention=720h   # keep 30 days of data
        ports:
        - containerPort: 9090
          protocol: TCP
        resources:
          limits:
            memory: 2Gi
        volumeMounts:
        - mountPath: /etc/prometheus/prometheus.yml
          name: prometheus-config
          subPath: prometheus.yml
        - mountPath: /prometheus/
          name: prometheus-storage-volume
      volumes:
      - name: prometheus-config
        configMap:
          name: prometheus-config
          items:
          - key: prometheus.yml
            path: prometheus.yml
            mode: 0644
      - name: prometheus-storage-volume
        hostPath:
          path: /data
          type: Directory        # /data must already exist on the node
```

kube-state-metrics

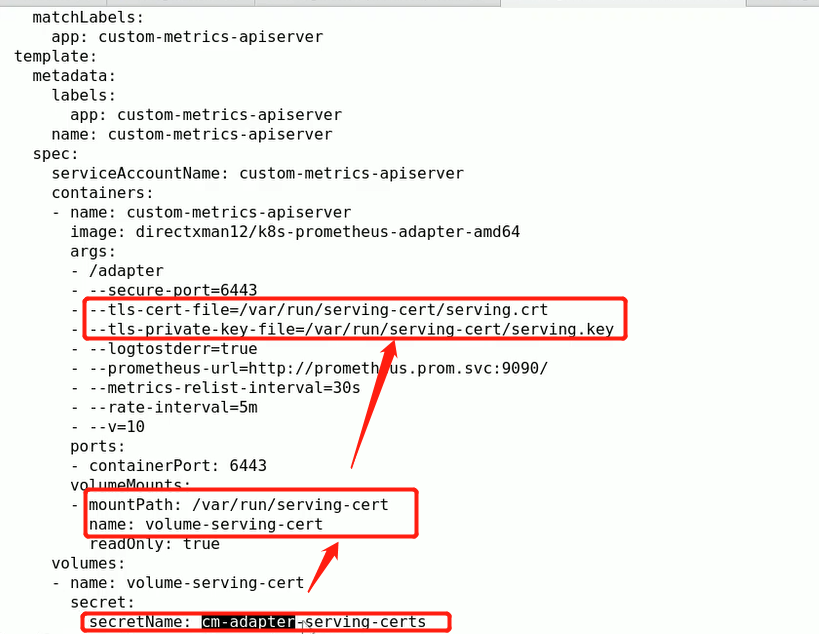

k8s-prom-adapter

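Download the k8s-prom-adapter deployment manifests and the k8s-prom-adapter container image. The adapter serves over HTTPS, so first create a serving certificate signed by the CA in ./ca.crt and ./ca.key (on a kubeadm cluster these are the files in /etc/kubernetes/pki), and store it in a Secret in the prom namespace: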

(umask 077; openssl genrsa -out serving.key 2048)

openssl req -new -key serving.key -out serving.csr -subj "/CN=serving"

openssl x509 -req -in serving.csr -CA ./ca.crt -CAkey ./ca.key -CAcreateserial -out serving.crt -days 3650

kubectl create secret generic cm-adapter-serving-certs --from-file=serving.crt=./serving.crt --from-file=serving.key=serving.key -n prom

cd /root/manifests/k8s-prom/prometheus && ls

custom-metrics-apiserver-auth-delegator-cluster-role-binding.yaml

custom-metrics-apiserver-auth-reader-role-binding.yaml

custom-metrics-apiserver-deployment.yaml

custom-metrics-apiserver-resource-reader-cluster-role-binding.yaml

custom-metrics-apiserver-service-account.yaml

custom-metrics-apiserver-service.yaml

custom-metrics-apiservice.yaml

custom-metrics-cluster-role.yaml

custom-metrics-config-map.yaml

custom-metrics-resource-reader-cluster-role.yaml

hpa-custom-metrics-cluster-role-binding.yaml

cat custom-metrics-apiserver-deployment.yaml

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: custom-metrics-apiserver
  name: custom-metrics-apiserver
  namespace: prom
spec:
  replicas: 1
  selector:
    matchLabels:
      app: custom-metrics-apiserver
  template:
    metadata:
      labels:
        app: custom-metrics-apiserver
      name: custom-metrics-apiserver
    spec:
      serviceAccountName: custom-metrics-apiserver
      containers:
      - name: custom-metrics-apiserver
        image: directxman12/k8s-prometheus-adapter-amd64
        args:
        - --secure-port=6443
        - --tls-cert-file=/var/run/serving-cert/serving.crt
        - --tls-private-key-file=/var/run/serving-cert/serving.key
        - --logtostderr=true
        - --prometheus-url=http://prometheus.prom.svc:9090/
        - --metrics-relist-interval=1m
        - --v=10                 # very verbose logging; lower it once everything works
        - --config=/etc/adapter/config.yaml
        ports:
        - containerPort: 6443
        volumeMounts:
        - mountPath: /var/run/serving-cert
          name: volume-serving-cert
          readOnly: true
        - mountPath: /etc/adapter/
          name: config
          readOnly: true
        - mountPath: /tmp
          name: tmp-vol
      volumes:
      - name: volume-serving-cert
        secret:
          secretName: cm-adapter-serving-certs   # the Secret created with kubectl create secret above
      - name: config
        configMap:
          name: adapter-config
      - name: tmp-vol
        emptyDir: {}
```

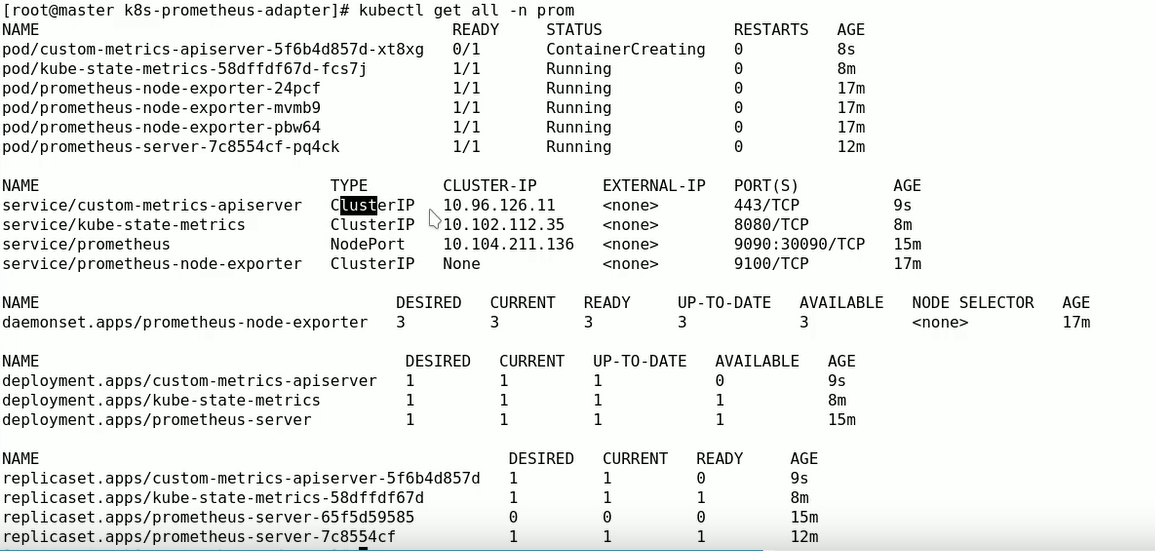

kubectl apply -f ./

kubectl get all -n prom

kubectl api-versions

kubectl proxy --port=8080

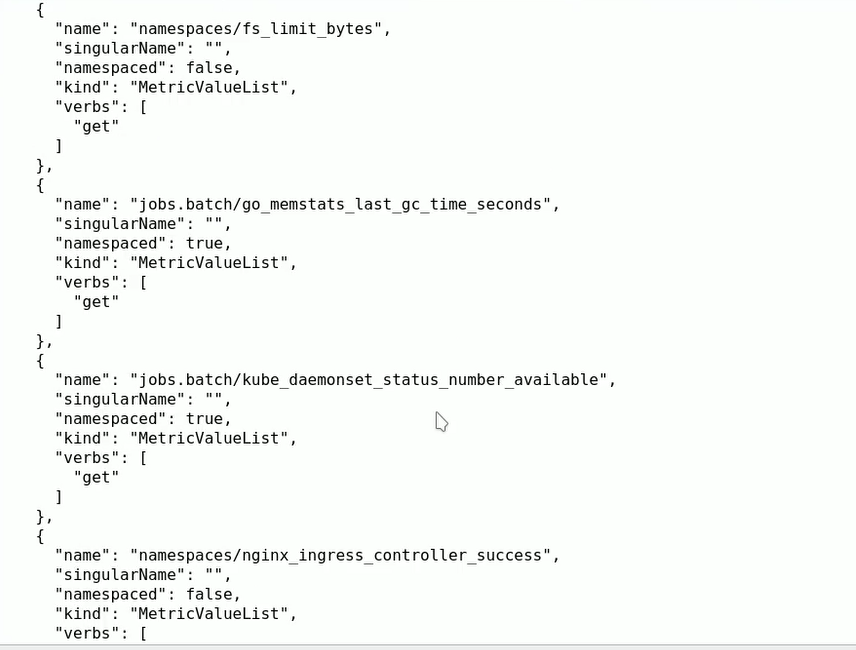

curl http://localhost:8080/apis/custom.metrics.k8s.io/v1beta1/

HPA (HorizontalPodAutoscaler)

kubectl api-versions

kubectl explain hpa

```
apiVersion    <string>
kind          <string>
metadata      <Object>
spec          <Object>
   maxReplicas                      <integer> -required-
   minReplicas                      <integer>
   targetCPUUtilizationPercentage   <integer>
   scaleTargetRef                   <Object> -required-
      apiVersion   <string>
      kind         <string> -required-
      name         <string> -required-
         http://kubernetes.io/docs/user-guide/identifiers#names
```

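Create a standalone test Pod

kubectl run myapp --image=nginx:1.20 --replicas=1 --requests='cpu=50m,memory=256Mi' --limits='cpu=50m,memory=256Mi' --labels='app=myapp' --expose --port=80

(In kubectl 1.18 and later, kubectl run creates only a single Pod and --replicas is no longer supported, so the same application is defined declaratively with the Deployment below.)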

[root@master01 controll]# cat pod-deploy-autoscaling.yaml

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: myapp-deploy-autoscaling
  namespace: default
spec:
  replicas: 1
  selector:
    matchLabels:
      app: myapp
  template:
    metadata:
      name: myapp-deploy-controll
      labels:
        app: myapp
    spec:
      containers:
      - name: myapp
        image: nginx:1.20.2
        ports:
        - name: http
          containerPort: 80
        resources:             # requests == limits for CPU and memory: Guaranteed QoS
          requests:
            cpu: 50m
            memory: 256Mi
          limits:
            cpu: 50m
            memory: 256Mi
---
apiVersion: v1
kind: Service
metadata:
  name: myapp-deploy-autoscaling
spec:
  ports:
  - port: 80
    targetPort: 80
    name: myapp-deploy-autoscaling
    protocol: TCP
  type: NodePort
  selector:
    app: myapp
```

kubectl apply -f pod-deploy-autoscaling.yaml

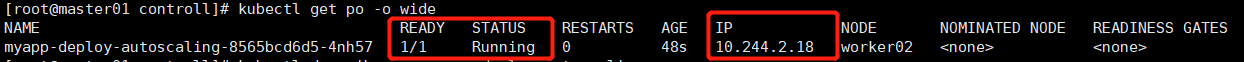

kubectl get po -o wide

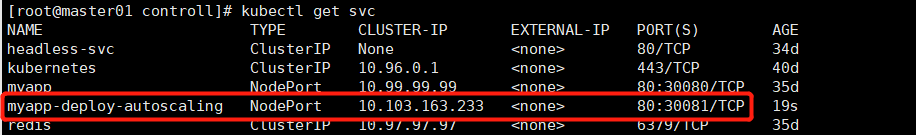

kubectl get svc -o wide

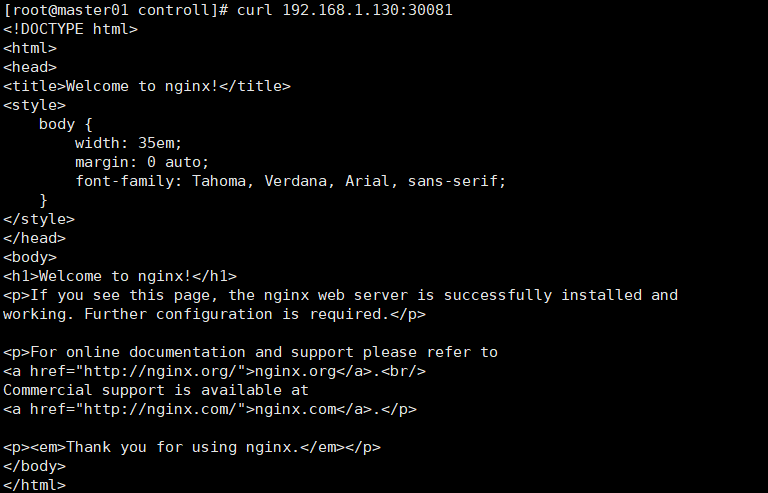

curl 10.103.163.233:80 # via the Service's ClusterIP

curl 192.168.1.130:30081 # via a node IP and the NodePort assigned in this environment

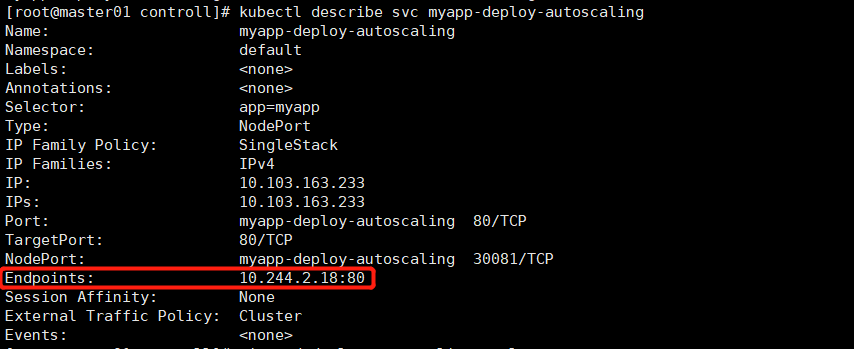

kubectl describe svc myapp-deploy-autoscaling

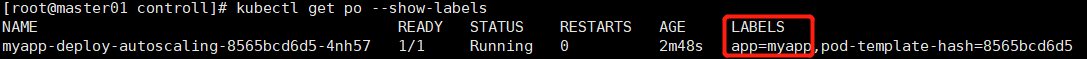

kubectl get po --show-labels

kubectl describe po myapp-deploy-autoscaling-8565bcd6d5-4nh57

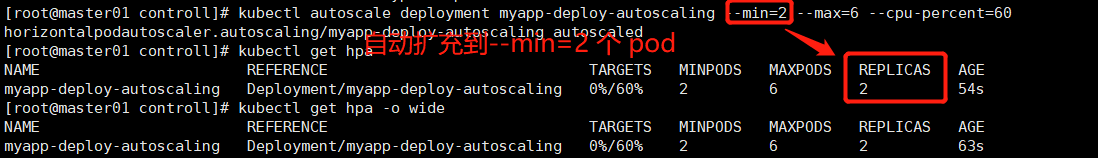

Create an HPA v1 controller

kubectl autoscale creates an autoscaling/v1 HPA by default.

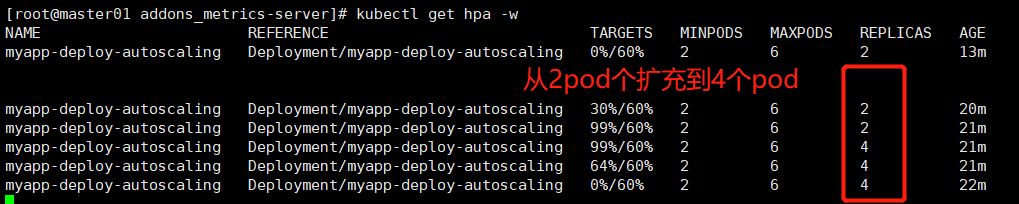

kubectl autoscale deployment myapp-deploy-autoscaling --min=2 --max=6 --cpu-percent=60 # scale out when the average CPU utilization of the Pods managed by this Deployment, measured against their CPU requests, exceeds 60%
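The HPA controller's documented scaling rule is desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue). The Python sketch below applies it to the kubectl autoscale settings above. It leaves out parts of the real controller, such as ignoring not-yet-ready Pods and the scale-down stabilization window. The 0.1 tolerance is the controller's default (--horizontal-pod-autoscaler-tolerance), and the function name is hypothetical.

```python
import math


def desired_replicas(current_replicas, current_utilization, target_utilization,
                     min_replicas, max_replicas, tolerance=0.1):
    """Sketch of the HPA rule:
    desired = ceil(current_replicas * current_utilization / target_utilization),
    skipped when the ratio is within the tolerance, then clamped to [min, max].
    """
    ratio = current_utilization / target_utilization
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas          # close enough to the target: keep the size
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # --min=2 --max=6 --cpu-percent=60
    for pods, util in ((2, 120), (2, 63), (5, 300), (2, 10)):
        print(pods, util, desired_replicas(pods, util, 60, 2, 6))
```

Consider two Pods averaging 120% of their 50m request. The ratio is 2, so the HPA asks for 4 Pods. Five Pods at 300% would ask for 25 but are capped at --max=6.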

kubectl get hpa

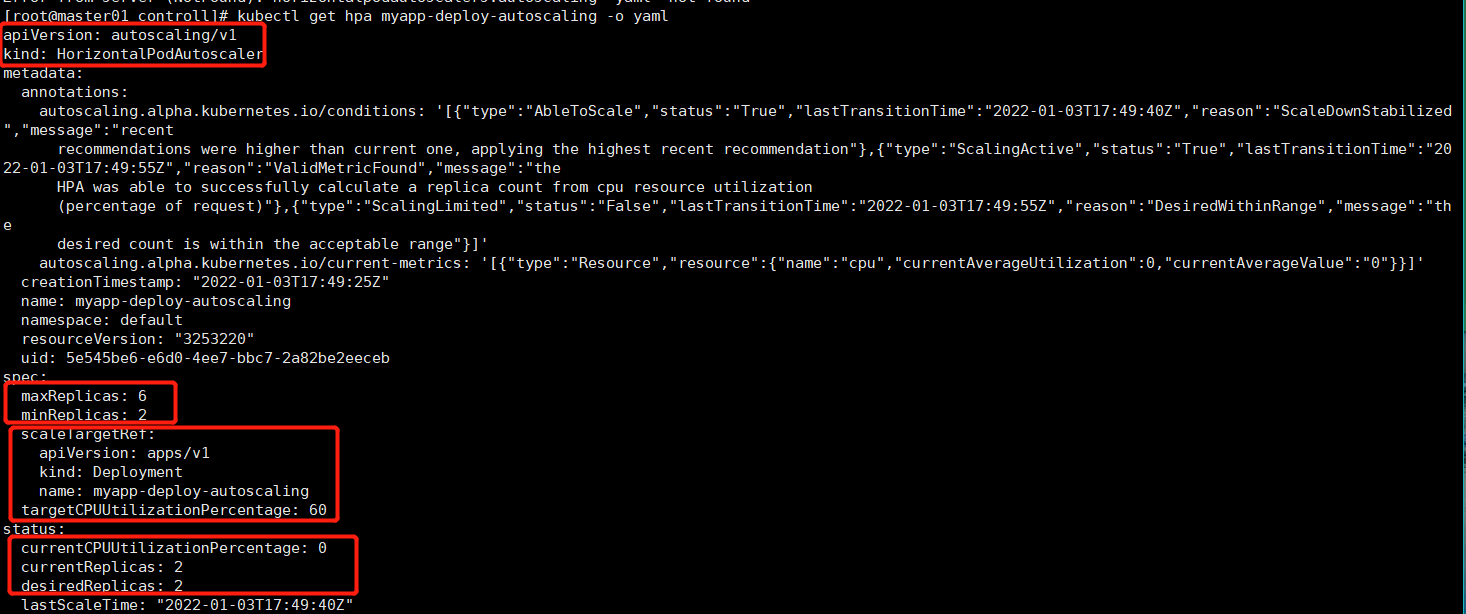

kubectl get hpa myapp-deploy-autoscaling -o yaml

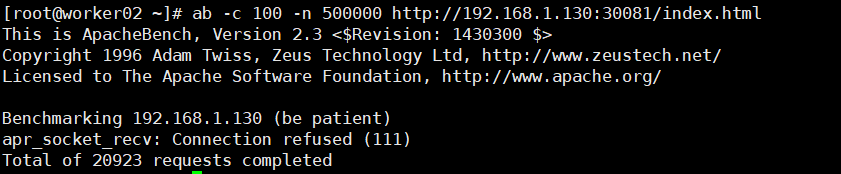

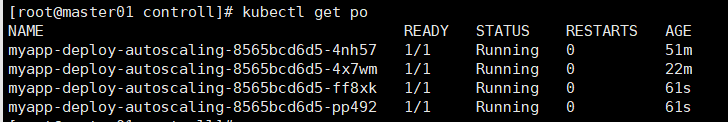

Now use the ab (ApacheBench) tool to load-test the Pods behind the myapp-deploy-autoscaling Service, and watch the Pods scale out and back in automatically:

kubectl get hpa -w

ab -c 100 -n 500000 http://192.168.1.130:30081/index.html

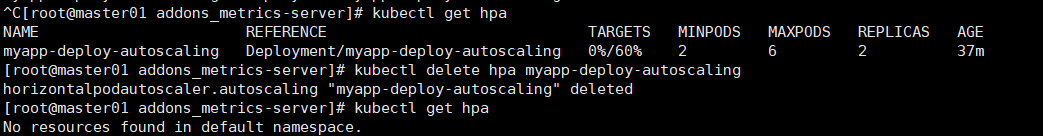

Create an HPA v2 controller

Delete the existing HPA first:

kubectl delete hpa myapp-deploy-autoscaling

The v2 controller can evaluate more kinds of metrics when deciding whether to scale.

cat hpa-v2-demo.yaml

```yaml
apiVersion: autoscaling/v2beta1   # no longer served from Kubernetes 1.25; use autoscaling/v2 there
kind: HorizontalPodAutoscaler
metadata:
  name: myapp-hpa-v2
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: myapp
  minReplicas: 1
  maxReplicas: 10
  metrics:
  - type: Resource
    resource:
      name: cpu
      targetAverageUtilization: 55   # percent of the Pods' CPU request
  - type: Resource
    resource:
      name: memory
      targetAverageValue: 50Mi       # scale out when average Pod memory use exceeds 50Mi
```
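With several metrics, the HPA computes a desired replica count for each metric and uses the largest. The Python sketch below shows that for the two metrics above. For a utilization target it computes ceil(replicas * averageUtilization / target). For an average-value target, currentReplicas * (average / target) reduces to ceil(total / target). The function names and sample usage figures are hypothetical, and the tolerance and readiness handling of the real controller are left out.

```python
import math


def replicas_for_utilization(pod_usage_m, pod_request_m, target_percent):
    """CPU: average utilization as a percentage of the per-Pod request."""
    utilization = 100 * sum(pod_usage_m) / (pod_request_m * len(pod_usage_m))
    return math.ceil(len(pod_usage_m) * utilization / target_percent)


def replicas_for_average_value(pod_values, target_average):
    """Memory: currentReplicas * (average / target) == total / target."""
    return math.ceil(sum(pod_values) / target_average)


def desired_replicas(pod_cpu_m, cpu_request_m, cpu_target_percent,
                     pod_memory_mi, memory_target_mi, min_replicas, max_replicas):
    proposals = [
        replicas_for_utilization(pod_cpu_m, cpu_request_m, cpu_target_percent),
        replicas_for_average_value(pod_memory_mi, memory_target_mi),
    ]
    # The largest proposal wins, then the result is clamped to [min, max].
    return max(min_replicas, min(max_replicas, max(proposals)))


if __name__ == "__main__":
    # Two Pods using 40m of their 50m request, and 120Mi of memory each.
    print(desired_replicas([40, 40], 50, 55, [120, 120], 50, 1, 10))
```

Here CPU alone would ask for 3 Pods (80% against a 55% target). Memory asks for ceil(240 / 50) = 5, so memory drives the decision.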

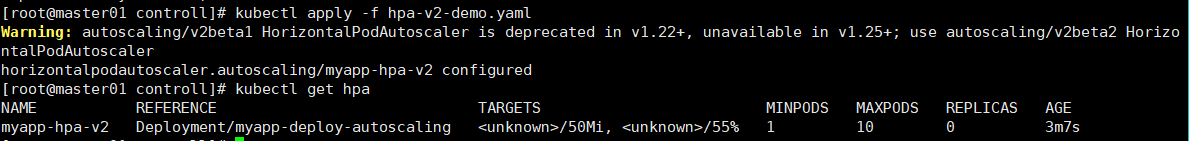

kubectl apply -f hpa-v2-demo.yaml

kubectl get hpa

Now use the ab (ApacheBench) tool to load-test the Pods behind the myapp-deploy-autoscaling Service, and watch the Pods scale out and back in automatically:

kubectl get hpa -w

ab -c 100 -n 500000 http://192.168.1.130:30081/index.html

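Custom HPA metrics

Scale Pods based on a metric exposed by the Pods themselves (here http_requests, served through the custom metrics API by the Prometheus adapter).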

cat hpa-v2-custom.yaml

```yaml
apiVersion: autoscaling/v2beta1
kind: HorizontalPodAutoscaler
metadata:
  name: myapp-hpa-v2
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: myapp
  minReplicas: 1
  maxReplicas: 10
  metrics:
  - type: Pods
    pods:
      metricName: http_requests   # served by the custom metrics API (Prometheus adapter)
      targetAverageValue: 800m    # 800m = 0.8 per Pod on average
```
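A target of 800m is the quantity 0.8. Suppose http_requests is the per-second rate the Prometheus adapter derives from an http_requests_total counter; that is the adapter's usual naming convention, but check the rules in custom-metrics-config-map.yaml. Under that assumption, the HPA aims for an average of 0.8 requests per second per Pod. The Python sketch below is a simplified illustration with hypothetical function names and counter samples. It turns counter samples into per-second rates and applies the Pods-metric rule ceil(sum / target).

```python
import math


def per_second_rate(count_start, count_end, seconds):
    """Approximate a per-second rate from two samples of an increasing counter.

    Simplification: no counter-reset handling and no extrapolation,
    both of which Prometheus' rate() performs.
    """
    return (count_end - count_start) / seconds


def replicas_for_pods_metric(per_pod_values, target_average):
    """ceil(sum of per-Pod values / target average), as for an HPA Pods metric."""
    return math.ceil(sum(per_pod_values) / target_average)


if __name__ == "__main__":
    # Hypothetical counter samples for two Pods, taken 120 seconds apart.
    rates = [per_second_rate(0, 120, 120), per_second_rate(1000, 1240, 120)]
    print(rates, replicas_for_pods_metric(rates, 0.8))
```

Two Pods serving 1 and 2 requests per second (3 in total) against a 0.8 target ask for ceil(3 / 0.8) = 4 replicas.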