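K8S Cluster Setup

Summary

These notes combine several articles found online with my own understanding. Components and steps that seemed redundant in those articles have been dropped, with the aim of keeping the deployment as simple as possible.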

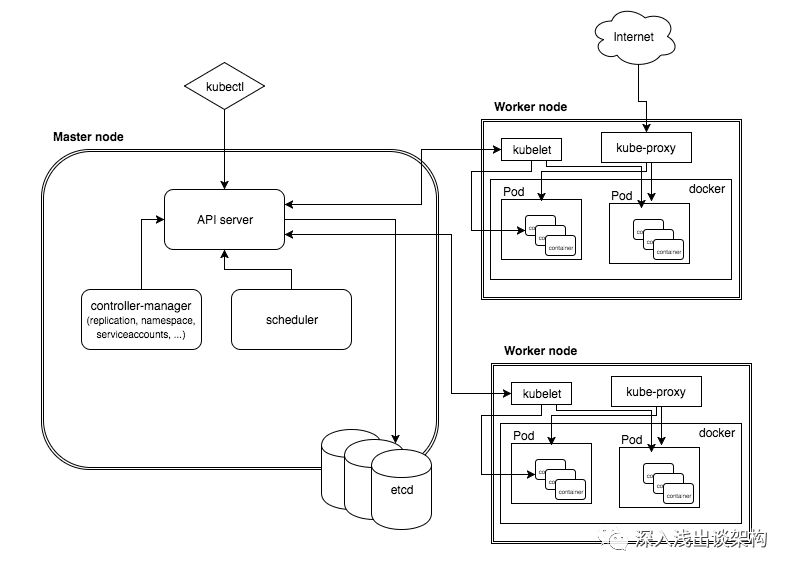

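K8S Components

Kubernetes has two node roles: master nodes and minion nodes.

- The master node exposes the API used to manage the cluster, and carries out cluster operations by talking to the minion nodes.

- Minion nodes are where Docker containers actually run. Each one talks to the local Docker daemon and also provides proxying.

Master node components

apiserver: the entry point through which users interact with the Kubernetes cluster. It wraps create, read, update, and delete operations on the core objects, exposes a RESTful API, and uses etcd for persistence and to keep objects consistent.

scheduler: handles scheduling and management of cluster resources. For example, when a pod exits abnormally and has to be placed again, the scheduler applies its scheduling algorithm to pick the most suitable node.

controller-manager: mainly ensures that the number of running pods matches the replica count defined by a replicationController. It also keeps the mapping from services to pods up to date.

etcd: a key-value store that holds the Kubernetes cluster state.

Minion node components

kubelet: runs on each minion node and talks to the local Docker daemon, for example to start and stop containers and monitor their state.

proxy: runs on each minion node and proxies traffic for pods. It obtains service information from the apiserver (which persists it in etcd) and rewrites iptables rules accordingly, so that traffic is forwarded to the node hosting the target pod. (The earliest versions forwarded traffic inside the proxy process itself, which was less efficient.)

flannel: an overlay network tool designed by the CoreOS team for Kubernetes; it has to be downloaded and deployed separately. When Docker starts, it creates an IP address used to talk to its containers. Left unmanaged, that address may be identical on every machine, and containers can only communicate within their own host and cannot reach Docker containers on other machines. Flannel re-plans IP address allocation for all nodes in the cluster, so that containers on different nodes get unique addresses on one shared internal network and can talk to each other directly over those addresses.

Architecture diagram

Prerequisites

OS: CentOS 7.5.1804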

| IP | hostname | Services |
|---|---|---|
| 192.168.44.201 | node1 | kube-apiserver, kube-scheduler, kube-controller-manager, etcd |
| 192.168.44.202 | node2 | kubelet, kube-proxy, flanneld, docker |
| 192.168.44.203 | node3 | kubelet, kube-proxy, flanneld, docker |

Installation

Master node

yum install kubernetes-master etcd -y

Minion nodes

yum install kubernetes-node flannel -y

Configuration

Master node

Edit /etc/etcd/etcd.conf

[root@node1 ~]# vim /etc/etcd/etcd.conf

#[Member]

#ETCD_CORS=""

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

#ETCD_WAL_DIR=""

ETCD_LISTEN_PEER_URLS="http://192.168.44.201:2380"

ETCD_LISTEN_CLIENT_URLS="http://192.168.44.201:2379,http://127.0.0.1:2379"

#ETCD_MAX_SNAPSHOTS="5"

#ETCD_MAX_WALS="5"

ETCD_NAME="default"

#ETCD_SNAPSHOT_COUNT="100000"

#ETCD_HEARTBEAT_INTERVAL="100"

#ETCD_ELECTION_TIMEOUT="1000"

#ETCD_QUOTA_BACKEND_BYTES="0"

#ETCD_MAX_REQUEST_BYTES="1572864"

#ETCD_GRPC_KEEPALIVE_MIN_TIME="5s"

#ETCD_GRPC_KEEPALIVE_INTERVAL="2h0m0s"

#ETCD_GRPC_KEEPALIVE_TIMEOUT="20s"

#

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="http://192.168.44.201:2380"

ETCD_ADVERTISE_CLIENT_URLS="http://192.168.44.201:2379"

#ETCD_DISCOVERY=""

#ETCD_DISCOVERY_FALLBACK="proxy"

#ETCD_DISCOVERY_PROXY=""

#ETCD_DISCOVERY_SRV=""

ETCD_INITIAL_CLUSTER="default=http://192.168.44.201:2380"

....

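A brief explanation of the main settings, in particular the URLS ones:

[member]

ETCD_NAME: the name of this etcd node.

ETCD_DATA_DIR: the directory where etcd stores its data.

ETCD_SNAPSHOT_COUNT: the number of committed transactions that triggers a snapshot.

ETCD_HEARTBEAT_INTERVAL: the interval between heartbeats among etcd nodes, in milliseconds.

ETCD_ELECTION_TIMEOUT: the election timeout for this node, in milliseconds.

ETCD_LISTEN_PEER_URLS: the list of addresses this node listens on for traffic from other nodes, separated by commas. Each entry has the form scheme://IP:PORT, where scheme is http or https.

ETCD_LISTEN_CLIENT_URLS: the list of addresses this node listens on for client traffic.

[cluster]

ETCD_INITIAL_ADVERTISE_PEER_URLS: the list of peer addresses this member advertises to the rest of the cluster. Cluster data is transferred over these addresses, so they must be reachable from every member of the cluster.

ETCD_INITIAL_CLUSTER: the addresses of all members of the cluster, in the form ETCD_NAME=ETCD_INITIAL_ADVERTISE_PEER_URLS, separated by commas when there is more than one.

ETCD_ADVERTISE_CLIENT_URLS: the list of this member's client addresses advertised to the other members of the cluster.

Note: in this setup only the master node needs etcd configured, because the other nodes share the same etcd service.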
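The ETCD_INITIAL_CLUSTER format described above can be illustrated with a small shell helper. This is only a sketch: the function name `initial_cluster` is made up for illustration, and the second member in the usage comment (`etcd2` on 192.168.44.202) is hypothetical, since this article runs a single etcd member.

```shell
# Join "name=peer-url" arguments with commas, which is the
# format ETCD_INITIAL_CLUSTER expects. The body runs in a subshell
# so that changing IFS does not leak into the caller.
initial_cluster() (
    IFS=,
    printf '%s\n' "$*"
)

# Single member, as configured in this article:
#   initial_cluster default=http://192.168.44.201:2380
# Hypothetical two-member cluster:
#   initial_cluster default=http://192.168.44.201:2380 etcd2=http://192.168.44.202:2380
```

Each name on the left of `=` has to match that member's ETCD_NAME, and each URL has to match its ETCD_INITIAL_ADVERTISE_PEER_URLS.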

Edit /etc/kubernetes/apiserver

[root@node1 ~]# vim /etc/kubernetes/apiserver

###

# kubernetes system config

#

# The following values are used to configure the kube-apiserver

#

# The address on the local server to listen to.

#KUBE_API_ADDRESS="--insecure-bind-address=127.0.0.1"

# By default the API server's insecure port binds only to 127.0.0.1, which acts like a whitelist. Setting "--address=0.0.0.0" allows all nodes to reach it.

KUBE_API_ADDRESS="--address=0.0.0.0"

# The port on the local server to listen on.

KUBE_API_PORT="--port=8080"

# Port minions listen on

KUBELET_PORT="--kubelet-port=10250"

# Comma separated list of nodes in the etcd cluster

# The etcd service

KUBE_ETCD_SERVERS="--etcd-servers=http://192.168.44.201:2379"

# Address range to use for services

KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=10.1.0.0/16"

# default admission control policies

KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ServiceAccount,ResourceQuota"

# Add your own!

KUBE_API_ARGS="--service_account_key_file=/etc/kubernetes/serviceaccount.key"

Edit /etc/kubernetes/controller-manager

[root@node1 ~]# vim /etc/kubernetes/controller-manager

###

# The following values are used to configure the kubernetes controller-manager

# defaults from config and apiserver should be adequate

# Add your own!

KUBE_CONTROLLER_MANAGER_ARGS="--service_account_private_key_file=/etc/kubernetes/serviceaccount.key"

The following files were not modified during this setup, but they matter too:

/usr/lib/systemd/system/etcd.service

[root@node1 kubernetes]# cat /usr/lib/systemd/system/etcd.service

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=notify

WorkingDirectory=/var/lib/etcd/

EnvironmentFile=-/etc/etcd/etcd.conf

User=etcd

# set GOMAXPROCS to number of processors

ExecStart=/bin/bash -c "GOMAXPROCS=$(nproc) /usr/bin/etcd --name=\"${ETCD_NAME}\" --data-dir=\"${ETCD_DATA_DIR}\" --listen-client-urls=\"${ETCD_LISTEN_CLIENT_URLS}\""

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target


Minion nodes

Edit /etc/kubernetes/config

[root@node2 kubernetes]# vim /etc/kubernetes/config

###

# kubernetes system config

#

# The following values are used to configure various aspects of all

# kubernetes services, including

#

# kube-apiserver.service

# kube-controller-manager.service

# kube-scheduler.service

# kubelet.service

# kube-proxy.service

# logging to stderr means we get it in the systemd journal

KUBE_LOGTOSTDERR="--logtostderr=true"

# journal message level, 0 is debug

KUBE_LOG_LEVEL="--v=0"

# Should this cluster be allowed to run privileged docker containers

KUBE_ALLOW_PRIV="--allow-privileged=false"

# How the controller-manager, scheduler, and proxy find the apiserver

KUBE_MASTER="--master=http://192.168.44.201:8080"

Edit /etc/kubernetes/kubelet

[root@node2 kubernetes]# vim /etc/kubernetes/kubelet

###

# kubernetes kubelet (minion) config

# The address for the info server to serve on (set to 0.0.0.0 or "" for all interfaces)

# Change this to the node's own IP

KUBELET_ADDRESS="--address=192.168.44.202"

# The port for the info server to serve on

KUBELET_PORT="--port=10250"

# You may leave this blank to use the actual hostname

# Change this to the node's own IP

KUBELET_HOSTNAME="--hostname-override=192.168.44.202"

# location of the api-server

KUBELET_API_SERVER="--api-servers=http://192.168.44.201:8080"

# pod infrastructure container

KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=registry.access.redhat.com/rhel7/pod-infrastructure:latest"

# Add your own!

KUBELET_ARGS=""

Note: change the IP addresses to match each node.
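Since the only per-node difference in this file is the IP address, the change can be scripted. The helper below is a minimal sketch rather than part of the original procedure: the name `set_kubelet_ip` is made up for illustration, and it assumes the file contains `KUBELET_ADDRESS=` and `KUBELET_HOSTNAME=` lines in the form shown above.

```shell
# Rewrite the node-specific IP in a kubelet config file.
# $1 = path to the config file, $2 = this node's IP address.
set_kubelet_ip() {
    conf="$1"
    ip="$2"
    tmp=$(mktemp) || return 1
    # Replace only the two lines that carry the node's IP; leave the rest untouched.
    sed \
        -e "s|^KUBELET_ADDRESS=.*|KUBELET_ADDRESS=\"--address=${ip}\"|" \
        -e "s|^KUBELET_HOSTNAME=.*|KUBELET_HOSTNAME=\"--hostname-override=${ip}\"|" \
        "$conf" > "$tmp" || { rm -f "$tmp"; return 1; }
    # Write back through cat so the original file keeps its owner and mode.
    cat "$tmp" > "$conf"
    rm -f "$tmp"
}

# On node3, for example:
#   set_kubelet_ip /etc/kubernetes/kubelet 192.168.44.203
```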

Edit /etc/sysconfig/flanneld

[root@node2 kubernetes]# vim /etc/sysconfig/flanneld

# Flanneld configuration options

# etcd url location. Point this to the server where etcd runs

FLANNEL_ETCD_ENDPOINTS="http://192.168.44.201:2379"

# etcd config key. This is the configuration key that flannel queries

# For address range assignment

FLANNEL_ETCD_PREFIX="/coreos.com/network"

# Any additional options that you want to pass

#FLANNEL_OPTIONS=""

Note: node3 is configured the same way as node2.
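flanneld reads its network definition from the key `<FLANNEL_ETCD_PREFIX>/config` in etcd, and the steps in this article do not show that key being written. The sketch below shows one way to create it with the etcd v2 `etcdctl set` command. The helper name `flannel_network_json` and the range 172.17.0.0/16 are assumptions chosen for illustration; any range that does not overlap the service range 10.1.0.0/16 or the hosts' own network should do.

```shell
# Print the JSON document flannel expects under "<prefix>/config".
# $1 = overlay network in CIDR notation (an assumed value, not from the article).
flannel_network_json() {
    printf '{"Network": "%s"}\n' "$1"
}

# On the master, after etcd is running and before flanneld is started:
#   etcdctl set /coreos.com/network/config "$(flannel_network_json 172.17.0.0/16)"
#   etcdctl get /coreos.com/network/config
```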

The following files were not modified during this setup, but they matter too:

/usr/lib/systemd/system/flanneld.service

[root@node2 kubernetes]# cat /usr/lib/systemd/system/flanneld.service

[Unit]

Description=Flanneld overlay address etcd agent

After=network.target

After=network-online.target

Wants=network-online.target

After=etcd.service

Before=docker.service

[Service]

Type=notify

EnvironmentFile=/etc/sysconfig/flanneld

EnvironmentFile=-/etc/sysconfig/docker-network

ExecStart=/usr/bin/flanneld-start $FLANNEL_OPTIONS

#ExecStart=/usr/bin/flanneld --iface ens33 $FLANNEL_OPTIONS

ExecStartPost=/usr/libexec/flannel/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/docker

Restart=on-failure

[Install]

WantedBy=multi-user.target

WantedBy=docker.service

/usr/lib/systemd/system/docker.service

[root@node2 kubernetes]# cat /usr/lib/systemd/system/docker.service

[Unit]

Description=Docker Application Container Engine

Documentation=http://docs.docker.com

After=network.target flanneld.service

Wants=docker-storage-setup.service

# This line is probably optional, as long as docker is started after flanneld

Requires=flanneld.service

[Service]

Type=notify

NotifyAccess=main

EnvironmentFile=-/run/containers/registries.conf

EnvironmentFile=-/etc/sysconfig/docker

EnvironmentFile=-/etc/sysconfig/docker-storage

EnvironmentFile=-/etc/sysconfig/docker-network

Environment=GOTRACEBACK=crash

Environment=DOCKER_HTTP_HOST_COMPAT=1

Environment=PATH=/usr/libexec/docker:/usr/bin:/usr/sbin

ExecStart=/usr/bin/dockerd-current \
          --add-runtime docker-runc=/usr/libexec/docker/docker-runc-current \
          --default-runtime=docker-runc \
          --exec-opt native.cgroupdriver=systemd \
          --userland-proxy-path=/usr/libexec/docker/docker-proxy-current \
          --init-path=/usr/libexec/docker/docker-init-current \
          --seccomp-profile=/etc/docker/seccomp.json \
          $OPTIONS \
          $DOCKER_STORAGE_OPTIONS \
          $DOCKER_NETWORK_OPTIONS \
          $ADD_REGISTRY \
          $BLOCK_REGISTRY \
          $INSECURE_REGISTRY \
          $REGISTRIES

ExecReload=/bin/kill -s HUP $MAINPID

LimitNOFILE=1048576

LimitNPROC=1048576

LimitCORE=infinity

TimeoutStartSec=0

Restart=on-abnormal

KillMode=process

[Install]

WantedBy=multi-user.target

Startup

The flanneld service on the minion nodes must be started only after the etcd service on the master is up.

Master node

systemctl start etcd

systemctl enable etcd

systemctl start kube-apiserver

systemctl enable kube-apiserver

systemctl start kube-scheduler

systemctl enable kube-scheduler

systemctl start kube-controller-manager

systemctl enable kube-controller-manager

Minion nodes

systemctl start kubelet

systemctl enable kubelet

systemctl start kube-proxy

systemctl enable kube-proxy

systemctl start flanneld

systemctl enable flanneld

systemctl start docker

systemctl enable docker

Note: if docker was started earlier and a docker0 interface already exists (check with ip addr), stop docker first (systemctl stop docker) and delete docker0 (ip link delete docker0).
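The start and enable pairs above can be folded into one helper that stops at the first failure. This is a sketch, not the author's procedure: the name `start_and_enable` is made up, and the service manager is passed in as the first argument so the function can be dry-run with `echo`. In the minion example, flanneld and docker are listed first because the docker.service unit shown earlier is ordered after flanneld.service.

```shell
# Start each unit and enable it at boot, stopping at the first failure.
# $1 = service manager command (systemctl on a real host)
# remaining arguments = unit names, in the order they should start
start_and_enable() {
    ctl="$1"
    shift
    for unit in "$@"; do
        "$ctl" start "$unit" || return 1
        "$ctl" enable "$unit" || return 1
    done
}

# On the master (node1):
#   start_and_enable systemctl etcd kube-apiserver kube-scheduler kube-controller-manager
# On each minion (node2, node3), once etcd on the master is up:
#   start_and_enable systemctl flanneld docker kubelet kube-proxy
# Dry run that only prints what would be executed:
#   start_and_enable echo etcd kube-apiserver
```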

Notes

1. The firewall and SELinux must be turned off on every node

- Stop the firewall

systemctl stop firewalld

systemctl disable firewalld

- Disable SELinux

Temporarily:

setenforce 0

Permanently:

[root@node2 kubernetes]# vim /etc/selinux/config

# This file controls the state of SELinux on the system.

# SELINUX= can take one of these three values:

# enforcing - SELinux security policy is enforced.

# permissive - SELinux prints warnings instead of enforcing.

# disabled - No SELinux policy is loaded.

SELINUX=disabled

# SELINUXTYPE= can take one of three two values:

# targeted - Targeted processes are protected,

# minimum - Modification of targeted policy. Only selected processes are protected.

# mls - Multi Level Security protection.

SELINUXTYPE=targeted
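To confirm the permanent setting on each node, the configured mode can be read back from the file. A minimal sketch; the helper name `selinux_config_mode` is made up for illustration.

```shell
# Print the mode configured on the SELINUX= line of an SELinux config file.
# $1 = path to the file (defaults to /etc/selinux/config).
# The pattern matches only lines starting with "SELINUX=", so the
# commented lines and SELINUXTYPE= are ignored.
selinux_config_mode() {
    sed -n 's/^SELINUX=//p' "${1:-/etc/selinux/config}"
}

# On a node:
#   selinux_config_mode      # mode that applies after the next reboot
#   getenforce               # mode in effect right now
```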

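2. Stopping the firewall alone is not enough: pods still cannot ping each other. The iptables rules also have to be opened up and flushed (this one was a real trap; it took a lot of searching to find the cause).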

iptables -P INPUT ACCEPT

iptables -P FORWARD ACCEPT

iptables -F

iptables -L -n

Managing Kubernetes through the web UI

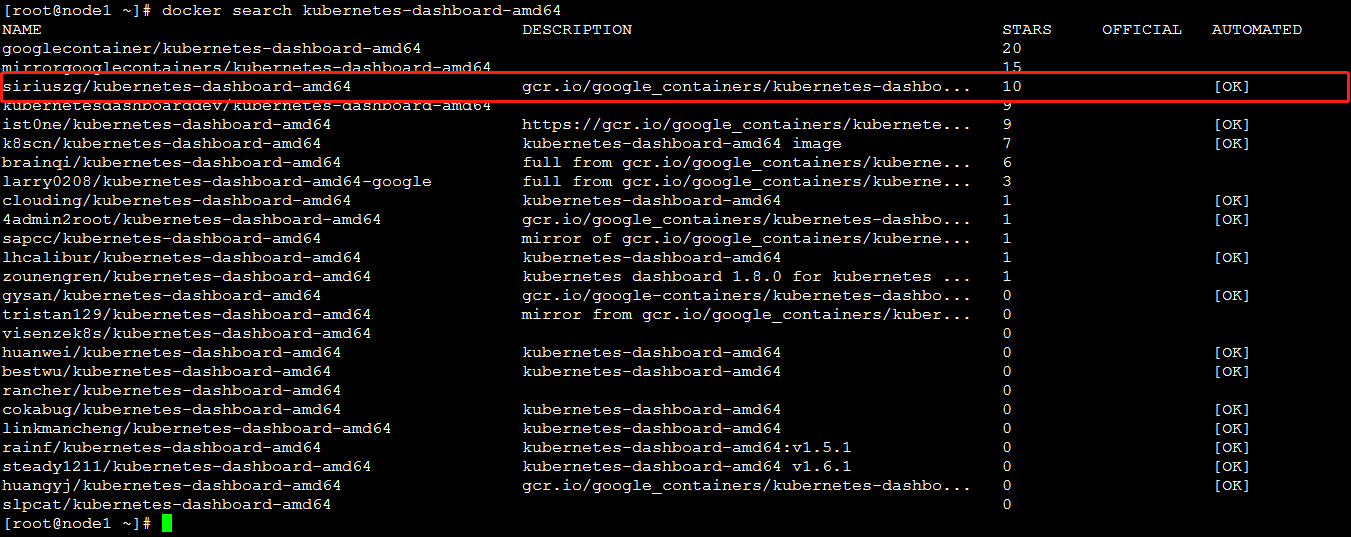

1. Download kubernetes-dashboard-amd64

docker search kubernetes-dashboard-amd64

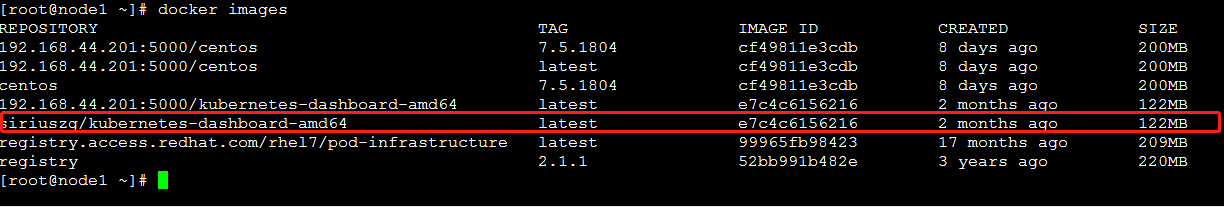

docker images

2. Push kubernetes-dashboard-amd64 to the local private registry

docker tag siriuszg/kubernetes-dashboard-amd64:latest 192.168.44.201:5000/kubernetes-dashboard-amd64:latest
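Steps 1 and 2 only show `docker search`, `docker images`, and `docker tag`; getting the image into the registry also needs a pull and a push. The sketch below strings the three commands together. It assumes a registry is already running at 192.168.44.201:5000 and that the Docker daemons are allowed to use it (for example as an insecure registry), neither of which is shown in this article. The `mirror_image` name and the `DOCKER` override variable are made up for illustration.

```shell
# Command used to talk to Docker; override (e.g. DOCKER=echo) for a dry run.
DOCKER="${DOCKER:-docker}"

# Pull an image, retag it for a private registry, and push it there.
# $1 = source image reference
# $2 = target reference, including the registry host and port
mirror_image() {
    "$DOCKER" pull "$1" || return 1
    "$DOCKER" tag "$1" "$2" || return 1
    "$DOCKER" push "$2"
}

# With the names used in this article:
#   mirror_image siriuszg/kubernetes-dashboard-amd64:latest \
#       192.168.44.201:5000/kubernetes-dashboard-amd64:latest
```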

3. Create the kubernetes-dashboard.yaml file on the master server

[root@node1 ~]# vim kubernetes-dashboard.yaml

kind: Deployment
apiVersion: extensions/v1beta1
metadata:
  labels:
    app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
spec:
  replicas: 1
  selector:
    matchLabels:
      app: kubernetes-dashboard
  template:
    metadata:
      labels:
        app: kubernetes-dashboard
      # Comment the following annotation if Dashboard must not be deployed on master
      annotations:
        scheduler.alpha.kubernetes.io/tolerations: |
          [
            {
              "key": "dedicated",
              "operator": "Equal",
              "value": "master",
              "effect": "NoSchedule"
            }
          ]
    spec:
      containers:
      - name: kubernetes-dashboard
        image: 192.168.44.201:5000/kubernetes-dashboard-amd64
        imagePullPolicy: Always
        ports:
        - containerPort: 9090
          protocol: TCP
        args:
          # Uncomment the following line to manually specify Kubernetes API server Host
          # If not specified, Dashboard will attempt to auto discover the API server and connect
          # to it. Uncomment only if the default does not work.
          - --apiserver-host=192.168.44.201:8080
        livenessProbe:
          httpGet:
            path: /
            port: 9090
          initialDelaySeconds: 30
          timeoutSeconds: 30
---
kind: Service
apiVersion: v1
metadata:
  labels:
    app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
spec:
  type: NodePort
  ports:
  - port: 80
    targetPort: 9090
  selector:
    app: kubernetes-dashboard

4. Create kubernetes-dashboard

[root@node1 ~]# kubectl create -f kubernetes-dashboard.yaml

Error from server (AlreadyExists): error when creating "kubernetes-dashboard.yaml": deployments.extensions "kubernetes-dashboard" already exists

Error from server (AlreadyExists): error when creating "kubernetes-dashboard.yaml": services "kubernetes-dashboard" already exists

If the errors above appear, delete everything that kubernetes-dashboard.yaml previously created, then run the create command again.

[root@node1 ~]# kubectl delete -f kubernetes-dashboard.yaml

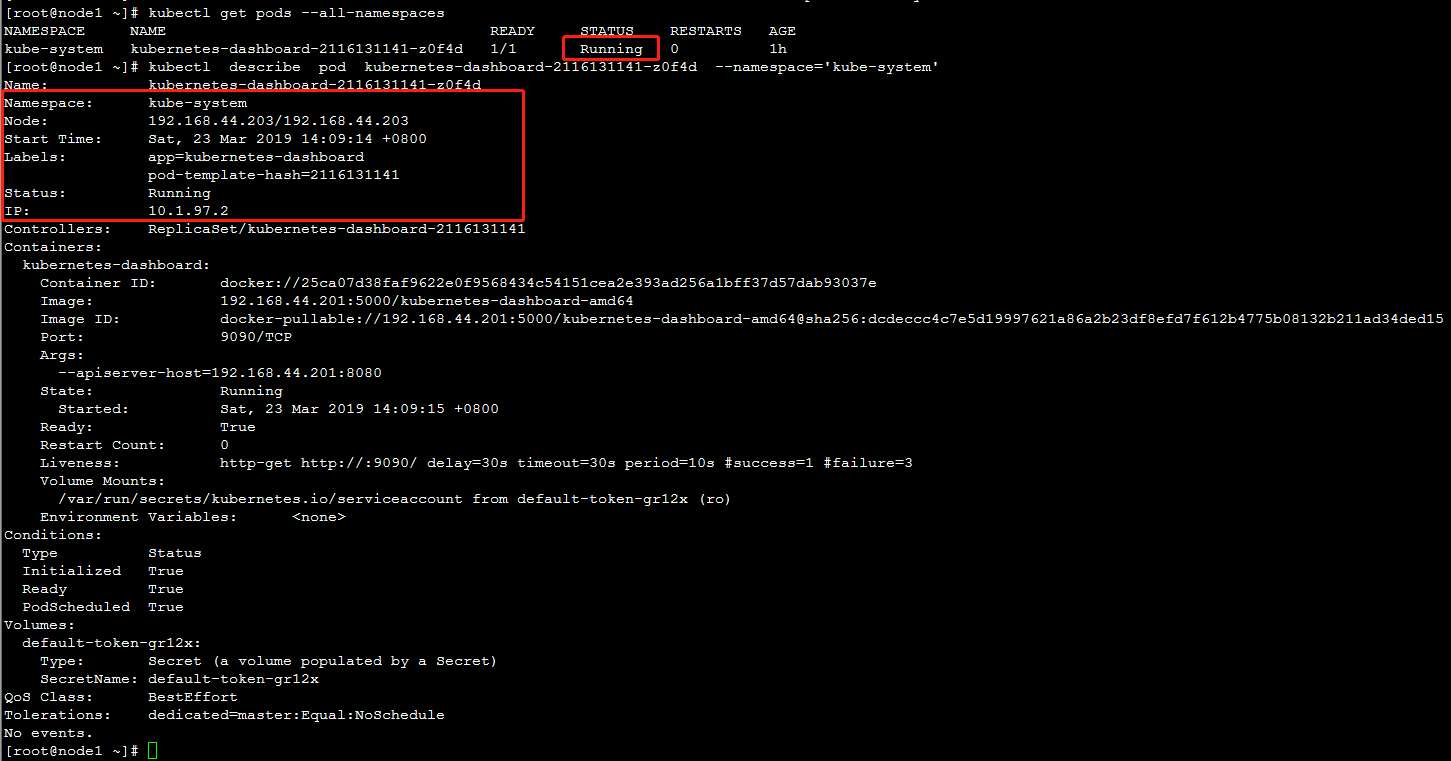

5. View pod details

The UI can be reached at 192.168.44.201:8080/ui

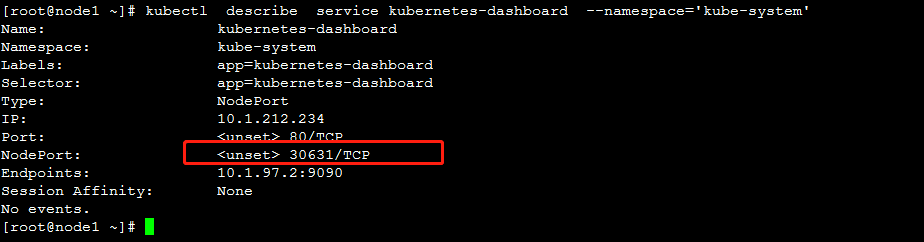

6. View service details

The UI can be reached at 192.168.44.203:8080/ui

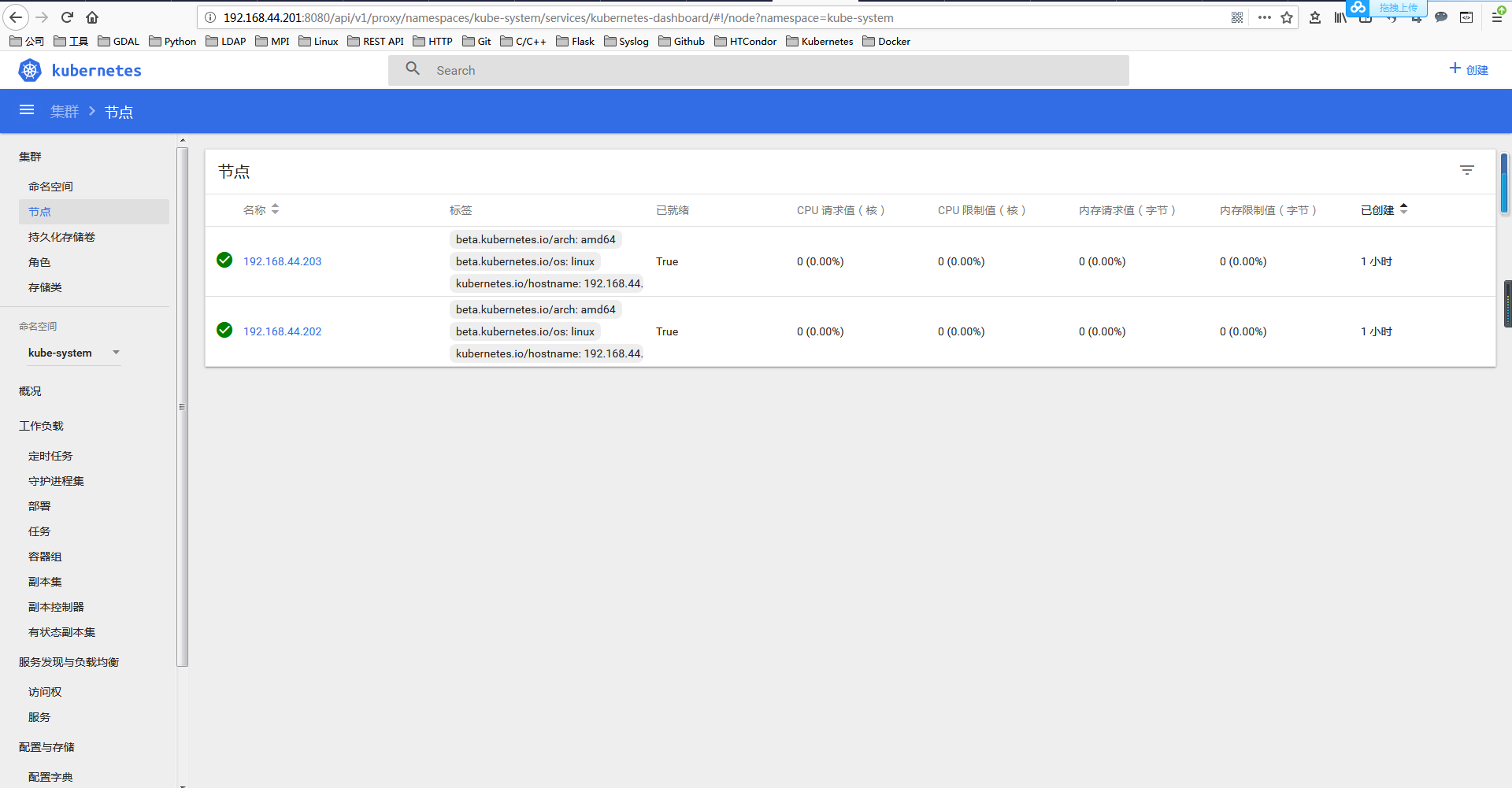

7. Web UI