In the Services view we create the following application (the JSON below was copied after the app was created):

    {
      "id": "/nginxtest",
      "cmd": null,
      "cpus": 0.1,
      "mem": 65,
      "disk": 0,
      "instances": 1,
      "acceptedResourceRoles": [
        "*"
      ],
      "container": {
        "type": "DOCKER",
        "docker": {
          "forcePullImage": true,
          "image": "10.20.31.223:5000/nginx",
          "parameters": [],
          "privileged": false
        },
        "volumes": [],
        "portMappings": [
          {
            "containerPort": 80,
            "hostPort": 0,
            "labels": {},
            "protocol": "tcp",
            "servicePort": 10026
          }
        ]
      },
      "healthChecks": [
        {
          "gracePeriodSeconds": 10,
          "ignoreHttp1xx": false,
          "intervalSeconds": 2,
          "maxConsecutiveFailures": 10,
          "path": "/",
          "portIndex": 0,
          "protocol": "HTTP",
          "ipProtocol": "IPv4",
          "timeoutSeconds": 10,
          "delaySeconds": 15
        }
      ],
      "labels": {
        "HAPROXY_GROUP": "sdgx"
      },
      "networks": [
        {
          "mode": "container/bridge"
        }
      ],
      "portDefinitions": []
    }

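In this application, servicePort is 10026, which means the external port registered on Marathon-lb is 10026. The HAPROXY_GROUP label is set to sdgx, so the app is registered with the external load balancer.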

At this point, opening port 10026 on the public slave shows the page of the running Nginx: http://10.20.31.101:10026/.

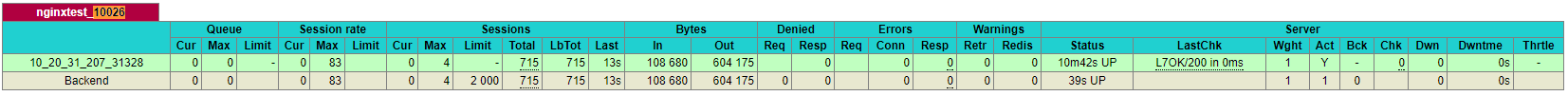

If we open the HAProxy stats page on the public slave at http://10.20.31.101:9090/haproxy?stats, we can see the following mapping.

Next we deploy marathon-lb-autoscale. It monitors HAProxy, and when the RPS (requests per second) exceeds a given target, it scales the application out.

    {
      "id": "/autoscaling/sdgx-marathon-lb-autoscale",
      "cmd": null,
      "cpus": 0.1,
      "mem": 65,
      "disk": 0,
      "instances": 1,
      "acceptedResourceRoles": [
        "*"
      ],
      "container": {
        "type": "DOCKER",
        "docker": {
          "forcePullImage": true,
          "image": "10.20.31.223:5000/marathon-lb-autoscale",
          "parameters": [],
          "privileged": false
        },
        "volumes": []
      },
      "portDefinitions": [
        {
          "port": 10028,
          "protocol": "tcp"
        }
      ],
      "args": [
        "--marathon",
        "http://10.20.31.210:8080",
        "--haproxy",
        "http://10.20.31.101:9090",
        "--target-rps",
        "100",
        "--apps",
        "nginxtest_10026"
      ]
    }
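The value passed to --apps, nginxtest_10026, looks like the app id without its leading slash joined to the service port, and the autoscaler needs a request rate for that HAProxy backend. The following is a minimal sketch of both steps, not the autoscaler's actual code. It assumes the naming rule inferred from this example and assumes the stats are read in HAProxy's CSV form; the helper names, the trimmed column set, the server row name, and the sample figures are all made up for illustration.

```python
import csv
import io


def backend_name(app_id: str, service_port: int) -> str:
    """Build the name used in --apps (assumed rule: id without leading '/', then '_port')."""
    return f"{app_id.strip('/').replace('/', '_')}_{service_port}"


def backend_rate(stats_csv: str, pxname: str) -> float:
    """Return the 'rate' column of the BACKEND row for the given proxy name."""
    # HAProxy's CSV header line starts with "# ", which must be dropped
    # before the header can be used as column names.
    text = stats_csv.lstrip("# ")
    for row in csv.DictReader(io.StringIO(text)):
        if row["pxname"] == pxname and row["svname"] == "BACKEND":
            return float(row["rate"] or 0)
    raise KeyError(f"no BACKEND row for {pxname}")


# Made-up, heavily trimmed sample; real HAProxy output has many more columns.
SAMPLE = (
    "# pxname,svname,scur,rate\n"
    "nginxtest_10026,FRONTEND,3,120\n"
    "nginxtest_10026,example_server_1,3,118\n"
    "nginxtest_10026,BACKEND,3,118\n"
)

name = backend_name("/nginxtest", 10026)
print(name, backend_rate(SAMPLE, name))
```

Keeping the name construction separate from the CSV lookup makes it easy to check each assumption against the real stats page before relying on it.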

Next, we deploy the application /autoscaling/siege to send requests to nginxtest:

    {
      "id": "/autoscaling/siege",
      "cmd": null,
      "cpus": 0.5,
      "mem": 65,
      "disk": 0,
      "instances": 0,
      "acceptedResourceRoles": [
        "*"
      ],
      "container": {
        "type": "DOCKER",
        "docker": {
          "forcePullImage": true,
          "image": "10.20.31.223:5000/yokogawa/siege:latest",
          "parameters": [],
          "privileged": false
        },
        "volumes": []
      },
      "portDefinitions": [
        {
          "port": 10029,
          "name": "default",
          "protocol": "tcp"
        }
      ],
      "args": [
        "-d1",
        "-r1000",
        "-c100",
        "http://10.20.31.101:10026/"
      ]
    }

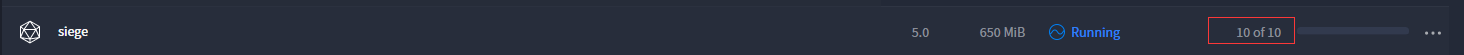

Looking at the HAProxy stats page, we can see that requests are already arriving. Now we scale /autoscaling/siege up to 10 instances to put load on the nginxtest service.
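Scaling /autoscaling/siege can also be done through Marathon's REST API instead of the UI. A minimal sketch, assuming the Marathon endpoint http://10.20.31.210:8080 taken from the autoscaler arguments and a PUT to /v2/apps/{appId} with a new instance count. The helper name is hypothetical, and the request is only built here, not sent.

```python
import json
import urllib.request


def build_scale_request(marathon_url: str, app_id: str, instances: int) -> urllib.request.Request:
    """Build (but do not send) a PUT request that sets the app's instance count."""
    url = f"{marathon_url.rstrip('/')}/v2/apps/{app_id.strip('/')}"
    body = json.dumps({"instances": instances}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="PUT",
        headers={"Content-Type": "application/json"},
    )


req = build_scale_request("http://10.20.31.210:8080", "/autoscaling/siege", 10)
print(req.get_method(), req.full_url, req.data.decode("utf-8"))

# To actually apply the change against a reachable Marathon:
# urllib.request.urlopen(req)
```

Calling the same helper with 0 instances would correspond to stopping the load again.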

After a while, sdgx-marathon-lb-autoscale.autoscaling takes action (the following is the log of the sdgx-marathon-lb-autoscale.autoscaling service).

    [2018-07-23T08:38:56] Starting autoscale controller
    [2018-07-23T08:38:56] Options: #<OpenStruct marathon=#<URI::HTTP http://10.20.31.210:8080>, haproxy=[#<URI::HTTP http://10.20.31.101:9090>], interval=60, samples=10, cooldown=5, target_rps=100, apps=#<Set: {"nginxtest_10026"}>, threshold_percent=0.5, threshold_instances=3, intervals_past_threshold=3>
    [2018-07-23T08:50:56] Scaling nginxtest from 1 to 10 instances
    [2018-07-23T08:50:56] app_id=nginxtest rate_avg=908.2 target_rps=100 current_rps=908.2
    [2018-07-23T08:50:56] 1 apps require scaling

The single nginxtest instance is scaled out to 10 nginxtest instances.

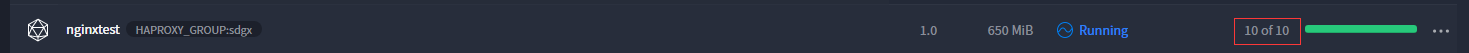

When we scale /autoscaling/siege from 10 instances back to 0, nginxtest goes from 10 instances back to 1.

    [2018-07-23T08:38:56] Starting autoscale controller
    [2018-07-23T08:38:56] Options: #<OpenStruct marathon=#<URI::HTTP http://10.20.31.210:8080>, haproxy=[#<URI::HTTP http://10.20.31.101:9090>], interval=60, samples=10, cooldown=5, target_rps=100, apps=#<Set: {"nginxtest_10026"}>, threshold_percent=0.5, threshold_instances=3, intervals_past_threshold=3>
    [2018-07-23T08:50:56] Scaling nginxtest from 1 to 10 instances
    [2018-07-23T08:50:56] app_id=nginxtest rate_avg=908.2 target_rps=100 current_rps=908.2
    [2018-07-23T08:50:56] 1 apps require scaling
    [2018-07-23T08:55:56] Not scaling nginxtest yet because it needs to cool down (scaled 300.0s ago)
    [2018-07-23T08:55:56] app_id=nginxtest rate_avg=553.0/10 target_rps=100 current_rps=55.3
    [2018-07-23T08:56:56] Not scaling nginxtest yet because it needs to cool down (scaled 360.0s ago)
    [2018-07-23T08:56:56] app_id=nginxtest rate_avg=561.4/10 target_rps=100 current_rps=56.14
    [2018-07-23T08:57:56] Not scaling nginxtest yet because it needs to cool down (scaled 420.0s ago)
    [2018-07-23T08:57:56] app_id=nginxtest rate_avg=570.7/10 target_rps=100 current_rps=57.07000000000001
    [2018-07-23T08:58:56] Not scaling nginxtest yet because it needs to cool down (scaled 480.0s ago)
    [2018-07-23T08:58:56] app_id=nginxtest rate_avg=570.7/10 target_rps=100 current_rps=57.07000000000001
    [2018-07-23T08:59:56] Not scaling nginxtest yet because it needs to cool down (scaled 540.0s ago)
    [2018-07-23T08:59:56] app_id=nginxtest rate_avg=487.1/10 target_rps=100 current_rps=48.71
    [2018-07-23T09:00:56] Not scaling nginxtest yet because it needs to cool down (scaled 600.0s ago)
    [2018-07-23T09:00:56] app_id=nginxtest rate_avg=397.7/10 target_rps=100 current_rps=39.769999999999996
    [2018-07-23T09:01:56] Not scaling nginxtest yet because it needs to cool down (scaled 660.0s ago)
    [2018-07-23T09:01:56] app_id=nginxtest rate_avg=277.9/10 target_rps=100 current_rps=27.79
    [2018-07-23T09:02:56] Not scaling nginxtest yet because it needs to cool down (scaled 720.0s ago)
    [2018-07-23T09:02:56] app_id=nginxtest rate_avg=227.5/10 target_rps=100 current_rps=22.75
    [2018-07-23T09:03:56] Not scaling nginxtest yet because it needs to cool down (scaled 780.0s ago)
    [2018-07-23T09:03:56] app_id=nginxtest rate_avg=176.5/10 target_rps=100 current_rps=17.65
    [2018-07-23T09:04:56] Not scaling nginxtest yet because it needs to cool down (scaled 840.0s ago)
    [2018-07-23T09:04:56] app_id=nginxtest rate_avg=128.5/10 target_rps=100 current_rps=12.85
    [2018-07-23T09:05:56] Scaling nginxtest from 10 to 1 instances
    [2018-07-23T09:05:56] app_id=nginxtest rate_avg=82.0 target_rps=100 current_rps=8.2
    [2018-07-23T09:05:56] 1 apps require scaling
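The figures in the logs are consistent with a simple rule: the instance count is the averaged total rate divided by target_rps, rounded up, and current_rps is the averaged rate divided by the current instance count. The sketch below only reproduces that arithmetic as inferred from the log lines; it is not taken from the marathon-lb-autoscale source. The function names and the floor of one instance are assumptions.

```python
import math


def target_instances(rate_avg: float, target_rps: float) -> int:
    """Instances needed so that each one serves at most target_rps (assumed floor of 1)."""
    return max(1, math.ceil(rate_avg / target_rps))


def per_instance_rps(rate_avg: float, instances: int) -> float:
    """Average request rate handled by each running instance."""
    return rate_avg / instances


# Scale-out at 08:50:56: rate_avg=908.2 with target_rps=100
print(target_instances(908.2, 100))  # 10

# Scale-in at 09:05:56: rate_avg=82.0 with target_rps=100
print(target_instances(82.0, 100))  # 1

# Intermediate line at 08:55:56: rate_avg=553.0 spread over 10 instances
print(round(per_instance_rps(553.0, 10), 2))  # 55.3
```

The intermediate "needs to cool down" lines show that a lower computed count is not applied immediately; in this run the scale-in only happened at 09:05:56.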