上篇中提到解决的一个问题是mysql驱动报的:

com.mysql.jdbc.exceptions.jdbc4.CommunicationsException: Communications link failure

昨天最后的“解决方法”是增加Mysql的max_connections,当时就觉得其实根本没有解决问题,果然今天早上来一压问题又重现了:

[2013-06-20 10:43:13.727][ERROR]执行存储过程{SEL_LocatlityRelationByName}出错:com.mysql.jdbc.exceptions.jdbc4.CommunicationsException: Communications link failure The last packet sent successfully to the server was 0 milliseconds ago. The driver has not received any packets from the server. at sun.reflect.GeneratedConstructorAccessor55.newInstance(Unknown Source) at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45) at java.lang.reflect.Constructor.newInstance(Constructor.java:525) at com.mysql.jdbc.Util.handleNewInstance(Util.java:411) at com.mysql.jdbc.SQLError.createCommunicationsException(SQLError.java:1121) at com.mysql.jdbc.MysqlIO.<init>(MysqlIO.java:357) at com.mysql.jdbc.ConnectionImpl.coreConnect(ConnectionImpl.java:2479) at com.mysql.jdbc.ConnectionImpl.connectOneTryOnly(ConnectionImpl.java:2516) at com.mysql.jdbc.ConnectionImpl.createNewIO(ConnectionImpl.java:2301) at com.mysql.jdbc.ConnectionImpl.<init>(ConnectionImpl.java:834) at com.mysql.jdbc.JDBC4Connection.<init>(JDBC4Connection.java:47) at sun.reflect.GeneratedConstructorAccessor46.newInstance(Unknown Source) at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45) at java.lang.reflect.Constructor.newInstance(Constructor.java:525) at com.mysql.jdbc.Util.handleNewInstance(Util.java:411) at com.mysql.jdbc.ConnectionImpl.getInstance(ConnectionImpl.java:416) at com.mysql.jdbc.NonRegisteringDriver.connect(NonRegisteringDriver.java:346) at java.sql.DriverManager.getConnection(DriverManager.java:579) at java.sql.DriverManager.getConnection(DriverManager.java:221) at com.iflytek.ossp.vaserver.data.SQLHelper.getConnection(SQLHelper.java:84) at com.iflytek.ossp.vaserver.data.SQLHelper.getResultSetExecProc(SQLHelper.java:46) at com.iflytek.ossp.vaserver.data.VADataHandler.getLocRelation(VADataHandler.java:87) at com.iflytek.ossp.vaserver.cmd.weather.WeatherQueryClient.getCmccCityId(WeatherQueryClient.java:481) at com.iflytek.ossp.vaserver.cmd.weather.WeatherQueryClient.getMLUInfo(WeatherQueryClient.java:555) at com.iflytek.ossp.vaserver.cmd.mainlist.MainListUpdate.businessProcess(MainListUpdate.java:68) at com.iflytek.ossp.vaserver.cmd.mainlist.MainListUpdate.execute(MainListUpdate.java:26) at com.iflytek.ossp.vaserver.VAClientHttpServlet.businessProc(VAClientHttpServlet.java:133) at com.iflytek.ossp.vaserver.VAClientHttpServlet.doPost(VAClientHttpServlet.java:78) at javax.servlet.http.HttpServlet.service(HttpServlet.java:647) at javax.servlet.http.HttpServlet.service(HttpServlet.java:728) at org.apache.catalina.core.ApplicationFilterChain.internalDoFilter(ApplicationFilterChain.java:305) at org.apache.catalina.core.ApplicationFilterChain.doFilter(ApplicationFilterChain.java:210) at com.iflytek.ossp.vaserver.EncodingFilter.doFilter(EncodingFilter.java:45) at org.apache.catalina.core.ApplicationFilterChain.internalDoFilter(ApplicationFilterChain.java:243) at org.apache.catalina.core.ApplicationFilterChain.doFilter(ApplicationFilterChain.java:210) at org.apache.catalina.core.StandardWrapperValve.invoke(StandardWrapperValve.java:222) at org.apache.catalina.core.StandardContextValve.invoke(StandardContextValve.java:123) at org.apache.catalina.authenticator.AuthenticatorBase.invoke(AuthenticatorBase.java:472) at org.apache.catalina.core.StandardHostValve.invoke(StandardHostValve.java:171) at org.apache.catalina.valves.ErrorReportValve.invoke(ErrorReportValve.java:99) at org.apache.catalina.valves.AccessLogValve.invoke(AccessLogValve.java:953) at org.apache.catalina.core.StandardEngineValve.invoke(StandardEngineValve.java:118) at org.apache.catalina.connector.CoyoteAdapter.service(CoyoteAdapter.java:408) at org.apache.coyote.http11.AbstractHttp11Processor.process(AbstractHttp11Processor.java:1008) at org.apache.coyote.AbstractProtocol$AbstractConnectionHandler.process(AbstractProtocol.java:589) at org.apache.tomcat.util.net.JIoEndpoint$SocketProcessor.run(JIoEndpoint.java:312) at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1145) at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:615) at java.lang.Thread.run(Thread.java:722) Caused by: java.net.SocketException: 打开的文件过多 at java.net.Socket.createImpl(Socket.java:447) at java.net.Socket.<init>(Socket.java:421) at java.net.Socket.<init>(Socket.java:241) at com.mysql.jdbc.StandardSocketFactory.connect(StandardSocketFactory.java:259) at com.mysql.jdbc.MysqlIO.<init>(MysqlIO.java:307) ... 43 more

不过这次我将堆栈信息打的更为详细了点,仔细一看注意到了上面标红的一行:Caused by: java.net.SocketException: 打开的文件过多。打开文件太多,到底打开什么文件太多呢?SocketException让我想到了TIME_WAIT,于是在压力测试进行的过程中查看了下TIME_WAIT执行下面的命令:

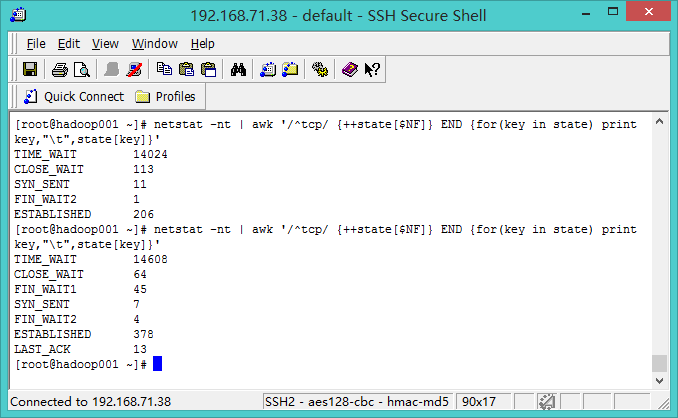

netstat -nt | awk '/^tcp/ {++state[$NF]} END {for(key in state) print key," ",state[key]}'

发现TIME_WAIT一路飙升最后达到了14000多:

于是我怀疑是因为TIME_WAIT过大导致的,于是Google解决TIME_WAIT过大的方法:

1)通过增加可用端口:

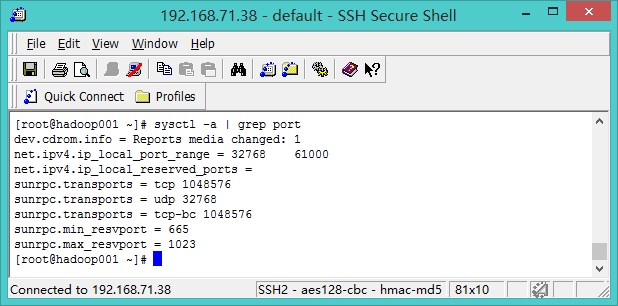

用下面的命令可以查看系统当前使用的端口区间:

sysctl -a | grep port

使用下面的命令可以增加系统使用的端口区间:

shell> echo "net.ipv4.ip_local_port_range = 10240 61000" >> /etc/sysctl.conf shell> sysctl -p

结果:TIME_WAIT依然很大,mysql还是报之前的错误。

2)修改系统内核参数tcp_tw_reuse和tcp_tw_recycle

可以用下面的命令查看这两个参数的值:

sysctl -a | grep tcp_tw_reuse sysctl -a | grep tcp_tw_recycle

默认这两个参数都是0,这两个参数的作用是让系统能重用端口。(注:通过sysctl命令修改内核参数,重启后会还原,要想持久化可以参考修改端口的方法。)

注意:tcp_tw_recycle只有在tcp_tw_reuse为1的时候置为1才有效。

结果:发现TIME_WAIT明显减少了,最高维持在3300多:

但是mysql的问题依然存在,但似乎减少了点。

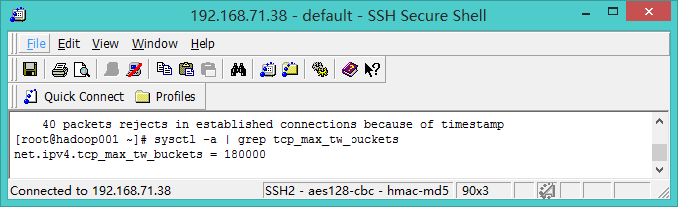

3)网上有说在NAT环境下打开tcp_tw_recycle可能会引起其他更复杂的网络问题,具体参看官方文档:https://www.kernel.org/doc/Documentation/networking/ip-sysctl.txt。于是我使用了网上说的另外一种解决TIME_WAIT过大的问题:修改系统参数tcp_max_tw_buckets,通过设置它系统会将多余的TIME_WAIT删除掉。默认该值为18000:

我将该值设置为5000:

shell> sysctl net.ipv4.tcp_max_tw_buckets=5000

结果:TIME_WAIT相比第2)种方法没有明显减少,问题依然存在。

难道不是因为TIME_WAIT过大造成的?产生这个异常到到底说明了什么:

这些异常指出操作系统 (OS) 资源问题和操作系统与 JVM 进程用完文件描述符的原因。

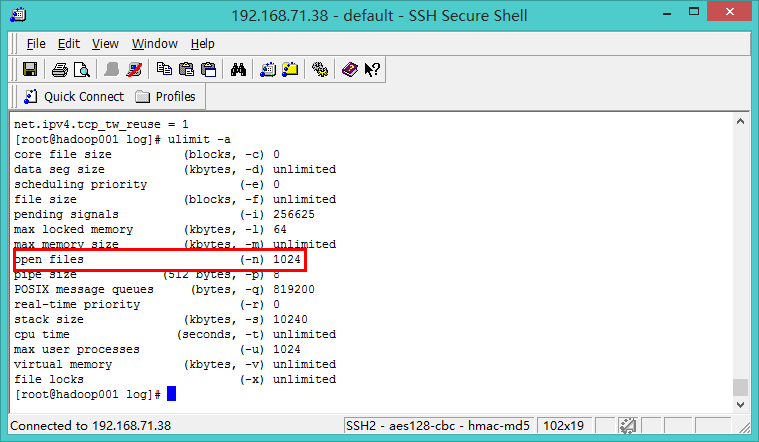

难道真的是系统文件描述符用完了!用过ulimit -a命令可以查看系统最大的打开文件数:

通过下面的命令可以增加这个值:

ulimit -n 8192

但是就算这样能解决我的问题,也是治标不治本。于是我重新仔细检查了下日志,因为之前一直怀疑是mysql的问题,所以只关心mysql的问题。结果发现另外一处发起http请求的时候出现了类似的异常信息:

java.net.SocketException: 打开的文件过多

于是最后将问题定位在了下面的发起http请求的代码中:

public boolean get(String url, StringBuffer content, String encoding) { HttpClient client = new HttpClient(); HttpMethod method = new GetMethod(url); // 设置url连接超时 client.getHttpConnectionManager().getParams() .setConnectionTimeout(timeOut); // 设置读取数据超时 client.getHttpConnectionManager().getParams().setSoTimeout(timeOut); method.getParams().setParameter(HttpMethodParams.HTTP_CONTENT_CHARSET, encoding); try { client.executeMethod(method); String respString = method.getResponseBodyAsString(); content.append(respString); } catch (ConnectTimeoutException e) { errorString = "Connect Timeout:" + url; return false; } catch (SocketTimeoutException e) { errorString = "Socket Timeout:" + url; return false; } catch (IOException e) { errorString = e.toString(); return false; } finally { method.releaseConnection(); } return true; }

最后证明真的是因为http请求没有释放socket资源造成的,mysql只是被“坑”了而已。

最后给出修改后的代码:

public boolean get(String url, StringBuffer content, String encoding) { HttpClient client = new HttpClient(); HttpMethod method = new GetMethod(url); // 设置url连接超时 client.getHttpConnectionManager().getParams() .setConnectionTimeout(timeOut); // 设置读取数据超时 client.getHttpConnectionManager().getParams().setSoTimeout(timeOut); // 这两行解决 too many open files问题 by jdzhan client.getParams().setBooleanParameter("http.protocol.expect-continue", false); // 解决Httpclient远程请求所造成Socket没有释放 by jdzhan method.addRequestHeader("Connection", "close"); method.getParams().setParameter(HttpMethodParams.HTTP_CONTENT_CHARSET, encoding); try { client.executeMethod(method); String respString = method.getResponseBodyAsString(); content.append(respString); } catch (ConnectTimeoutException e) { errorString = "Connect Timeout:" + url; return false; } catch (SocketTimeoutException e) { errorString = "Socket Timeout:" + url; return false; } catch (IOException e) { errorString = e.toString(); return false; } finally { method.releaseConnection(); } return true; }