    import pandas as pd
    import numpy as np

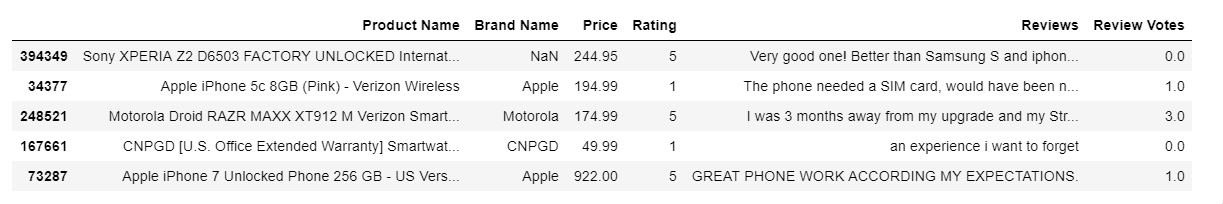

    # Load the data
    df = pd.read_csv('Amazon_Unlocked_Mobile.csv')

    # Sample the data to speed up computation
    # Comment out this line to match with lecture
    df = df.sample(frac=0.1, random_state=10)

    df.head()

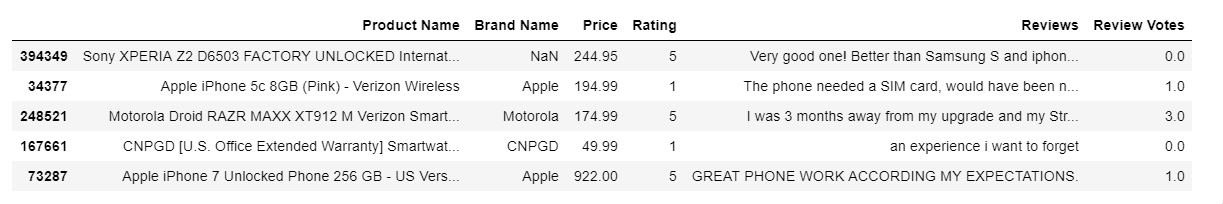
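The sampling step above is reproducible because `random_state` is fixed: the same 10% of rows is drawn on every run. A minimal sketch of that behavior, using a hypothetical toy frame rather than the Amazon data:

```python
import pandas as pd

# Hypothetical toy frame standing in for the review data
frame = pd.DataFrame({'x': range(100)})

# Two draws with the same seed select exactly the same rows
a = frame.sample(frac=0.1, random_state=10)
b = frame.sample(frac=0.1, random_state=10)

print(len(a))                    # 10% of 100 rows
print(a.index.equals(b.index))   # same rows, same order
```

Changing or removing `random_state` would give a different subset, and therefore different numbers in every cell that follows.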

    # Drop missing values
    df.dropna(inplace=True)

    # Remove any 'neutral' ratings equal to 3
    df = df[df['Rating'] != 3]

    # Encode 4s and 5s as 1 (rated positively)
    # Encode 1s and 2s as 0 (rated poorly)
    df['Positively Rated'] = np.where(df['Rating'] > 3, 1, 0)
    df.head(10)
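The cleaning and label-encoding steps can be checked on a small example. The sketch below uses a hypothetical toy frame (the rows are made up) and adds `.copy()` after filtering, which is one way to avoid pandas' `SettingWithCopyWarning` when a column is assigned to a filtered frame:

```python
import numpy as np
import pandas as pd

# Hypothetical toy ratings, not taken from the Amazon dataset
toy = pd.DataFrame({
    'Reviews': ['great phone', 'it is ok', None, 'stopped working', 'love it'],
    'Rating': [5, 3, 4, 1, 4],
})

# Drop rows with missing values, then drop neutral (3-star) ratings.
# .copy() makes the filtered frame independent of the original.
toy = toy.dropna()
toy = toy[toy['Rating'] != 3].copy()

# 4 and 5 stars -> 1 (positive); 1 and 2 stars -> 0 (negative)
toy['Positively Rated'] = np.where(toy['Rating'] > 3, 1, 0)

labels = toy['Positively Rated'].tolist()
print(labels)
```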

    # Most ratings are positive
    df['Positively Rated'].mean()

0.7471776686078667
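With roughly 75% of the labels positive, a classifier that always predicts "positive" would already look accurate, which is one reason to evaluate with AUC as well. A small sketch with made-up labels (not the notebook's data):

```python
import numpy as np
from sklearn.metrics import accuracy_score, roc_auc_score

# Hypothetical labels: three positive, one negative (75% positive)
y = np.array([1, 1, 1, 0])

# A trivial "model" that predicts positive for every review
always_positive = np.ones_like(y)

acc = accuracy_score(y, always_positive)
auc = roc_auc_score(y, always_positive)
print(acc, auc)
```

Accuracy rewards the majority-class guess, while AUC stays at chance level because constant predictions cannot rank positives above negatives.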

    from sklearn.model_selection import train_test_split

    # Split data into training and test sets
    X_train, X_test, y_train, y_test = train_test_split(df['Reviews'],
                                                        df['Positively Rated'],
                                                        random_state=0)
    print('X_train first entry:\n\n', X_train.iloc[0])
    print('\n\nX_train shape: ', X_train.shape)

X_train first entry:

Everything about it is awesome!

X_train shape: (23052,)
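`train_test_split` holds out 25% of the rows by default. Since the classes are imbalanced, passing `stratify` is an optional variation (not used in the cell above) that keeps the positive rate the same in both splits. A sketch on hypothetical toy data:

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Hypothetical toy data: 100 documents, 80% positive
docs = np.array(['doc {}'.format(i) for i in range(100)])
labels = np.array([1] * 80 + [0] * 20)

# Default test_size is 0.25; stratify preserves the class ratio
X_tr, X_te, y_tr, y_te = train_test_split(
    docs, labels, random_state=0, stratify=labels)

print(X_tr.shape, X_te.shape)
print(y_tr.mean(), y_te.mean())
```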

CountVectorizer

    from sklearn.feature_extraction.text import CountVectorizer

    # Fit the CountVectorizer to the training data
    vect = CountVectorizer().fit(X_train)
    # Note: in scikit-learn 1.0 and later, use get_feature_names_out();
    # get_feature_names() was removed in 1.2
    vect.get_feature_names()[::2000]

['00',

'arroja',

'comapañias',

'dvds',

'golden',

'lands',

'oil',

'razonable',

'smallsliver',

'tweak']

    len(vect.get_feature_names())

19601

    # Transform the documents in the training data to a document-term matrix
    X_train_vectorized = vect.transform(X_train)

    X_train_vectorized

<23052x19601 sparse matrix of type '<class 'numpy.int64'>'

with 613289 stored elements in Compressed Sparse Row format>
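To make the document-term matrix concrete, here is a minimal sketch on a hypothetical three-document corpus. Each row is a document, each column a vocabulary token, and each entry a count. The names `corpus`, `toy_vect`, and `dtm` are illustrative only:

```python
from sklearn.feature_extraction.text import CountVectorizer

# Hypothetical three-document corpus
corpus = ['great phone great price', 'terrible battery', 'great battery']

toy_vect = CountVectorizer().fit(corpus)

# vocabulary_ maps each token to its column index; sort tokens by column
vocab = sorted(toy_vect.vocabulary_, key=toy_vect.vocabulary_.get)
print(vocab)

# Sparse matrix of token counts, shown densely for inspection
dtm = toy_vect.transform(corpus)
print(dtm.toarray())
```

The real matrix above has the same layout, only with 23052 rows and 19601 columns, which is why it is stored in sparse format.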

    from sklearn.linear_model import LogisticRegression

    # Train the model
    model = LogisticRegression()
    model.fit(X_train_vectorized, y_train)

LogisticRegression(C=1.0, class_weight=None, dual=False, fit_intercept=True,

intercept_scaling=1, max_iter=100, multi_class='ovr', n_jobs=1,

penalty='l2', random_state=None, solver='liblinear', tol=0.0001,

verbose=0, warm_start=False)

    from sklearn.metrics import roc_auc_score

    # Predict the transformed test documents
    predictions = model.predict(vect.transform(X_test))

    print('AUC: ', roc_auc_score(y_test, predictions))

AUC: 0.897433277667
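The AUC above is computed from hard 0/1 predictions. AUC is usually computed from continuous scores, which in this notebook could come from `model.decision_function(...)` or `model.predict_proba(...)[:, 1]`. The sketch below uses made-up labels and scores to show that the two inputs can give different values:

```python
import numpy as np
from sklearn.metrics import roc_auc_score

# Hypothetical labels and classifier scores (e.g. from decision_function)
y_true = np.array([0, 0, 1, 1, 1, 0])
scores = np.array([-2.0, 0.3, 0.1, 1.5, 2.2, -0.5])

# Hard predictions threshold the scores at 0 and discard the ranking
hard = (scores > 0).astype(int)

auc_hard = roc_auc_score(y_true, hard)
auc_scores = roc_auc_score(y_true, scores)
print(auc_hard, auc_scores)
```

Thresholding collapses the scores into two levels, so the hard-label AUC reflects a single operating point rather than the full ranking.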

    # Get the feature names as a numpy array
    feature_names = np.array(vect.get_feature_names())

    # Sort the coefficients from the model
    sorted_coef_index = model.coef_[0].argsort()

    # Find the 10 smallest and 10 largest coefficients
    # The 10 largest coefficients are being indexed using [:-11:-1]
    # so the list returned is in order of largest to smallest
    print('Smallest Coefs:\n{}\n'.format(feature_names[sorted_coef_index[:10]]))
    print('Largest Coefs:\n{}'.format(feature_names[sorted_coef_index[:-11:-1]]))

Smallest Coefs:

['worst' 'terrible' 'slow' 'junk' 'poor' 'sucks' 'horrible' 'useless'

'waste' 'disappointed']

Largest Coefs:

['excelent' 'excelente' 'excellent' 'perfectly' 'love' 'perfect' 'exactly'

'great' 'best' 'awesome']
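The `argsort` plus `[:-11:-1]` indexing is easier to follow on a small array. A sketch with hypothetical feature names and coefficients, taking the two smallest and two largest instead of ten:

```python
import numpy as np

# Hypothetical feature names and coefficients
names = np.array(['awful', 'fine', 'great', 'bad', 'love'])
coefs = np.array([-2.1, 0.1, 1.8, -1.2, 2.5])

# Indices that order the coefficients from most negative to most positive
order = coefs.argsort()

smallest = names[order[:2]]      # two most negative coefficients
largest = names[order[:-3:-1]]   # two most positive, largest first
print(smallest, largest)
```

The slice `[:-3:-1]` walks backwards from the end and stops before the third-from-last element, just as `[:-11:-1]` returns the last ten entries in reverse.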

TF-IDF

    from sklearn.feature_extraction.text import TfidfVectorizer

    # Fit the TfidfVectorizer to the training data, specifying a minimum document frequency of 5
    vect = TfidfVectorizer(min_df=5).fit(X_train)
    len(vect.get_feature_names())

5442
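The drop from 19601 to 5442 features comes from `min_df`, which discards tokens that appear in fewer than the given number of documents. A minimal sketch on a hypothetical four-document corpus, using `min_df=2` so the effect is visible at this scale:

```python
from sklearn.feature_extraction.text import TfidfVectorizer

# Hypothetical four-document corpus
corpus = ['great phone', 'great battery', 'great price', 'bad battery']

full = TfidfVectorizer().fit(corpus)
pruned = TfidfVectorizer(min_df=2).fit(corpus)

full_vocab = sorted(full.vocabulary_)
pruned_vocab = sorted(pruned.vocabulary_)
print(full_vocab)
print(pruned_vocab)
```

Only tokens that occur in at least two documents survive, which removes rare words and many misspellings from the feature set.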

    X_train_vectorized = vect.transform(X_train)

    model = LogisticRegression()
    model.fit(X_train_vectorized, y_train)

    predictions = model.predict(vect.transform(X_test))

    print('AUC: ', roc_auc_score(y_test, predictions))
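The vectorize, fit, and predict steps repeat for each vectorizer. One possible way to bundle them, not used in the cells above, is scikit-learn's `make_pipeline`, so the same fitted vectorizer is always applied to the test documents. A sketch with hypothetical training data:

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import make_pipeline

# Hypothetical training reviews and labels (1 = positive, 0 = negative)
train_docs = ['great phone love it', 'excellent great screen',
              'terrible battery', 'awful terrible waste']
train_labels = [1, 1, 0, 0]

# The pipeline fits the vectorizer and the classifier in one call
pipe = make_pipeline(TfidfVectorizer(), LogisticRegression())
pipe.fit(train_docs, train_labels)

# Raw strings go in; the pipeline applies transform() before predict()
preds = pipe.predict(['great phone', 'terrible battery'])
print(preds)
```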