1. A topic or website I am interested in:

http://www.18ladys.com/

1) The .py file that scrapes the data and writes it to a txt file:

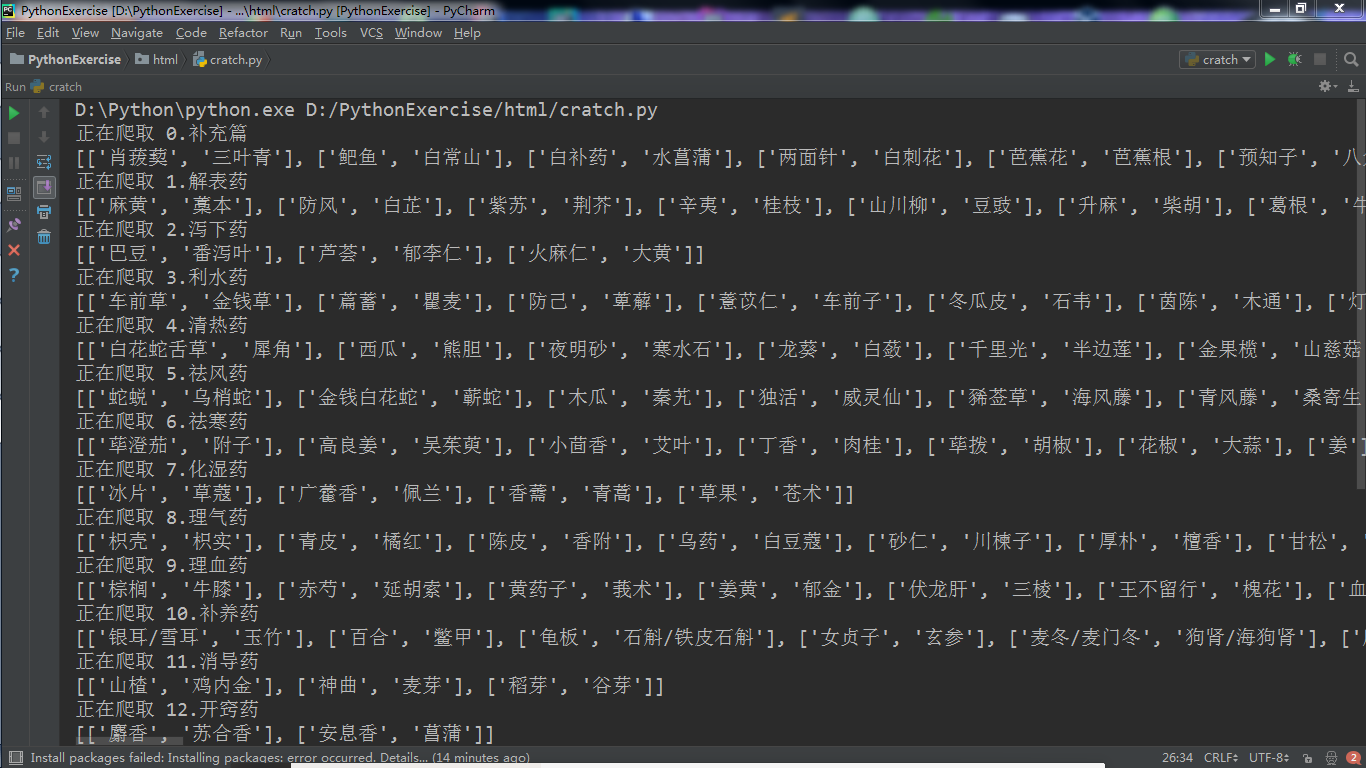

```python
import requests
from bs4 import BeautifulSoup


# Fetch a page and parse it
def catchSoup(url):
    # e.g. url = 'http://www.18ladys.com/post/buchong/'
    res = requests.get(url)
    res.encoding = 'utf-8'
    return BeautifulSoup(res.text, 'html.parser')


# Categories and their page URLs (searched on the home page)
def kindSearch(soup):
    herbKind = []
    for new in soup.select('li'):
        if new.text != '首页':  # skip the "Home" menu entry
            herbKind.append([new.text, new.select('a')[0].attrs['href']])
    return herbKind


# Herb names on one listing page
def nameSearch(soup):
    herbName = []
    for new in soup.select('h3'):
        # removesuffix (Python 3.9+) drops an exact suffix; rstrip would strip
        # any trailing characters that happen to appear in '图片' or '的功效与作用'
        pername = new.text.split('_')[0].removesuffix('图片').lstrip('\xa0')
        pername = pername.removesuffix('的功效与作用')
        herbName.append(pername)
    return herbName


# Pagination links of a category as [link text, URL] pairs
def perPage(soup):
    kindPage = []
    for new in soup.select('.post.pagebar'):
        for detail in new.select('a'):
            kindPage.append([detail.text, detail.attrs['href']])
    # Drop the first and last links, as the original code did
    return kindPage[1:-1]


# All herb names in one category; `kind` is an index into kindSearch's result
def herbDetail(kind):
    soup = catchSoup('http://www.18ladys.com/post/buchong/')  # start from the home page
    kindName, adds = kindSearch(soup)[kind]  # category name, URL of its first page
    print("Scraping " + str(kind) + '.' + kindName)
    firstPage = catchSoup(adds)
    totalRecord = [nameSearch(firstPage)]  # herbs on the first page
    for _, pageAdd in perPage(firstPage):  # herbs on page 2 onward
        totalRecord.append(nameSearch(catchSoup(pageAdd)))
    print(totalRecord)
    return totalRecord  # one list of names per page


# ===========================================================
# Main
# ===========================================================
if __name__ == "__main__":
    # Category names and their page URLs, from the home page
    totalKind = kindSearch(catchSoup('http://www.18ladys.com/post/buchong/'))

    # Table of contents ('目录') listing every category
    detailContent = '目录: '
    for idx, (name, _) in enumerate(totalKind, start=1):
        detailContent += str(idx) + '.' + name + ' '

    # Every herb name (not just the first one per page) for the first 20 categories
    for kind in range(min(20, len(totalKind))):
        pages = herbDetail(kind)
        detailContent += ' ' + totalKind[kind][0] + ': '
        names = [name for page in pages for name in page]
        for idx, name in enumerate(names, start=1):
            detailContent += str(idx) + '.' + name + ' '

    # Overwrite on each run so repeated runs do not duplicate the text
    with open('herbDetail.txt', 'w', encoding='utf-8') as f:
        f.write(detailContent)
```
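`nameSearch` cleans each heading by removing the trailing '图片' and '的功效与作用'. `str.rstrip` treats its argument as a *set of characters*, not as a suffix. With `rstrip`, a herb name that itself ends in 片 would lose that character as well. The minimal sketch below contrasts the two approaches. It assumes Python 3.9+ for `str.removesuffix`. `clean_name`, `clean_name_rstrip` and the sample heading are hypothetical.

```python
def clean_name(title: str) -> str:
    """Hypothetical helper: the nameSearch cleanup done with exact suffix removal."""
    name = title.split('_')[0]               # keep the part before the first '_'
    name = name.removesuffix('图片')          # drop the literal suffix '图片' if present
    name = name.lstrip('\xa0')               # drop leading non-breaking spaces
    return name.removesuffix('的功效与作用')   # drop the literal suffix if present


def clean_name_rstrip(title: str) -> str:
    """The same steps with rstrip, which strips any trailing chars in the given set."""
    name = title.split('_')[0].rstrip('图片').lstrip('\xa0')
    return name.rstrip('的功效与作用')


if __name__ == '__main__':
    heading = '\xa0姜片图片_示例'          # hypothetical heading text
    print(clean_name(heading))           # 姜片
    print(clean_name_rstrip(heading))    # 姜 (the trailing 片 of the name is lost)
```

For headings such as '何首乌的功效与作用_…', both versions give the same result. They only diverge when the name itself ends in one of the stripped characters.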

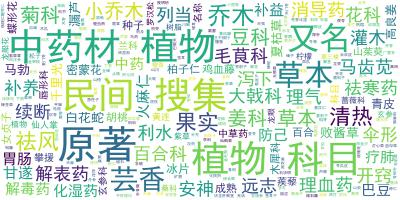

4. Only the category and name of each herb were scraped, with no more detailed data, so the word cloud mostly shows herb names.

```python
from wordcloud import WordCloud
import jieba
import matplotlib.pyplot as plt

# Read the text written by the scraper
with open(r'D:\herbDetail.txt', 'r', encoding='utf-8') as f:
    comment_text = f.read()

# Segment the Chinese text with jieba; WordCloud expects space-separated words
cut_text = " ".join(jieba.cut(comment_text))

cloud = WordCloud(
    font_path=r"C:\Windows\Fonts\simhei.ttf",  # a font with Chinese glyphs
    background_color='white',
    max_words=2000,
    max_font_size=40,
)
word_cloud = cloud.generate(cut_text)
word_cloud.to_file("cloud4herb.jpg")

# Display the word cloud
plt.imshow(word_cloud)
plt.axis('off')
plt.show()
```
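The note above says the cloud is mostly herb names. A rough sketch that pulls the `N.name` entries out of text laid out the way the scraper writes it and counts them. It shows that a name only gets more weight when it is listed more than once. The sample string and `count_entries` are hypothetical. jieba may split some names further, so this only approximates what `WordCloud` sees.

```python
import re
from collections import Counter


def count_entries(text: str) -> Counter:
    """Count the names in 'N.name' entries, ignoring the numbering itself."""
    return Counter(re.findall(r'\d+\.(\S+)', text))


if __name__ == '__main__':
    # Hypothetical sample in the '目录: 1.X 2.Y  Category: 1.A 2.B' layout
    sample = '目录: 1.补虚药 2.解表药  补虚药: 1.人参 2.黄芪  解表药: 1.麻黄 2.人参 '
    print(count_entries(sample).most_common(3))
```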

5.

1) The .py file that scrapes the data and generates the txt file

2) The .py file that generates the word cloud using the relevant Python packages

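Problems encountered and solutions

1) Installing and configuring the wordcloud package caused major trouble: this machine had two Python versions installed, which led to many extra installation problems.

Solution: with a classmate's help, I installed the package from a .whl file and removed the other Python version from the machine.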
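When two Python installations coexist, the `pip` found on the PATH may belong to a different interpreter than the one running the script. The small standard-library check below assumes the trouble was this kind of mismatch. It prints which interpreter is running and whether that interpreter can import `wordcloud`. `describe_interpreter` is a hypothetical helper. Installing with `python -m pip install` followed by the path to the .whl file ties the install to the same `python` that runs the command.

```python
import importlib.util
import sys


def describe_interpreter(package: str = "wordcloud") -> str:
    """Report the running interpreter and whether `package` is importable from it."""
    found = importlib.util.find_spec(package) is not None
    version = f"{sys.version_info.major}.{sys.version_info.minor}"
    status = "found" if found else "NOT found"
    return f"{sys.executable} (Python {version}): {package} {status}"


if __name__ == "__main__":
    print(describe_interpreter())
```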

```python
import requests
from bs4 import BeautifulSoup
import jieba

# Scrape listing pages 2-9 and append every summary to 123.txt
for pages in range(2, 10):
    nexturl = 'http://www.shicimingju.com/chaxun/zuozhe/1_%s.html' % pages
    reslist = requests.get(nexturl)
    reslist.encoding = 'utf-8'
    soup_list = BeautifulSoup(reslist.text, 'html.parser')
    with open('123.txt', 'a', encoding='utf-8') as f:
        for news in soup_list.find_all('div', class_='summary'):
            print(news.text)
            f.write(news.text)


def changeTitleToDict():
    """Segment 123.txt with jieba and return a {word: count} dictionary."""
    with open("123.txt", "r", encoding='utf-8') as f:
        text = f.read()  # renamed from `str`, which shadowed the built-in
    stringList = list(jieba.cut(text))
    # Punctuation and whitespace tokens to leave out of the word cloud
    delWord = {"+", "/", "(", ")", "【", "】", " ", ";", "!", "、"}
    stringSet = set(stringList) - delWord
    title_dict = {}
    for i in stringSet:
        title_dict[i] = stringList.count(i)
    print(title_dict)
    return title_dict
```
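`changeTitleToDict` calls `stringList.count(i)` once for every distinct word. Each call rescans the whole token list, so the work grows roughly with the square of the text length. The sketch below does the same counting in a single pass with `collections.Counter`. `count_words` and `DEL_WORDS` are hypothetical names, and the token list stands in for jieba's output. The returned dict can be passed to `WordCloud.generate_from_frequencies` in the same way.

```python
from collections import Counter
from typing import Iterable

# Tokens to skip, copied from delWord in changeTitleToDict
DEL_WORDS = {"+", "/", "(", ")", "【", "】", " ", ";", "!", "、"}


def count_words(tokens: Iterable[str], stop: set = DEL_WORDS) -> dict:
    """Count every token in one pass, leaving out the stop tokens."""
    return dict(Counter(t for t in tokens if t not in stop))


if __name__ == "__main__":
    # Stand-in for list(jieba.cut(text)); these tokens are hypothetical
    tokens = ["李白", "、", "杜甫", " ", "李白"]
    print(count_words(tokens))  # {'李白': 2, '杜甫': 1}
```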

Then generate the word cloud:


```python
import matplotlib.pyplot as plt
from wordcloud import WordCloud

# Get the word-frequency dictionary built above
title_dict = changeTitleToDict()

# A font with Chinese glyphs is needed; otherwise the words render as empty boxes
font = r'C:\Windows\Fonts\simhei.ttf'
wc = WordCloud(background_color='white', max_words=500, font_path=font)
wc.generate_from_frequencies(title_dict)

plt.imshow(wc)
plt.axis("off")
plt.show()
```