Original post: http://blog.csdn.net/pengych_321/article/details/52014249#comments (with thanks!)

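Scenario

OK, suppose the project's data survey and requirements analysis are nearly finished and the coding phase is about to start. So what needs doing before coding? Right: agreeing on the development tools, setting up and locally testing the development environment, and setting up and testing the test environment. This post records in detail how the development environment of a real Spark project was set up.

Analysis

Development tools

Operating system: Windows 10

JDK version: jdk1.8.0_91

Scala version: 2.10.6

Maven version: apache-maven-3.3.9

IDE: IntelliJ IDEA 2016.1.3

Main development language: Scala

Setting up and testing the development environment

I. Setup walkthrough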

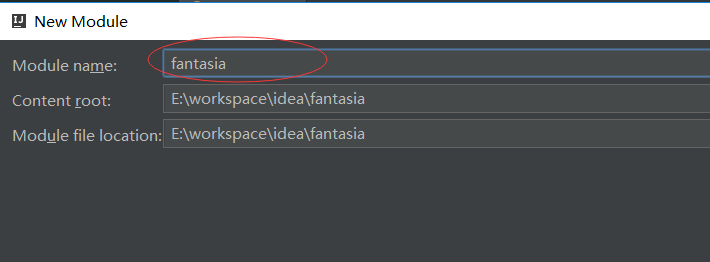

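1. Create a new Maven project

A Maven project named fantasia is used as the example here.

On each wizard page, fill in the settings and click Next.

After you click Finish, IDEA loads Maven, JUnit and the related plugins. This may take around 30 minutes, depending on network speed.

2. Customize Maven's repository directory

IDEA ships with a built-in Maven plugin whose default repository directory is C:\Users\${username}\.m2\repository. Here we point the project at a new repository so that the dependency jars are easier to manage:

3. Configure the dependencies in pom.xml

All the jars the project is likely to need are imported here in one go. The full file is as follows: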

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/maven-v4_0_0.xsd">
  <modelVersion>4.0.0</modelVersion>
  <groupId>com.pl.bdeu.bigdata</groupId>
  <artifactId>fantasia</artifactId>
  <version>1.0-SNAPSHOT</version>
  <inceptionYear>2008</inceptionYear>
  <properties>
    <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
    <scala.version>2.10.6</scala.version>
    <spark.version>1.6.2</spark.version>
    <hadoop.version>2.6.0</hadoop.version>
  </properties>
  <repositories>
    <repository>
      <id>scala-tools.org</id>
      <name>Scala-Tools Maven2 Repository</name>
      <url>http://scala-tools.org/repo-releases</url>
    </repository>
  </repositories>
  <pluginRepositories>
    <pluginRepository>
      <id>scala-tools.org</id>
      <name>Scala-Tools Maven2 Repository</name>
      <url>http://scala-tools.org/repo-releases</url>
    </pluginRepository>
  </pluginRepositories>
  <dependencies>
    <dependency>
      <groupId>org.scala-lang</groupId>
      <artifactId>scala-library</artifactId>
      <version>${scala.version}</version>
    </dependency>
    <dependency>
      <groupId>junit</groupId>
      <artifactId>junit</artifactId>
      <version>3.8.1</version>
      <scope>test</scope>
    </dependency>
    <dependency>
      <groupId>org.specs</groupId>
      <artifactId>specs</artifactId>
      <version>1.2.5</version>
      <scope>test</scope>
    </dependency>
    <dependency>
      <groupId>org.apache.spark</groupId>
      <artifactId>spark-core_2.10</artifactId>
      <version>${spark.version}</version>
    </dependency>
    <dependency>
      <groupId>org.apache.spark</groupId>
      <artifactId>spark-sql_2.10</artifactId>
      <version>${spark.version}</version>
    </dependency>
    <dependency>
      <groupId>org.apache.spark</groupId>
      <artifactId>spark-hive_2.10</artifactId>
      <version>${spark.version}</version>
    </dependency>
    <dependency>
      <groupId>org.apache.spark</groupId>
      <artifactId>spark-streaming_2.10</artifactId>
      <version>${spark.version}</version>
    </dependency>
    <dependency>
      <groupId>org.apache.spark</groupId>
      <artifactId>spark-mllib_2.10</artifactId>
      <version>${spark.version}</version>
    </dependency>
    <dependency>
      <groupId>org.apache.hadoop</groupId>
      <artifactId>hadoop-client</artifactId>
      <version>${hadoop.version}</version>
    </dependency>
    <dependency>
      <groupId>org.apache.spark</groupId>
      <artifactId>spark-streaming-kafka_2.10</artifactId>
      <version>${spark.version}</version>
    </dependency>
    <dependency>
      <groupId>mysql</groupId>
      <artifactId>mysql-connector-java</artifactId>
      <version>5.1.6</version>
    </dependency>
    <dependency>
      <groupId>org.json</groupId>
      <artifactId>json</artifactId>
      <version>20090211</version>
    </dependency>
    <dependency>
      <groupId>com.fasterxml.jackson.core</groupId>
      <artifactId>jackson-core</artifactId>
      <version>2.4.3</version>
    </dependency>
    <dependency>
      <groupId>com.fasterxml.jackson.core</groupId>
      <artifactId>jackson-databind</artifactId>
      <version>2.4.3</version>
    </dependency>
    <dependency>
      <groupId>com.fasterxml.jackson.core</groupId>
      <artifactId>jackson-annotations</artifactId>
      <version>2.4.3</version>
    </dependency>
    <dependency>
      <groupId>com.alibaba</groupId>
      <artifactId>fastjson</artifactId>
      <version>1.1.41</version>
    </dependency>
    <dependency>
      <groupId>fastutil</groupId>
      <artifactId>fastutil</artifactId>
      <version>5.0.9</version>
    </dependency>
  </dependencies>
  <build>
    <sourceDirectory>src/main/scala</sourceDirectory>
    <testSourceDirectory>src/test/scala</testSourceDirectory>
    <plugins>
      <plugin>
        <groupId>org.scala-tools</groupId>
        <artifactId>maven-scala-plugin</artifactId>
        <executions>
          <execution>
            <goals>
              <goal>compile</goal>
              <goal>testCompile</goal>
            </goals>
          </execution>
        </executions>
        <configuration>
          <scalaVersion>${scala.version}</scalaVersion>
          <args>
            <arg>-target:jvm-1.5</arg>
          </args>
        </configuration>
      </plugin>
      <plugin>
        <groupId>org.apache.maven.plugins</groupId>
        <artifactId>maven-eclipse-plugin</artifactId>
        <configuration>
          <downloadSources>true</downloadSources>
          <buildcommands>
            <buildcommand>ch.epfl.lamp.sdt.core.scalabuilder</buildcommand>
          </buildcommands>
          <additionalProjectnatures>
            <projectnature>ch.epfl.lamp.sdt.core.scalanature</projectnature>
          </additionalProjectnatures>
          <classpathContainers>
            <classpathContainer>org.eclipse.jdt.launching.JRE_CONTAINER</classpathContainer>
            <classpathContainer>ch.epfl.lamp.sdt.launching.SCALA_CONTAINER</classpathContainer>
          </classpathContainers>
        </configuration>
      </plugin>
    </plugins>
  </build>
  <reporting>
    <plugins>
      <plugin>
        <groupId>org.scala-tools</groupId>
        <artifactId>maven-scala-plugin</artifactId>
        <configuration>
          <scalaVersion>${scala.version}</scalaVersion>
        </configuration>
      </plugin>
    </plugins>
  </reporting>
</project>
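
One detail of the pom.xml above that is easy to get wrong: the `_2.10` suffix on each Spark artifactId has to agree with the Scala binary version implied by scala.version (2.10.6). The sketch below only illustrates that rule for Scala 2.x versions; `ArtifactCheck`, `binaryVersion` and `matchesScala` are hypothetical names, not part of the original project or of Maven.

```scala
// Hypothetical helper, for illustration only: checks that a Scala-suffixed
// artifactId (e.g. spark-core_2.10) agrees with the full Scala version.
object ArtifactCheck {
  // "2.10.6" -> "2.10": for Scala 2.x the binary version is the first two components
  def binaryVersion(scalaVersion: String): String =
    scalaVersion.split('.').take(2).mkString(".")

  // True when the artifactId ends with "_<binary version>"
  def matchesScala(artifactId: String, scalaVersion: String): Boolean =
    artifactId.endsWith("_" + binaryVersion(scalaVersion))
}
```

For example, `ArtifactCheck.matchesScala("spark-core_2.10", "2.10.6")` holds, while an artifact ending in `_2.11` would not match this project's scala.version.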

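4. Basic project structure

Create two sub-packages: collector and core

collector: holds the Spark jobs for data collection

core: holds the Spark jobs for the core business logic

The resource directory holds the configuration files: database connection details, Kafka environment details, and so on.

Anything else will be defined later, according to what each module needs.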
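
As a rough idea of how a Spark job could read such a configuration file, here is a minimal sketch. It assumes the file uses java.util.Properties syntax and ends up on the classpath. `ConfigLoader`, the file name and the keys used in the usage note are all hypothetical, since the original post does not show its configuration files.

```scala
import java.io.{InputStreamReader, Reader}
import java.util.Properties
import scala.collection.JavaConverters._

// Hypothetical helper, for illustration only; not part of the original project.
object ConfigLoader {
  // Parse java.util.Properties syntax from any Reader into an immutable Map
  def load(reader: Reader): Map[String, String] = {
    val props = new Properties()
    try props.load(reader) finally reader.close()
    props.stringPropertyNames().asScala.map(k => k -> props.getProperty(k)).toMap
  }

  // Look the file up on the classpath (assumes the resource directory is packaged there)
  def fromClasspath(name: String): Map[String, String] = {
    val in = getClass.getClassLoader.getResourceAsStream(name)
    require(in != null, s"$name not found on the classpath")
    load(new InputStreamReader(in, "UTF-8"))
  }
}
```

A job in collector or core could then call something like `ConfigLoader.fromClasspath("fantasia.properties")` and look up a key such as `kafka.brokers`. Both names are placeholders.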

5. Local environment test

Write a FrameworkExeTest class to check that the framework works:

package com.pl.bdeu.bigdata

import org.apache.commons.logging.LogFactory
import org.apache.spark.{SparkConf, SparkContext}

/**
 * author pengych@pl.com
 * date 2016/7/24
 * function: framework availability test
 *
 * Output:
 * (hello,2)
 * (pl,1)
 * (fantasia,1)
 */
object FrameworkExeTest {
  def main(args: Array[String]): Unit = {
    val log = LogFactory.getLog("FrameworkExeTest")
    // Run locally, with as many worker threads as there are cores
    val conf = new SparkConf().setMaster("local[*]").setAppName("fantasia framework test")
    val sc = new SparkContext(conf)
    if (log.isDebugEnabled) {
      log.debug("SparkContext initialized")
    }
    // Input file; the backslash has to be escaped inside a Scala string literal
    val linesRDD = sc.textFile("E:\\wordcount.txt")
    // Split each line into words, pair every word with 1, then sum the counts per word
    linesRDD.flatMap(line => line.split(" ")).map(word => (word, 1)).reduceByKey(_ + _)
      .collect.foreach(println)
    sc.stop()
  }
}
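
To sanity-check the expected counts without starting Spark, the same word-count logic can be sketched with plain Scala collections. This is only an illustration: `LocalWordCount` is a hypothetical name, and the sample lines are an assumption chosen to be consistent with the output listed in the doc comment, since the post does not show the actual contents of E:\wordcount.txt.

```scala
// Plain-Scala sketch of the same word count, for illustration only
object LocalWordCount {
  // Same steps as the Spark job: split on spaces, then count per word
  def count(lines: Seq[String]): Map[String, Int] =
    lines.flatMap(_.split(" "))
      .groupBy(identity)
      .map { case (word, occurrences) => (word, occurrences.size) }

  def main(args: Array[String]): Unit = {
    // Hypothetical sample content standing in for the input file
    val sample = Seq("hello pl", "hello fantasia")
    count(sample).foreach(println)
  }
}
```

Here `groupBy` followed by a size count plays the role that `reduceByKey(_ + _)` plays in the Spark version. The order in which the pairs are printed is not guaranteed in either version.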

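Summary

- Patience matters, because the network may well be slow.

- Do not manually kill the loading process while IDEA is still loading the dependencies. Doing so can easily lead to all kinds of "package not found" errors.

- Customize the repository path in the <localRepository> tag of settings.xml under the Maven installation directory: ~\apache-maven-3.3.9\conf\settings.xml

This post sets the repository path to: E:\apache-maven-3.3.9\repository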

<settings xmlns="http://maven.apache.org/SETTINGS/1.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/SETTINGS/1.0.0 http://maven.apache.org/xsd/settings-1.0.0.xsd">

<localRepository>E:\apache-maven-3.3.9\repository</localRepository>