Without further ado, let's get straight to the practical part!

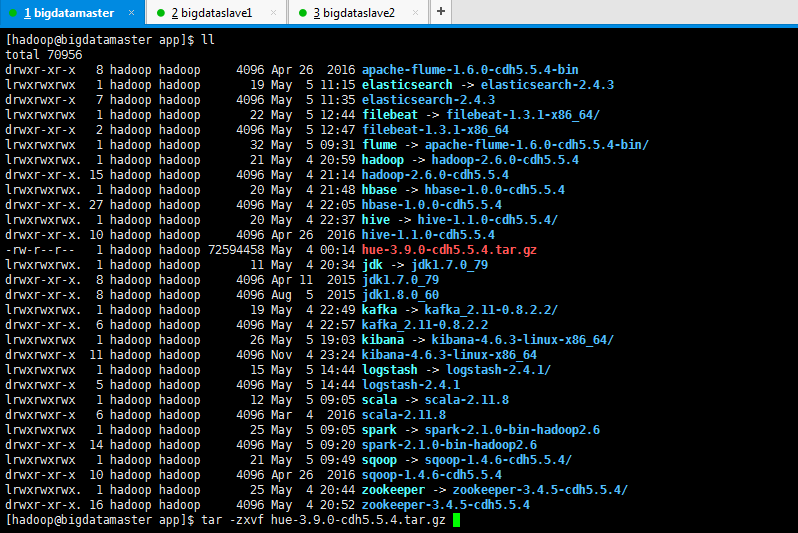

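My cluster consists of bigdatamaster (192.168.80.10), bigdataslave1 (192.168.80.11) and bigdataslave2 (192.168.80.12).

The installation directory is /home/hadoop/app.

The official recommendation is to install Hue on the master node, and I followed it: Hue is installed on bigdatamaster.

Hue version: hue-3.9.0-cdh5.5.4

It has to be compiled before it can be used (this requires Internet access).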

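A word of advice: if your machines are powerful enough, you should definitely install Cloudera Manager. After all, Hue and CDH come from the same vendor.

I have also experienced first-hand that some component versions cause problems that can take the better part of a day to troubleshoot, so be mentally prepared. I am currently a graduate student and my laptop tops out at 8 GB of RAM, so I can only practise with a manual installation.

This post is written purely for fellow students who, like me, lack high-end hardware and have limited resources! I have, however, already set up both Cloudera Manager and Ambari on our lab cluster.

The two most mainstream cluster management tools in the big data field: Ambari and Cloudera Manager

Installing and deploying a big data cluster with Cloudera (five major steps, with screenshots) (highly recommended)

Installing and deploying a big data cluster with Ambari (five major steps, with screenshots) (highly recommended)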

问题一:

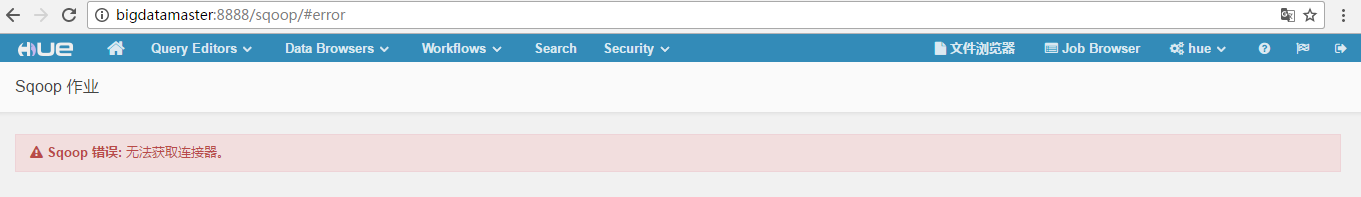

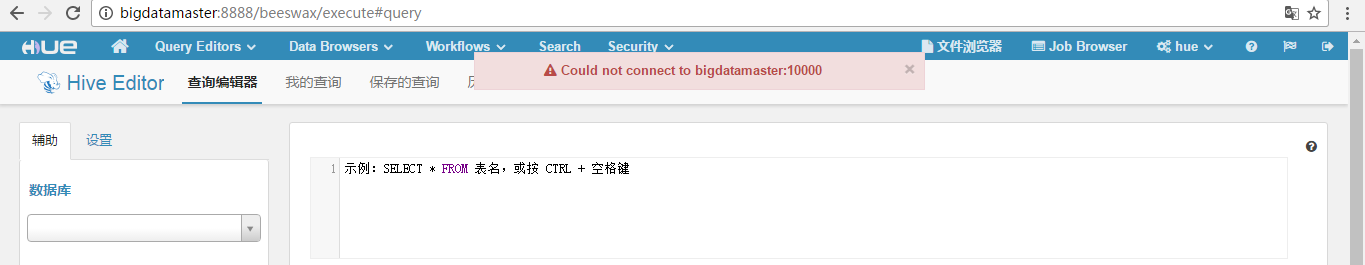

1、HUE中Hive 查询有问题,页面报错:Could not connect to localhost:10000 或者 Could not connect to bigdatamaster:10000

解决方法:

在安装的HIVE中启动hiveserver2 &,因为端口号10000是hiveserver2服务的端口号,否则,Hue Web 控制无法执行HIVE 查询。

bigdatamaster是我机器名。

在$HIVE_HOME下

bin/hive -–service hiveserver2 &

[hadoop@bigdatamaster ~]$ cd $HIVE_HOME

[hadoop@bigdatamaster hive]$ bin/hive --service hiveserver2 &
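The check-then-start step above can be wrapped in a small Bash helper. This is only a sketch: it assumes `HIVE_HOME` is set (for example to the Hive directory under /home/hadoop/app), that HiveServer2 listens on the default port 10000 seen in the error message, and it probes the port with Bash's `/dev/tcp` pseudo-device plus coreutils `timeout`. The log path `/tmp/hiveserver2.out` is an arbitrary choice of mine.

```shell
#!/usr/bin/env bash
# Sketch: start HiveServer2 only if nothing is listening on its port yet.
# Assumptions: HIVE_HOME is set and HiveServer2 uses the default port 10000.

# Return 0 if a TCP connection to host:port succeeds within 2 seconds
# (uses bash's /dev/tcp pseudo-device and coreutils timeout).
port_open() {
  local host="$1" port="$2"
  timeout 2 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null
}

# Start HiveServer2 in the background unless the port is already open.
ensure_hiveserver2() {
  local host="${1:-bigdatamaster}" port="${2:-10000}"
  if port_open "$host" "$port"; then
    echo "HiveServer2 already listening on ${host}:${port}"
    return 0
  fi
  # Refuse to continue without HIVE_HOME instead of running a bogus path.
  if [ -z "${HIVE_HOME:-}" ]; then
    echo "HIVE_HOME is not set" >&2
    return 1
  fi
  echo "Starting HiveServer2, output goes to /tmp/hiveserver2.out"
  nohup "${HIVE_HOME}/bin/hive" --service hiveserver2 > /tmp/hiveserver2.out 2>&1 &
}
```

For example, `ensure_hiveserver2 bigdatamaster 10000`. HiveServer2 can take a while after startup before the port opens, so wait a little before running the helper a second time, otherwise it may start a second instance.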

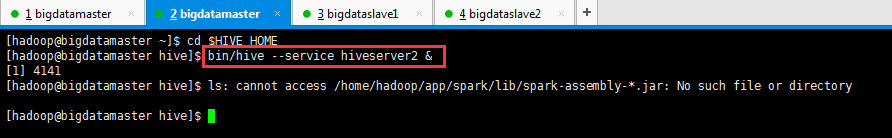

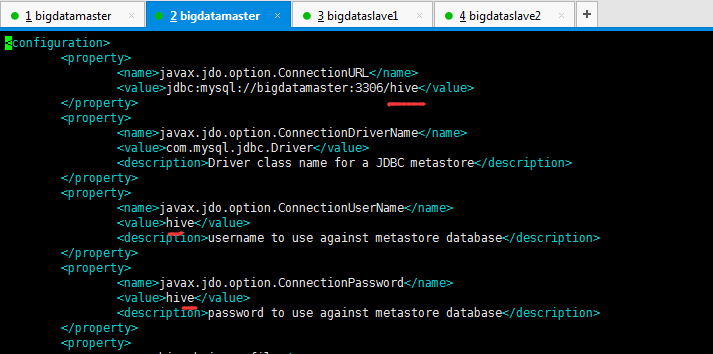

Note: this is the relevant configuration in my hive-site.xml.

Problem solved.

Problem 2:

database is locked

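This is most likely an error in Hue's default SQLite database; you can replace it with MySQL, PostgreSQL or similar.

https://www.cloudera.com/documentation/enterprise/5-5-x/topics/admin_hue_ext_db.html (official documentation)

See also this blog post, "A roundup of problems encountered when installing, configuring and using Hue": https://my.oschina.net/aibati2008/blog/647493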

    # Configuration options for specifying the Desktop Database. For more info,
    # see http://docs.djangoproject.com/en/1.4/ref/settings/#database-engine
    # ------------------------------------------------------------------------
    [[database]]
    # Database engine is typically one of:
    # postgresql_psycopg2, mysql, sqlite3 or oracle.
    #
    # Note that for sqlite3, 'name', below is a path to the filename. For other backends, it is the database name.
    # Note for Oracle, options={"threaded":true} must be set in order to avoid crashes.
    # Note for Oracle, you can use the Oracle Service Name by setting "port=0" and then "name=<host>:<port>/<service_name>".
    # Note for MariaDB use the 'mysql' engine.
    ## engine=sqlite3
    ## host=
    ## port=
    ## user=
    ## password=
    ## name=desktop/desktop.db
    ## options={}

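The above is the default.

By default Hue uses SQLite as its metadata database, which is not recommended for production: it frequently runs into the "database is locked" problem.

Solution:

The official documentation also describes a fix, but the procedure seems to have some problems and does not suit versions after 3.7. I am now using 3.11; below is the quickest switch-over method I have put together.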

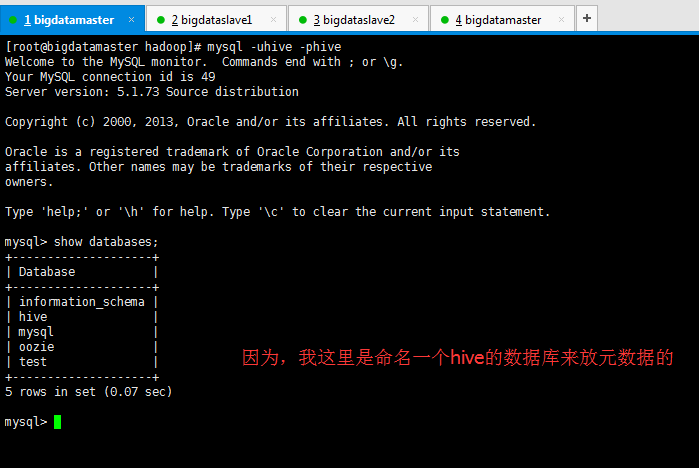

    [root@bigdatamaster hadoop]# mysql -uhive -phive
    Welcome to the MySQL monitor.  Commands end with ; or \g.
    Your MySQL connection id is 49
    Server version: 5.1.73 Source distribution

    Copyright (c) 2000, 2013, Oracle and/or its affiliates. All rights reserved.

    Oracle is a registered trademark of Oracle Corporation and/or its affiliates. Other names may be trademarks of their respective owners.

    Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

    mysql> show databases;
    +--------------------+
    | Database           |
    +--------------------+
    | information_schema |
    | hive               |
    | mysql              |
    | oozie              |
    | test               |
    +--------------------+
    5 rows in set (0.07 sec)

    mysql>

In my case the user is hive, the password is hive and the database is also hive, so the configuration becomes:

    # Configuration options for specifying the Desktop Database. For more info,
    # see http://docs.djangoproject.com/en/1.4/ref/settings/#database-engine
    # ------------------------------------------------------------------------
    [[database]]
    # Database engine is typically one of:
    # postgresql_psycopg2, mysql, sqlite3 or oracle.
    #
    # Note that for sqlite3, 'name', below is a path to the filename. For other backends, it is the database name.
    # Note for Oracle, options={"threaded":true} must be set in order to avoid crashes.
    # Note for Oracle, you can use the Oracle Service Name by setting "port=0" and then "name=<host>:<port>/<service_name>".
    # Note for MariaDB use the 'mysql' engine.
    engine=mysql
    host=bigdatamaster
    port=3306
    user=hive
    password=hive
    name=hive
    ## options={}

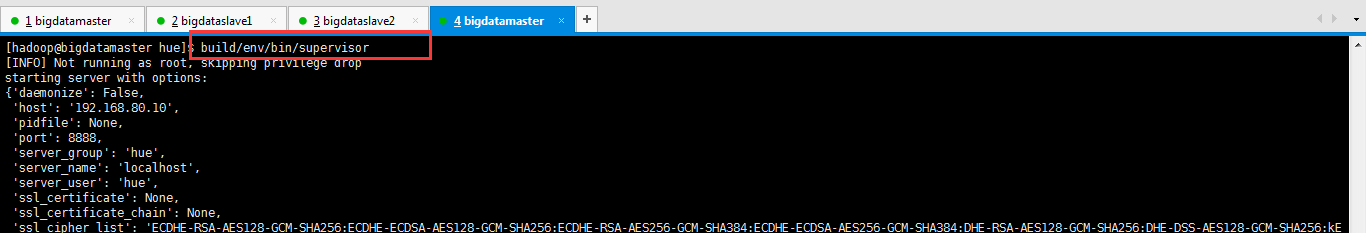

Then restart the Hue process:

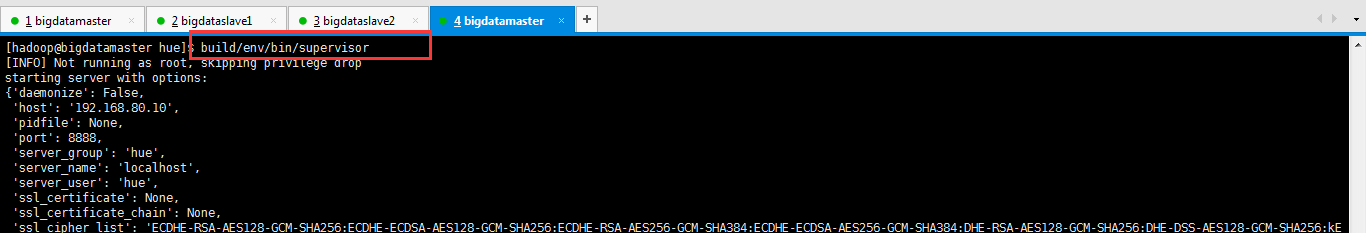

[hadoop@bigdatamaster hue]$ build/env/bin/supervisor

After completing this configuration, start Hue and open it in the browser: an error occurs because the MySQL database has not been initialized:

DatabaseError: (1146, "Table 'hue.desktop_settings' doesn't exist")

or

ProgrammingError: (1146, "Table 'hive.django_session' doesn't exist")

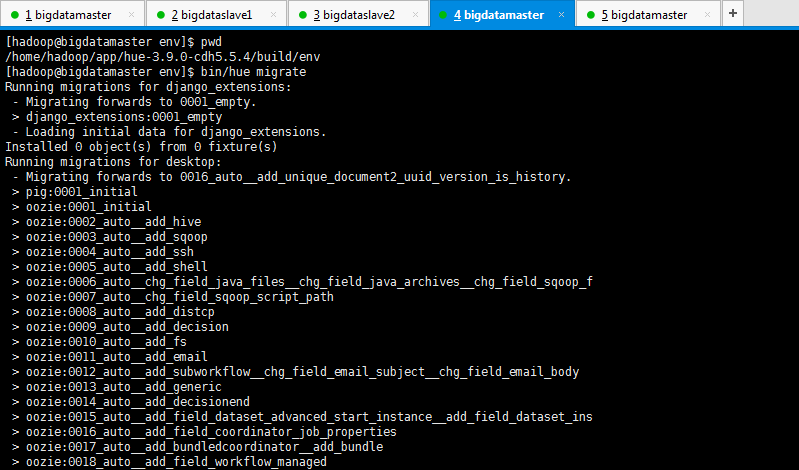

初始化数据库

/home/hadoop/app/hue-3.9.0-cdh5.5.4/build/env

bin/hue syncdb

bin/hue migrate执行完以后,可以在mysql中看到,hue相应的表已经生成。

启动hue, 能够正常访问了。

或者

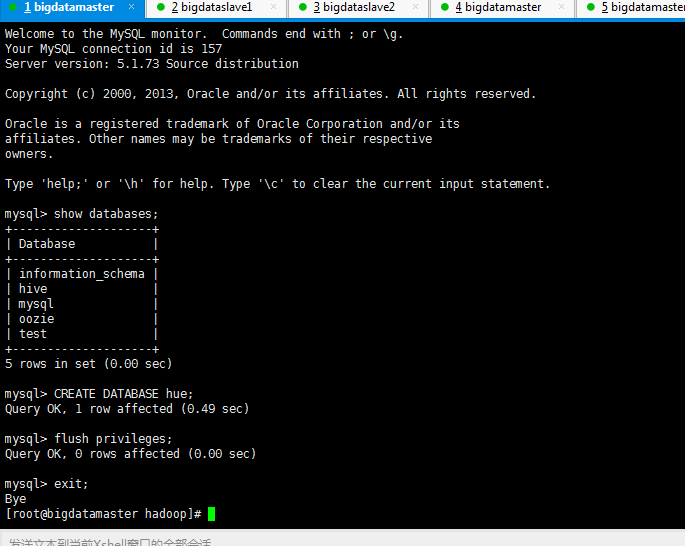

当然,大家这里,可以先在mysql里面创建数据库。命名为hue,并且是以hadoop用户和hadoop密码。

首先,

    [root@master app]# mysql -uroot -prootroot
    mysql> create user 'hue' identified by 'hue';   -- create an account: user name hue, password hue

or

    mysql> create user 'hue'@'%' identified by 'hue';   -- the same account; an omitted host part defaults to '%'

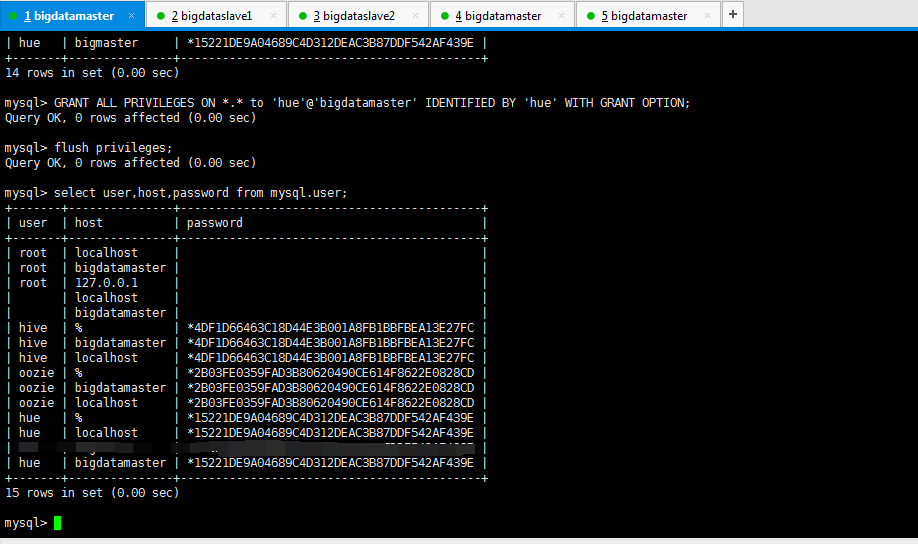

    mysql> GRANT ALL PRIVILEGES ON *.* to 'hue'@'%' IDENTIFIED BY 'hue' WITH GRANT OPTION;   -- grant to user hue connecting from any host (%)

    mysql> GRANT ALL PRIVILEGES ON *.* to 'hue'@'bigdatamaster' IDENTIFIED BY 'hue' WITH GRANT OPTION;   -- grant to user hue connecting from bigdatamaster

    mysql> GRANT ALL PRIVILEGES ON *.* to 'hue'@'localhost' IDENTIFIED BY 'hue' WITH GRANT OPTION;   -- grant to user hue connecting from localhost (this one is actually optional)

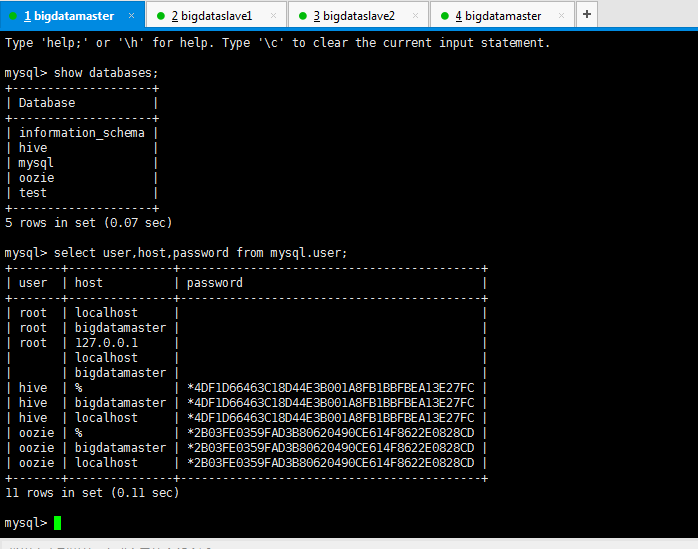

    mysql> GRANT ALL PRIVILEGES ON *.* to 'hue'@'bigdatamaster' IDENTIFIED BY 'hue' WITH GRANT OPTION;
    Query OK, 0 rows affected (0.00 sec)

    mysql> flush privileges;
    Query OK, 0 rows affected (0.00 sec)

    mysql> select user,host,password from mysql.user;
    +-------+---------------+-------------------------------------------+
    | user  | host          | password                                  |
    +-------+---------------+-------------------------------------------+
    | root  | localhost     |                                           |
    | root  | bigdatamaster |                                           |
    | root  | 127.0.0.1     |                                           |
    |       | localhost     |                                           |
    |       | bigdatamaster |                                           |
    | hive  | %             | *4DF1D66463C18D44E3B001A8FB1BBFBEA13E27FC |
    | hive  | bigdatamaster | *4DF1D66463C18D44E3B001A8FB1BBFBEA13E27FC |
    | hive  | localhost     | *4DF1D66463C18D44E3B001A8FB1BBFBEA13E27FC |
    | oozie | %             | *2B03FE0359FAD3B80620490CE614F8622E0828CD |
    | oozie | bigdatamaster | *2B03FE0359FAD3B80620490CE614F8622E0828CD |
    | oozie | localhost     | *2B03FE0359FAD3B80620490CE614F8622E0828CD |
    | hue   | %             | *15221DE9A04689C4D312DEAC3B87DDF542AF439E |
    | hue   | localhost     | *15221DE9A04689C4D312DEAC3B87DDF542AF439E |
    | hue   | bigdatamaster | *15221DE9A04689C4D312DEAC3B87DDF542AF439E |
    +-------+---------------+-------------------------------------------+
    15 rows in set (0.00 sec)

    mysql> exit;
    Bye
    [root@bigdatamaster hadoop]#

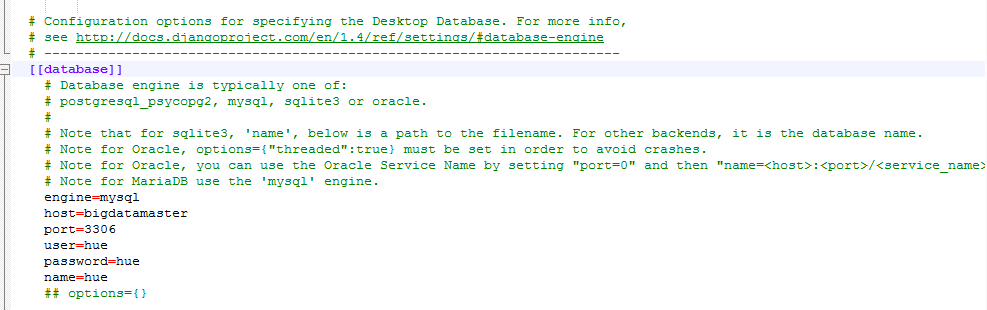

    # Configuration options for specifying the Desktop Database. For more info,
    # see http://docs.djangoproject.com/en/1.4/ref/settings/#database-engine
    # ------------------------------------------------------------------------
    [[database]]
    # Database engine is typically one of:
    # postgresql_psycopg2, mysql, sqlite3 or oracle.
    #
    # Note that for sqlite3, 'name', below is a path to the filename. For other backends, it is the database name.
    # Note for Oracle, options={"threaded":true} must be set in order to avoid crashes.
    # Note for Oracle, you can use the Oracle Service Name by setting "port=0" and then "name=<host>:<port>/<service_name>".
    # Note for MariaDB use the 'mysql' engine.
    engine=mysql
    host=bigdatamaster
    port=3306
    user=hue
    password=hue
    name=hue
    ## options={}

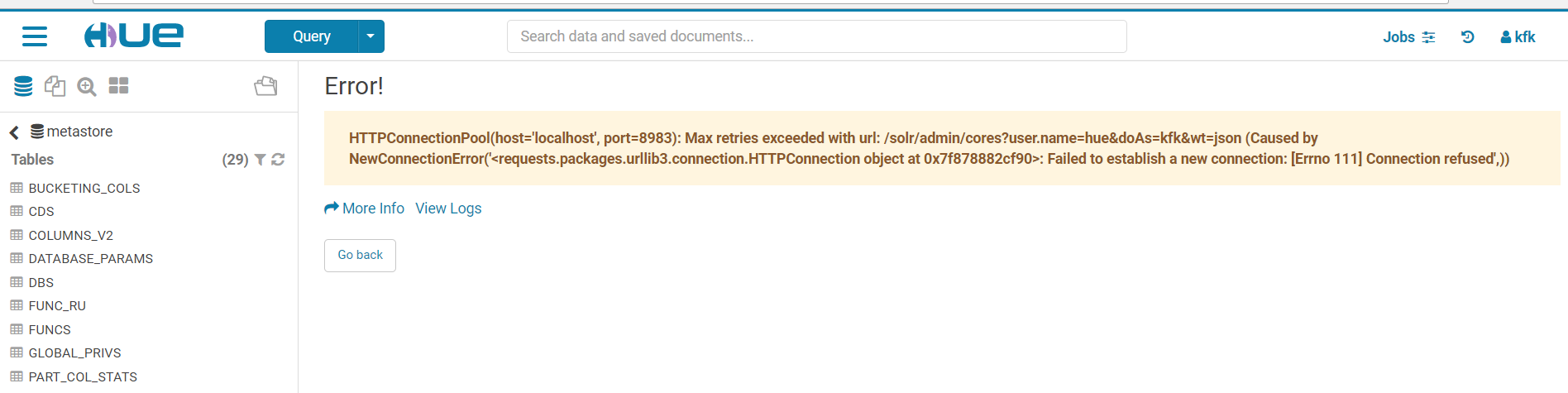

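After this configuration, starting Hue and opening it in the browser produces an error, for example the one below.

If you run into it, don't forget that you also need to create a database named hue.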

OperationalError: (1049, "Unknown database 'hue'")

    mysql> show databases;
    +--------------------+
    | Database           |
    +--------------------+
    | information_schema |
    | hive               |
    | mysql              |
    | oozie              |
    | test               |
    +--------------------+
    5 rows in set (0.00 sec)

    mysql> CREATE DATABASE hue;
    Query OK, 1 row affected (0.49 sec)

    mysql> flush privileges;
    Query OK, 0 rows affected (0.00 sec)

    mysql> exit;
    Bye
    [root@bigdatamaster hadoop]#
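The account and database steps above can be collected into one SQL script. This Bash sketch only prints the statements, so you can review them before piping them into `mysql`. The defaults (database, user and password all `hue`, host `%`) mirror this post; unlike the `GRANT ... ON *.*` used above, it deliberately grants privileges on the Hue database only, which is my own narrowing, not something Hue requires. Note that `CREATE USER` fails if the account already exists.

```shell
#!/usr/bin/env bash
# Sketch: print the SQL that prepares a dedicated MySQL database and account for Hue.
# Defaults mirror this post: database/user/password "hue", any host "%".
hue_mysql_sql() {
  local db="${1:-hue}" user="${2:-hue}" pass="${3:-hue}" host="${4:-%}"
  cat <<SQL
CREATE DATABASE IF NOT EXISTS \`${db}\` DEFAULT CHARACTER SET utf8;
CREATE USER '${user}'@'${host}' IDENTIFIED BY '${pass}';
GRANT ALL PRIVILEGES ON \`${db}\`.* TO '${user}'@'${host}';
FLUSH PRIVILEGES;
SQL
}

# Review the output first, then apply it as the MySQL root user, e.g.:
#   hue_mysql_sql hue hue hue '%' | mysql -uroot -p
hue_mysql_sql
```

If, as in the user table above, anonymous accounts exist for localhost and bigdatamaster, MySQL may match those before `'hue'@'%'` when Hue connects from bigdatamaster, which would explain why the post also creates `'hue'@'bigdatamaster'`; in that case run the function again with that host.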

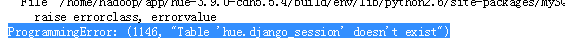

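After starting Hue, you may see an error like the one below, because the MySQL database has not been initialized.

ProgrammingError: (1146, "Table 'hue.django_session' doesn't exist")

In that case, initialize the database: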

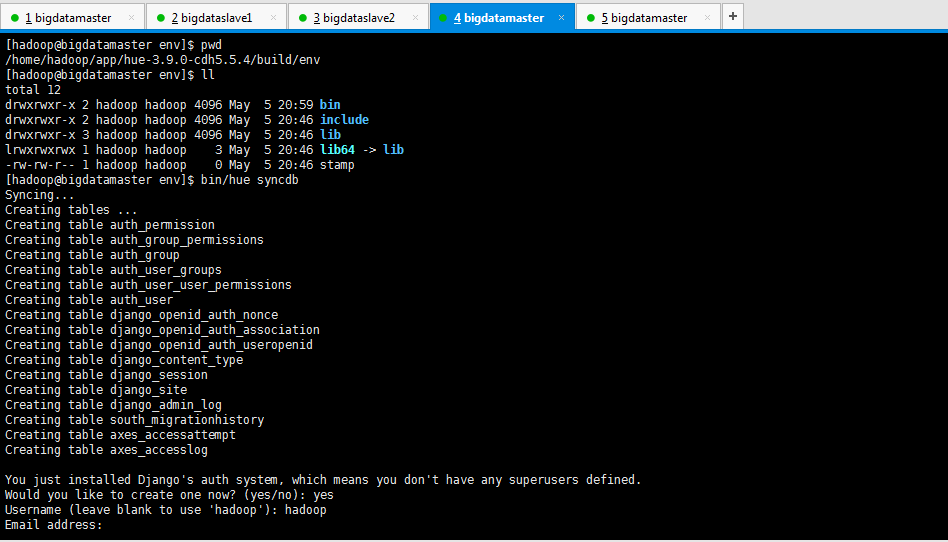

cd /home/hadoop/app/hue-3.9.0-cdh5.5.4/build/env

bin/hue syncdb

bin/hue migrate

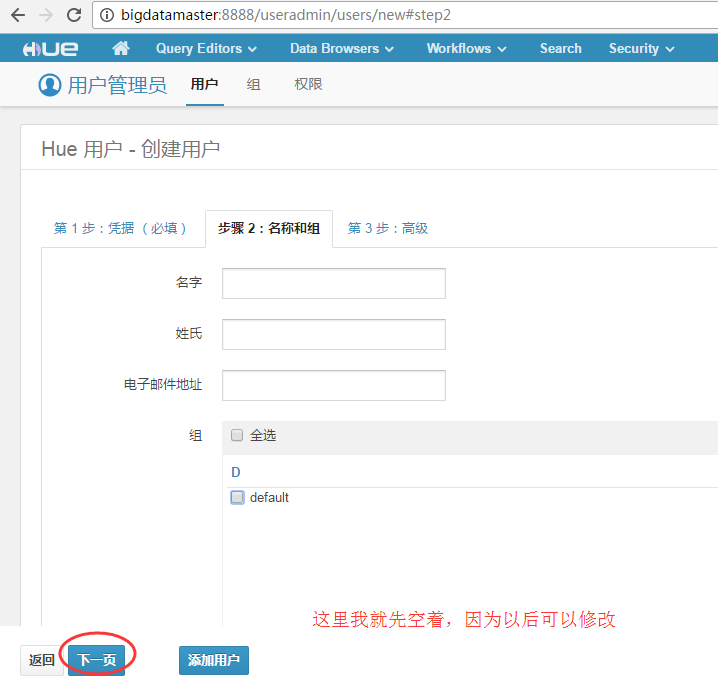

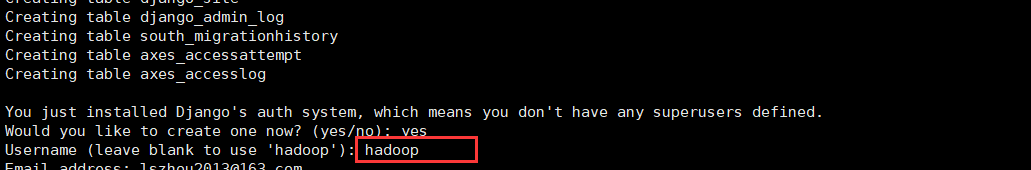

具体如下(这里一定要注意啦!!!先看完,再动手)

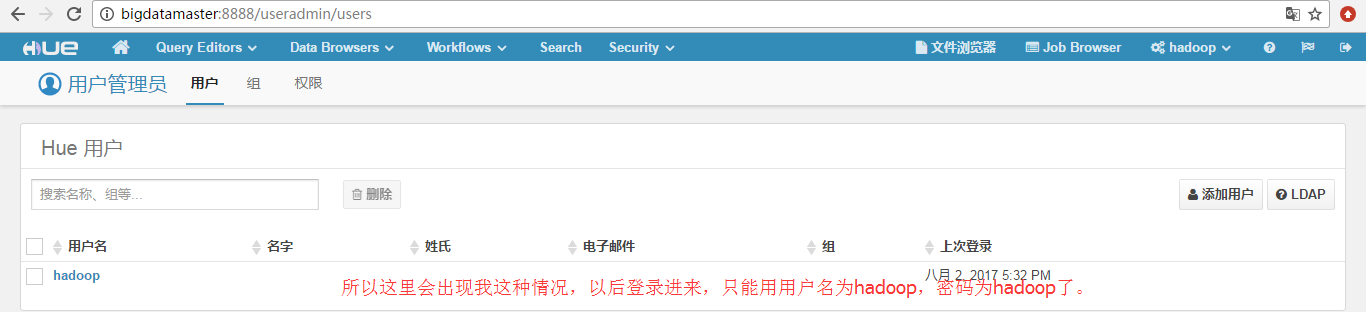

这里,大家一定要注意啊,如果这里你输入的是默认提示hadoop,则在登录的时候就是为hadoop啦。

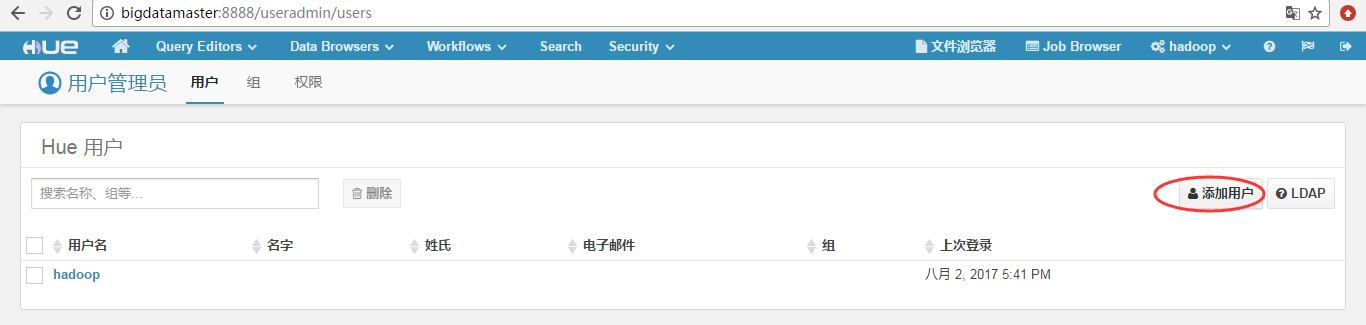

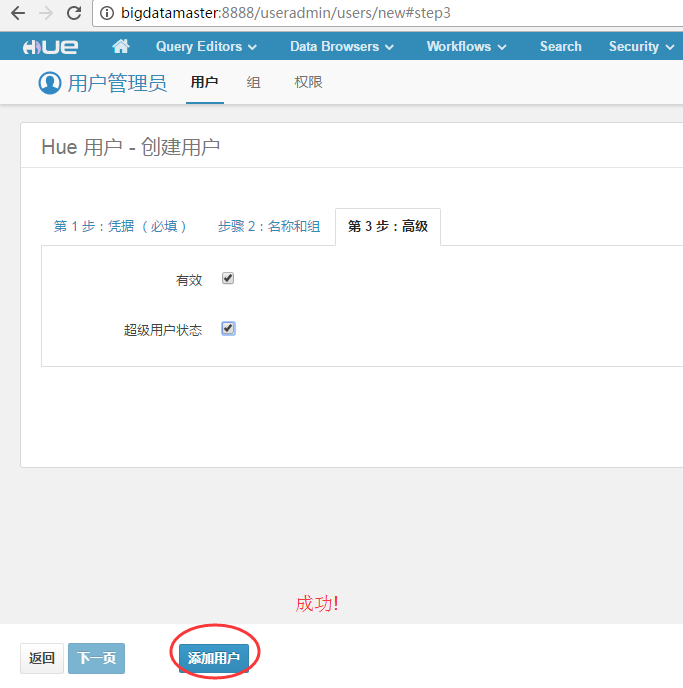

当然若这里,大家弄错了的话,还可以如我下面这样进行来弥补。

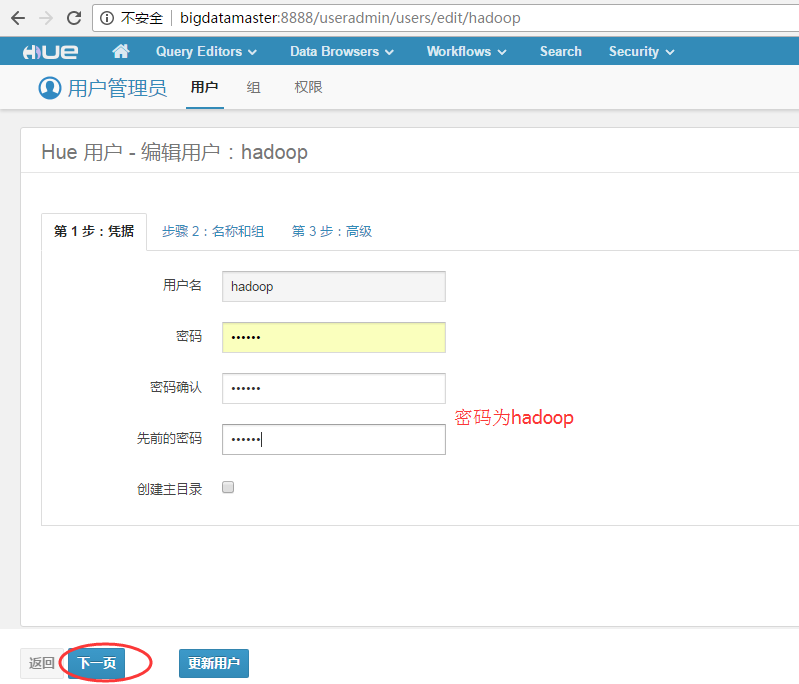

第一步:

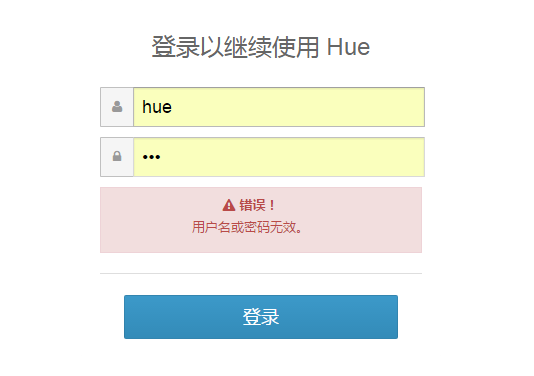

所以,这样下来,不太好。

所以我这里,为了避免这个情况发生,直接输入用户名为hue,密码也是为hue。

    [hadoop@bigdatamaster env]$ pwd
    /home/hadoop/app/hue-3.9.0-cdh5.5.4/build/env
    [hadoop@bigdatamaster env]$ ll
    total 12
    drwxrwxr-x 2 hadoop hadoop 4096 May 5 20:59 bin
    drwxrwxr-x 2 hadoop hadoop 4096 May 5 20:46 include
    drwxrwxr-x 3 hadoop hadoop 4096 May 5 20:46 lib
    lrwxrwxrwx 1 hadoop hadoop 3 May 5 20:46 lib64 -> lib
    -rw-rw-r-- 1 hadoop hadoop 0 May 5 20:46 stamp
    [hadoop@bigdatamaster env]$ bin/hue syncdb
    Syncing...
    Creating tables ...
    Creating table auth_permission
    Creating table auth_group_permissions
    Creating table auth_group
    Creating table auth_user_groups
    Creating table auth_user_user_permissions
    Creating table auth_user
    Creating table django_openid_auth_nonce
    Creating table django_openid_auth_association
    Creating table django_openid_auth_useropenid
    Creating table django_content_type
    Creating table django_session
    Creating table django_site
    Creating table django_admin_log
    Creating table south_migrationhistory
    Creating table axes_accessattempt
    Creating table axes_accesslog

    You just installed Django's auth system, which means you don't have any superusers defined.
    Would you like to create one now? (yes/no): yes
    Username (leave blank to use 'hadoop'): hue
    Email address:
    Password: hue
    Password (again):
    Superuser created successfully.
    Installing custom SQL ...
    Installing indexes ...
    Installed 0 object(s) from 0 fixture(s)

    Synced:
     > django.contrib.auth
     > django_openid_auth
     > django.contrib.contenttypes
     > django.contrib.sessions
     > django.contrib.sites
     > django.contrib.staticfiles
     > django.contrib.admin
     > south
     > axes
     > about
     > filebrowser
     > help
     > impala
     > jobbrowser
     > metastore
     > proxy
     > rdbms
     > zookeeper
     > indexer

    Not synced (use migrations):
     - django_extensions
     - desktop
     - beeswax
     - hbase
     - jobsub
     - oozie
     - pig
     - search
     - security
     - spark
     - sqoop
     - useradmin
    (use ./manage.py migrate to migrate these)
    [hadoop@bigdatamaster env]$

Then run:

[hadoop@bigdatamaster env]$ pwd /home/hadoop/app/hue-3.9.0-cdh5.5.4/build/env [hadoop@bigdatamaster env]$ bin/hue migrate Running migrations for django_extensions: - Migrating forwards to 0001_empty. > django_extensions:0001_empty - Loading initial data for django_extensions. Installed 0 object(s) from 0 fixture(s) Running migrations for desktop: - Migrating forwards to 0016_auto__add_unique_document2_uuid_version_is_history. > pig:0001_initial > oozie:0001_initial > oozie:0002_auto__add_hive > oozie:0003_auto__add_sqoop > oozie:0004_auto__add_ssh > oozie:0005_auto__add_shell > oozie:0006_auto__chg_field_java_files__chg_field_java_archives__chg_field_sqoop_f > oozie:0007_auto__chg_field_sqoop_script_path > oozie:0008_auto__add_distcp > oozie:0009_auto__add_decision > oozie:0010_auto__add_fs > oozie:0011_auto__add_email > oozie:0012_auto__add_subworkflow__chg_field_email_subject__chg_field_email_body > oozie:0013_auto__add_generic > oozie:0014_auto__add_decisionend > oozie:0015_auto__add_field_dataset_advanced_start_instance__add_field_dataset_ins > oozie:0016_auto__add_field_coordinator_job_properties > oozie:0017_auto__add_bundledcoordinator__add_bundle > oozie:0018_auto__add_field_workflow_managed > oozie:0019_auto__add_field_java_capture_output > oozie:0020_chg_large_varchars_to_textfields > oozie:0021_auto__chg_field_java_args__add_field_job_is_trashed > oozie:0022_auto__chg_field_mapreduce_node_ptr__chg_field_start_node_ptr > oozie:0022_change_examples_path_format - Migration 'oozie:0022_change_examples_path_format' is marked for no-dry-run. > oozie:0023_auto__add_field_node_data__add_field_job_data > oozie:0024_auto__chg_field_subworkflow_sub_workflow > oozie:0025_change_examples_path_format - Migration 'oozie:0025_change_examples_path_format' is marked for no-dry-run. 
> desktop:0001_initial > desktop:0002_add_groups_and_homedirs > desktop:0003_group_permissions > desktop:0004_grouprelations > desktop:0005_settings > desktop:0006_settings_add_tour > beeswax:0001_initial > beeswax:0002_auto__add_field_queryhistory_notify > beeswax:0003_auto__add_field_queryhistory_server_name__add_field_queryhistory_serve > beeswax:0004_auto__add_session__add_field_queryhistory_server_type__add_field_query > beeswax:0005_auto__add_field_queryhistory_statement_number > beeswax:0006_auto__add_field_session_application > beeswax:0007_auto__add_field_savedquery_is_trashed > beeswax:0008_auto__add_field_queryhistory_query_type > desktop:0007_auto__add_documentpermission__add_documenttag__add_document /home/hadoop/app/hue-3.9.0-cdh5.5.4/build/env/lib/python2.6/site-packages/Django-1.6.10-py2.6.egg/django/db/backends/mysql/base.py:124: Warning: Some non-transactional changed tables couldn't be rolled back return self.cursor.execute(query, args) > desktop:0008_documentpermission_m2m_tables > desktop:0009_auto__chg_field_document_name > desktop:0010_auto__add_document2__chg_field_userpreferences_key__chg_field_userpref > desktop:0011_auto__chg_field_document2_uuid > desktop:0012_auto__chg_field_documentpermission_perms > desktop:0013_auto__add_unique_documenttag_owner_tag > desktop:0014_auto__add_unique_document_content_type_object_id > desktop:0015_auto__add_unique_documentpermission_doc_perms > desktop:0016_auto__add_unique_document2_uuid_version_is_history - Loading initial data for desktop. Installed 0 object(s) from 0 fixture(s) Running migrations for beeswax: - Migrating forwards to 0013_auto__add_field_session_properties. 
> beeswax:0009_auto__add_field_savedquery_is_redacted__add_field_queryhistory_is_reda > beeswax:0009_auto__chg_field_queryhistory_server_port > beeswax:0010_merge_database_state > beeswax:0011_auto__chg_field_savedquery_name > beeswax:0012_auto__add_field_queryhistory_extra > beeswax:0013_auto__add_field_session_properties - Loading initial data for beeswax. Installed 0 object(s) from 0 fixture(s) Running migrations for hbase: - Migrating forwards to 0001_initial. > hbase:0001_initial - Loading initial data for hbase. Installed 0 object(s) from 0 fixture(s) Running migrations for jobsub: - Migrating forwards to 0006_chg_varchars_to_textfields. > jobsub:0001_initial > jobsub:0002_auto__add_ooziestreamingaction__add_oozieaction__add_oozieworkflow__ad > jobsub:0003_convertCharFieldtoTextField > jobsub:0004_hue1_to_hue2 - Migration 'jobsub:0004_hue1_to_hue2' is marked for no-dry-run. > jobsub:0005_unify_with_oozie - Migration 'jobsub:0005_unify_with_oozie' is marked for no-dry-run. > jobsub:0006_chg_varchars_to_textfields - Loading initial data for jobsub. Installed 0 object(s) from 0 fixture(s) Running migrations for oozie: - Migrating forwards to 0027_auto__chg_field_node_name__chg_field_job_name. > oozie:0026_set_default_data_values - Migration 'oozie:0026_set_default_data_values' is marked for no-dry-run. > oozie:0027_auto__chg_field_node_name__chg_field_job_name - Loading initial data for oozie. Installed 0 object(s) from 0 fixture(s) Running migrations for pig: - Nothing to migrate. - Loading initial data for pig. Installed 0 object(s) from 0 fixture(s) Running migrations for search: - Migrating forwards to 0003_auto__add_field_collection_owner. > search:0001_initial > search:0002_auto__del_core__add_collection > search:0003_auto__add_field_collection_owner - Loading initial data for search. Installed 0 object(s) from 0 fixture(s) ? You have no migrations for the 'security' app. You might want some. 
Running migrations for spark: - Migrating forwards to 0001_initial. > spark:0001_initial - Loading initial data for spark. Installed 0 object(s) from 0 fixture(s) Running migrations for sqoop: - Migrating forwards to 0001_initial. > sqoop:0001_initial - Loading initial data for sqoop. Installed 0 object(s) from 0 fixture(s) Running migrations for useradmin: - Migrating forwards to 0006_auto__add_index_userprofile_last_activity. > useradmin:0001_permissions_and_profiles - Migration 'useradmin:0001_permissions_and_profiles' is marked for no-dry-run. > useradmin:0002_add_ldap_support - Migration 'useradmin:0002_add_ldap_support' is marked for no-dry-run. > useradmin:0003_remove_metastore_readonly_huepermission - Migration 'useradmin:0003_remove_metastore_readonly_huepermission' is marked for no-dry-run. > useradmin:0004_add_field_UserProfile_first_login > useradmin:0005_auto__add_field_userprofile_last_activity > useradmin:0006_auto__add_index_userprofile_last_activity - Loading initial data for useradmin. Installed 0 object(s) from 0 fixture(s) [hadoop@bigdatamaster env]$

After this finishes, you can see in MySQL that Hue's tables have been created.

mysql> show databases; +--------------------+ | Database | +--------------------+ | information_schema | | hive | | hue | | mysql | | oozie | | test | +--------------------+ 6 rows in set (0.06 sec) mysql> use hue; Reading table information for completion of table and column names You can turn off this feature to get a quicker startup with -A Database changed mysql> show tables; +--------------------------------+ | Tables_in_hue | +--------------------------------+ | auth_group | | auth_group_permissions | | auth_permission | | auth_user | | auth_user_groups | | auth_user_user_permissions | | axes_accessattempt | | axes_accesslog | | beeswax_metainstall | | beeswax_queryhistory | | beeswax_savedquery | | beeswax_session | | desktop_document | | desktop_document2 | | desktop_document2_dependencies | | desktop_document2_tags | | desktop_document_tags | | desktop_documentpermission | | desktop_documenttag | | desktop_settings | | desktop_userpreferences | | django_admin_log | | django_content_type | | django_openid_auth_association | | django_openid_auth_nonce | | django_openid_auth_useropenid | | django_session | | django_site | | documentpermission_groups | | documentpermission_users | | jobsub_checkforsetup | | jobsub_jobdesign | | jobsub_jobhistory | | jobsub_oozieaction | | jobsub_ooziedesign | | jobsub_ooziejavaaction | | jobsub_ooziemapreduceaction | | jobsub_ooziestreamingaction | | oozie_bundle | | oozie_bundledcoordinator | | oozie_coordinator | | oozie_datainput | | oozie_dataoutput | | oozie_dataset | | oozie_decision | | oozie_decisionend | | oozie_distcp | | oozie_email | | oozie_end | | oozie_fork | | oozie_fs | | oozie_generic | | oozie_history | | oozie_hive | | oozie_java | | oozie_job | | oozie_join | | oozie_kill | | oozie_link | | oozie_mapreduce | | oozie_node | | oozie_pig | | oozie_shell | | oozie_sqoop | | oozie_ssh | | oozie_start | | oozie_streaming | | oozie_subworkflow | | oozie_workflow | | pig_document | | pig_pigscript | | 
search_collection | | search_facet | | search_result | | search_sorting | | south_migrationhistory | | useradmin_grouppermission | | useradmin_huepermission | | useradmin_ldapgroup | | useradmin_userprofile | +--------------------------------+ 80 rows in set (0.00 sec) mysql>

Start Hue, and it is now accessible normally.

    [hadoop@bigdatamaster hue-3.9.0-cdh5.5.4]$ pwd
    /home/hadoop/app/hue-3.9.0-cdh5.5.4
    [hadoop@bigdatamaster hue-3.9.0-cdh5.5.4]$ build/env/bin/supervisor
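The initialization sequence above (syncdb, then migrate) can be scripted. This is a sketch that assumes the Hue home directory used in this post. It passes Django's standard `--noinput` flag to `syncdb`, so the interactive superuser question discussed above never comes up, and leaves creating the login account to Django's `createsuperuser` management command, which `bin/hue` should expose like the other management commands; verify both on your own Hue 3.9 build before relying on them.

```shell
#!/usr/bin/env bash
# Sketch: initialise Hue's database schema without the interactive prompt.
# Assumption: the Hue home directory used throughout this post.
hue_init_db() {
  local hue_home="${1:-/home/hadoop/app/hue-3.9.0-cdh5.5.4}"
  local hue_bin="${hue_home}/build/env/bin/hue"
  if [ ! -x "$hue_bin" ]; then
    echo "hue launcher not found: ${hue_bin}" >&2
    return 1
  fi
  # --noinput suppresses Django's "create a superuser now?" question.
  "$hue_bin" syncdb --noinput || return 1
  # Apply the South migrations for the apps that syncdb leaves unsynced.
  "$hue_bin" migrate || return 1
}

# Afterwards, create the login account explicitly, choosing the name on purpose:
#   /home/hadoop/app/hue-3.9.0-cdh5.5.4/build/env/bin/hue createsuperuser
```

Splitting schema creation from account creation means the user name is always a deliberate choice rather than whatever default the prompt happens to suggest.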

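Problem 3:

Error loading MySQLdb module: libmysqlclient_r.so.16: cannot open shared object file: No such file or directory

Solution

On the virtual machine where MySQL is installed, find the client library (in my case libmysqlclient.so.18) and copy it directly into the system library directory /usr/lib64. Keep in mind that the dynamic loader looks for the exact file name given in the error (here libmysqlclient_r.so.16), so make sure the library you copy provides that name.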
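Before copying libraries around, it helps to see exactly which shared objects the compiled MySQL-python extension cannot resolve. This sketch runs `ldd` on every `_mysql*.so` it finds under Hue's build environment and prints only the `not found` lines. The search location is an assumption based on the virtualenv layout that appears elsewhere in this post (`build/env/lib/python2.6/site-packages`).

```shell
#!/usr/bin/env bash
# Sketch: show which shared libraries Hue's MySQLdb extension cannot resolve.
# Assumption: Hue lives at the path used in this post.

# Print the "not found" lines that ldd reports for one binary or .so file.
missing_libs() {
  ldd "$1" 2>/dev/null | grep 'not found' || true
}

# Run missing_libs on every _mysql*.so inside Hue's build environment.
missing_mysql_libs() {
  local hue_home="${1:-/home/hadoop/app/hue-3.9.0-cdh5.5.4}" so
  while IFS= read -r so; do
    echo "== ${so}"
    missing_libs "$so"
  done < <(find "${hue_home}/build/env" -name '_mysql*.so' 2>/dev/null)
}
```

For the error above, the output would contain a line along the lines of `libmysqlclient_r.so.16 => not found`, which tells you the exact file name the loader expects.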

Note that my Hive is installed on bigdatamaster, and the settings below follow my hive-site.xml. My configuration here is:

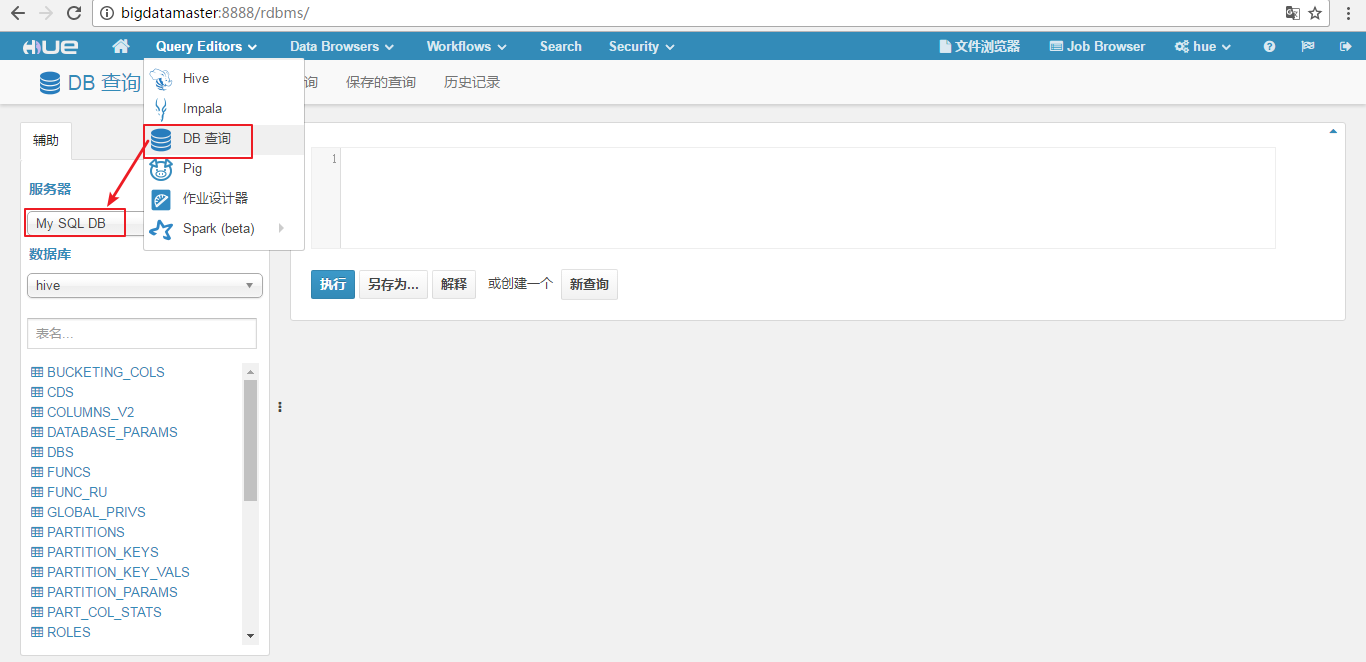

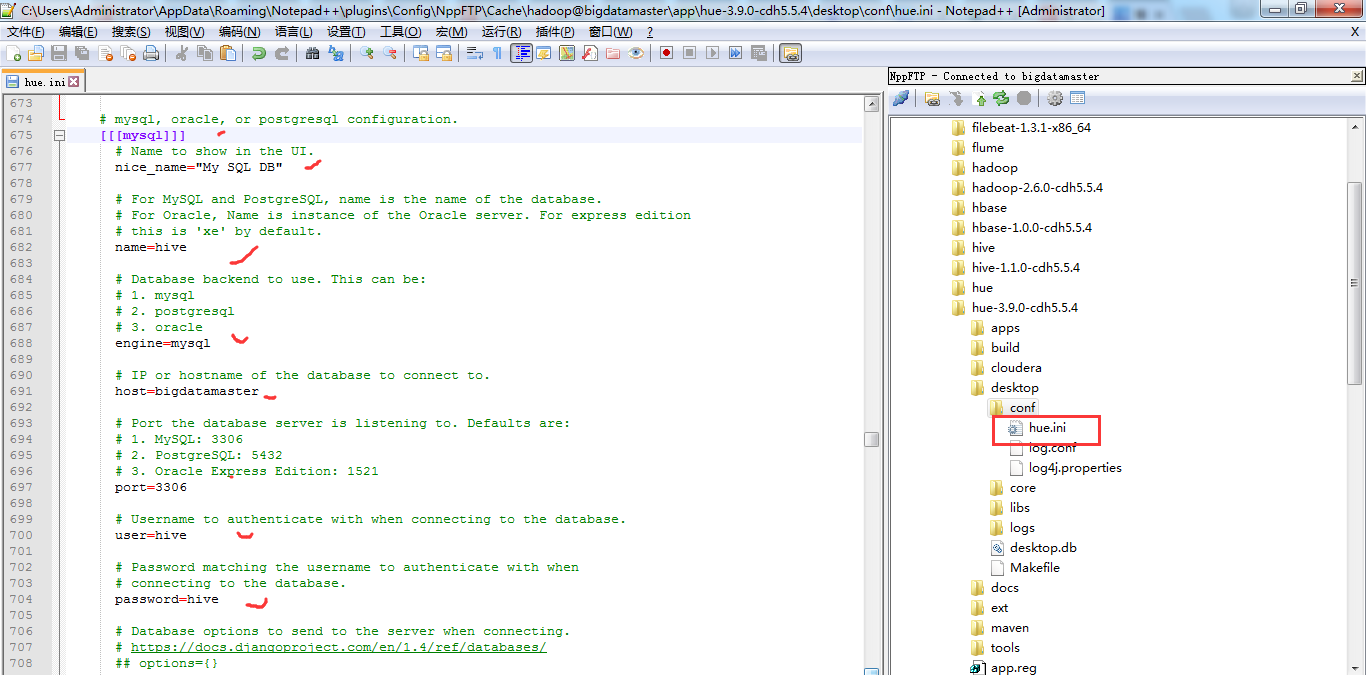

    # Database options to send to the server when connecting.
    # https://docs.djangoproject.com/en/1.4/ref/databases/
    ## options={}

    # mysql, oracle, or postgresql configuration.
    [[[mysql]]]
    # Name to show in the UI.
    nice_name="My SQL DB"

    # For MySQL and PostgreSQL, name is the name of the database.
    # For Oracle, Name is instance of the Oracle server. For express edition
    # this is 'xe' by default.
    name=hive

    # Database backend to use. This can be:
    # 1. mysql
    # 2. postgresql
    # 3. oracle
    engine=mysql

    # IP or hostname of the database to connect to.
    host=bigdatamaster

    # Port the database server is listening to. Defaults are:
    # 1. MySQL: 3306
    # 2. PostgreSQL: 5432
    # 3. Oracle Express Edition: 1521
    port=3306

    # Username to authenticate with when connecting to the database.
    user=hive

    # Password matching the username to authenticate with when
    # connecting to the database.
    password=hive

    # Database options to send to the server when connecting.
    # https://docs.djangoproject.com/en/1.4/ref/databases/
    ## options={}

该问题成功得到解决!

问题四(跟博文的问题十五一样)

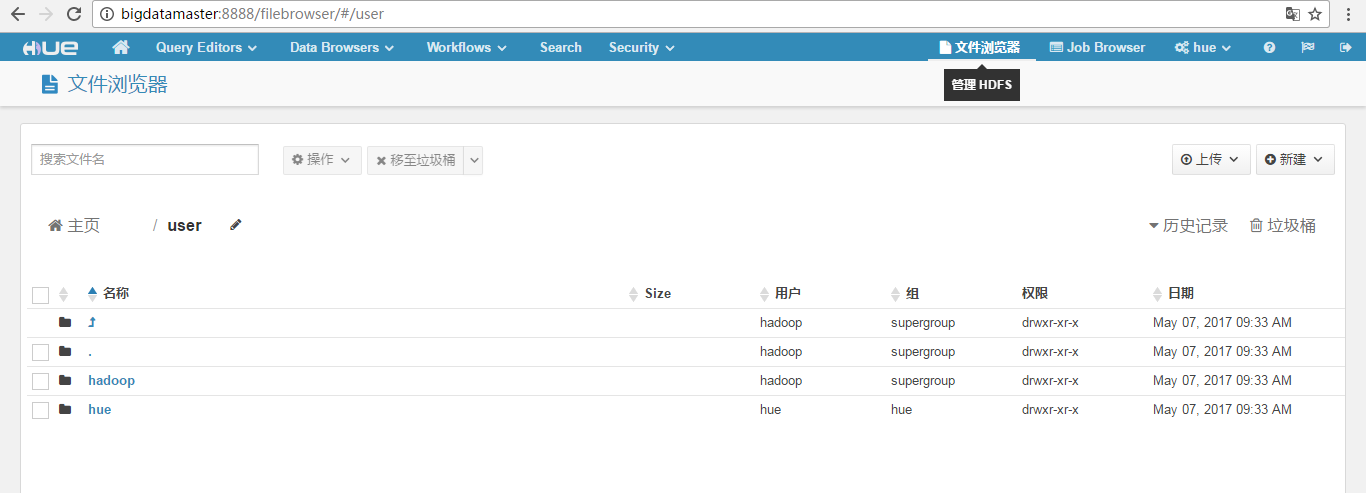

点击“File Browser”报错:

Cannot access:/user/admin."注:您是hue管理员,但不是HDFS超级用户(即“”HDFS“”)

解决方法:

在$HADOOP_HOME的etc/hadoop中编辑core-site.xml文件,增加

    <property>
      <name>hadoop.proxyuser.oozie.hosts</name>
      <value>*</value>
    </property>
    <property>
      <name>hadoop.proxyuser.oozie.groups</name>
      <value>*</value>
    </property>
    <property>
      <name>hadoop.proxyuser.hue.hosts</name>
      <value>*</value>
    </property>
    <property>
      <name>hadoop.proxyuser.hue.groups</name>
      <value>*</value>
    </property>

Then restart Hadoop (stop-all.sh, then start-all.sh).

Problem solved!

My usual configuration is the following, in core-site.xml under $HADOOP_HOME/etc/hadoop/:

    <property>
      <name>hadoop.proxyuser.hadoop.hosts</name>
      <value>*</value>
    </property>
    <property>
      <name>hadoop.proxyuser.hadoop.groups</name>
      <value>*</value>
    </property>
    <property>
      <name>hadoop.proxyuser.hue.hosts</name>
      <value>*</value>
    </property>
    <property>
      <name>hadoop.proxyuser.hue.groups</name>
      <value>*</value>
    </property>
    <property>
      <name>hadoop.proxyuser.hdfs.hosts</name>
      <value>*</value>
    </property>
    <property>
      <name>hadoop.proxyuser.hdfs.groups</name>
      <value>*</value>
    </property>
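Since the same pair of properties is repeated once per user, a small generator avoids hand-typing mistakes in the property names. This Bash sketch prints `hadoop.proxyuser.<user>.hosts` and `hadoop.proxyuser.<user>.groups` entries for each user name you pass in. The wildcard `*` mirrors the configuration above and is convenient on a lab cluster; on a shared cluster you may prefer specific hosts and groups. Paste the output inside the existing `<configuration>` element of core-site.xml.

```shell
#!/usr/bin/env bash
# Sketch: print core-site.xml proxyuser properties for each given user.
# The "*" values mirror the lab setup in this post; tighten them if needed.
proxyuser_xml() {
  local user kind
  for user in "$@"; do
    for kind in hosts groups; do
      printf '<property>\n  <name>hadoop.proxyuser.%s.%s</name>\n  <value>*</value>\n</property>\n' \
        "$user" "$kind"
    done
  done
}

# Example: the three users used in this post.
proxyuser_xml hadoop hue hdfs
```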

为什么要这么加,是因为,我有三个用户,

这里,大家根据自己的实情去增加,修改完之后,一定要重启sbin/start-all.sh,就能解决问题了。

问题五

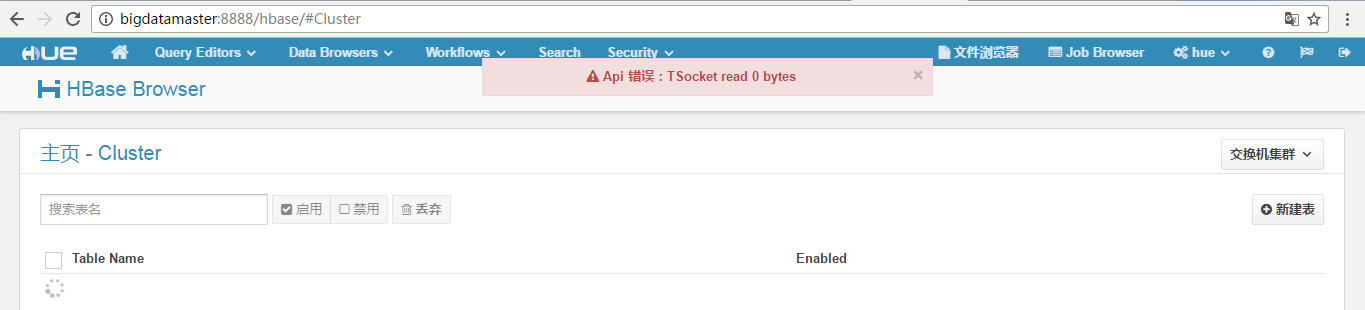

在Hue对HBase集成时, HBase Browser 里出现 Api Error: TSocket read 0 bytes

解决办法

https://stackoverflow.com/questions/20415493/api-error-tsocket-read-0-bytes-when-using-hue-with-hbase

Add the following to HBase's hbase-site.xml (the Stack Overflow answer calls it "core-site.conf"), then restart the HBase Thrift server:

    <property>
      <name>hbase.thrift.support.proxyuser</name>
      <value>true</value>
    </property>
    <property>
      <name>hbase.regionserver.thrift.http</name>
      <value>true</value>
    </property>

即可,解决问题。

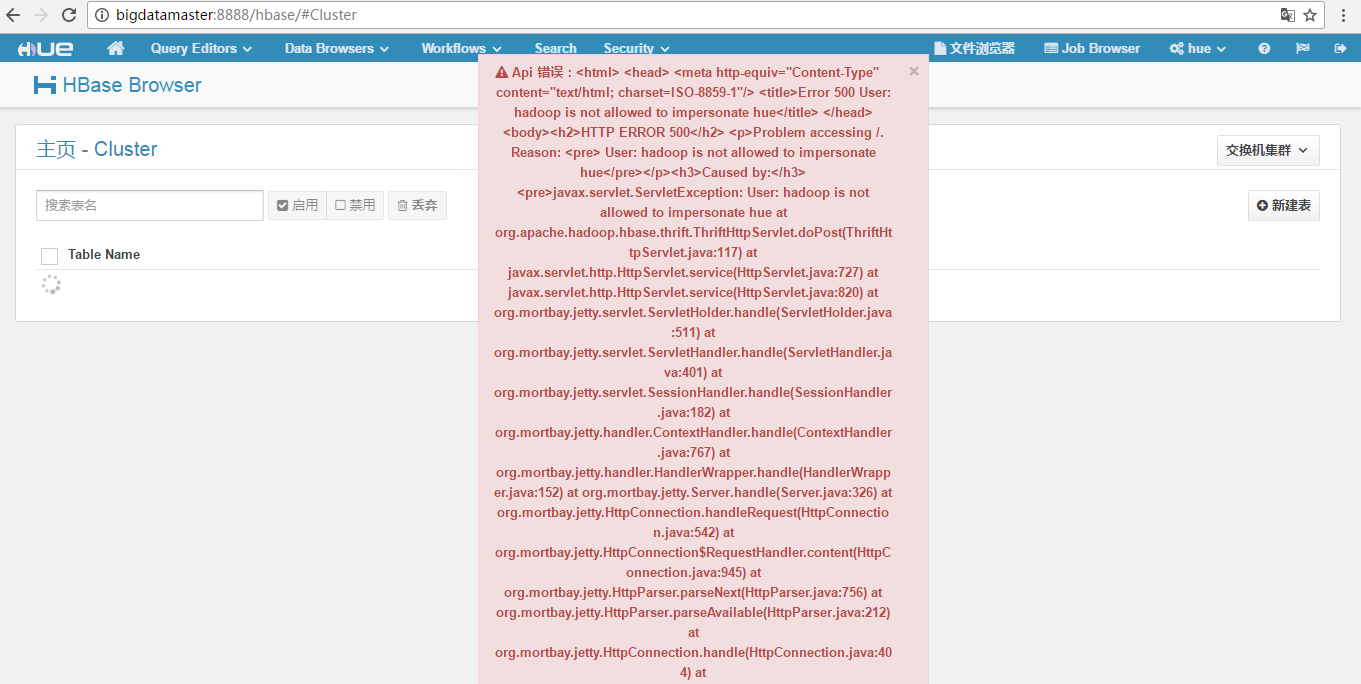

问题六:

User: hadoop is not allowed to impersonate hue

Api error:

    <html>
    <head>
    <meta http-equiv="Content-Type" content="text/html; charset=ISO-8859-1"/>
    <title>Error 500 User: hadoop is not allowed to impersonate hue</title>
    </head>
    <body><h2>HTTP ERROR 500</h2>
    <p>Problem accessing /. Reason:
    <pre> User: hadoop is not allowed to impersonate hue</pre></p><h3>Caused by:</h3><pre>javax.servlet.ServletException: User: hadoop is not allowed to impersonate hue
        at org.apache.hadoop.hbase.thrift.ThriftHttpServlet.doPost(ThriftHttpServlet.java:117)
        at javax.servlet.http.HttpServlet.service(HttpServlet.java:727)
        at javax.servlet.http.HttpServlet.service(HttpServlet.java:820)
        at org.mortbay.jetty.servlet.ServletHolder.handle(ServletHolder.java:511)
        at org.mortbay.jetty.servlet.ServletHandler.handle(ServletHandler.java:401)
        at org.mortbay.jetty.servlet.SessionHandler.handle(SessionHandler.java:182)
        at org.mortbay.jetty.handler.ContextHandler.handle(ContextHandler.java:767)
        at org.mortbay.jetty.handler.HandlerWrapper.handle(HandlerWrapper.java:152)
        at org.mortbay.jetty.Server.handle(Server.java:326)
        at org.mortbay.jetty.HttpConnection.handleRequest(HttpConnection.java:542)
        at org.mortbay.jetty.HttpConnection$RequestHandler.content(HttpConnection.java:945)
        at org.mortbay.jetty.HttpParser.parseNext(HttpParser.java:756)
        at org.mortbay.jetty.HttpParser.parseAvailable(HttpParser.java:212)
        at org.mortbay.jetty.HttpConnection.handle(HttpConnection.java:404)
        at org.mortbay.io.nio.SelectChannelEndPoint.run(SelectChannelE

Solution

In core-site.xml under $HADOOP_HOME/etc/hadoop, change

    <property>
      <name>hadoop.proxyuser.hue.hosts</name>
      <value>*</value>
    </property>
    <property>
      <name>hadoop.proxyuser.hue.groups</name>
      <value>*</value>
    </property>

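to the following. Note, however, that the check behind "User: X is not allowed to impersonate Y" is controlled by hadoop.proxyuser.X.hosts and hadoop.proxyuser.X.groups, where X is the user the HBase Thrift server runs as (hadoop here), and that .hosts normally expects host names or IP addresses rather than a user name; the hadoop.proxyuser.hadoop.* entries shown under Problem 4 are the ones that address this message directly: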

    <property>
      <name>hadoop.proxyuser.hue.hosts</name>
      <value>hadoop</value>
    </property>
    <property>
      <name>hadoop.proxyuser.hue.groups</name>
      <value>hadoop</value>
    </property>

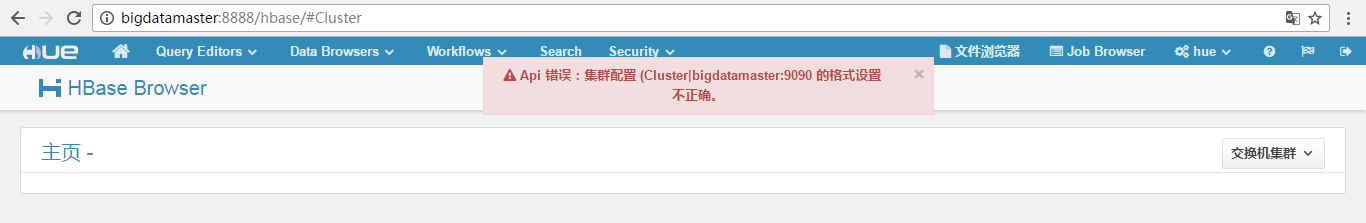

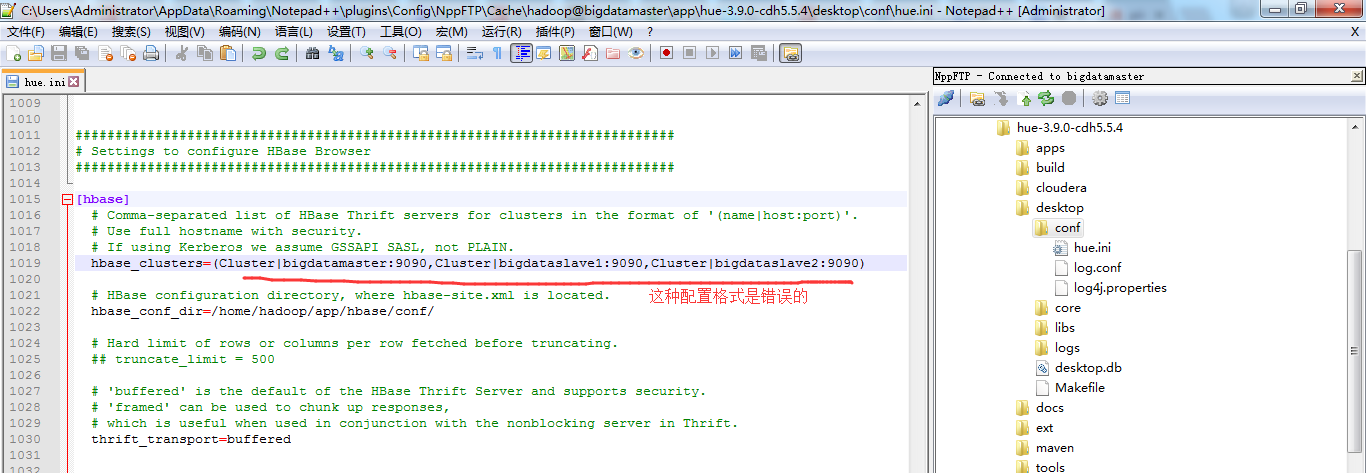

问题七

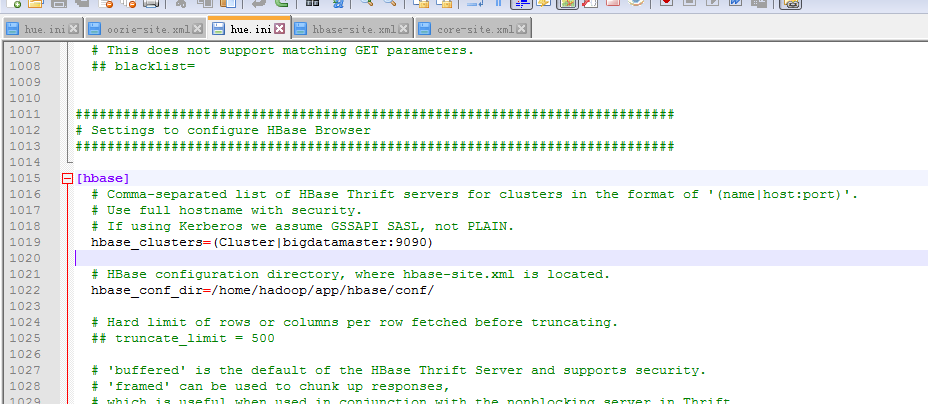

Api 错误:集群配置 (Cluster|bigdatamaster:9090 的格式设置不正确。

改为

问题八:

在hue里面查看HDFS文件浏览器报错:

当前用户没有权限查看,

cause:org.apache.hadoop.ipc.StandbyException: Operation category READ is not supported in state standby

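Solution:

Check the state of both NameNodes in the web UI to see whether the NameNode Hue was using has become standby. That is exactly what happened on my current cluster: the service had originally been running on master1; when master1 failed, the cluster automatically failed over to master2, but webhdfs in Hue was still configured to use master1, so Hue had no access.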
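Rather than checking the two NameNode web pages by hand, you can ask HDFS which NameNode is active with `hdfs haadmin -getServiceState`. In this sketch the NameNode service IDs `nn1`/`nn2` and the hostnames `master1`/`master2` are assumptions that must match `dfs.ha.namenodes.*` in your hdfs-site.xml, and 50070 is the Hadoop 2 default NameNode HTTP port. The function prints the WebHDFS URL of whichever NameNode reports `active`, i.e. what `webhdfs_url` in hue.ini would have to point at. For HA clusters, Hue's documentation generally recommends pointing `webhdfs_url` at an HttpFS server instead, so that a failover does not break Hue.

```shell
#!/usr/bin/env bash
# Sketch: print the WebHDFS URL of the currently active NameNode.
# Arguments are "serviceId:host" pairs; the defaults below are assumptions
# that must match dfs.ha.namenodes.* in hdfs-site.xml.
active_webhdfs_url() {
  local pair id host state
  [ "$#" -gt 0 ] || set -- "nn1:master1" "nn2:master2"
  for pair in "$@"; do
    id="${pair%%:*}"
    host="${pair#*:}"
    # haadmin prints "active" or "standby"; log noise on stderr is dropped.
    state="$(hdfs haadmin -getServiceState "$id" 2>/dev/null)"
    if [ "$state" = "active" ]; then
      echo "http://${host}:50070/webhdfs/v1"
      return 0
    fi
  done
  echo "no active NameNode found" >&2
  return 1
}
```

For example, `active_webhdfs_url nn1:master1 nn2:master2` prints the URL for master2 after a failover like the one described above.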

Problem 9

Hive queries fail with:

org.apache.hive.service.cli.HiveSQLException: Couldn't find log associated with operation handle: OperationHandle [opType=EXECUTE_STATEMENT, getHandleIdentifier()=b3d05ca6-e3e8-4bef-b869-0ea0732c3ac5]

Solution:

Set hive.server2.logging.operation.enabled to true in hive-site.xml (then restart HiveServer2):

    <property>
      <name>hive.server2.logging.operation.enabled</name>
      <value>true</value>
    </property>

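Problem 10

Opening the Hue web UI reports: OperationalError: attempt to write a readonly database

Solution

The user that starts the Hue server has no permission to write to the default SQLite DB. Make sure that every file under the installation directory is owned by the hadoop user: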

chown -R hadoop:hadoop hue-3.9.0-cdh5.5.4
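To see which files are actually the problem before (or instead of) a blanket `chown -R`, you can list everything under the Hue directory that is not owned by the service user. A small sketch around `find ! -user`; the default directory and the user `hadoop` follow this post.

```shell
#!/usr/bin/env bash
# Sketch: list paths under a directory that are NOT owned by the given user.
# Defaults follow this post: Hue under /home/hadoop/app, run as "hadoop".
not_owned_by() {
  local dir="${1:-/home/hadoop/app/hue-3.9.0-cdh5.5.4}" user="${2:-hadoop}"
  find "$dir" ! -user "$user" -print
}

# Typical use: inspect first, then fix ownership (as root) if anything shows up.
#   not_owned_by /home/hadoop/app/hue-3.9.0-cdh5.5.4 hadoop | head
#   chown -R hadoop:hadoop /home/hadoop/app/hue-3.9.0-cdh5.5.4
```

An empty result means ownership is not the cause, and you can look elsewhere (for example at the permissions of desktop/desktop.db itself).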

Problem 11

Hue error: Filesystem root '/' should be owned by 'hdfs'

Solution

In the file desktop/libs/hadoop/src/hadoop/fs/webhdfs.py, change DEFAULT_HDFS_SUPERUSER = 'hdfs' to your Hadoop user.
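The edit can be made with `sed`. This sketch assumes the line in webhdfs.py reads exactly `DEFAULT_HDFS_SUPERUSER = 'hdfs'`, as described above; it refuses to touch a file that lacks the line, keeps a `.bak` copy, and takes the replacement user (here `hadoop`) as a parameter. Restart Hue afterwards so the change is picked up.

```shell
#!/usr/bin/env bash
# Sketch: replace the default HDFS superuser name in Hue's webhdfs.py.
# Assumption: the file contains a line starting "DEFAULT_HDFS_SUPERUSER = ".
set_hdfs_superuser() {
  local file="$1" user="${2:-hadoop}"
  if ! grep -q "^DEFAULT_HDFS_SUPERUSER = " "$file"; then
    echo "pattern not found in ${file}" >&2
    return 1
  fi
  # -i.bak edits in place and keeps the original as ${file}.bak.
  sed -i.bak "s/^DEFAULT_HDFS_SUPERUSER = .*/DEFAULT_HDFS_SUPERUSER = '${user}'/" "$file"
}

# Example (run from the Hue home directory):
#   set_hdfs_superuser desktop/libs/hadoop/src/hadoop/fs/webhdfs.py hadoop
```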

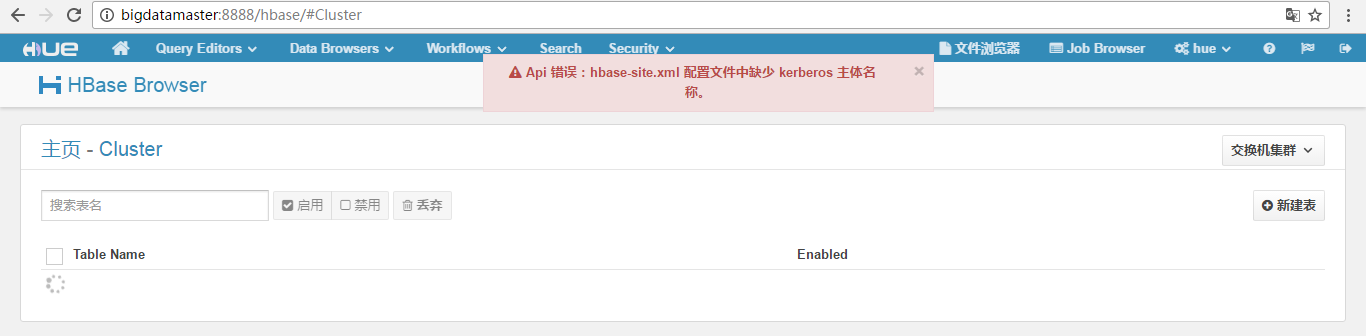

问题十二

错误:hbase-site.xml 配置文件中缺少 kerberos 主体名称。

解决办法

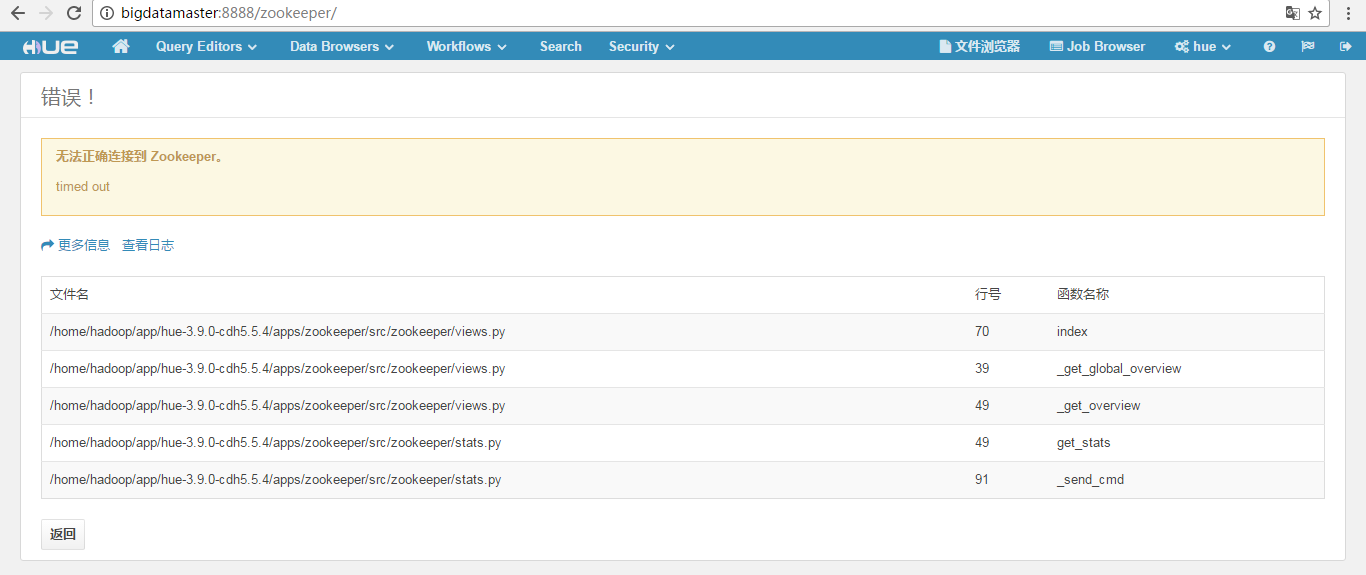

问题十三

Hue下无法无法正确连接到 Zookeeper timed out

解决办法

说明你的zookeeper模块,还没配置完全。

HUE配置文件hue.ini 的zookeeper模块详解(图文详解)(分HA集群)
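Two quick checks help here: whether each ZooKeeper server answers at all, and whether the `host_ports` value in the `[zookeeper]` section of hue.ini lists the servers in the expected `host:port,host:port` form. The sketch below assumes the three hosts of this cluster, the default client port 2181 and an installed `nc`. `ruok` is a ZooKeeper four-letter-word command that a healthy server answers with `imok` (newer ZooKeeper releases may require it to be whitelisted first).

```shell
#!/usr/bin/env bash
# Sketch: build hue.ini's ZooKeeper host_ports value and probe each server.
# Assumptions: the hosts of this cluster, client port 2181, nc installed.
ZK_HOSTS=(bigdatamaster bigdataslave1 bigdataslave2)
ZK_PORT=2181

# Print "h1:port,h2:port,..." for the given port and hosts.
zk_host_ports() {
  local port="$1" out="" h
  shift
  for h in "$@"; do
    out="${out:+${out},}${h}:${port}"
  done
  echo "$out"
}

# Send "ruok" to every server; a healthy one replies "imok".
zk_ruok_all() {
  local h reply
  for h in "${ZK_HOSTS[@]}"; do
    reply="$(echo ruok | nc -w 2 "$h" "$ZK_PORT" 2>/dev/null)"
    echo "${h}:${ZK_PORT} -> ${reply:-no answer}"
  done
}

echo "host_ports=$(zk_host_ports "$ZK_PORT" "${ZK_HOSTS[@]}")"
```

Run `zk_ruok_all` on the Hue machine itself, since what matters is whether Hue's host can reach the servers.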

问题十四

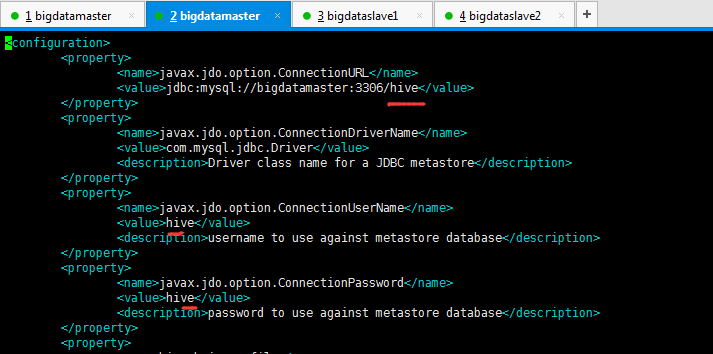

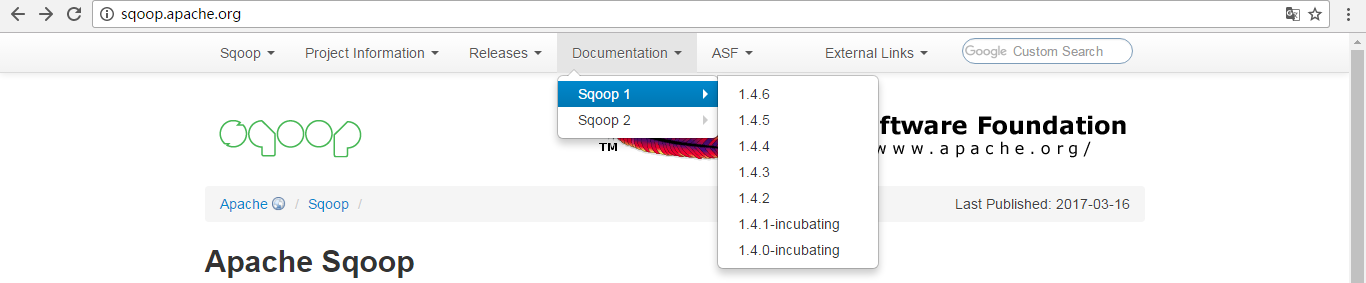

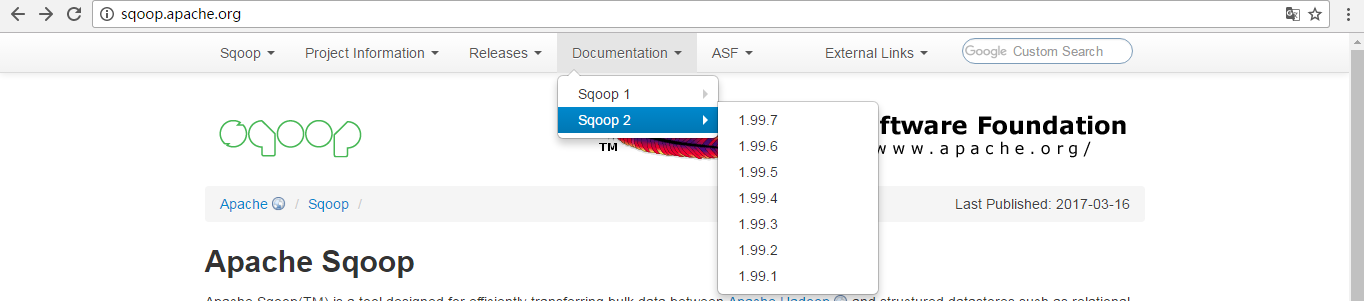

Sqoop 错误:

解决办法

看下自己的sqoop版本是不是,不是Sqoop2版本。好比我的如下

大家要注意,Hue里是只支持Sqoop2版本,对于Sqoop1和Sqoop2版本,直接去官网看就得了。

那么,得更换sqoop版本。

sqoop2-1.99.5-cdh5.5.4.tar.gz的部署搭建

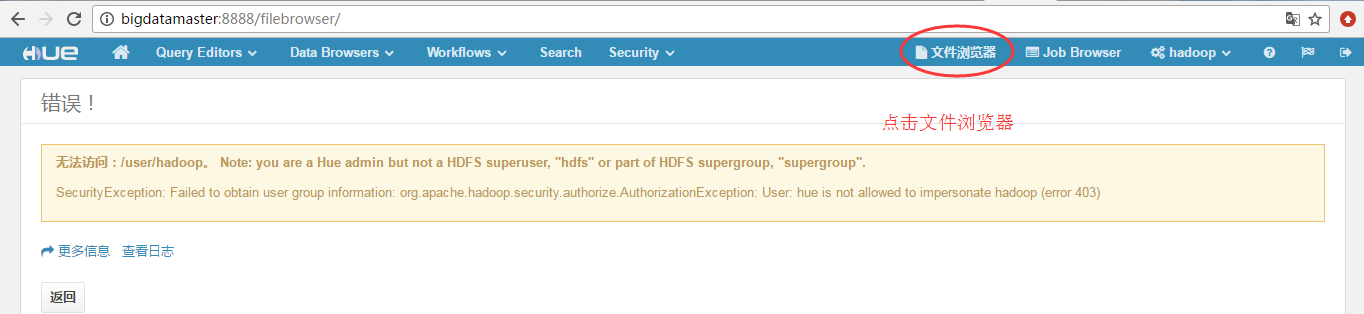

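Problem 15 (see Problem 4 of this post)

Cannot access: /user/hadoop. Note: you are a Hue admin but not a HDFS superuser, "hdfs" or part of HDFS supergroup, "supergroup".

SecurityException: Failed to obtain user group information: org.apache.hadoop.security.authorize.AuthorizationException: User: hue is not allowed to impersonate hadoop (error 403)

Analysis

This is because, after Hue is installed, the first user who logs in becomes Hue's superuser and can manage users and so on. In practice, however, it turns out that this user cannot manage data in HDFS created by the supergroup.

Users created in Hue can manage the data in their own folder /user/XXX, but how do you manage the Hadoop superuser's data? Hue provides a feature that integrates Unix users into Hue, so that logging in to Hue as the Hadoop superuser lets you manage that data without trouble.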

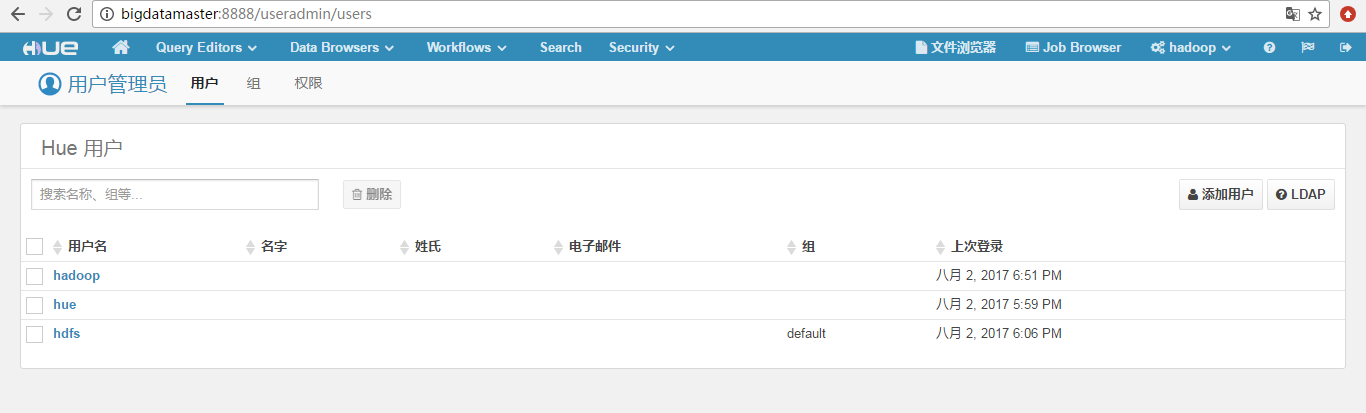

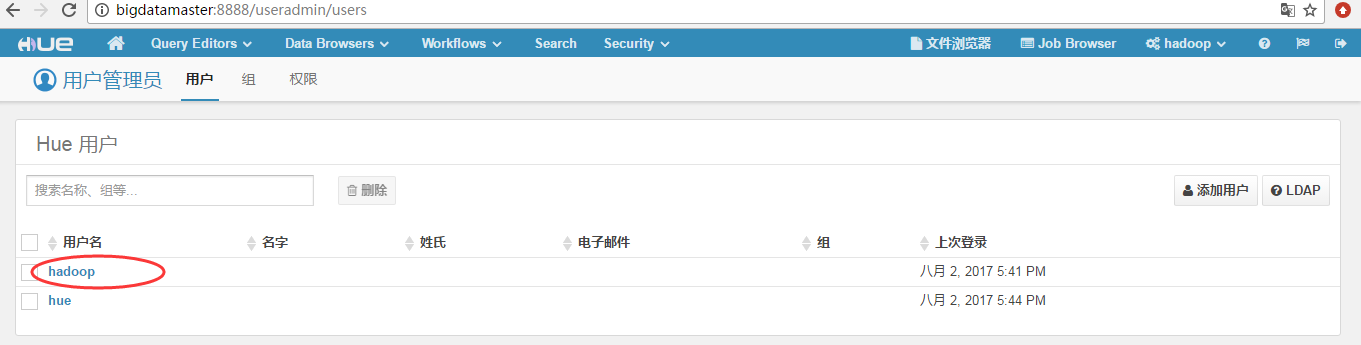

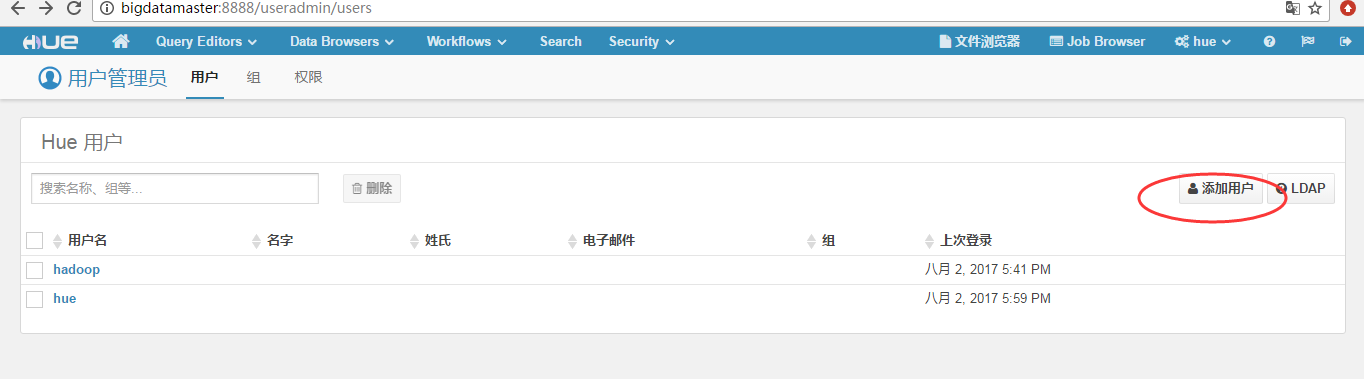

The integration takes the following steps.

Step 1: make sure the hadoop group exists on the system (here it certainly does).

Step 2: run the following command:

    [hadoop@bigdatamaster env]$ pwd
    /home/hadoop/app/hue-3.9.0-cdh5.5.4/build/env
    [hadoop@bigdatamaster env]$ ll
    total 16
    drwxrwxr-x 2 hadoop hadoop 4096 May 5 20:59 bin
    drwxrwxr-x 2 hadoop hadoop 4096 May 5 20:46 include
    drwxrwxr-x 3 hadoop hadoop 4096 May 5 20:46 lib
    lrwxrwxrwx 1 hadoop hadoop 3 May 5 20:46 lib64 -> lib
    drwxrwxr-x 2 hadoop hadoop 4096 Aug 2 17:20 logs
    -rw-rw-r-- 1 hadoop hadoop 0 May 5 20:46 stamp
    [hadoop@bigdatamaster env]$ bin/hue useradmin_sync_with_unix
    [hadoop@bigdatamaster env]$

Step 3:

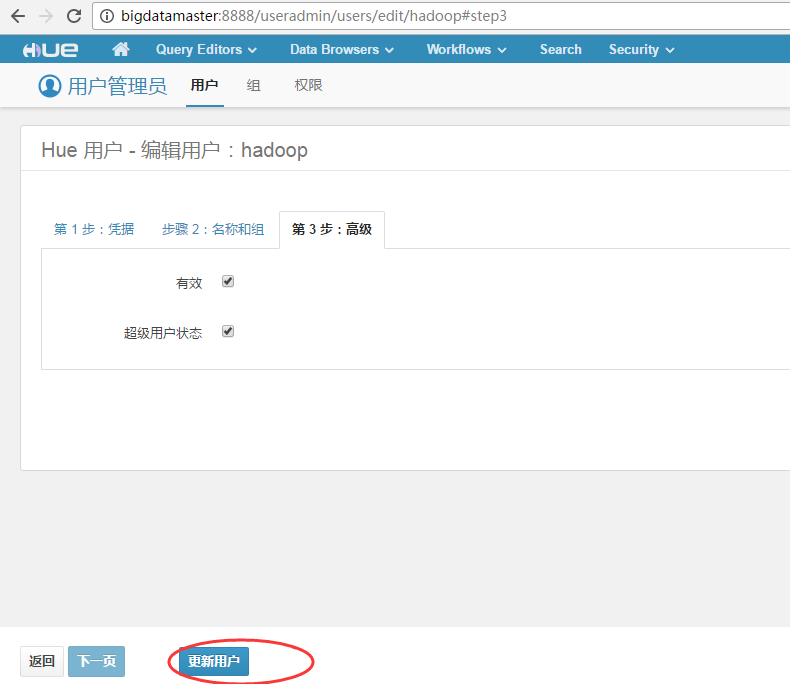

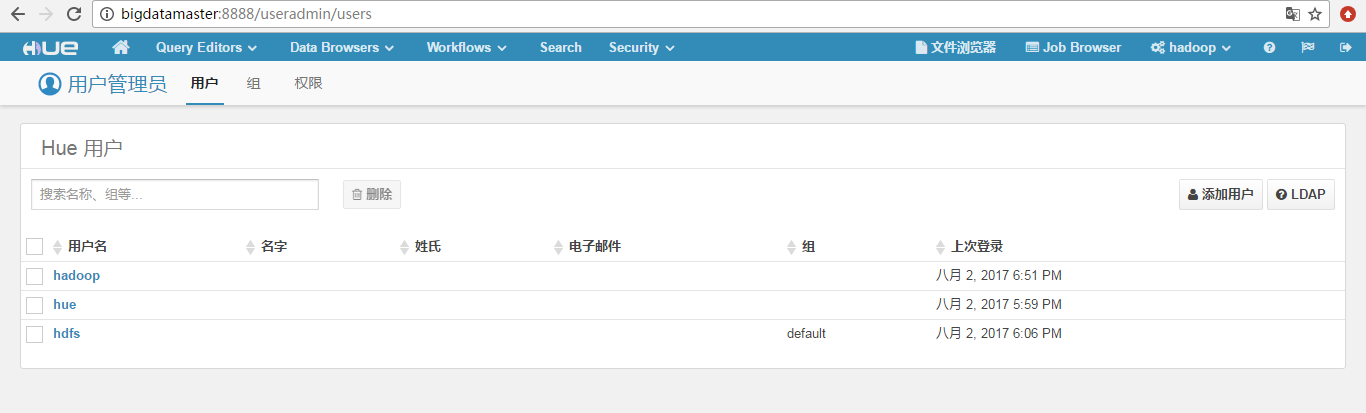

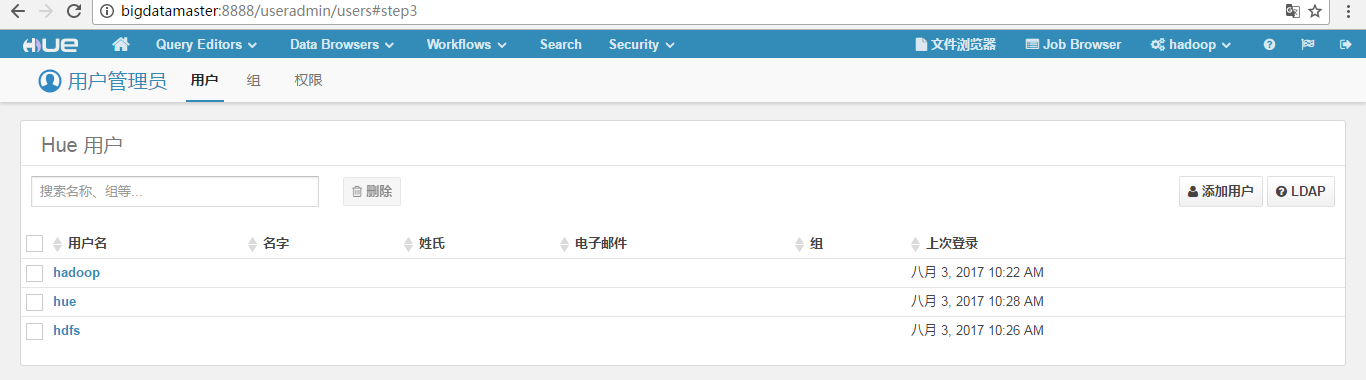

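After running the command above, go into Hue and you will find that the users have been imported but have no passwords, so you need to set passwords for the Unix users and assign them to groups.

Here:

Or:

After completing these steps, log in and you can happily manage HDFS data.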

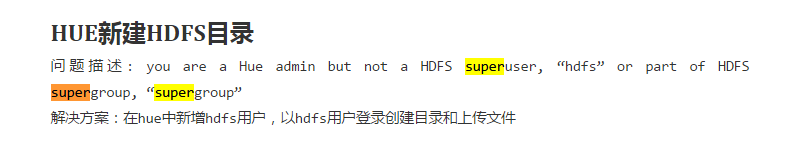

Creating an HDFS directory in Hue

Problem description: you are a Hue admin but not a HDFS superuser, "hdfs" or part of HDFS supergroup, "supergroup"

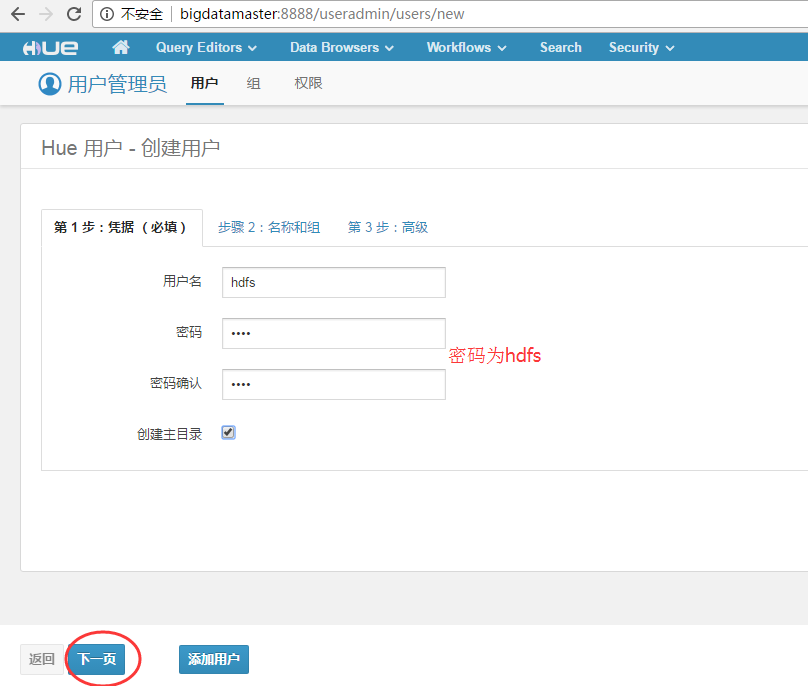

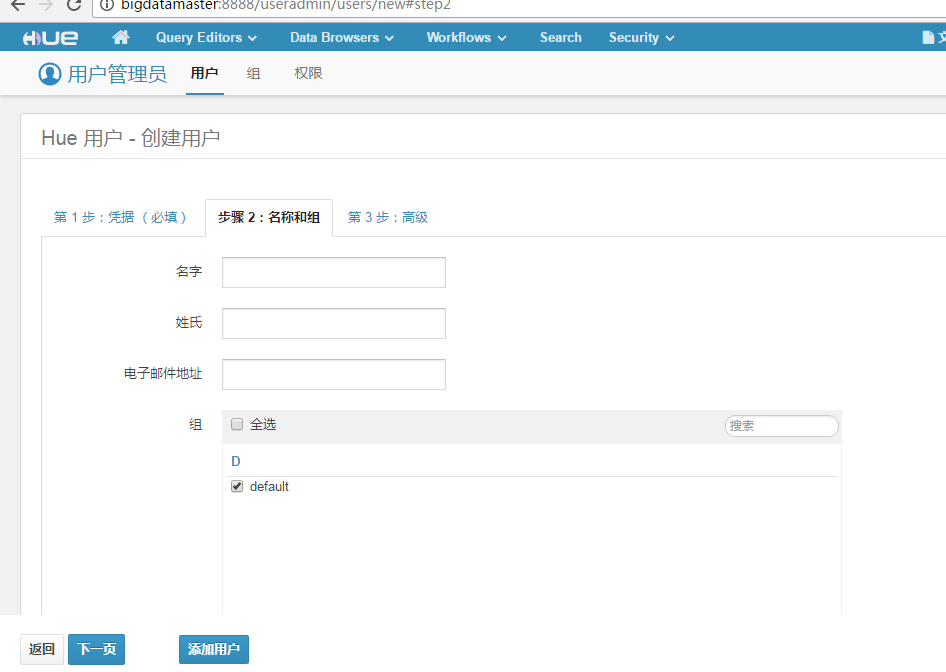

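Solution: add an hdfs user in Hue, then log in as hdfs to create directories and upload files.

Reference:

https://geosmart.github.io/2015/10/27/CDH%E4%BD%BF%E7%94%A8%E9%97%AE%E9%A2%98%E8%AE%B0%E5%BD%95/

However, this still did not work for me.

In short, this problem still comes down to Problem 4 of this post.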


Problem 16

Unable to create the home directory for user hue, for user hadoop, or for user hdfs.

Solution

In $HADOOP_HOME/etc/hadoop/core-site.xml:

    <property>
      <name>hadoop.proxyuser.hue.hosts</name>
      <value>*</value>
    </property>
    <property>
      <name>hadoop.proxyuser.hue.groups</name>
      <value>*</value>
    </property>

For example, if the error says the home directory for user hdfs cannot be created, add:

    <property>
      <name>hadoop.proxyuser.hdfs.hosts</name>
      <value>*</value>
    </property>
    <property>
      <name>hadoop.proxyuser.hdfs.groups</name>
      <value>*</value>
    </property>

Success!

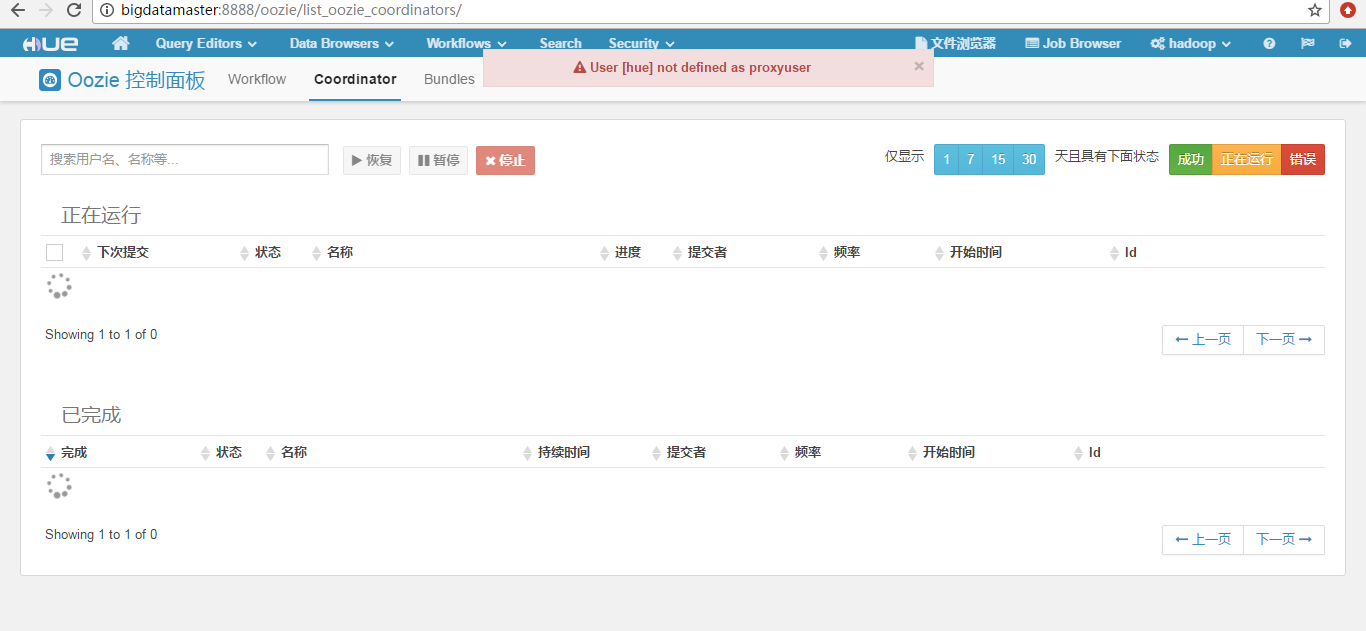

Problem 17

User [hue] not defined as proxyuser

Where the problem came from:

Oozie is running, but when I click Workflows in the Hue UI, the following error appears:

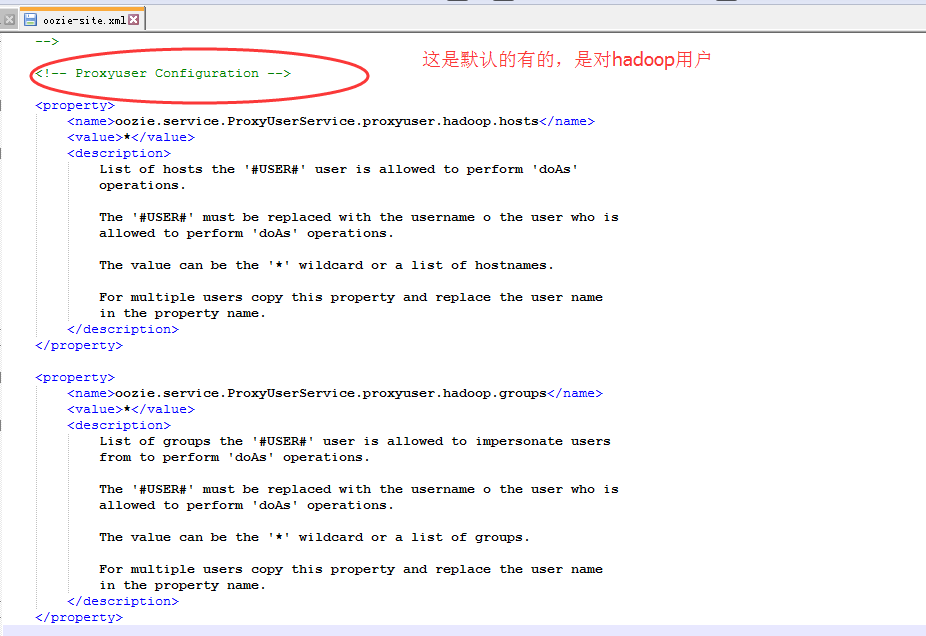

Solution

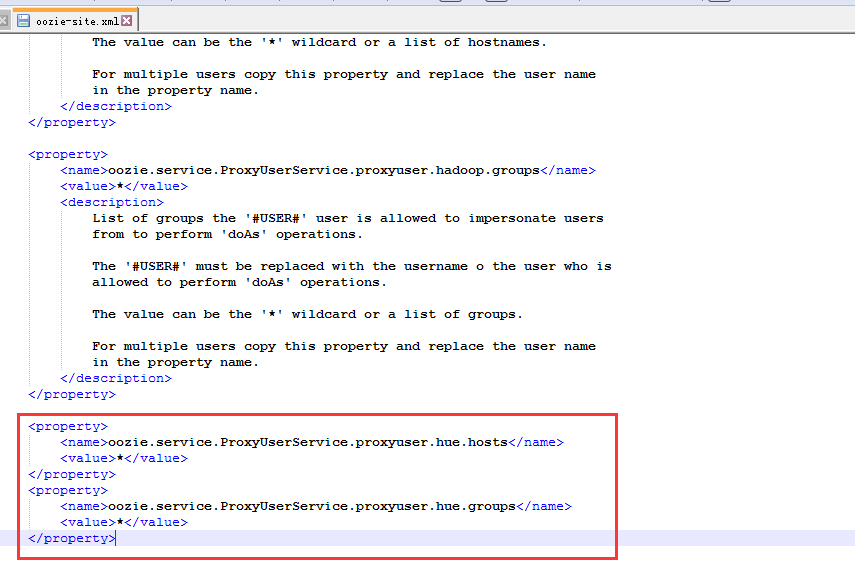

Hue submits MapReduce jobs to Oozie as the logged in user. You need to configure Oozie to accept the hue user to be a proxyuser. Specify this in your oozie-site.xml (even in a non-secure cluster), and restart Oozie:

    <property>
      <name>oozie.service.ProxyUserService.proxyuser.hue.hosts</name>
      <value>*</value>
    </property>
    <property>
      <name>oozie.service.ProxyUserService.proxyuser.hue.groups</name>
      <value>*</value>
    </property>

Reference: http://archive.cloudera.com/cdh4/cdh/4/hue-2.0.0-cdh4.0.1/manual.html

In other words, add the following to oozie-site.xml:

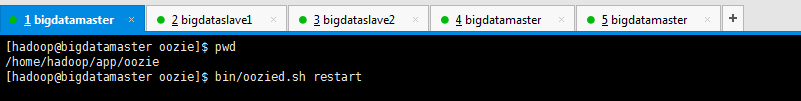

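Once it is added, restart Oozie: remember to check the processes with jps first, kill the Oozie process, and then start it again.

Note: /home/hadoop/app/oozie is the directory where I installed Oozie.

Alternatively, restarting with the following command also works:

    [hadoop@bigdatamaster oozie]$ pwd
    /home/hadoop/app/oozie
    [hadoop@bigdatamaster oozie]$ bin/oozied.sh restart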
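After the restart you can confirm that Oozie is up, and reachable at the URL Hue uses, with the Oozie CLI's `admin -status` command; a healthy server answers `System mode: NORMAL`. The sketch assumes the install directory from this post, the host bigdatamaster and Oozie's default HTTP port 11000; adjust these to match `oozie_url` in the `[liboozie]` section of hue.ini.

```shell
#!/usr/bin/env bash
# Sketch: ask a running Oozie server for its status via the Oozie CLI.
# Assumptions: install dir and host from this post, default port 11000.
oozie_status() {
  local oozie_home="${1:-/home/hadoop/app/oozie}"
  local url="http://${2:-bigdatamaster}:${3:-11000}/oozie"
  if [ ! -x "${oozie_home}/bin/oozie" ]; then
    echo "oozie CLI not found under ${oozie_home}" >&2
    return 1
  fi
  "${oozie_home}/bin/oozie" admin -oozie "$url" -status
}

# Example:
#   oozie_status /home/hadoop/app/oozie bigdatamaster 11000
```

If this works from the Hue machine but Hue still reports that Oozie is not running, the URL in hue.ini is the first thing to compare.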

Then you get:

问题十八

Oozie 服务器未运行

解决办法

Oozie的详细启动步骤(CDH版本的3节点集群)

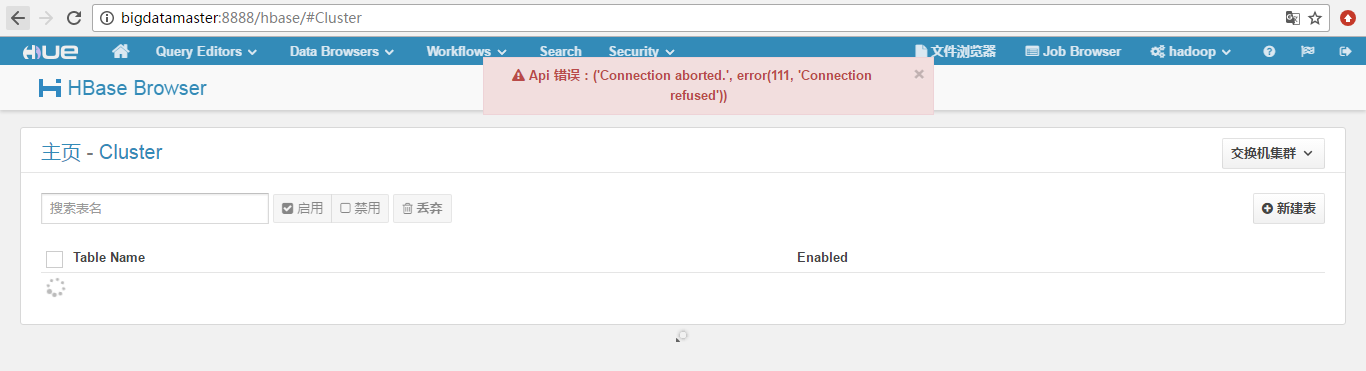

问题十九

Api 错误:('Connection aborted.', error(111, 'Connection refused'))

解决办法

问题二十

Could not connect to bigdatamaster:21050

解决办法

开启impala服务
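An error like this one means the backend service Hue is trying to reach is not listening, and the Connection refused error in Problem 19 usually points the same way. To see at a glance which service is down, you can probe every port Hue talks to. This Bash sketch uses the `/dev/tcp` pseudo-device and coreutils `timeout`. HiveServer2 10000, Impala 21050, HBase Thrift 9090 and MySQL 3306 are the ports that appear in this post, while Oozie 11000, ZooKeeper 2181 and the NameNode HTTP port 50070 are the usual defaults and therefore assumptions; putting every service on bigdatamaster is also just a placeholder.

```shell
#!/usr/bin/env bash
# Sketch: probe the TCP ports of the services Hue depends on.
# Each entry is name:host:port; adjust hosts and ports to your cluster.
SERVICES=(
  "hiveserver2:bigdatamaster:10000"
  "impalad:bigdatamaster:21050"
  "hbase-thrift:bigdatamaster:9090"
  "mysql:bigdatamaster:3306"
  "oozie:bigdatamaster:11000"
  "zookeeper:bigdatamaster:2181"
  "namenode-http:bigdatamaster:50070"
)

# Print one UP/DOWN line per name:host:port argument.
check_services() {
  local entry name host port
  for entry in "$@"; do
    IFS=: read -r name host port <<<"$entry"
    if timeout 2 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null; then
      printf '%-14s %s:%s UP\n' "$name" "$host" "$port"
    else
      printf '%-14s %s:%s DOWN\n' "$name" "$host" "$port"
    fi
  done
}

# Usage: check_services "${SERVICES[@]}"
```

An open port only proves that something is listening; it does not prove the service is healthy, but a DOWN line tells you immediately which daemon to start.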

问题二十一:

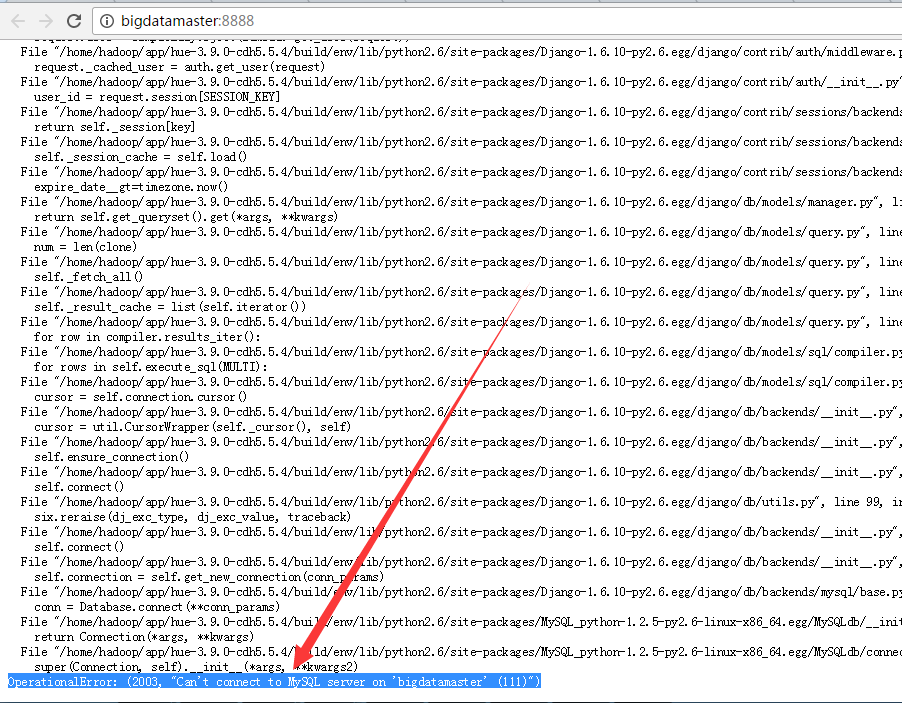

OperationalError: (2003, "Can't connect to MySQL server on 'bigdatamaster' (111)")

解决办法

[root@bigdatamaster hadoop]# service mysqld start

Starting mysqld: [ OK ]

[root@bigdatamaster hadoop]#

Then refresh the page.

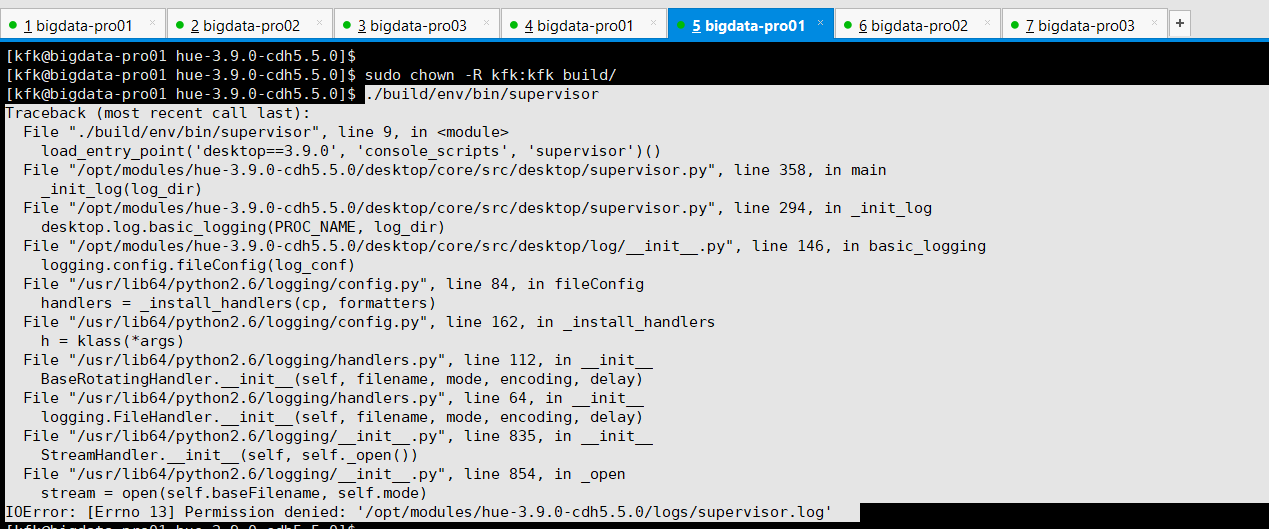

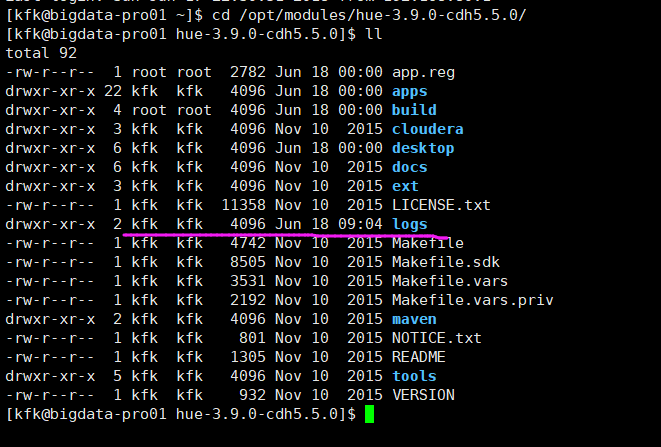

问题二十二:

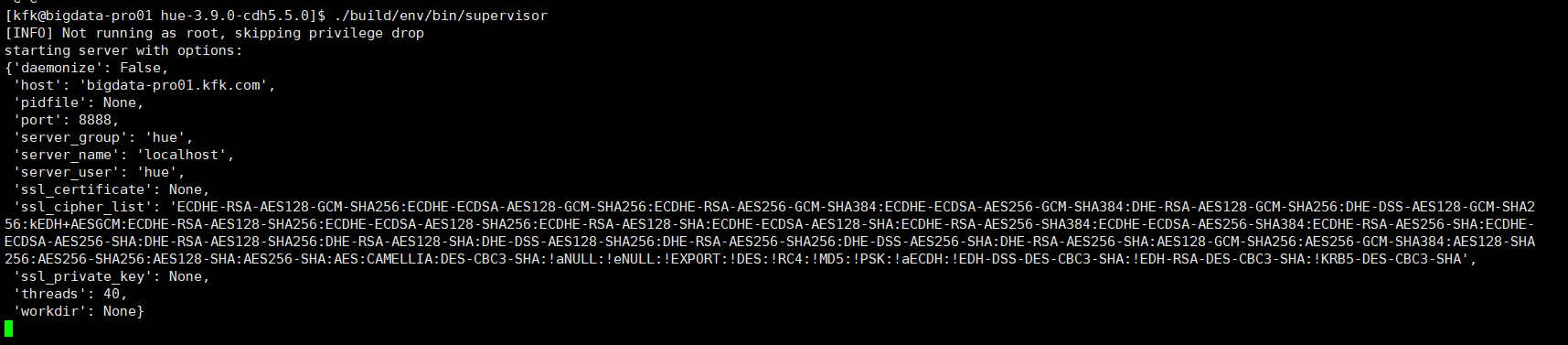

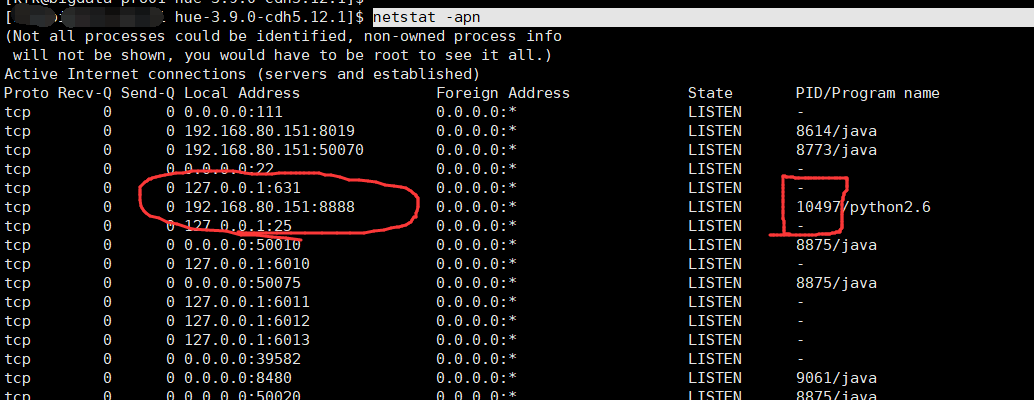

Hue执行./build/env/bin/supervisor出现

IOError: [Errno 13] Permission denied: '/opt/modules/hue-3.9.0-cdh5.5.0/logs/supervisor.log

File "./build/env/bin/supervisor", line 9

    [kfk@bigdata-pro01 hue-3.9.0-cdh5.5.0]$ ./build/env/bin/supervisor
    Traceback (most recent call last):
      File "./build/env/bin/supervisor", line 9, in <module>
        load_entry_point('desktop==3.9.0', 'console_scripts', 'supervisor')()
      File "/opt/modules/hue-3.9.0-cdh5.5.0/desktop/core/src/desktop/supervisor.py", line 358, in main
        _init_log(log_dir)
      File "/opt/modules/hue-3.9.0-cdh5.5.0/desktop/core/src/desktop/supervisor.py", line 294, in _init_log
        desktop.log.basic_logging(PROC_NAME, log_dir)
      File "/opt/modules/hue-3.9.0-cdh5.5.0/desktop/core/src/desktop/log/__init__.py", line 146, in basic_logging
        logging.config.fileConfig(log_conf)
      File "/usr/lib64/python2.6/logging/config.py", line 84, in fileConfig
        handlers = _install_handlers(cp, formatters)
      File "/usr/lib64/python2.6/logging/config.py", line 162, in _install_handlers
        h = klass(*args)
      File "/usr/lib64/python2.6/logging/handlers.py", line 112, in __init__
        BaseRotatingHandler.__init__(self, filename, mode, encoding, delay)
      File "/usr/lib64/python2.6/logging/handlers.py", line 64, in __init__
        logging.FileHandler.__init__(self, filename, mode, encoding, delay)
      File "/usr/lib64/python2.6/logging/__init__.py", line 835, in __init__
        StreamHandler.__init__(self, self._open())
      File "/usr/lib64/python2.6/logging/__init__.py", line 854, in _open
        stream = open(self.baseFilename, self.mode)
    IOError: [Errno 13] Permission denied: '/opt/modules/hue-3.9.0-cdh5.5.0/logs/supervisor.log'

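Solution:

Only the build directory should be owned by root; everything else (including logs/) should belong to the regular user who runs supervisor.

Problem 23: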

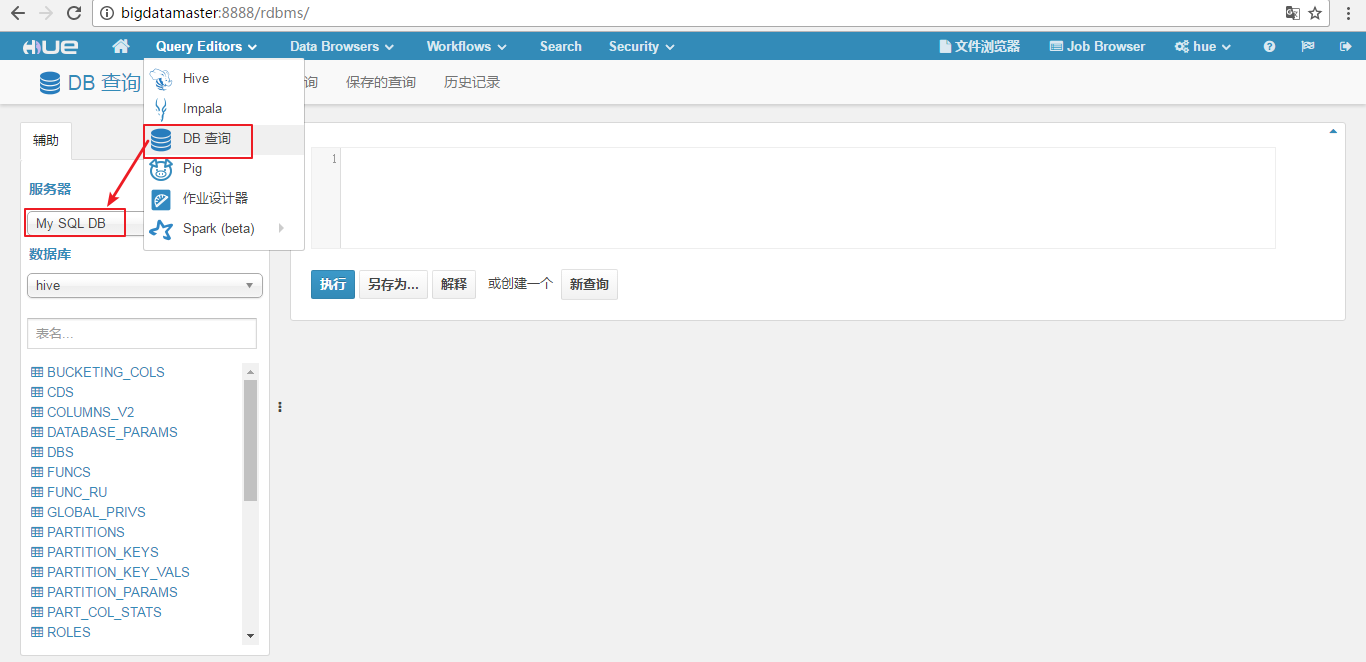

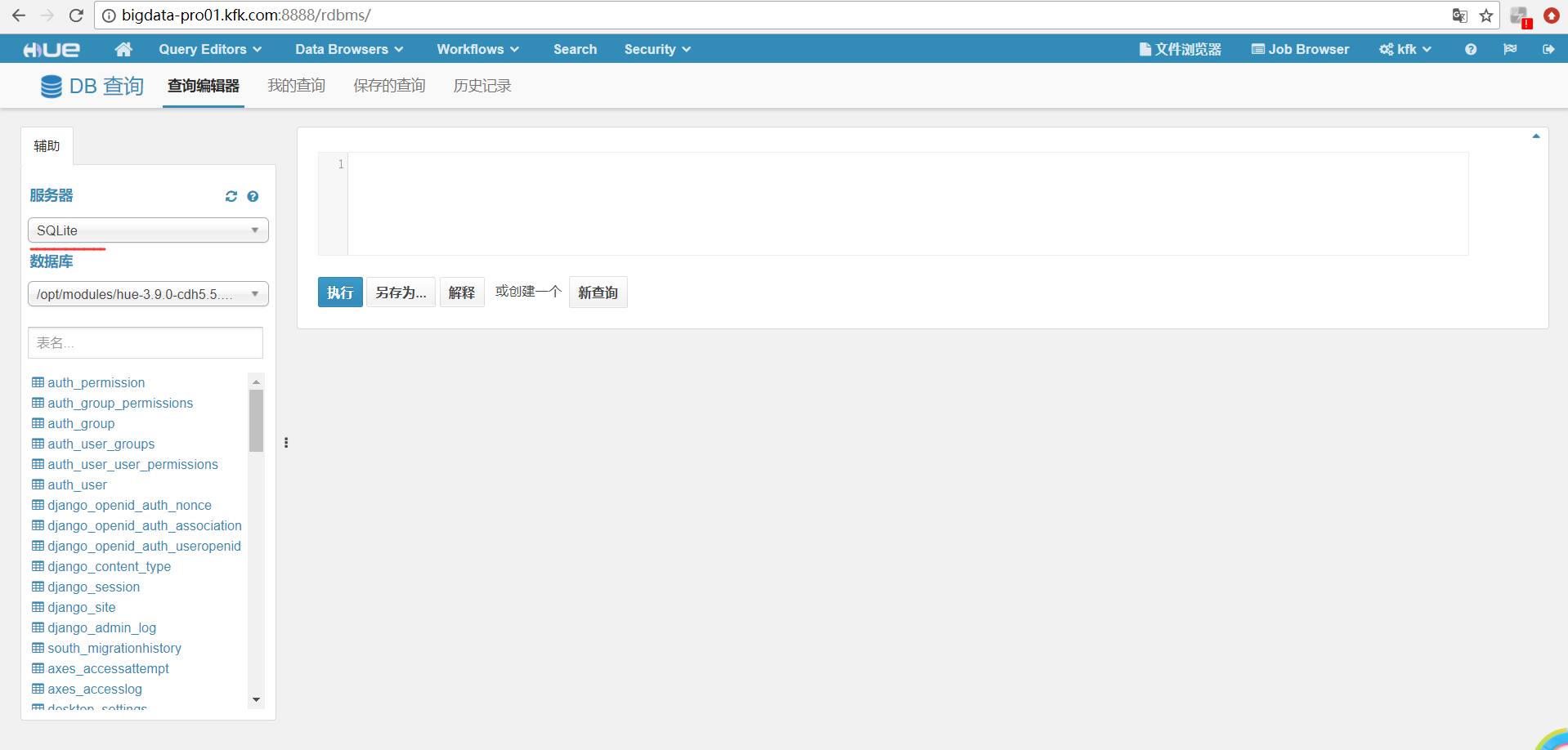

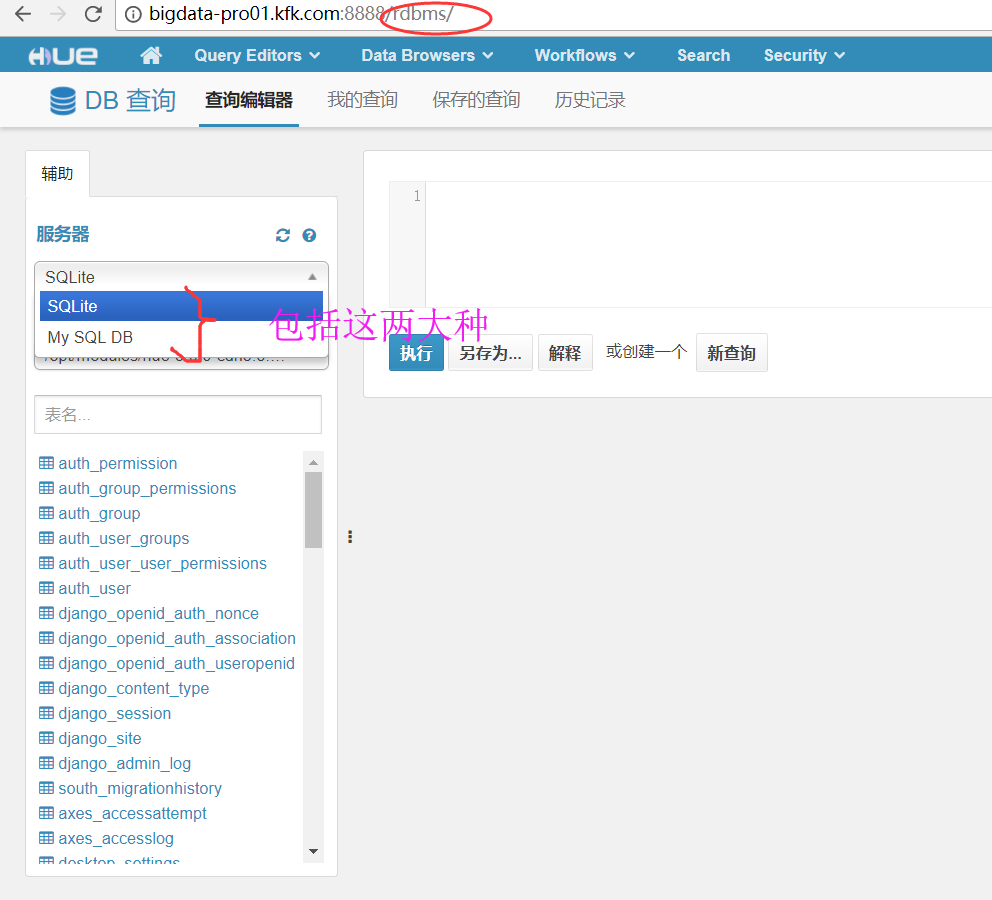

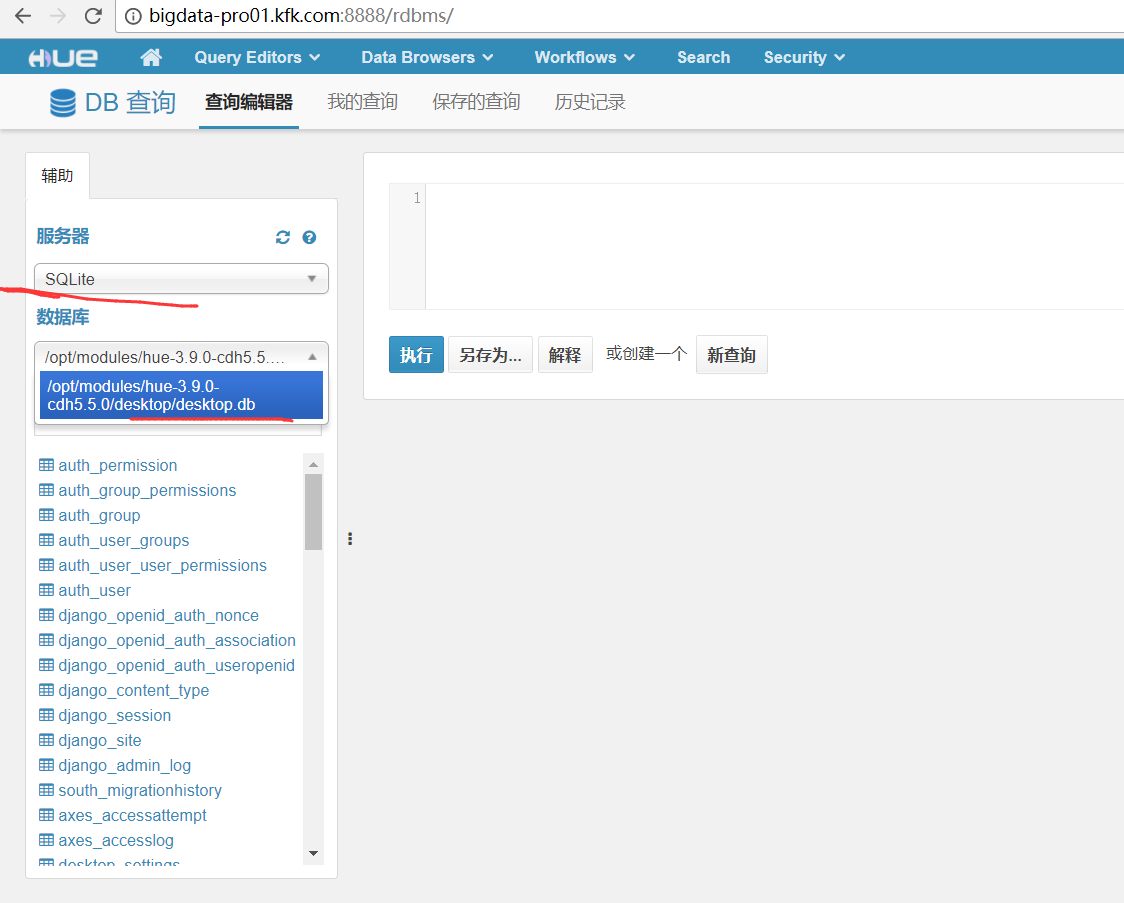

当前没有已配置的数据库。请转到您的 Hue 配置并在“rdbms”部分下方添加数据库。

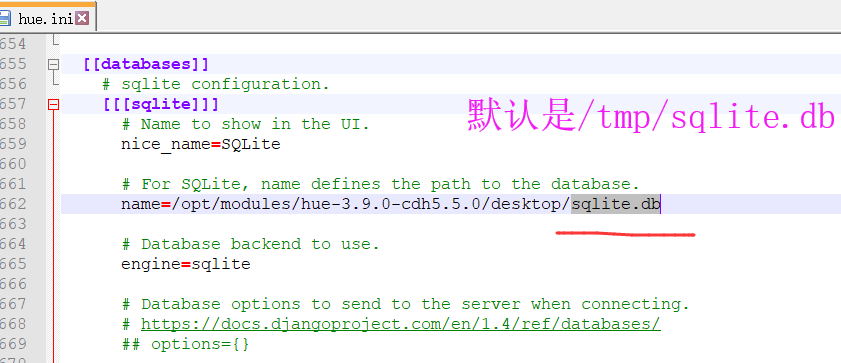

以下是,默认的

    ###########################################################################
    # Settings for the RDBMS application
    ###########################################################################

    [librdbms]
      # The RDBMS app can have any number of databases configured in the databases
      # section. A database is known by its section name
      # (IE sqlite, mysql, psql, and oracle in the list below).

      [[databases]]
        # sqlite configuration.
        ## [[[sqlite]]]
          # Name to show in the UI.
          ## nice_name=SQLite

          # For SQLite, name defines the path to the database.
          ## name=/tmp/sqlite.db

          # Database backend to use.
          ## engine=sqlite

          # Database options to send to the server when connecting.
          # https://docs.djangoproject.com/en/1.4/ref/databases/
          ## options={}

        # mysql, oracle, or postgresql configuration.
        ## [[[mysql]]]
          # Name to show in the UI.
          ## nice_name="My SQL DB"

          # For MySQL and PostgreSQL, name is the name of the database.
          # For Oracle, Name is instance of the Oracle server. For express edition
          # this is 'xe' by default.
          ## name=mysqldb

          # Database backend to use. This can be:
          # 1. mysql
          # 2. postgresql
          # 3. oracle
          ## engine=mysql

          # IP or hostname of the database to connect to.
          ## host=localhost

          # Port the database server is listening to. Defaults are:
          # 1. MySQL: 3306
          # 2. PostgreSQL: 5432
          # 3. Oracle Express Edition: 1521
          ## port=3306

          # Username to authenticate with when connecting to the database.
          ## user=example

          # Password matching the username to authenticate with when
          # connecting to the database.
          ## password=example

          # Database options to send to the server when connecting.
          # https://docs.djangoproject.com/en/1.4/ref/databases/
          ## options={}

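Most likely this section was not edited carefully enough. In the default file every entry is commented out with ##, including the [[[sqlite]]] and [[[mysql]]] headers, so Hue sees no database at all.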

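Change it to the following, removing the ## from the [[[sqlite]]] header and from nice_name, name and engine: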

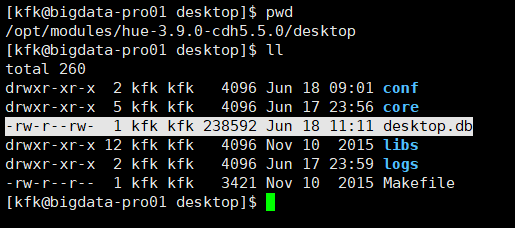

    # sqlite configuration.
    [[[sqlite]]]
      # Name to show in the UI.
      nice_name=SQLite
      # For SQLite, name defines the path to the database.
      name=/opt/modules/hue-3.9.0-cdh5.5.0/desktop/desktop.db
      # Database backend to use.
      engine=sqlite
      # Database options to send to the server when connecting.
      # https://docs.djangoproject.com/en/1.4/ref/databases/
      ## options={}
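To confirm that the edit took effect, print only the lines of `[librdbms]` that are not comments. If no `[[[sqlite]]]` or `[[[mysql]]]` line shows up, Hue still sees no database.

Below is a minimal sketch with awk. It assumes that top-level sections start in column 0, as in the stock hue.ini. `show_librdbms` is a hypothetical helper, not part of Hue.

```bash
#!/usr/bin/env bash
# show_librdbms: hypothetical helper, not part of Hue.
# Prints the non-comment, non-blank lines of the [librdbms] section of a hue.ini file.
show_librdbms() {
  awk '
    # A top-level header starts with a single "[" in column 0;
    # a "[[...]]" header in column 0 is not treated as a new top-level section.
    /^\[/ && !/^\[\[/ { in_sec = ($0 ~ /^\[librdbms\]/) }
    # Inside [librdbms], skip comment lines ("#" or "##") and blank lines.
    in_sec && !/^[[:space:]]*#/ && !/^[[:space:]]*$/ { print }
  ' "$1"
}
```

For example, run `show_librdbms /opt/modules/hue-3.9.0-cdh5.5.0/desktop/conf/hue.ini`. The path of hue.ini is an assumption; use the file you actually edited.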

Then stop and restart MySQL, and restart Hue:

[kfk@bigdata-pro01 conf]$ sudo service mysqld restart

Stopping mysqld: [ OK ]

Starting mysqld: [ OK ]

[kfk@bigdata-pro01 conf]$

[kfk@bigdata-pro01 hue-3.9.0-cdh5.5.0]$ ./build/env/bin/supervisor
[INFO] Not running as root, skipping privilege drop
starting server with options: {'daemonize': False, 'host': 'bigdata-pro01.kfk.com', 'pidfile': None, 'port': 8888, 'server_group': 'hue', 'server_name': 'localhost', 'server_user': 'hue', 'ssl_certificate': None, 'ssl_cipher_list': 'ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-AES256-GCM-SHA384:DHE-RSA-AES128-GCM-SHA256:DHE-DSS-AES128-GCM-SHA256:kEDH+AESGCM:ECDHE-RSA-AES128-SHA256:ECDHE-ECDSA-AES128-SHA256:ECDHE-RSA-AES128-SHA:ECDHE-ECDSA-AES128-SHA:ECDHE-RSA-AES256-SHA384:ECDHE-ECDSA-AES256-SHA384:ECDHE-RSA-AES256-SHA:ECDHE-ECDSA-AES256-SHA:DHE-RSA-AES128-SHA256:DHE-RSA-AES128-SHA:DHE-DSS-AES128-SHA256:DHE-RSA-AES256-SHA256:DHE-DSS-AES256-SHA:DHE-RSA-AES256-SHA:AES128-GCM-SHA256:AES256-GCM-SHA384:AES128-SHA256:AES256-SHA256:AES128-SHA:AES256-SHA:AES:CAMELLIA:DES-CBC3-SHA:!aNULL:!eNULL:!EXPORT:!DES:!RC4:!MD5:!PSK:!aECDH:!EDH-DSS-DES-CBC3-SHA:!EDH-RSA-DES-CBC3-SHA:!KRB5-DES-CBC3-SHA', 'ssl_private_key': None, 'threads': 40, 'workdir': None}

In other words, the rdbms entry named "My SQL DB" can use mysql, oracle or postgresql as its engine; MySQL is the usual choice.

Its database is then metastore: the MySQL database created for Hive to serve as Hive's metastore, as configured in hive-site.xml:

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://master:3306/metastore?createDatabaseIfNotExist=true</value>

</property>

For the rdbms entry named SQLite, the database is /opt/modules/hue-3.9.0-cdh5.5.0/desktop/desktop.db.

Problem 24:

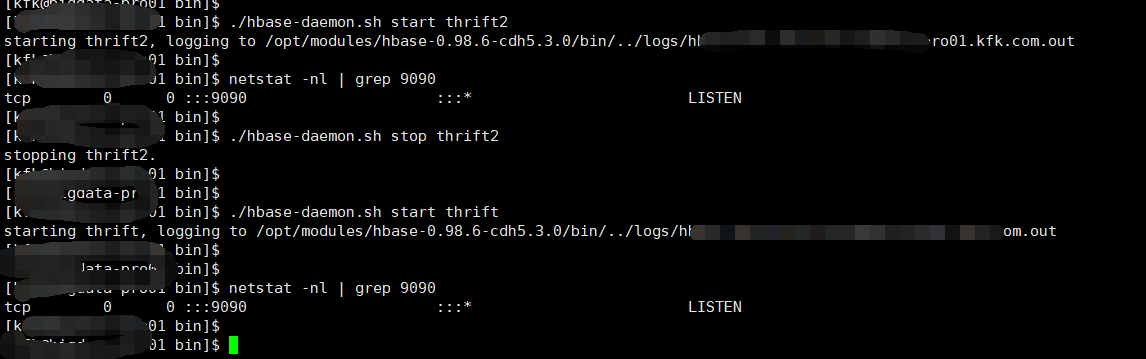

hue HBase Thrift 1 server cannot be contacted: 9090
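This message means Hue cannot open a TCP connection to the HBase Thrift server on port 9090. A first check, run from the Hue host, is whether anything is listening there at all.

Below is a minimal sketch using bash's `/dev/tcp` redirection and the coreutils `timeout` command. `port_open` is a hypothetical helper, not part of Hue or HBase.

```bash
#!/usr/bin/env bash
# port_open: hypothetical helper, not part of Hue or HBase.
# Returns 0 if a TCP connection to host:port succeeds within 3 seconds, non-zero otherwise.
# /dev/tcp is a bash feature, so the connection attempt is run through bash explicitly.
port_open() {
  local host="$1" port="$2"
  timeout 3 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null
}
```

For example: `port_open bigdatamaster 9090 && echo up || echo down`. "down" means no Thrift server is listening. The same check works for HiveServer2 on port 10000.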

Problem 25:

Api Error: Invalid method name: 'getTableNames'

Cause

After some research, I suspected a mismatch between the Thrift version used by the client (Hue) and the one used by the HBase Thrift server.

Sure enough, the Thrift server had been started as thrift2, while the client was connecting with thrift (Thrift 1).

Solution

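Since the root cause is that client and server use different Thrift versions, the fix is to make them match, and either side could be changed.

Here the server side is changed: restart the HBase Thrift server in thrift1 mode, the version the client uses.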

hbase-daemon.sh stop thrift2    # stop the thrift2 server

hbase-daemon.sh start thrift    # start the Thrift 1 server
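To see which flavor is actually running before and after the restart, look at the Thrift server's JVM main class:

- thrift1 runs `org.apache.hadoop.hbase.thrift.ThriftServer`.
- thrift2 runs `org.apache.hadoop.hbase.thrift2.ThriftServer`.

Below is a minimal sketch, assuming those stock HBase class names. `thrift_flavor` is a hypothetical helper that reads process command lines on stdin.

```bash
#!/usr/bin/env bash
# thrift_flavor: hypothetical helper, not part of HBase.
# Reads process command lines on stdin (e.g. from `ps -eo args`) and prints
# "thrift2", "thrift1" or "none" depending on which HBase Thrift main class appears.
# If both are present, "thrift2" is reported, because that one still has to be stopped.
thrift_flavor() {
  local procs
  procs=$(cat)
  if printf '%s\n' "$procs" | grep -q 'org\.apache\.hadoop\.hbase\.thrift2\.ThriftServer'; then
    echo thrift2
  elif printf '%s\n' "$procs" | grep -q 'org\.apache\.hadoop\.hbase\.thrift\.ThriftServer'; then
    echo thrift1
  else
    echo none
  fi
}
```

For example, after the restart `ps -eo args | thrift_flavor` should print thrift1.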

Problem 26:

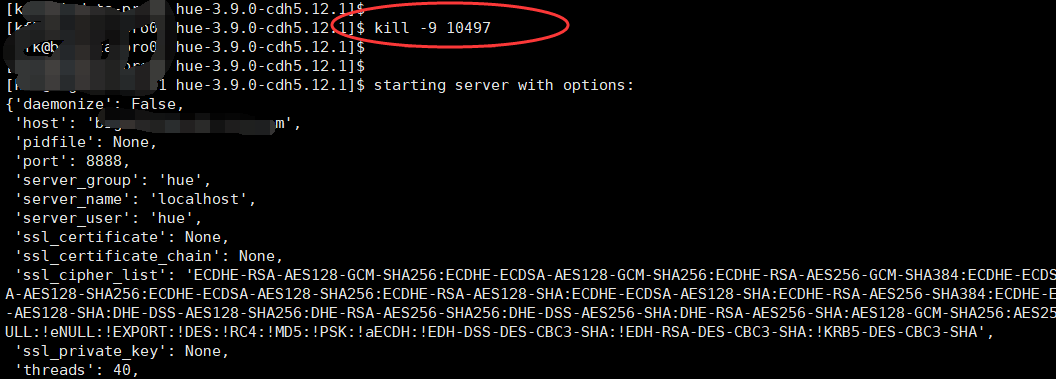

socket.error: [Errno 98] Address already in use

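Cause:

Hue was still running when you made a change, for example editing hue.ini from the command line. You then started Hue again without properly shutting down the running instance, so its port (8888 in this setup) was still taken.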
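Before starting Hue again, stop the old instance: press Ctrl+C in the terminal running supervisor, or kill its process. Then make sure port 8888 has been released.

Below is a minimal sketch that polls until nothing accepts connections on the port. It assumes bash and the coreutils `timeout` command. `wait_port_free` is a hypothetical helper, and the host and port come from the startup log above.

```bash
#!/usr/bin/env bash
# wait_port_free: hypothetical helper, not part of Hue.
# Polls host:port once per second until no TCP connection is accepted there,
# giving up (return 1) after max_tries attempts (default 30).
# It only detects a process that is still listening, i.e. an old Hue left running.
wait_port_free() {
  local host="$1" port="$2" max_tries="${3:-30}" i=0
  while [ "$i" -lt "$max_tries" ]; do
    # A failed connection attempt means nothing is listening any more.
    if ! timeout 3 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null; then
      return 0
    fi
    sleep 1
    i=$((i + 1))
  done
  echo "port ${port} on ${host} is still in use" >&2
  return 1
}
```

For example: `wait_port_free bigdata-pro01.kfk.com 8888 && ./build/env/bin/supervisor`.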

Problem 27:

Problem 28:

desktop_settings' doesn't exist

Then restart the Hue process:

[hadoop@bigdatamaster hue]$ build/env/bin/supervisor

With the configuration above in place, starting Hue and opening it in a browser fails, because the MySQL database has not been initialized:

DatabaseError: (1146, "Table 'hue.desktop_settings' doesn't exist")

or

ProgrammingError: (1146, "Table 'hive.django_session' doesn't exist")

Initialize the database. In /home/hadoop/app/hue-3.9.0-cdh5.5.4/build/env, run:

bin/hue syncdb

bin/hue migrate

After these finish, the corresponding Hue tables can be seen in MySQL.
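The two initialization commands can be wrapped so they run from any directory and fail clearly if the Hue path is wrong. Below is a minimal sketch that runs exactly the two commands shown, assuming the Hue home used in this post. `init_hue_db` is a hypothetical helper, not part of Hue.

```bash
#!/usr/bin/env bash
# init_hue_db: hypothetical helper, not part of Hue.
# Runs "hue syncdb" and then "hue migrate" for the Hue installation in $1;
# migrate only runs if syncdb succeeded.
init_hue_db() {
  local hue_home="$1"
  local hue_bin="${hue_home}/build/env/bin/hue"
  if [ ! -x "$hue_bin" ]; then
    echo "not found: $hue_bin" >&2
    return 1
  fi
  "$hue_bin" syncdb && "$hue_bin" migrate
}
```

For example: `init_hue_db /home/hadoop/app/hue-3.9.0-cdh5.5.4`.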

Start Hue again; it can now be accessed normally.

Problem 29:

Running build/env/bin/supervisor fails with: No such file or directory

Solution:

If you really cannot get Hue built and installed, copy an already-installed Hue from someone else's machine.

Don't worry: it can be dropped into either an Apache cluster or a CDH cluster. I have done this myself.

You only need to redo the symlinks by hand, which is not hard: delete them and recreate them with ln -s.
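Recreating a symlink is a one-liner with GNU `ln`:

- `-s` makes a symbolic link.
- `-f` replaces an existing link.
- `-n` stops `ln` from creating the new link inside the directory the old link points to.

Below is a minimal sketch. `relink` is a hypothetical helper, and the example layout (a `hue` link in /home/hadoop/app pointing at the versioned directory) is an assumption.

```bash
#!/usr/bin/env bash
# relink: hypothetical helper, not part of Hue.
# Makes $2 a symbolic link to $1, replacing any existing link at $2.
# -n keeps ln from descending into the directory an old link points to.
relink() {
  local target="$1" link="$2"
  ln -sfn -- "$target" "$link"
}
```

For example: `relink /home/hadoop/app/hue-3.9.0-cdh5.5.4 /home/hadoop/app/hue`.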

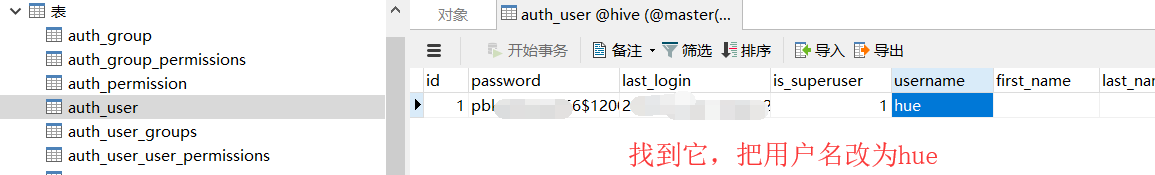

Problem 30: Forgot the password for the Hue web login?

For example, I started with the user hadoop and the password hadoop. Now I want to change them to the user hue with the password hue.

At this point the username has been changed from hadoop to hue, but the password has not been changed yet. Don't worry.
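One way to set the new password from the command line is Django's `changepassword` management command. Hue's `build/env/bin/hue` script should expose it, since it wraps Django's command-line entry point; the command prompts for the new password twice. Below is a minimal sketch, assuming the Hue home used in this post. `reset_hue_password` is a hypothetical helper, not part of Hue.

```bash
#!/usr/bin/env bash
# reset_hue_password: hypothetical helper, not part of Hue.
# Runs Django's "changepassword" command through Hue's management script for user $2.
# The command itself prompts interactively for the new password.
reset_hue_password() {
  local hue_home="$1" user="$2"
  local hue_bin="${hue_home}/build/env/bin/hue"
  if [ ! -x "$hue_bin" ]; then
    echo "not found: $hue_bin" >&2
    return 1
  fi
  "$hue_bin" changepassword "$user"
}
```

For example: `reset_hue_password /home/hadoop/app/hue-3.9.0-cdh5.5.4 hue`.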

You can also follow my personal blogs:

http://www.cnblogs.com/zlslch/ 和 http://www.cnblogs.com/lchzls/ http://www.cnblogs.com/sunnyDream/

For details, see: http://www.cnblogs.com/zlslch/p/7473861.html
