Assignment source: https://edu.cnblogs.com/campus/gzcc/GZCC-16SE1/homework/3002

0. Get the click count from a news URL and wrap it in a function

- newsUrl

- newsId(re.search())

- clickUrl(str.format())

- requests.get(clickUrl)

- re.search()/.split()

- str.lstrip(),str.rstrip()

- int

- wrap it in a function

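```python
import re

# Click count
def clickCount(url):
    # The news ID is the run of digits right before ".html" in the news URL
    # clickNum = re.findall(r'/(\d*)\.html', url)[0]
    clickNum = re.search(r'/(\d*)\.html', url).group(1)
    clickurl = 'http://oa.gzcc.cn/api.php?op=count&id={}&modelid=80'.format(clickNum)
    # html() is the fetch-and-parse helper from the full code;
    # keep the text after the last 'html', which holds the number
    count = html(clickurl).text.split()[0].split('html')[-1]
    # strip the ( ) ' ; characters around the number
    for ch in "()';":
        count = count.replace(ch, '')
    return int(count)
```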
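The outline above names `str.lstrip()` / `str.rstrip()`, while `clickCount()` strips the wrapper with a replace loop. Here is a minimal sketch of the lstrip/rstrip route, with the parsing kept apart from the HTTP call so it can be checked offline. It assumes the count API answers with JavaScript of the form `$('#hits').html('174');`, i.e. the number follows the last `.html` and is wrapped in `('` and `');`, which is what the original split/replace logic implies. `news_id`, `click_url`, `parse_click_count` and `fetch_click_count` are hypothetical names.

```python
import re


def news_id(news_url):
    """Extract the numeric news ID from a URL ending in /<digits>.html."""
    match = re.search(r'/(\d+)\.html$', news_url)
    if match is None:
        raise ValueError('no news ID in ' + news_url)
    return match.group(1)


def click_url(news_url):
    """Build the count-API URL used by clickCount()."""
    return 'http://oa.gzcc.cn/api.php?op=count&id={}&modelid=80'.format(news_id(news_url))


def parse_click_count(api_text):
    """Assumed format: ...html('N'); -> take the last '.html' part, strip ('...');"""
    tail = api_text.strip().split('.html')[-1]
    return int(tail.lstrip("('").rstrip("');"))


def fetch_click_count(news_url):
    import requests  # imported here so the parsing helpers work without requests
    return parse_click_count(requests.get(click_url(news_url)).text)
```

Because `parse_click_count` only sees a string, a change in the API's reply format shows up as a failing parse rather than a silent wrong number.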

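- Also wrap fetching the publish time and converting its type in a function

```python
from datetime import datetime

# Publish time
def time(url):
    # html() is the fetch-and-parse helper from the full code; fetch the page once
    info = html(url).select('div .show-info')[0].text.split()
    time1 = info[0].split(':')[1]  # the date after the label
    time2 = info[1]                # HH:MM:SS
    now1 = datetime.strptime(time1 + ' ' + time2, '%Y-%m-%d %H:%M:%S')
    # strftime keeps the {y}...{S} placeholders; format() fills in the Chinese units
    newstime = now1.strftime('%Y{y}-%m{m}-%d{d} %H{H}:%M{M}:%S{S}').format(
        y='年', m='月', d='日', H='时', M='分', S='秒')
    return newstime
```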
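The date handling in `time(url)` can be checked without the network by feeding it a show-info string directly. This is a sketch that assumes the text begins with a label, a colon, the date and then the time, as the `split()` indexes above imply; `parse_publish_time` is a hypothetical name and the sample label used in testing is made up.

```python
from datetime import datetime


def parse_publish_time(info_text):
    """Turn '<label>:YYYY-MM-DD HH:MM:SS ...' into the formatted time string."""
    parts = info_text.split()
    date_part = parts[0].split(':', 1)[1]  # drop the label before the first ':'
    stamp = datetime.strptime(date_part + ' ' + parts[1], '%Y-%m-%d %H:%M:%S')
    # strftime passes the {y}, {m}, ... placeholders through unchanged,
    # so str.format can insert the Chinese unit characters afterwards
    return stamp.strftime('%Y{y}-%m{m}-%d{d} %H{H}:%M{M}:%S{S}').format(
        y='年', m='月', d='日', H='时', M='分', S='秒')
```

For example, `parse_publish_time('发布时间:2019-04-01 11:57:00 作者:张三')` gives `'2019年-04月-01日 11时:57分:00秒'`.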

1. Get the news details from a news URL: a dict, anews

```python
# News dictionary
def newsdist(url):
    newsdis = {}
    newsdis['newstitle'] = title(url)
    newsdis['newstime'] = time(url)
    newsdis['newsfrom'] = froms(url)    # publishing unit
    newsdis['newswrite'] = write(url)   # author
    newsdis['newsclick'] = clickCount(url)
    # newsdis['newscont'] = cont(url)  # article body, currently disabled
    return newsdis
```
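`newsdist()` calls helpers that each download the article again through `html(url)`, so one article costs several requests. One way to cut this, sketched here under assumptions, is to read the show-info text once and split it into label/value pairs. `split_show_info` is a hypothetical helper, and the `label:value` layout it expects is inferred from the `split()` indexes used in `time()`, `write()` and `froms()`.

```python
def split_show_info(info_text):
    """Split 'label:value' tokens of the show-info text into a dict.

    A token whose part before ':' is all digits (the HH:MM:SS time)
    is appended to the previous field instead of starting a new one.
    """
    fields = {}
    last = None
    for token in info_text.split():
        label, sep, value = token.partition(':')
        if sep and not label.isdigit():
            fields[label] = value
            last = label
        elif last is not None:
            fields[last] += ' ' + token
    return fields
```

With the helper from the full code, a single download would then be `info = split_show_info(html(url).select('div .show-info')[0].text)`, and the fields are looked up by their on-page labels, which should be checked against the real page.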

2. Get the news URLs from a list-page URL: list.append(dict), alist

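```python
# News dicts for every article on one list page
def alist(url):
    soup = html(url)  # download the list page once instead of once per article
    nets = soup.select('div .list-container li')
    descriptions = soup.select('div .news-list-description')
    newslist = []
    # pair each <li> with its description instead of keeping a manual counter k
    for li, desc in zip(nets, descriptions):
        net = li.find('a').get('href')  # URL of one news article
        newsdis = newsdist(net)
        newsdis['newsurl'] = net
        newsdis['newsdescription'] = desc.text
        newslist.append(newsdis)
    return newslist
```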
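The append(dict) pattern in `alist()` can be separated from the network code. The sketch below assumes the links and descriptions have already been selected from one list page; `build_news_list` and its `get_details` parameter are hypothetical names (in the original, `newsdist` plays the role of `get_details`), and the `newsurl` / `newsdescription` keys are illustrative.

```python
def build_news_list(links, descriptions, get_details):
    """Return one dict per article: its details plus URL and description."""
    newslist = []
    for link, description in zip(links, descriptions):
        news = get_details(link)  # e.g. newsdist(link) in the original code
        news['newsurl'] = link
        news['newsdescription'] = description
        newslist.append(news)     # list.append(dict)
    return newslist
```

`zip()` stops at the shorter input, so a page with fewer descriptions than links is truncated rather than raising `IndexError` as the counter-based version would.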

3. Generate the URLs of all list pages and collect all news: list.extend(list), allnews

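```python
allnews = []  # create the result list once, before the loop
# range(43, 45) covers 2 pages; the requirement below asks for 10
for i in range(43, 45):
    if i == 1:
        url = 'http://news.gzcc.cn/html/xiaoyuanxinwen/'
    else:
        url = 'http://news.gzcc.cn/html/xiaoyuanxinwen/{}.html'.format(i)
    # concatenate lists: add this page's news dicts to the overall list
    allnews.extend(alist(url))
```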
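Generating the list-page URLs can be split out of the crawl loop. In this sketch, `list_page_urls` is a hypothetical helper that reproduces the page-1 special case from the loop and returns `count` consecutive pages, so the 10-page requirement becomes `list_page_urls(start, 10)`. The result list is created once and extended per page; rebinding it inside the loop would keep only the last page.

```python
BASE = 'http://news.gzcc.cn/html/xiaoyuanxinwen/'


def list_page_urls(first_page, count):
    """URLs of `count` consecutive list pages starting at `first_page`.

    Page 1 is the bare directory URL; every other page is '<n>.html'.
    """
    urls = []
    for page in range(first_page, first_page + count):
        urls.append(BASE if page == 1 else '{}{}.html'.format(BASE, page))
    return urls
```

With the original `alist()`, the crawl then reads `allnews = []` followed by `for url in list_page_urls(43, 10): allnews.extend(alist(url))`.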

*Each student crawls 10 list pages, starting from the page whose number matches the last digits of their student ID.

4. Set a reasonable interval between requests

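```python
import random
import time

# pause for a random 0 to 3 seconds between requests
time.sleep(random.random() * 3)
```

If this goes into the same script as the `time(url)` function from step 0, a later `def time(url)` rebinds the name and `time.sleep` fails with an AttributeError; in that case use `from time import sleep` and call `sleep(random.random() * 3)`.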
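The random pause belongs between requests, not after the last one. Here is a minimal sketch of a crawl helper, assuming a caller-supplied `fetch` function (for example the `html` helper from the full code); `crawl`, `min_delay` and `max_delay` are hypothetical names. Using `random.uniform` with a lower bound keeps every gap at least `min_delay` seconds, whereas `random.random()*3` can come out close to zero.

```python
import random
import time


def crawl(urls, fetch, min_delay=1.0, max_delay=3.0, sleep=time.sleep):
    """Call fetch(url) for each URL, pausing a random interval between calls.

    `sleep` can be replaced (e.g. by list.append) to check the delays
    without actually waiting.
    """
    results = []
    for index, url in enumerate(urls):
        if index:  # no pause before the first request
            sleep(random.uniform(min_delay, max_delay))
        results.append(fetch(url))
    return results
```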

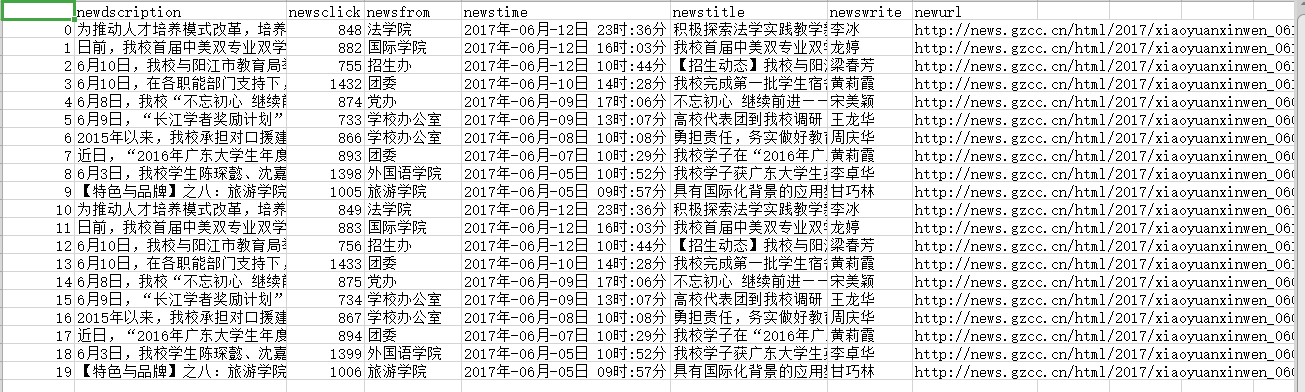

5. Do some simple data processing with pandas and save the result

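Save to a CSV or Excel file, for example `newsdf.to_csv(r'F:\duym\爬虫\gzccnews.csv')`:

```python
import pandas as pd

newsdf = pd.DataFrame(allnews)  # one row per news dict
print(newsdf)
# utf-8-sig adds a BOM so Excel displays the Chinese text correctly
newsdf.to_csv(r'F:\xinwen.csv', encoding='utf-8-sig')
```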
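The step also asks for some simple processing before saving. Here is a sketch of two such steps on the list of news dicts, assuming the `newsclick` and `newsfrom` keys produced by `newsdist()`: sort the articles by click count and total the clicks per publishing unit. The function names are hypothetical.

```python
import pandas as pd


def summarize_news(allnews):
    """DataFrame of all news, most-clicked first, with a fresh 0..n-1 index."""
    newsdf = pd.DataFrame(allnews)
    return newsdf.sort_values('newsclick', ascending=False).reset_index(drop=True)


def clicks_by_source(newsdf):
    """Total clicks per publishing unit (the newsfrom column), largest first."""
    return newsdf.groupby('newsfrom')['newsclick'].sum().sort_values(ascending=False)
```

To get an Excel file instead of CSV, `summarize_news(allnews).to_excel('xinwen.xlsx')` works as long as an Excel writer such as openpyxl is installed.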

Full code:

```python
import random
import re
from datetime import datetime
from time import sleep  # not "import time": the time() function below would hide the module

import pandas as pd
import requests
from bs4 import BeautifulSoup as bs


# Download a page and parse it
def html(url):
    response = requests.get(url=url)
    response.encoding = 'utf-8'
    soup = bs(response.text, 'html.parser')
    return soup


# Click count
def clickCount(url):
    # clickNum = re.findall(r'/(\d*)\.html', url)[0]
    clickNum = re.search(r'/(\d*)\.html', url).group(1)
    clickurl = 'http://oa.gzcc.cn/api.php?op=count&id={}&modelid=80'.format(clickNum)
    count = html(clickurl).text.split()[0].split('html')[-1]
    for ch in "()';":
        count = count.replace(ch, '')
    return int(count)


# Title
def title(url):
    return html(url).select('div .show-title')[0].text


# Publish time
def time(url):
    info = html(url).select('div .show-info')[0].text.split()
    Time = info[0].split(':')[1] + ' ' + info[1]
    now1 = datetime.strptime(Time, '%Y-%m-%d %H:%M:%S')
    return now1.strftime('%Y{y}-%m{m}-%d{d} %H{H}:%M{M}:%S{S}').format(
        y='年', m='月', d='日', H='时', M='分', S='秒')


# Publishing unit
def froms(url):
    return html(url).select('div .show-info')[0].text.split()[4].split(':')[1]


# Author
def write(url):
    return html(url).select('div .show-info')[0].text.split()[2].split(':')[1]


# Content
def cont(url):
    cont = html(url).select('div .show-content')[0].text
    # remove spaces, newlines, full-width spaces and non-breaking spaces
    for ch in ' \n\u3000\xa0':
        cont = cont.replace(ch, '')
    return cont


# News dictionary
def newsdist(url):
    newsdis = {}
    newsdis['newstitle'] = title(url)
    newsdis['newstime'] = time(url)
    newsdis['newsfrom'] = froms(url)
    newsdis['newswrite'] = write(url)
    newsdis['newsclick'] = clickCount(url)
    # newsdis['newscont'] = cont(url)
    return newsdis


# News dicts for every article on one list page
def alist(url):
    soup = html(url)  # fetch the list page once
    nets = soup.select('div .list-container li')
    descriptions = soup.select('div .news-list-description')
    newslist = []
    for li, desc in zip(nets, descriptions):
        net = li.find('a').get('href')  # URL of one news article
        newsdis = newsdist(net)
        newsdis['newsurl'] = net
        newsdis['newsdescription'] = desc.text
        newslist.append(newsdis)
        sleep(random.random() * 3)  # crawl interval from step 4
    return newslist


allnews = []
# 2 pages here; the assignment asks for 10 starting at the student-ID suffix
for i in range(43, 45):
    if i == 1:
        url = 'http://news.gzcc.cn/html/xiaoyuanxinwen/'
    else:
        url = 'http://news.gzcc.cn/html/xiaoyuanxinwen/{}.html'.format(i)
    allnews.extend(alist(url))  # concatenate lists

newsdf = pd.DataFrame(allnews)
print(newsdf)
newsdf.to_csv(r'F:\xinwen.csv', encoding='utf-8-sig')
```

Output: