Source: GitHub

https://github.com/misrn/k8s-tls

Procedure

Preparation

1. Back up the existing certificate directory

mv /etc/kubernetes /etc/kubernetes.bak
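
One thing to watch with a plain `mv`: if /etc/kubernetes.bak already exists as a directory, the source is moved inside it instead of replacing it. A minimal sketch of a guarded backup follows; the function name `backup_dir` is hypothetical and not part of the k8s-tls repository.

```shell
# Hypothetical helper: move a directory to a backup path, but refuse to
# continue if the backup path already exists.
backup_dir() {
    src="$1"
    dst="$2"
    if [ -e "$dst" ]; then
        echo "refusing to overwrite existing backup: $dst" >&2
        return 1
    fi
    mv "$src" "$dst"
}

# Usage (same paths as the command above):
# backup_dir /etc/kubernetes /etc/kubernetes.bak
```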

2. Cluster overview

Version: 1.13.3

Nodes:

The cluster has three nodes in total (each node is both a master and a worker)

192.168.122.11 node1

192.168.122.12 node2

192.168.122.13 node3

Enter the working directory

I ran these steps on node1

- cd /usr/src

- git clone https://github.com/fandaye/k8s-tls.git && cd k8s-tls/

- chmod +x ./run.sh

Edit the configuration files

- apiserver.json

{
  "CN": "kube-apiserver",
  "hosts": [
    "192.168.122.11",  # master and node
    "192.168.122.12",  # master and node
    "192.168.122.13",  # master and node
    "10.233.0.1",      # IP of the kubernetes svc inside the cluster
    "127.0.0.1",
    "172.17.0.1",      # IP of the docker0 interface
    "node1",
    "node2",
    "node3",
    "kubernetes",
    "kubernetes.default",
    "kubernetes.default.svc",
    "kubernetes.default.svc.cluster",
    "kubernetes.default.svc.cluster.local"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  }
}

(The # annotations are explanatory only. JSON has no comment syntax, so leave them out of the real file.)

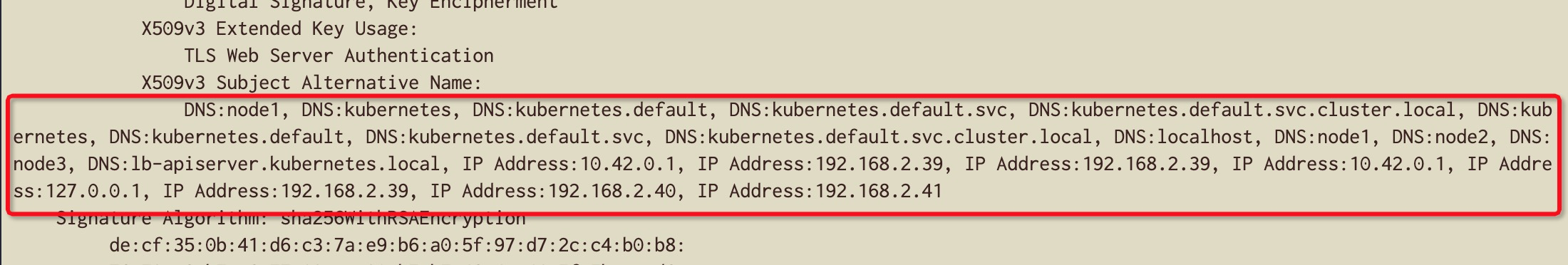

Note: if you are not sure which IPs to add, look at the apiserver.crt of a healthy cluster, for example:

openssl x509 -in apiserver.crt -text -noout
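
The full `-text` dump is long, and only the Subject Alternative Name line matters here. As a rough sketch, the hypothetical filter `list_sans` below reads that dump on stdin and prints one SAN entry per line. It assumes the usual OpenSSL layout, where the entries sit on the line right after the "Subject Alternative Name" header, and a grep that supports `-A`.

```shell
# Hypothetical filter: read `openssl x509 -text -noout` output on stdin and
# print each Subject Alternative Name entry on its own line.
list_sans() {
    grep -A1 'Subject Alternative Name' \
        | tail -n 1 \
        | tr ',' '\n' \
        | sed 's/^[[:space:]]*//'
}

# Usage:
# openssl x509 -in apiserver.crt -text -noout | list_sans
```

Every entry printed for the healthy cluster is a candidate for the "hosts" list in apiserver.json.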

- config.yaml

apiVersion: kubeadm.k8s.io/v1alpha1
kind: MasterConfiguration
kubernetesVersion: v1.13.3
networking:
  podSubnet: 10.233.64.0/18 # change this to the pod CIDR of your own cluster
apiServerCertSANs:
- node1
- node2
- node3
- 192.168.122.11
- 192.168.122.12
- 192.168.122.13
apiServerExtraArgs:
  endpoint-reconciler-type: "lease"
etcd:
  endpoints:
  - https://192.168.122.11:2379
  - https://192.168.122.12:2379
  - https://192.168.122.13:2379
token: "deed3a.b3542929fcbce0f0"
tokenTTL: "0"

For now these are the only two files that need to be changed

- Run run.sh and copy the configuration files

./run.sh

# After the script has run, the /etc/kubernetes/pki directory is generated

# Copy the kubelet.env file and the manifests directory to every master node
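
The two comments above can be sketched as a check followed by a copy. Both function names are hypothetical. The sketch assumes that kubelet.env and manifests sit directly under /etc/kubernetes, that root SSH access to the other masters is available, and that /etc/kubernetes already exists on them; adjust all of these to the actual setup.

```shell
# Hypothetical helper: print the expiry date of every .crt file in a directory.
show_cert_dates() {
    for crt in "$1"/*.crt; do
        [ -f "$crt" ] || continue   # skip the unexpanded glob when nothing matches
        printf '%s: ' "$crt"
        openssl x509 -in "$crt" -noout -enddate
    done
}

# Hypothetical helper: copy kubelet.env and the manifests directory to the
# same location on each host given as an argument.
# Assumption: both live directly under /etc/kubernetes.
copy_to_masters() {
    for host in "$@"; do
        scp -r /etc/kubernetes/kubelet.env /etc/kubernetes/manifests \
            "root@${host}:/etc/kubernetes/"
    done
}

# Usage:
# show_cert_dates /etc/kubernetes/pki
# copy_to_masters node2 node3
```

Checking the dates first gives a quick confirmation that run.sh really produced fresh certificates before anything is pushed to the other nodes.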

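- Go to /etc/kubernetes/pki/ and edit node.sh (this script has to be run on every machine)

Note: at this point we are on node1

Change these two parameters:

ip="192.168.122.11" # IP of node1; when running on another node, change it to that node's IP

NODE="node1" # when running on another node, change it to that node's hostname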
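
Since the two values have to be changed by hand on every machine, a small lookup can reduce copy-and-paste mistakes. This is only a sketch: `node_ip` is a hypothetical helper, and the mapping simply repeats the node table from the cluster overview.

```shell
# Hypothetical helper: map a hostname to its IP, using the node table from
# the cluster overview. Fails for any name that is not in the table.
node_ip() {
    case "$1" in
        node1) echo "192.168.122.11" ;;
        node2) echo "192.168.122.12" ;;
        node3) echo "192.168.122.13" ;;
        *) echo "unknown node: $1" >&2; return 1 ;;
    esac
}

# Usage on any of the three machines (uname -n prints the hostname):
# NODE="$(uname -n)"
# ip="$(node_ip "$NODE")"
```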

- Update the ~/.kube/config file

cp /etc/kubernetes/admin.conf ~/.kube/config
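
The plain `cp` fails if ~/.kube does not exist yet, and it silently overwrites the previous config. A slightly more defensive sketch follows; the function name `install_kubeconfig` and the `.old` suffix are choices made for this illustration only.

```shell
# Hypothetical helper: install a kubeconfig, creating the target directory
# and keeping a copy of any previous file next to it with an .old suffix.
install_kubeconfig() {
    src="$1"
    dst="$2"
    mkdir -p "$(dirname "$dst")"
    if [ -f "$dst" ]; then
        cp -p "$dst" "$dst.old"
    fi
    cp "$src" "$dst"
    chmod 600 "$dst"   # the file holds client credentials
}

# Usage:
# install_kubeconfig /etc/kubernetes/admin.conf "$HOME/.kube/config"
```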

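Problems encountered

After the certificates were renewed I restarted docker and kubelet. The apiserver, controller-manager and scheduler ran normally, but kube-proxy, dns and calico all ran into problems.

- kube-proxy

It could not connect to 127.0.0.1:6443 and reported a certificate error.

Analysis: port 6443 on 127.0.0.1 was reachable from the machine itself, which rules out a problem with the apiserver service.

Checking the kube-proxy related objects with kubectl (sa, secret, clusterrole and so on) showed that they were still the ones created before the certificates expired. That essentially pinpoints the problem: the kube-proxy related resources have to be rebuilt.

- calico and calico-controller-manage

calico-controller-manage:

calico: network plugin unauthri

The calico related objects shown by kubectl had been updated, yet authentication still failed, so these resources have to be rebuilt as well.

- dns

It could not connect to 10.233.0.1. This error is caused by the broken network, so the root cause lies with kube-proxy and calico.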
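
The kubectl inspection described above can be sketched roughly as follows. `list_component_objects` is a hypothetical helper; it lists the ServiceAccounts and Secrets in kube-system whose lines mention a component, together with their creation timestamps, so objects that predate the renewal stand out.

```shell
# Hypothetical helper: show kube-system ServiceAccounts and Secrets that
# mention the given component, with their creation timestamps.
list_component_objects() {
    component="$1"
    kubectl -n kube-system get serviceaccounts,secrets \
        -o custom-columns=KIND:.kind,NAME:.metadata.name,CREATED:.metadata.creationTimestamp \
        | grep -i -- "$component"
}

# Usage:
# list_component_objects kube-proxy
# list_component_objects calico
```

A creation timestamp older than the renewal suggests the token in that Secret was issued under the old keys. That would be consistent with the certificate and authentication errors above, although it is not proof on its own.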

About kube-proxy

As the analysis above shows, the kube-proxy resources have to be rebuilt. The relevant YAML files are listed below.

- sa.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: kube-proxy
  namespace: kube-system

- ClusterRoleBinding.yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:node-proxier
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:node-proxier
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: User
  name: system:kube-proxy

- configmap.yaml

apiVersion: v1
data:
  config.conf: |-
    apiVersion: kubeproxy.config.k8s.io/v1alpha1
    bindAddress: 0.0.0.0
    clientConnection:
      acceptContentTypes: ""
      burst: 10
      contentType: application/vnd.kubernetes.protobuf
      kubeconfig: /var/lib/kube-proxy/kubeconfig.conf
      qps: 5
    clusterCIDR: 10.233.64.0/18
    configSyncPeriod: 15m0s
    conntrack:
      max: null
      maxPerCore: 32768
      min: 131072
      tcpCloseWaitTimeout: 1h0m0s
      tcpEstablishedTimeout: 24h0m0s
    enableProfiling: false
    healthzBindAddress: 0.0.0.0:10256
    hostnameOverride: ""
    iptables:
      masqueradeAll: false
      masqueradeBit: 14
      minSyncPeriod: 0s
      syncPeriod: 30s
    ipvs:
      excludeCIDRs: null
      minSyncPeriod: 0s
      scheduler: rr
      syncPeriod: 30s
    kind: KubeProxyConfiguration
    metricsBindAddress: 127.0.0.1:10249
    mode: ipvs
    nodePortAddresses: []
    oomScoreAdj: -999
    portRange: ""
    resourceContainer: /kube-proxy
    udpIdleTimeout: 250ms
  kubeconfig.conf: |-
    apiVersion: v1
    kind: Config
    clusters:
    - cluster:
        certificate-authority: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
        server: https://127.0.0.1:6443
      name: default
    contexts:
    - context:
        cluster: default
        namespace: default
        user: default
      name: default
    current-context: default
    users:
    - name: default
      user:
        tokenFile: /var/run/secrets/kubernetes.io/serviceaccount/token
kind: ConfigMap
metadata:
  labels:
    app: kube-proxy
  name: kube-proxy
  namespace: kube-system
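
One possible reading of "rebuild" for the three files above is sketched below. It rests on assumptions: the function name is hypothetical, the files are in the current directory, and the kube-proxy pods carry the label k8s-app=kube-proxy (the kubeadm default, which may differ in other setups). Deleting the ServiceAccount first is meant to get a fresh token issued for it instead of reusing the old one.

```shell
# Hypothetical helper: recreate the kube-proxy resources, then restart the pods.
rebuild_kube_proxy() {
    # Drop the old ServiceAccount so that a new token is generated for it.
    kubectl -n kube-system delete serviceaccount kube-proxy --ignore-not-found &&
    kubectl apply -f sa.yaml -f ClusterRoleBinding.yaml -f configmap.yaml &&
    # Assumption: the kube-proxy pods are labelled k8s-app=kube-proxy.
    kubectl -n kube-system delete pod -l k8s-app=kube-proxy
}

# Usage:
# rebuild_kube_proxy
```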

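After the kube-proxy resources were rebuilt, a new error appeared: iptables-restore: line 7 failed in kube-proxy. According to search results on Google, upgrading kube-proxy fixes this, so I upgraded it to 1.16.3, and that did make the error go away.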
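
If kube-proxy runs as a DaemonSet, the version bump could look like the sketch below. This is an assumption-heavy illustration: it presumes a DaemonSet named kube-proxy in kube-system with a container also named kube-proxy, and the image reference is passed in by the caller because the registry used by this cluster is not stated here.

```shell
# Hypothetical helper: point the kube-proxy DaemonSet at another image and
# wait for the rollout to finish. $1 is the full image reference.
upgrade_kube_proxy() {
    image="$1"
    kubectl -n kube-system set image daemonset/kube-proxy "kube-proxy=${image}" &&
    kubectl -n kube-system rollout status daemonset/kube-proxy
}

# Usage (replace <registry> with the registry your cluster pulls from):
# upgrade_kube_proxy <registry>/kube-proxy:v1.16.3
```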

About calico and calico-controller-manage

YAML files for the calico resources

- calico-sa.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: calico-node
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"

- calico-cr.yaml

kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: calico-node
  namespace: kube-system
rules:
  - apiGroups: [""]
    resources:
      - pods
      - nodes
      - namespaces
    verbs:
      - get
  - apiGroups: [""]
    resources:
      - endpoints
      - services
    verbs:
      - watch
      - list
  - apiGroups: [""]
    resources:
      - nodes/status
    verbs:
      - patch
  - apiGroups:
      - policy
    resourceNames:
      - privileged
    resources:
      - podsecuritypolicies
    verbs:
      - use

- calico-crb.yaml

apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: calico-node
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: calico-node
subjects:
- kind: ServiceAccount
  name: calico-node
  namespace: kube-system
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: calico-config
  namespace: kube-system
data:
  etcd_endpoints: "https://192.168.122.11:2379,https://192.168.122.12:2379,https://192.168.122.13:2379"
  etcd_ca: "/calico-secrets/ca_cert.crt"
  etcd_cert: "/calico-secrets/cert.crt"
  etcd_key: "/calico-secrets/key.pem"
  cluster_type: "kubespray,bgp"
  calico_backend: "bird"

- calico-ds.yaml

kind: DaemonSet
apiVersion: extensions/v1beta1
metadata:
  name: calico-node
  namespace: kube-system
  labels:
    k8s-app: calico-node
spec:
  selector:
    matchLabels:
      k8s-app: calico-node
  template:
    metadata:
      labels:
        k8s-app: calico-node
      annotations:
        # Mark pod as critical for rescheduling (Will have no effect starting with kubernetes 1.12)
        kubespray.etcd-cert/serial: "C9370ED3FE6243D3"
        prometheus.io/scrape: 'true'
        prometheus.io/port: "9091"
    spec:
      priorityClassName: system-node-critical
      hostNetwork: true
      serviceAccountName: calico-node
      tolerations:
        - effect: NoExecute
          operator: Exists
        - effect: NoSchedule
          operator: Exists
        # Mark pod as critical for rescheduling (Will have no effect starting with kubernetes 1.12)
        - key: CriticalAddonsOnly
          operator: "Exists"
      # Minimize downtime during a rolling upgrade or deletion; tell Kubernetes to do a "force
      # deletion": https://kubernetes.io/docs/concepts/workloads/pods/pod/#termination-of-pods.
      terminationGracePeriodSeconds: 0
      initContainers:
        # This container installs the Calico CNI binaries
        # and CNI network config file on each node.
        - name: install-cni
          image: quay.io/calico/cni:v3.4.0-amd64
          command: ["/install-cni.sh"]
          env:
            # Name of the CNI config file to create.
            - name: CNI_CONF_NAME
              value: "10-calico.conflist"
            # CNI binaries are already on the host
            - name: UPDATE_CNI_BINARIES
              value: "false"
            # The CNI network config to install on each node.
            - name: CNI_NETWORK_CONFIG_FILE
              value: "/host/etc/cni/net.d/calico.conflist.template"
            # Prevents the container from sleeping forever.
            - name: SLEEP
              value: "false"
          volumeMounts:
            - mountPath: /host/etc/cni/net.d
              name: cni-net-dir
      containers:
        # Runs calico/node container on each Kubernetes node. This
        # container programs network policy and routes on each
        # host.
        - name: calico-node
          image: quay.io/calico/node:v3.4.0-amd64
          env:
            # The location of the Calico etcd cluster.
            - name: ETCD_ENDPOINTS
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: etcd_endpoints
            # Choose the backend to use.
            - name: CALICO_NETWORKING_BACKEND
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: calico_backend
            # Cluster type to identify the deployment type
            - name: CLUSTER_TYPE
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: cluster_type
            # Set noderef for node controller.
            - name: CALICO_K8S_NODE_REF
              valueFrom:
                fieldRef:
                  fieldPath: spec.nodeName
            # Disable file logging so `kubectl logs` works.
            - name: CALICO_DISABLE_FILE_LOGGING
              value: "true"
            # Set Felix endpoint to host default action to ACCEPT.
            - name: FELIX_DEFAULTENDPOINTTOHOSTACTION
              value: "RETURN"
            - name: FELIX_HEALTHHOST
              value: "localhost"
            # Prior to v3.2.1 iptables didn't acquire the lock, so Calico's own implementation of the lock should be used,
            # this is not required in later versions https://github.com/projectcalico/calico/issues/2179
            # should be set in etcd before deployment
            # # Configure the IP Pool from which Pod IPs will be chosen.
            # - name: CALICO_IPV4POOL_CIDR
            #   value: "192.168.0.0/16"
            - name: CALICO_IPV4POOL_IPIP
              value: "Off"
            # Disable IPv6 on Kubernetes.
            - name: FELIX_IPV6SUPPORT
              value: "false"
            # Set Felix logging to "info"
            - name: FELIX_LOGSEVERITYSCREEN
              value: "info"
            # Set MTU for tunnel device used if ipip is enabled
            - name: FELIX_PROMETHEUSMETRICSENABLED
              value: "false"
            - name: FELIX_PROMETHEUSMETRICSPORT
              value: "9091"
            - name: FELIX_PROMETHEUSGOMETRICSENABLED
              value: "true"
            - name: FELIX_PROMETHEUSPROCESSMETRICSENABLED
              value: "true"
            # Location of the CA certificate for etcd.
            - name: ETCD_CA_CERT_FILE
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: etcd_ca
            # Location of the client key for etcd.
            - name: ETCD_KEY_FILE
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: etcd_key
            # Location of the client certificate for etcd.
            - name: ETCD_CERT_FILE
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: etcd_cert
            - name: IP
              valueFrom:
                fieldRef:
                  fieldPath: status.hostIP
            - name: NODENAME
              valueFrom:
                fieldRef:
                  fieldPath: spec.nodeName
            - name: FELIX_HEALTHENABLED
              value: "true"
            - name: FELIX_IGNORELOOSERPF
              value: "False"
          securityContext:
            privileged: true
          resources:
            limits:
              cpu: 300m
              memory: 500M
            requests:
              cpu: 150m
              memory: 64M
          livenessProbe:
            httpGet:
              host: 127.0.0.1
              path: /liveness
              port: 9099
            periodSeconds: 10
            initialDelaySeconds: 10
            failureThreshold: 6
          readinessProbe:
            exec:
              command:
              - /bin/calico-node
              - -bird-ready
              - -felix-ready
            periodSeconds: 10
          volumeMounts:
            - mountPath: /lib/modules
              name: lib-modules
              readOnly: true
            - mountPath: /var/run/calico
              name: var-run-calico
            - mountPath: /var/lib/calico
              name: var-lib-calico
              readOnly: false
            - mountPath: /calico-secrets
              name: etcd-certs
            - name: xtables-lock
              mountPath: /run/xtables.lock
              readOnly: false
      volumes:
        # Used by calico/node.
        - name: lib-modules
          hostPath:
            path: /lib/modules
        - name: var-run-calico
          hostPath:
            path: /var/run/calico
        - name: var-lib-calico
          hostPath:
            path: /var/lib/calico
        # Used to install CNI.
        - name: cni-net-dir
          hostPath:
            path: /etc/cni/net.d
        # Mount in the etcd TLS secrets.
        - name: etcd-certs
          hostPath:
            path: "/etc/calico/certs"
        # Mount the global iptables lock file, used by calico/node
        - name: xtables-lock
          hostPath:
            path: /run/xtables.lock
            type: FileOrCreate
  updateStrategy:
    rollingUpdate:
      maxUnavailable: 20%
    type: RollingUpdate
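
As with kube-proxy, one way to rebuild these objects is to delete and recreate them from the four files, then wait for the DaemonSet to come back. The sketch below is illustrative only: the function name is hypothetical and the files are assumed to be in the current directory. Deleting the DaemonSet removes the calico-node pods until they are recreated, so expect a short additional interruption.

```shell
# Hypothetical helper: delete and recreate the calico resources, then wait
# for the calico-node DaemonSet to finish rolling out.
rebuild_calico() {
    files="-f calico-sa.yaml -f calico-cr.yaml -f calico-crb.yaml -f calico-ds.yaml"
    # $files is left unquoted on purpose so it splits into separate arguments.
    kubectl delete $files --ignore-not-found &&
    kubectl apply $files &&
    kubectl -n kube-system rollout status daemonset/calico-node
}

# Usage:
# rebuild_calico
```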

About dns

I won't paste the configuration again; the corresponding files can be found under /etc/kubernetes.bak
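
The exact file names are not listed here, so a quick search of the backup directory is one way to locate them. A small sketch, with a hypothetical function name and the assumption that the relevant files have "dns" somewhere in their names:

```shell
# Hypothetical helper: list files under a directory whose names contain "dns"
# (case-insensitive), sorted for stable output.
find_dns_manifests() {
    find "$1" -type f -iname '*dns*' | sort
}

# Usage:
# find_dns_manifests /etc/kubernetes.bak
```

The files it prints can then be reviewed and re-applied with kubectl in the same way as the kube-proxy and calico resources.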

OK, that completes the k8s certificate renewal.