作业1

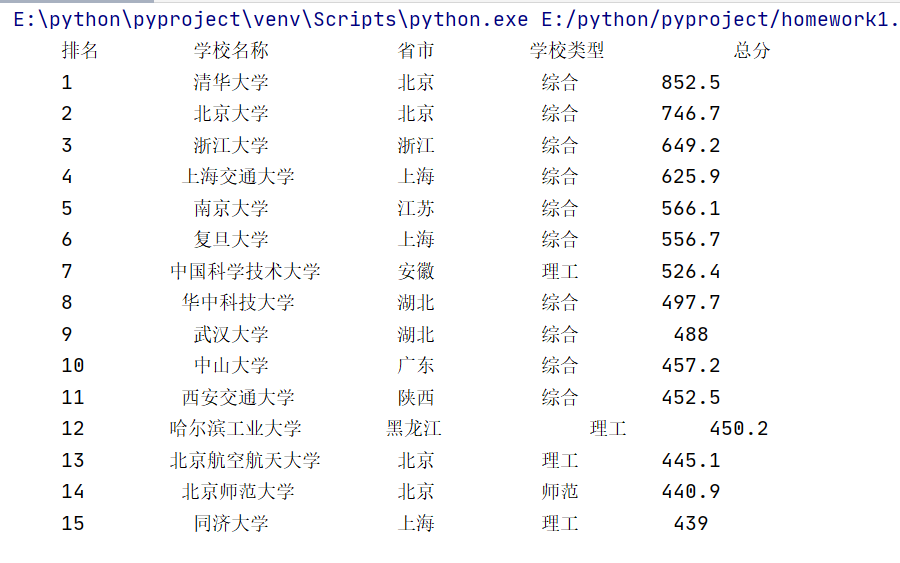

1)、实验内容:用requests和BeautifulSoup库方法定向爬取给定网址(http://www.shanghairanking.cn/rankings/bcur/2020 )的数据,屏幕打印爬取的大学排名信息。

代码如下:

import requests

from bs4 import BeautifulSoup

import bs4

#爬取的通用代码基本框架

def getHTMlText(url):

try:

r = requests.get(url, timeout = 30)

r.raise_for_status()

r.encoding = r.apparent_encoding

return r.text

except:

return ""

#将爬取的info放入List中

def fillUnivList(ulist,html):

soup = BeautifulSoup(html,"html.parser")

for tr in soup.find('tbody').children:

if isinstance(tr,bs4.element.Tag): #判断标签类型

tds = tr('td')

ulist.append([tds[0],tds[1],tds[2],tds[3],tds[4]])

#打印方法

def printUnivList(ulist,num):

print("{:^10} {:^10} {:^10} {:^10} {:^10}".format("排名","学校名称","省市","学校类型","总分"))

for i in range(num):

u = ulist[i]

print("{:^10} {:^10} {:^10} {:^10} {:^10}".format(u[0].text.strip(),u[1].text.strip(),u[2].text.strip(),u[3].text.strip(),u[4].text.strip()))

#主函数

def main():

uinfo = []

url = 'http://www.shanghairanking.cn/rankings/bcur/2020' #所爬取的url

html = getHTMlText(url)

fillUnivList(uinfo,html)

printUnivList(uinfo,15)

main()

结果如下:

2)、心得体会

这次实验的心得体会大概就是代码的风格要控制好,尽量把功能分装成函数方便调用,还有就是在爬取的时候注意分析源代码,比如这个实验就需要将获得的内容的前后换行符删去。

作业2

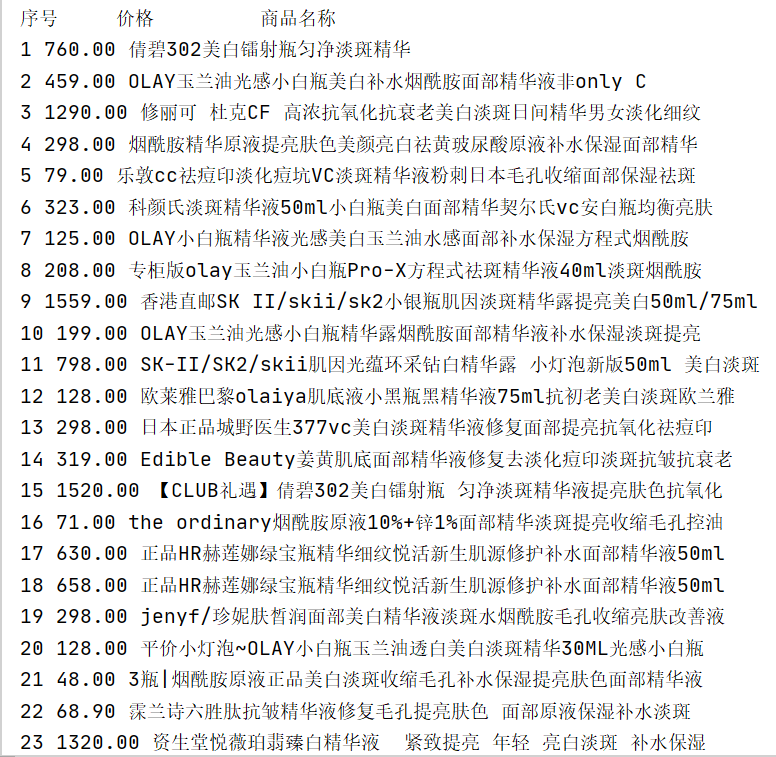

1)、实验内容:用requests和re库方法设计某个商城(自已选择)商品比价定向爬虫,爬取该商城,以关键词“书包”搜索页面的数据,爬取商品名称和价格。

代码如下:

import requests

import re

headers = {

'authority': 's.taobao.com',

'cache-control': 'max-age=0',

'upgrade-insecure-requests': '1',

'user-agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/76.0.3809.87 Safari/537.36',

'sec-fetch-mode': 'navigate',

'sec-fetch-user': '?1',

'accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3',

'sec-fetch-site': 'none',

'referer': 'https://s.taobao.com/search?q=shubao&commend=all&ssid=s5-e&search_type=mall&sourceId=tb.index&area=c2c&spm=a1z02.1.6856637.d4910789',

'accept-encoding': 'gzip, deflate, br',

'accept-language': 'zh-CN,zh;q=0.9',

'cookie': 'thw=cn; cna=Vzk9FtjcI20CAd9Vz/wYMTMu; t=20e35e562420e4844071fdb958fb7c9a; hng=CN%7Czh-CN%7CCNY%7C156; miid=791733911206048212; tracknick=chia_jia; tg=0; cookie2=1f0898f4d5e217732638dedf9fe15701; v=0; _tb_token_=ebe5eeed35f33; enc=0hYktGOhhe0QclVgvyiraV50UAu2nXH2DGGiUhLzUiXhhwjN3%2BmWuY8a%2Bg%2B13VWtqA42kqOMQxOCBM%2F9y%2FMKEA%3D%3D; alitrackid=www.taobao.com; _samesite_flag_=true; sgcookie=ErzxRE%2F%2Fujbceh7Nk8tsW; unb=2925825942; uc3=lg2=U%2BGCWk%2F75gdr5Q%3D%3D&id2=UUGgqLe1BUBPyw%3D%3D&nk2=AHLe94pmu18%3D&vt3=F8dBxd9lptyvS0VrdSI%3D; csg=2ff7a88b; lgc=chia_jia; cookie17=UUGgqLe1BUBPyw%3D%3D; dnk=chia_jia; skt=fffc9202f189ba15; existShop=MTU4NDgwNjA5OA%3D%3D; uc4=nk4=0%40AhyIi%2BV%2FGWSNaFwor7d%2Fi8aNNg%3D%3D&id4=0%40U2OXkqaj%2BLnczzIixfRAeE2zi2mx; _cc_=U%2BGCWk%2F7og%3D%3D; _l_g_=Ug%3D%3D; sg=a24; _nk_=chia_jia; cookie1=VW7ubnoPKm6ZWpbFap8xTV%2BlfhUdVkTTn8y8%2Fh5pWuE%3D; tfstk=c-PRBp27QijoTXTB7NX0R8OD7-jGZX8-xUip9wQB5nAdp0OdilPg_WDhF2--MZC..; uc1=cookie16=V32FPkk%2FxXMk5UvIbNtImtMfJQ%3D%3D&cookie21=WqG3DMC9Fb5mPLIQo9kR&cookie15=URm48syIIVrSKA%3D%3D&existShop=false&pas=0&cookie14=UoTUPvg16Dl1fw%3D%3D&tag=8&lng=zh_CN; mt=ci=-1_1; lastalitrackid=buyertrade.taobao.com; JSESSIONID=46486E97DAB8DFE70AF7B618C2AE4309; l=dB_cmQBHqVHSpFQ9BOfwIH3Ssv7t4IdbzrVy9-oE7ICP991H5uC5WZ4ztBTMCnGVn6rkJ3JsgJC4BKm92ydZGhZfP3k_J_xmed8h.; isg=BAwM2R7Qvilbman-Lz4SX8Sj3Wo-RbDvFjL_wmbMw7da8a77j1WsfldLkflJv-hH',

}

#爬取的通用代码基本框架

def getHTMLText_031804139(url):

try:

r = requests.get(url, timeout=30, headers=headers)

r.raise_for_status()

r.encoding = r.apparent_encoding

return r.text

except:

return "产生异常"

#对每个获得的页面进行解析,是整个程序的关键

def parsePage_031804139(ilt, html):

try:

prices = re.findall(r'"view_price":"[(d.)]*"', html) #商品价格信息

titles = re.findall(r'"raw_title":".*?"', html) #商品名称信息

for i in range(len(prices)):

price = eval(prices[i].split(':')[1])

title = eval(titles[i].split(':')[1])

ilt.append([price, title])

except:

print("")

#将淘宝的商品信息输出到屏幕

def printGoodsList_031804139(ilt):

tplt = "{:4} {:8} {:16}"

print(tplt.format("序号", "价格", "商品名称"))

count = 0

for goods in ilt:

count += 1

print(count, goods[0], goods[1])

def main():

goods = '美白精华'

url = "https://s.taobao.com/search?q="+ goods

depth = 8 #爬取的深度

infoList = [] #盛装商品信息

for i in range(depth):

try:

start_url = url + "&s=" + str(44 * i)

html = getHTMLText_031804139(url)

parsePage_031804139(infoList, html)

print(printGoodsList_031804139(infoList))

except:

continue

#如果某个页面出了问题,那么就继续下一个页面

main()

实验结果:

2)、心得体会

这次实验主要是设计正则表达式来筛选信息,因为构造正则表达式在这一类型的爬虫中是非常适用的,还有就是爬取淘宝的信息一定要加headers。

作业3

1)、实验内容:爬取一个给定网页(http://xcb.fzu.edu.cn/html/2019ztjy)或者自选网页的所有JPG格式文件

代码如下:

import requests

from bs4 import BeautifulSoup

import os

#爬取的通用代码基本框架

def getHTMlText(url):

try:

r = requests.get(url, timeout = 30)

r.raise_for_status()

r.encoding = r.apparent_encoding

return r.text

except:

return ""

#将爬取的info放入List中

def fillUnivList(ulist,html):

soup = BeautifulSoup(html,"html.parser")

pic_all=soup.find_all("img")

for pic in pic_all:

pic = pic["src"]

if pic[-1]=='g' or pic[-1]=='G':

ulist.append(pic)

#打印方法

def printUnivList(ulist):

print(ulist)

#写入文件方法

def writein_kata(list):

for pics in list:

if pics[0]=='/':

pics='http://xcb.fzu.edu.cn'+pics

with open("./File"+os.path.basename(pics),'wb') as f:

f.write(requests.get(pics).content)

#主函数

def main():

uinfo = []

url = 'http://xcb.fzu.edu.cn'

html = getHTMlText(url)

fillUnivList(uinfo,html)

printUnivList(uinfo)

writein_kata(uinfo)

main()

实验结果:

2)、心得体会

其实图片的爬取和前两次作业都很有共通之处,照葫芦画瓢就可以,但是一些细节问题还是需要去查资料。