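Yesterday I spent some time wrestling with the Eclipse plugin for Hadoop 2.x. I pulled the source from https://github.com/winghc/hadoop2x-eclipse-plugin and quickly got a plugin built, but... choosing New Hadoop Location did not open the dialog at all. Maddening. I'll explain the cause later. The source is hard to download, so I've attached a source archive at the end.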

After reading through the Ant and Ivy files, I found something ridiculous: why would compiling a plugin need Ivy at all?!

I've used Ant for years, so writing my own build file seemed the better option.

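I checked the jars: every one of them already exists in the Hadoop installation directory, so why on earth go online to check them? Ivy's debug output showed it was only dealing with those same few artifacts, so I threw Ivy out altogether.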

I rewrote build.xml. Drop it into `hadoop2x-eclipse-plugin-master/src/contrib/eclipse-plugin`, change the values of the first five properties, and run `ant jar`.

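Alternatively, create an ordinary project in Eclipse, copy the `META-INF`, `resources` and `src` directories plus `build.properties` and `plugin.xml` into it, add build.xml, change the first five property values, and run it.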

Here is build.xml:

    <?xml version="1.0"?>
    <project name="eclipse-plugin" default="jar">
        <!-- Edit these five properties to match your environment -->
        <property name="jdk.home" value="G:/jdk/jdk1.6.0" />
        <property name="hadoop.version" value="2.4.1" />
        <property name="hadoop.home" value="G:/hadoop/hadoop-2.4.1" />
        <property name="eclipse.version" value="3.7" />
        <property name="eclipse.home" value="G:/MyEclipse10/Common" />

        <property name="root" value="${basedir}" />
        <property file="${root}/build.properties" />
        <property name="name" value="${ant.project.name}" />
        <property name="src.dir" location="${root}/src/java" />
        <property name="build.contrib.dir" location="${root}/build/contrib" />
        <property name="build.dir" location="${build.contrib.dir}/${name}" />
        <property name="build.classes" location="${build.dir}/classes" />

        <!-- javac options -->
        <property name="javac.deprecation" value="off" />
        <property name="javac.debug" value="on" />
        <property name="build.encoding" value="ISO-8859-1" />

        <!-- Eclipse SDK jars: compile classpath only, not bundled -->
        <path id="eclipse-sdk-jars">
            <fileset dir="${eclipse.home}/plugins/">
                <include name="org.eclipse.ui*.jar" />
                <include name="org.eclipse.jdt*.jar" />
                <include name="org.eclipse.core*.jar" />
                <include name="org.eclipse.equinox*.jar" />
                <include name="org.eclipse.debug*.jar" />
                <include name="org.eclipse.osgi*.jar" />
                <include name="org.eclipse.swt*.jar" />
                <include name="org.eclipse.jface*.jar" />
                <include name="org.eclipse.team.cvs.ssh2*.jar" />
                <include name="com.jcraft.jsch*.jar" />
            </fileset>
        </path>

        <!-- Every jar copied into ${build.dir}/lib; all of them are bundled into the plugin -->
        <path id="project-jars">
            <fileset dir="${build.dir}/lib" includes="*.jar" />
        </path>

        <!-- Copy the required jars out of the Hadoop installation into ${build.dir}/lib -->
        <target name="init" unless="skip.contrib">
            <echo message="contrib: ${name}" />
            <mkdir dir="${build.dir}" />
            <mkdir dir="${build.classes}" />
            <mkdir dir="${build.dir}/lib" />
            <copy todir="${build.dir}/lib/" verbose="true">
                <fileset dir="${hadoop.home}/share/hadoop/mapreduce">
                    <include name="hadoop*.jar" />
                    <exclude name="*test*" />
                    <exclude name="*example*" />
                </fileset>
                <fileset dir="${hadoop.home}/share/hadoop/common">
                    <include name="hadoop*.jar" />
                    <exclude name="*test*" />
                    <exclude name="*example*" />
                </fileset>
                <fileset dir="${hadoop.home}/share/hadoop/hdfs">
                    <include name="hadoop*.jar" />
                    <exclude name="*test*" />
                    <exclude name="*example*" />
                </fileset>
                <fileset dir="${hadoop.home}/share/hadoop/yarn">
                    <include name="hadoop*.jar" />
                    <exclude name="*test*" />
                    <exclude name="*example*" />
                </fileset>
                <fileset dir="${hadoop.home}/share/hadoop/common/lib">
                    <include name="protobuf-java-*.jar" />
                    <include name="log4j-*.jar" />
                    <include name="commons-cli-*.jar" />
                    <include name="commons-collections-*.jar" />
                    <include name="commons-configuration-*.jar" />
                    <include name="commons-lang-*.jar" />
                    <include name="jackson-core-asl-*.jar" />
                    <include name="jackson-mapper-asl-*.jar" />
                    <include name="slf4j-log4j12-*.jar" />
                    <include name="slf4j-api-*.jar" />
                    <include name="guava-*.jar" />
                    <include name="hadoop-annotations-*.jar" />
                    <include name="hadoop-auth-*.jar" />
                    <include name="netty-*.jar" />
                </fileset>
            </copy>
        </target>

        <target name="compile" depends="init" unless="skip.contrib">
            <echo message="contrib: ${name}" />
            <javac fork="true" executable="${jdk.home}/bin/javac"
                   encoding="${build.encoding}" srcdir="${src.dir}" includes="**/*.java"
                   destdir="${build.classes}" debug="${javac.debug}"
                   deprecation="${javac.deprecation}" includeantruntime="on">
                <classpath refid="eclipse-sdk-jars" />
                <classpath refid="project-jars" />
            </javac>
        </target>

        <!-- Bundle-ClassPath is generated from whatever ended up in lib/ -->
        <target name="jar" depends="compile" unless="skip.contrib">
            <pathconvert property="mf.classpath" pathsep=",lib/">
                <path refid="project-jars" />
                <flattenmapper />
            </pathconvert>
            <jar jarfile="${build.dir}/hadoop-${hadoop.version}-eclipse-${eclipse.version}-plugin.jar"
                 manifest="${root}/META-INF/MANIFEST.MF">
                <manifest>
                    <attribute name="Bundle-ClassPath" value="classes/,lib/${mf.classpath}" />
                </manifest>
                <fileset dir="${build.dir}" includes="classes/ lib/" />
                <fileset dir="${root}" includes="resources/ plugin.xml" />
            </jar>
        </target>

        <target name="clean">
            <echo message="contrib: ${name}" />
            <delete dir="${build.dir}" />
        </target>
    </project>

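Of the first five properties: `jdk.home` selects the JDK used for compiling; `hadoop.version` and `eclipse.version` are only used to name the output jar; `hadoop.home` is the Hadoop installation directory, from which the required jars are copied; `eclipse.home` is the Eclipse installation directory (for MyEclipse, point it at the `Common` directory).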
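To see which jars the `init` target will pull out of `hadoop.home` before running Ant, a small dry-run helper can apply the same name rules. This is a sketch, not part of the plugin or of Ant: `HadoopJarPreview` is a hypothetical name, and it mirrors only the `hadoop*.jar` include and the `*test*` / `*example*` excludes for the four `share/hadoop` subdirectories, ignoring the extra jars picked from `common/lib`.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;
import java.util.stream.Stream;

/** Hypothetical dry-run helper mirroring the hadoop*.jar filesets of the "init" target. */
final class HadoopJarPreview {
    static final String[] SUBDIRS = {"mapreduce", "common", "hdfs", "yarn"};

    // Same rule as include="hadoop*.jar", exclude="*test*", exclude="*example*" (case-sensitive)
    static boolean accept(String fileName) {
        return fileName.startsWith("hadoop")
                && fileName.endsWith(".jar")
                && !fileName.contains("test")
                && !fileName.contains("example");
    }

    static List<Path> preview(Path hadoopHome) {
        List<Path> result = new ArrayList<>();
        for (String sub : SUBDIRS) {
            Path dir = hadoopHome.resolve("share").resolve("hadoop").resolve(sub);
            if (!Files.isDirectory(dir)) {
                continue; // Ant would fail on a missing fileset dir; the preview just skips it
            }
            // Files.list is not recursive, like a pattern without "**/"
            try (Stream<Path> files = Files.list(dir)) {
                files.filter(p -> Files.isRegularFile(p) && accept(p.getFileName().toString()))
                     .sorted()
                     .forEach(result::add);
            } catch (IOException e) {
                throw new UncheckedIOException(e);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        // Default is the example hadoop.home value from build.xml; pass your own path as args[0]
        Path home = Paths.get(args.length > 0 ? args[0] : "G:/hadoop/hadoop-2.4.1");
        preview(home).forEach(System.out::println);
    }
}
```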

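I ignored the annoyingly hard-coded jar version numbers in the original build and didn't bother editing the classpath in MANIFEST.MF either: the build now matches jars by wildcard and generates `Bundle-ClassPath` automatically. The generated list has a few more entries than strictly necessary, but too many is better than too few.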
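The automatic classpath comes from the `jar` target: `pathconvert` with a `flattenmapper` reduces each jar in `lib/` to its file name and joins the names with `,lib/`, and the manifest attribute then prepends `classes/,lib/`. The Java sketch below reproduces that string so you can see what lands in the manifest. `BundleClassPath` is a hypothetical name, and in a real build the entry order follows Ant's directory scan, which may not match the input order used here.

```java
import java.nio.file.Paths;
import java.util.List;

/** Sketch of the Bundle-ClassPath value built by the "jar" target. */
final class BundleClassPath {
    static String of(List<String> jarPaths) {
        StringBuilder joined = new StringBuilder();
        for (String path : jarPaths) {
            if (joined.length() > 0) {
                joined.append(",lib/"); // pathsep=",lib/"
            }
            joined.append(Paths.get(path).getFileName()); // flattenmapper: keep the file name only
        }
        // value="classes/,lib/${mf.classpath}"; with an empty lib/ this leaves a dangling "lib/"
        return "classes/,lib/" + joined;
    }
}
```

For example, jars `a.jar` and `b.jar` in `lib/` give `classes/,lib/a.jar,lib/b.jar`.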

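Back to the original problem: the New Hadoop Location dialog failed to open because the commons-collections jar was missing from the plugin. The build now copies that jar from the Hadoop installation directory and bundles it, and the problem is gone.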
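Because a missing library only shows up when Eclipse tries to open the dialog, it can help to check the built jar directly. The sketch below lists the jar's entries with `java.util.jar.JarFile` and reports any required library with no `lib/<prefix>...jar` entry. `PluginJarCheck` is hypothetical, and the default jar path is only what the example properties in build.xml would produce (project name `eclipse-plugin`, Hadoop 2.4.1, Eclipse 3.7); adjust both to your build.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.util.ArrayList;
import java.util.List;
import java.util.jar.JarFile;

/** Hypothetical check that a built plugin jar bundles the libraries it needs at runtime. */
final class PluginJarCheck {
    // Returns the prefixes that have no matching "lib/<prefix>*.jar" entry
    static List<String> missing(List<String> entryNames, List<String> requiredPrefixes) {
        List<String> absent = new ArrayList<>();
        for (String prefix : requiredPrefixes) {
            boolean found = entryNames.stream()
                    .anyMatch(n -> n.startsWith("lib/" + prefix) && n.endsWith(".jar"));
            if (!found) {
                absent.add(prefix);
            }
        }
        return absent;
    }

    static List<String> entryNames(String jarPath) {
        List<String> names = new ArrayList<>();
        try (JarFile jar = new JarFile(jarPath)) {
            jar.stream().forEach(entry -> names.add(entry.getName()));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return names;
    }

    public static void main(String[] args) {
        String jar = args.length > 0 ? args[0]
                : "build/contrib/eclipse-plugin/hadoop-2.4.1-eclipse-3.7-plugin.jar";
        // commons-collections is the library the post identified; add other prefixes as needed
        List<String> absent = missing(entryNames(jar), List.of("commons-collections-"));
        System.out.println(absent.isEmpty() ? "all required libraries bundled" : "missing: " + absent);
    }
}
```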

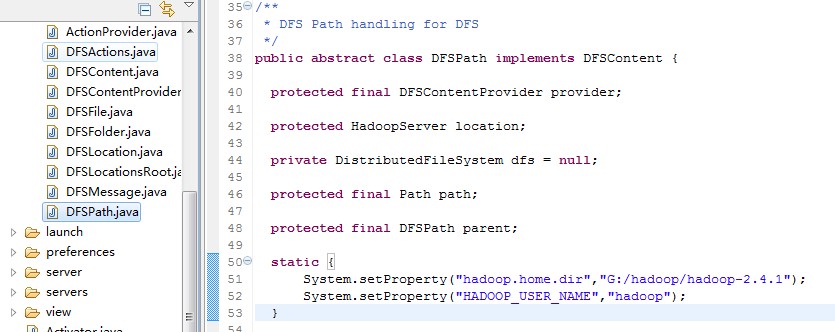

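I compiled two plugin jars with JDK 1.6, one for MyEclipse 10 and one for Eclipse 4.4, and both work. The permission errors when uploading files, caused by the current user name, are a matter of changing either the configuration or the code. I simply added two lines to the source before compiling; rebuilding the plugin takes no time anyway.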
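The post does not say which two lines were added. One common approach, shown here as an assumption and not as the author's change: with simple (non-Kerberos) authentication, Hadoop 2.x's `UserGroupInformation` takes the user name from the `HADOOP_USER_NAME` environment variable or, if that is unset, from the system property of the same name. Setting the property before the plugin's first HDFS call makes uploads run as that user. The configuration-side alternative is disabling permission checks on the cluster with `dfs.permissions.enabled=false` in `hdfs-site.xml`, which affects every client. `HdfsUserOverride` and the user name `hadoop` are placeholders.

```java
/** Hypothetical helper: choose the HDFS user under simple authentication. */
final class HdfsUserOverride {
    static final String KEY = "HADOOP_USER_NAME";

    // Call before the first Hadoop FileSystem access; the login user is cached after that.
    // An environment variable of the same name, if set, takes precedence over this property.
    static void apply(String hdfsUser) {
        System.setProperty(KEY, hdfsUser);
    }

    public static void main(String[] args) {
        // "hadoop" is a placeholder for the user that owns the target HDFS directories
        apply(args.length > 0 ? args[0] : "hadoop");
        System.out.println(KEY + "=" + System.getProperty(KEY));
    }
}
```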

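Finally, here is the official source. I deleted the prebuilt plugin jar that shipped with it, since it was outdated and enormous. Download the source package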