1 System preparation

Check the OS version

[root@localhost]# cat /etc/centos-release
CentOS Linux release 8.1.1911 (Core)

Set the hostname

hostnamectl set-hostname master01

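I tested this on two servers: my Aliyun cloud server running CentOS 8.1, and a local VM running CentOS 7.4. The steps are essentially the same on both. That is why the commands and outputs below show different hostnames and IP addresses; substitute your own.

Configure a static IP address on each machine.

Setting the hostname needs no further explanation beyond the hostnamectl command above.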

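Add the Aliyun repo. Note that the first command deletes every existing repo file. The URL is the CentOS 8 file; on CentOS 7 use http://mirrors.aliyun.com/repo/Centos-7.repo instead.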

[root@master ~]# rm -rfv /etc/yum.repos.d/*

[root@master ~]# curl -o /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-8.repo
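Here is a minimal sketch for two related tasks. It picks the matching Aliyun repo file from /etc/centos-release. It also moves the old .repo files into a backup directory instead of deleting them with rm -rf. Both function names are hypothetical. The sketch assumes Aliyun keeps the Centos-<major>.repo naming used above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the Aliyun base repo URL for the CentOS major version.
# $1: release file (defaults to /etc/centos-release)
aliyun_repo_url() {
  local release_file="${1:-/etc/centos-release}" major
  # "CentOS Linux release 8.1.1911 (Core)" -> "8"
  major=$(sed -n 's/.*release \([0-9][0-9]*\).*/\1/p' "$release_file")
  case "$major" in
    7|8) echo "http://mirrors.aliyun.com/repo/Centos-${major}.repo" ;;
    *) echo "unsupported CentOS release: ${major:-unknown}" >&2; return 1 ;;
  esac
}

# Hypothetical helper: move existing .repo files into a timestamped backup directory.
# yum only reads *.repo files directly inside the repo directory, so the subdirectory is ignored.
# $1: repo directory (defaults to /etc/yum.repos.d). Prints the backup directory path.
backup_repos() {
  local dir="${1:-/etc/yum.repos.d}" backup f
  backup="$dir/backup-$(date +%Y%m%d%H%M%S)"
  mkdir -p "$backup"
  for f in "$dir"/*.repo; do
    [ -e "$f" ] || continue   # the glob matched nothing
    mv "$f" "$backup/"
  done
  echo "$backup"
}
```

Usage, as root: `backup_repos && curl -o /etc/yum.repos.d/CentOS-Base.repo "$(aliyun_repo_url)"`.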

Map the hostname in /etc/hosts

[root@master ~]# cat /etc/hosts
127.0.0.1   localhost localhost.localdomain localhost4 localhost4.localdomain4
::1         localhost localhost.localdomain localhost6 localhost6.localdomain6
192.168.0.149 master
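The name in /etc/hosts should match the one set with hostnamectl. The kubeadm init output later shows what happens when it does not resolve: `[WARNING Hostname]: hostname "master" could not be reached`. Below is a small, idempotent sketch for adding the entry; the helper name is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: append "IP HOSTNAME" to a hosts file unless HOSTNAME is already mapped.
# $1: IP, $2: hostname, $3: hosts file (defaults to /etc/hosts)
add_host_entry() {
  local ip="$1" name="$2" file="${3:-/etc/hosts}"
  # Ignore comment lines; look for the hostname as a whole field in columns 2..NF.
  if awk -v n="$name" '!/^[ \t]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                       END { exit (found ? 0 : 1) }' "$file"; then
    echo "$name already present in $file"
  else
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}
```

Usage, as root: `add_host_entry 192.168.0.149 master`.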

IP configuration

The interface config files live under /etc/sysconfig/network-scripts; mine is ifcfg-ens33:

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=static

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=ens33

UUID=d06d07c2-9167-4ada-addb-b05c2669e24b

DEVICE=ens33

IPADDR=192.168.8.10

NETMASK=255.255.255.0

GATEWAY=192.168.8.2

DNS1=114.114.114.114

ONBOOT=yes
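Most of the keys above are installer defaults. The sketch below prints only the lines that matter for a static IPv4 address, assuming that is all you need. It is a hypothetical helper, meant for comparing against your own file rather than replacing it. The UUID and IPv6 keys can stay as the installer generated them.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the essential lines of a static-IP ifcfg file
# (CentOS network-scripts format).
# Args: interface, IP address, netmask, gateway, DNS server.
render_ifcfg() {
  local dev="$1" ip="$2" mask="$3" gw="$4" dns="$5"
  cat <<EOF
TYPE=Ethernet
BOOTPROTO=static
NAME=$dev
DEVICE=$dev
ONBOOT=yes
IPADDR=$ip
NETMASK=$mask
GATEWAY=$gw
DNS1=$dns
EOF
}
```

Usage: `render_ifcfg ens33 192.168.8.10 255.255.255.0 192.168.8.2 114.114.114.114`, then compare the result with /etc/sysconfig/network-scripts/ifcfg-ens33.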

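After saving, restart the network service:

service network restart

On CentOS 8 the legacy network service is not installed by default. There, have NetworkManager reload the file and bring the connection up instead:

nmcli connection reload && nmcli connection up ens33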

Disable the firewall

systemctl stop firewalld

systemctl disable firewalld

Turn off swap, then comment out the swap entry in /etc/fstab so that swap stays off after a reboot:

[root@master ~]# swapoff -a
[root@master ~]# cat /etc/fstab
#/dev/mapper/cl-swap swap swap defaults 0 0
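The kubelet refuses to start while swap is enabled, unless it is explicitly configured otherwise. That is why the fstab entry matters. The sketch below comments the entry out with awk. It treats any uncommented line whose third field (the filesystem type) is `swap` as a swap entry. The helper name is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: comment out active swap entries in an fstab file.
# $1: fstab path (defaults to /etc/fstab). The original is kept as <file>.bak.
comment_swap_entries() {
  local fstab="${1:-/etc/fstab}" tmp
  cp "$fstab" "$fstab.bak"
  tmp=$(mktemp)
  # Field 3 is the filesystem type; lines that are already commented are left alone.
  awk '!/^[ \t]*#/ && $3 == "swap" { print "#" $0; next } { print }' "$fstab" > "$tmp"
  cat "$tmp" > "$fstab"   # rewrite in place, keeping the file's owner and mode
  rm -f "$tmp"
}
```

Usage, as root: `swapoff -a && comment_swap_entries /etc/fstab`. Running it twice is harmless, because commented lines are skipped.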

Configure kernel parameters so that bridged IPv4 traffic is passed to the iptables chains:

[root@master ~]# cat > /etc/sysctl.d/k8s.conf <<EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
[root@master ~]# sysctl --system
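The two bridge keys only exist once the br_netfilter kernel module is loaded. Load it with `modprobe br_netfilter`; listing it in a file under /etc/modules-load.d/ keeps it loaded after a reboot. On a fresh machine, `sysctl --system` may therefore report the keys as unknown.

Below is a read-only sketch that checks the values through /proc/sys, where each dot in a key becomes a directory level. The helper names are hypothetical. The optional root argument exists only so the check can be tested.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the value of a sysctl key by reading /proc/sys directly.
# $1: key such as net.bridge.bridge-nf-call-iptables
# $2: optional root directory (defaults to /proc/sys)
sysctl_value() {
  local root="${2:-/proc/sys}" path
  # Dots in the key map to directory levels: net.bridge.x -> net/bridge/x
  path="$root/$(printf '%s' "$1" | tr . /)"
  [ -r "$path" ] || return 1
  cat "$path"
}

# Hypothetical helper: report whether both bridge-netfilter keys are set to 1.
# $1: optional root directory (defaults to /proc/sys). Returns non-zero on any problem.
check_k8s_sysctl() {
  local root="${1:-/proc/sys}" key val rc=0
  for key in net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables; do
    if ! val=$(sysctl_value "$key" "$root"); then
      echo "MISSING $key (is br_netfilter loaded? try: modprobe br_netfilter)"
      rc=1
    elif [ "$val" != "1" ]; then
      echo "WRONG $key=$val"
      rc=1
    else
      echo "OK $key=1"
    fi
  done
  return "$rc"
}
```

Usage: `check_k8s_sysctl` after `sysctl --system`.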

2 Install common packages

[root@master ~]# yum install vim bash-completion net-tools gcc -y

3 Install docker-ce from the Aliyun mirror

Install the packages Docker depends on

sudo yum install -y yum-utils device-mapper-persistent-data lvm2

Point yum at the Aliyun Docker CE repo to speed up installation

sudo yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

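With the official Docker repo (https://download.docker.com/linux/centos/docker-ce.repo), installation is very slow and may well fail, so it is not recommended.

List every docker-ce version available in all repos, so you can install a specific one if needed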

yum list docker-ce --showduplicates | sort -r

[root@master ~]# yum -y install docker-ce

# or pin a specific version

sudo yum install docker-ce-19.03.6

If installing docker-ce fails with the following error:

[root@master ~]# yum -y install docker-ce
Error:
 Problem: package docker-ce-3:19.03.8-3.el7.x86_64 requires containerd.io >= 1.2.2-3, but none of the providers can be installed
  - cannot install the best candidate for the job
  - package containerd.io-1.2.10-3.2.el7.x86_64 is excluded
  - package containerd.io-1.2.13-3.1.el7.x86_64 is excluded
  - package containerd.io-1.2.2-3.3.el7.x86_64 is excluded
  - package containerd.io-1.2.2-3.el7.x86_64 is excluded
  - package containerd.io-1.2.4-3.1.el7.x86_64 is excluded
  - package containerd.io-1.2.5-3.1.el7.x86_64 is excluded
  - package containerd.io-1.2.6-3.3.el7.x86_64 is excluded
(try to add '--skip-broken' to skip uninstallable packages or '--nobest' to use not only best candidate packages)

Solution

[root@master ~]# wget https://download.docker.com/linux/centos/7/x86_64/edge/Packages/containerd.io-1.2.6-3.3.el7.x86_64.rpm
[root@master ~]# yum install containerd.io-1.2.6-3.3.el7.x86_64.rpm

The download may still fail. In that case, search for the rpm online, download it manually and install it. Installing docker-ce again should then succeed.
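The error says docker-ce needs containerd.io >= 1.2.2-3. The sketch below confirms that the manually installed package meets that floor before you retry docker-ce. It compares versions with GNU `sort -V`, which approximates rpm's own version ordering but is not identical to it. The helper names are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed if version $1 >= version $2 (GNU sort -V ordering).
version_ge() {
  [ "$(printf '%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Hypothetical helper: check the installed containerd.io against docker-ce's minimum.
# The 1.2.2-3 floor comes from the yum error message above.
containerd_ok() {
  local have
  have=$(rpm -q --qf '%{VERSION}-%{RELEASE}\n' containerd.io 2>/dev/null) || {
    echo "containerd.io is not installed" >&2
    return 1
  }
  version_ge "$have" "1.2.2-3"
}
```

Usage: `containerd_ok && yum -y install docker-ce`. Note that `sort -V` orders 1.2.13 after 1.2.2, which a plain lexical sort would get wrong.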

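Configure the Aliyun Docker registry mirror (accelerator). Also enable Docker at boot; kubeadm's preflight check warns when the docker service is not enabled.

[root@master ~]# mkdir -p /etc/docker
[root@master ~]# tee /etc/docker/daemon.json <<-'EOF'
{
  "registry-mirrors": ["https://fl791z1h.mirror.aliyuncs.com"]
}
EOF
[root@master01 ~]# systemctl daemon-reload
[root@master01 ~]# systemctl restart docker
[root@master01 ~]# systemctl enable docker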
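The kubeadm init output further down warns that Docker uses the cgroupfs cgroup driver and recommends systemd instead. The sketch below writes a daemon.json that keeps the registry mirror and also switches Docker to the systemd driver; that switch is an assumption based on the warning, not a step from the original setup. The helper name is hypothetical. The fl791z1h address appears to be the author's personal accelerator, so substitute the one shown in your own Aliyun console.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write a Docker daemon.json with a registry mirror and,
# as an assumption based on the kubeadm preflight warning, the systemd cgroup driver.
# $1: mirror URL, $2: target file (defaults to /etc/docker/daemon.json)
write_daemon_json() {
  local mirror="$1" target="${2:-/etc/docker/daemon.json}"
  mkdir -p "$(dirname "$target")"
  cat > "$target" <<EOF
{
  "registry-mirrors": ["$mirror"],
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF
}
```

After writing the file:

1. Run `systemctl daemon-reload && systemctl restart docker`.
2. Check that `docker info` reports `Cgroup Driver: systemd`.

Make this change before `kubeadm init`, so the kubelet is configured against the same driver. Verify this on your own version rather than taking it as given.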

4 Install kubectl, kubelet and kubeadm

Add the Aliyun Kubernetes repo

[root@master ~]# cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

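Install

[root@master01 ~]# yum install kubectl kubelet kubeadm

or pin the version, which keeps the packages in line with the --kubernetes-version=1.18.0 used by kubeadm init below:

yum install kubectl-1.18.0 kubelet-1.18.0 kubeadm-1.18.0

[root@master01 ~]# systemctl enable kubelet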

5 Initialize the Kubernetes cluster

[root@master ~]# kubeadm init --kubernetes-version=1.18.0 --apiserver-advertise-address=192.168.0.149 --image-repository registry.aliyuncs.com/google_containers --service-cidr=10.10.0.0/16 --pod-network-cidr=10.122.0.0/16

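The Pod network is 10.122.0.0/16 and the Service network is 10.10.0.0/16. The API server address is the master's own IP.

This step is critical. By default kubeadm pulls the images it needs from k8s.gcr.io, which is not reachable from mainland China. --image-repository therefore points it at the Aliyun mirror instead.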
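The same settings can also live in a kubeadm config file. That lets you pre-pull the images with `kubeadm config images pull --config`, which the init output itself suggests, and rerun init reproducibly. This sketch assumes the kubeadm.k8s.io/v1beta2 config API that kubeadm 1.18 reads. The helper name and output file name are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write a kubeadm config equivalent to the kubeadm init flags above.
# Assumes the kubeadm.k8s.io/v1beta2 API read by kubeadm 1.18.
# Args: API server advertise address, output file.
write_kubeadm_config() {
  local addr="$1" out="$2"
  cat > "$out" <<EOF
apiVersion: kubeadm.k8s.io/v1beta2
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: $addr
  bindPort: 6443
---
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.18.0
imageRepository: registry.aliyuncs.com/google_containers
networking:
  serviceSubnet: 10.10.0.0/16
  podSubnet: 10.122.0.0/16
EOF
}
```

Usage:

1. `write_kubeadm_config 192.168.0.149 kubeadm-config.yaml`
2. `kubeadm config images pull --config kubeadm-config.yaml`
3. `kubeadm init --config kubeadm-config.yaml`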

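If initialization succeeds, kubeadm prints output like the following. This run was on the other machine, so the address here is 172.25.10.23: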

[root@master k8s]# kubeadm init --kubernetes-version=1.18.0 --apiserver-advertise-address=172.25.10.23 --image-repository registry.aliyuncs.com/google_containers --service-cidr=10.10.0.0/16 --pod-network-cidr=10.122.0.0/16
W1126 17:36:14.148469   15643 configset.go:202] WARNING: kubeadm cannot validate component configs for API groups [kubelet.config.k8s.io kubeproxy.config.k8s.io]
[init] Using Kubernetes version: v1.18.0
[preflight] Running pre-flight checks
    [WARNING Service-Docker]: docker service is not enabled, please run 'systemctl enable docker.service'
    [WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/
    [WARNING FileExisting-tc]: tc not found in system path
    [WARNING Hostname]: hostname "master" could not be reached
    [WARNING Hostname]: hostname "master": lookup master on 100.100.2.136:53: no such host
[preflight] Pulling images required for setting up a Kubernetes cluster
[preflight] This might take a minute or two, depending on the speed of your internet connection
[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'
[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
[kubelet-start] Starting the kubelet
[certs] Using certificateDir folder "/etc/kubernetes/pki"
[certs] Generating "ca" certificate and key
[certs] Generating "apiserver" certificate and key
[certs] apiserver serving cert is signed for DNS names [master kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.10.0.1 172.25.10.23]
[certs] Generating "apiserver-kubelet-client" certificate and key
[certs] Generating "front-proxy-ca" certificate and key
[certs] Generating "front-proxy-client" certificate and key
[certs] Generating "etcd/ca" certificate and key
[certs] Generating "etcd/server" certificate and key
[certs] etcd/server serving cert is signed for DNS names [master localhost] and IPs [172.25.10.23 127.0.0.1 ::1]
[certs] Generating "etcd/peer" certificate and key
[certs] etcd/peer serving cert is signed for DNS names [master localhost] and IPs [172.25.10.23 127.0.0.1 ::1]
[certs] Generating "etcd/healthcheck-client" certificate and key
[certs] Generating "apiserver-etcd-client" certificate and key
[certs] Generating "sa" key and public key
[kubeconfig] Using kubeconfig folder "/etc/kubernetes"
[kubeconfig] Writing "admin.conf" kubeconfig file
[kubeconfig] Writing "kubelet.conf" kubeconfig file
[kubeconfig] Writing "controller-manager.conf" kubeconfig file
[kubeconfig] Writing "scheduler.conf" kubeconfig file
[control-plane] Using manifest folder "/etc/kubernetes/manifests"
[control-plane] Creating static Pod manifest for "kube-apiserver"
[control-plane] Creating static Pod manifest for "kube-controller-manager"
W1126 17:36:18.428946   15643 manifests.go:225] the default kube-apiserver authorization-mode is "Node,RBAC"; using "Node,RBAC"
[control-plane] Creating static Pod manifest for "kube-scheduler"
W1126 17:36:18.430024   15643 manifests.go:225] the default kube-apiserver authorization-mode is "Node,RBAC"; using "Node,RBAC"
[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"
[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s
[apiclient] All control plane components are healthy after 21.001826 seconds
[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace
[kubelet] Creating a ConfigMap "kubelet-config-1.18" in namespace kube-system with the configuration for the kubelets in the cluster
[upload-certs] Skipping phase. Please see --upload-certs
[mark-control-plane] Marking the node master as control-plane by adding the label "node-role.kubernetes.io/master=''"
[mark-control-plane] Marking the node master as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]
[bootstrap-token] Using token: vumdcz.2z3os5njsv4oboaz
[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles
[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to get nodes
[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials
[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token
[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster
[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace
[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key
[addons] Applied essential addon: CoreDNS
[addons] Applied essential addon: kube-proxy
Your Kubernetes control-plane has initialized successfully!
To start using your cluster, you need to run the following as a regular user:
  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config
You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/
Then you can join any number of worker nodes by running the following on each as root:
kubeadm join 172.25.10.23:6443 --token vumdcz.2z3os5njsv4oboaz --discovery-token-ca-cert-hash sha256:89a9154ff203c6a3f851bd521bcb32bbc296d99b2a274648ffb6b80582827d9c

Save the last part of this output. The kubeadm join command is what the other nodes run to join the cluster.
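You can save the init output to a file, for example with `kubeadm init ... | tee kubeadm-init.log`, and then pull the join command out of it. Real kubeadm output splits that command over two lines with a trailing backslash, so the sketch below joins continuation lines.

The bootstrap token is only valid for 24 hours by default. After that, `kubeadm token create --print-join-command` on the master prints a fresh command. The helper name is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the "kubeadm join ..." command from saved kubeadm init output,
# joining backslash-continued lines into one line.
# $1: file containing the init output
extract_join_command() {
  awk '
    /^[ \t]*kubeadm join/ { cmd = ""; collecting = 1 }
    collecting {
      line = $0
      sub(/^[ \t]+/, "", line)                 # drop leading indentation
      continued = sub(/[ \t]*\\$/, "", line)   # strip a trailing backslash, remember it
      cmd = (cmd == "") ? line : cmd " " line
      if (!continued) { print cmd; collecting = 0 }
    }
  ' "$1"
}
```

Usage: `extract_join_command kubeadm-init.log`.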

Set up the kubeconfig for kubectl as the output instructs

[root@master ~]# mkdir -p $HOME/.kube
[root@master ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
[root@master ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

Run the following to enable kubectl auto-completion in the current shell

[root@master ~]# source <(kubectl completion bash)
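To keep completion in new shells, append the same line to ~/.bashrc. Below is a sketch of an idempotent append; the helper name is hypothetical. Completion also relies on the bash-completion package installed in step 2.

```bash
#!/usr/bin/env bash
# Hypothetical helper: append a line to a file only if that exact line is not already there.
# $1: file, $2: line
ensure_line() {
  local file="$1" line="$2"
  touch "$file"
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}
```

Usage: `ensure_line ~/.bashrc 'source <(kubectl completion bash)'`.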

Check the node and Pods

[root@master k8s]# kubectl get node
NAME     STATUS     ROLES    AGE     VERSION
master   NotReady   master   6m55s   v1.18.0
[root@master k8s]# kubectl get pod --all-namespaces
NAMESPACE     NAME                                       READY   STATUS     RESTARTS   AGE
kube-system   calico-kube-controllers-8586758878-79p52   0/1     Pending    0          19s
kube-system   calico-node-2xxg5                          0/1     Init:0/3   0          19s
kube-system   coredns-7ff77c879f-l6nvg                   0/1     Pending    0          7m21s
kube-system   coredns-7ff77c879f-q6j94                   0/1     Pending    0          7m21s
kube-system   etcd-master                                1/1     Running    0          7m31s
kube-system   kube-apiserver-master                      1/1     Running    0          7m31s
kube-system   kube-controller-manager-master             1/1     Running    0          7m31s
kube-system   kube-proxy-6md54                           1/1     Running    0          7m21s
kube-system   kube-scheduler-master                      1/1     Running    0          7m31s

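The node is NotReady, and the CoreDNS Pods stay Pending, because no Pod network add-on is running yet. The calico Pods in this listing show that it was taken a few seconds after the calico manifest from step 6 was applied.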

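6 Install the calico network plugin (calico is used here instead of flannel, because flannel's repeated packet encapsulation and decapsulation consumes more resources)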

[root@master ~]# kubectl apply -f https://docs.projectcalico.org/manifests/calico.yaml
configmap/calico-config created
customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created
clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created
clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created
clusterrole.rbac.authorization.k8s.io/calico-node created
clusterrolebinding.rbac.authorization.k8s.io/calico-node created
daemonset.apps/calico-node created
serviceaccount/calico-node created
deployment.apps/calico-kube-controllers created
serviceaccount/calico-kube-controllers created

Check the Pods and Services again (this listing was taken after the dashboard from step 7 had also been deployed)

[root@master k8s]# kubectl get pod --all-namespaces
NAMESPACE              NAME                                        READY   STATUS    RESTARTS   AGE
kube-system            calico-kube-controllers-8586758878-79p52    1/1     Running   0          11m
kube-system            calico-node-2xxg5                           1/1     Running   0          11m
kube-system            coredns-7ff77c879f-l6nvg                    1/1     Running   0          18m
kube-system            coredns-7ff77c879f-q6j94                    1/1     Running   0          18m
kube-system            etcd-master                                 1/1     Running   0          19m
kube-system            kube-apiserver-master                       1/1     Running   0          19m
kube-system            kube-controller-manager-master              1/1     Running   0          19m
kube-system            kube-proxy-6md54                            1/1     Running   0          18m
kube-system            kube-scheduler-master                       1/1     Running   0          19m
kubernetes-dashboard   dashboard-metrics-scraper-dc6947fbf-gqcdh   1/1     Running   0          8m12s
kubernetes-dashboard   kubernetes-dashboard-5d4dc8b976-kxz6j       1/1     Running   0          8m12s
[root@master k8s]# kubectl get svc -n kubernetes-dashboard
NAME                        TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)          AGE
dashboard-metrics-scraper   NodePort    10.10.197.176   <none>        8000:30572/TCP   8m22s
kubernetes-dashboard        ClusterIP   10.10.7.126     <none>        443/TCP          8m22s

The cluster is now healthy.
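Instead of rereading the tables by eye, you can script the same checks. The sketch below reads `--no-headers` kubectl output on stdin. It fails while any node is not Ready, or while any Pod is neither fully ready and Running nor Completed. The helper names are hypothetical. For the node check, `kubectl wait --for=condition=Ready nodes --all --timeout=300s` is a built-in alternative.

```bash
#!/usr/bin/env bash
# Hypothetical helper. Input: `kubectl get nodes --no-headers`.
# Fails if any node's STATUS column is not exactly "Ready".
nodes_ready() {
  awk '$2 != "Ready" { print "not ready: " $1 " (" $2 ")"; bad = 1 }
       END { exit (bad ? 1 : 0) }'
}

# Hypothetical helper. Input: `kubectl get pods --all-namespaces --no-headers`
# (columns: NAMESPACE NAME READY STATUS RESTARTS AGE).
# Fails on any Pod that is not Completed and not fully ready and Running.
pods_ready() {
  awk '{
    if ($4 == "Completed") next
    split($3, r, "/")                      # READY looks like "1/1"
    if ($4 != "Running" || r[1] != r[2]) {
      print "waiting: " $1 "/" $2 " " $3 " " $4
      bad = 1
    }
  } END { exit (bad ? 1 : 0) }'
}
```

Usage: `kubectl get nodes --no-headers | nodes_ready && kubectl get pods --all-namespaces --no-headers | pods_ready`.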

7 Install kubernetes-dashboard

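The official dashboard manifest does not expose its Service through a NodePort. Download the YAML file (or find a copy online), and in the kubernetes-dashboard Service add type: NodePort and nodePort: 30000: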

[root@master ~]# wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0-rc7/aio/deploy/recommended.yaml
[root@master ~]# vim recommended.yaml
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  type: NodePort
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 30000
  selector:
    k8s-app: kubernetes-dashboard
[root@master ~]# kubectl create -f recommended.yaml
namespace/kubernetes-dashboard created
serviceaccount/kubernetes-dashboard created
service/kubernetes-dashboard created
secret/kubernetes-dashboard-certs created
secret/kubernetes-dashboard-csrf created
secret/kubernetes-dashboard-key-holder created
configmap/kubernetes-dashboard-settings created
role.rbac.authorization.k8s.io/kubernetes-dashboard created
clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created
rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created
clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created
deployment.apps/kubernetes-dashboard created
service/dashboard-metrics-scraper created
deployment.apps/dashboard-metrics-scraper created

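Check the node and Services

[root@master k8s]# kubectl get node
NAME     STATUS   ROLES    AGE   VERSION
master   Ready    master   11m   v1.18.0
[root@master k8s]# kubectl get service
NAME         TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
kubernetes   ClusterIP   10.10.0.1    <none>        443/TCP   11m
[root@master k8s]# kubectl get svc -n kubernetes-dashboard
NAME                        TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)          AGE
dashboard-metrics-scraper   NodePort    10.10.197.176   <none>        8000:30572/TCP   51s
kubernetes-dashboard        ClusterIP   10.10.7.126     <none>        443/TCP          51s

In this output, dashboard-metrics-scraper became the NodePort Service (8000:30572), while kubernetes-dashboard is still ClusterIP. Make sure the type: NodePort edit is in the kubernetes-dashboard Service; otherwise port 30000 is never opened.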
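To confirm which Service actually received a NodePort, the sketch below reads `kubectl get svc --no-headers` output. It prints the NodePort of the named Service and fails if that Service is not of type NodePort. The helper name is hypothetical. `kubectl -n kubernetes-dashboard get svc kubernetes-dashboard -o jsonpath='{.spec.ports[0].nodePort}'` returns the same number directly.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the NodePort of a Service.
# Input on stdin: `kubectl get svc -n <ns> --no-headers`
# (columns: NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE).
# $1: Service name. Fails if the Service is missing or not of type NodePort.
node_port_of() {
  awk -v svc="$1" '
    $1 == svc {
      found = 1
      if ($2 != "NodePort") { print svc " is " $2 ", not NodePort" > "/dev/stderr"; exit 1 }
      split($5, p, "[:/]")   # "443:30000/TCP" -> p[1]=443, p[2]=30000, p[3]=TCP
      print p[2]
      exit 0
    }
    END { if (!found) exit 1 }
  '
}
```

Usage: `port=$(kubectl get svc -n kubernetes-dashboard --no-headers | node_port_of kubernetes-dashboard) && echo "https://<master IP>:$port"`.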

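Open the dashboard in a browser at https://<master IP>:30000. Firefox is recommended, because the dashboard serves a self-signed certificate that some browsers refuse to accept.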

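There are two ways to log in: with a kubeconfig file or with a token. This guide uses a token.

To log in with a token, first create an admin service account and bind it to cluster-admin, then fetch its token:

kubectl create serviceaccount dashboard-admin -n kube-system
kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin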

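# grant permission to create namespaces

kubectl create clusterrolebinding serviceaccounts-cluster-admin --clusterrole=cluster-admin --group=system:serviceaccounts

This binds cluster-admin to every service account in the cluster (the system:serviceaccounts group), so it is only reasonable on a throwaway test cluster. When you log in with the dashboard-admin token, the dashboard-admin binding above is enough.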

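kubectl get secret -n kube-system   # list the secrets; the token is stored in dashboard-admin-token-<random suffix>

A general-purpose command to print the token (grep dashboard-admin selects the secret of the account created above):

kubectl -n kube-system describe $(kubectl -n kube-system get secret -o name | grep dashboard-admin) | grep token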

[root@master01 ~]# kubectl describe secrets -n kubernetes-dashboard kubernetes-dashboard-token-b5sd4 | grep token | awk 'NR==3{print $2}'
eyJhbGciOiJSUzI1NiIsImtpZCI6IlE2RnRYZlJicGExR1pyckZ1ZmIybUxuTWdVdHN3ekxmeWxpR1VOWTZvNGcifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtYWRtaW4tdG9rZW4tenhxOXEiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiZGFzaGJvYXJkLWFkbWluIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiNDNhZTgzN2EtMzc2MS00YzUzLTg5ZjItMGMwZGQxYzU3MTFkIiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmUtc3lzdGVtOmRhc2hib2FyZC1hZG1pbiJ9.qWZ8I2buFE8o8_B7_byMt8GTWxBMFKnyuEtIOADR4LbbPL85k2Tsnb-RjpX9DCt6ZnvTnzH1bcDC8xQWuM-YForfMAXGN1sKv8DAgh75dTSSs1vppC4a9uDlHQNY2MrEqLNeM99SurF5w2lu5V7b9TLmtpk05GIedSKdGb1hbSRlI_m35AYLChu5lZayLNXJ9sum70SL20_IVe6UQPNS4XCa63g2NCe_kOAP-tfLKw1GmzBwtVTskHa2ImgVRT5GfyA-48DNkiXi51czDHNo1aNZUcdf7RLJ44kSsfommZIYX2J37LxQqFCIW8THBeIM7AQjjsAiTXYnYpWx4MKxBA
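`awk 'NR==3'` relies on the token being the third line that contains "token". The sketch below matches the `token:` field by name instead; the helper name is hypothetical.

Note that Kubernetes 1.24 and later no longer create service-account token secrets automatically. There, `kubectl -n kube-system create token dashboard-admin` issues a token directly.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the bearer token from `kubectl describe secret` output on stdin.
# Matches the "token:" field by name instead of relying on its line position.
token_from_describe() {
  awk '$1 == "token:" { print $2; found = 1 } END { exit (found ? 0 : 1) }'
}
```

Usage: `kubectl -n kube-system describe $(kubectl -n kube-system get secret -o name | grep dashboard-admin) | token_from_describe`.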

View the dashboard logs. They look roughly like this; replace the Pod name with your own:

[root@master ~]# kubectl logs -f -n kubernetes-dashboard kubernetes-dashboard-5d4dc8b976-brxv9
2020/11/27 01:30:06 [2020-11-27T01:30:06Z] Outcoming response to 172.25.10.23:4039 with 200 status code
2020/11/27 01:30:10 [2020-11-27T01:30:10Z] Incoming HTTP/2.0 GET /api/v1/namespace request from 172.25.10.23:4039:
2020/11/27 01:30:10 Getting list of namespaces
2020/11/27 01:30:10 [2020-11-27T01:30:10Z] Outcoming response to 172.25.10.23:4039 with 200 status code
2020/11/27 01:30:11 [2020-11-27T01:30:11Z] Incoming HTTP/2.0 GET /api/v1/node?itemsPerPage=10&page=1&sortBy=d,creationTimestamp request from 172.25.10.23:4039:
2020/11/27 01:30:11 [2020-11-27T01:30:11Z] Outcoming response to 172.25.10.23:4039 with 200 status code
2020/11/27 01:30:15 [2020-11-27T01:30:15Z] Incoming HTTP/2.0 GET /api/v1/namespace request from 172.25.10.23:4039:
2020/11/27 01:30:15 Getting list of namespaces
2020/11/27 01:30:15 [2020-11-27T01:30:15Z] Outcoming response to 172.25.10.23:4039 with 200 status code
2020/11/27 01:30:16 [2020-11-27T01:30:16Z] Incoming HTTP/2.0 GET /api/v1/node?itemsPerPage=10&page=1&sortBy=d,creationTimestamp request from 172.25.10.23:4039:
2020/11/27 01:30:16 [2020-11-27T01:30:16Z] Outcoming response to 172.25.10.23:4039 with 200 status code
2020/11/27 01:30:20 [2020-11-27T01:30:20Z] Incoming HTTP/2.0 GET /api/v1/namespace request from 172.25.10.23:4039:
2020/11/27 01:30:20 Getting list of namespaces
2020/11/27 01:30:20 [2020-11-27T01:30:20Z] Outcoming response to 172.25.10.23:4039 with 200 status code
2020/11/27 01:30:21 [2020-11-27T01:30:21Z] Incoming HTTP/2.0 GET /api/v1/node?itemsPerPage=10&page=1&sortBy=d,creationTimestamp request from 172.25.10.23:4039:
2020/11/27 01:30:21 [2020-11-27T01:30:21Z] Outcoming response to 172.25.10.23:4039 with 200 status code
2020/11/27 01:30:25 [2020-11-27T01:30:25Z] Incoming HTTP/2.0 GET /api/v1/namespace request from 172.25.10.23:4039:
2020/11/27 01:30:25 Getting list of namespaces
2020/11/27 01:30:25 [2020-11-27T01:30:25Z] Outcoming response to 172.25.10.23:4039 with 200 status code
2020/11/27 01:30:27 [2020-11-27T01:30:27Z] Incoming HTTP/2.0 GET /api/v1/node?itemsPerPage=10&page=1&sortBy=d,creationTimestamp request from 172.25.10.23:4039:
2020/11/27 01:30:27 [2020-11-27T01:30:27Z] Outcoming response to 172.25.10.23:4039 with 200 status code

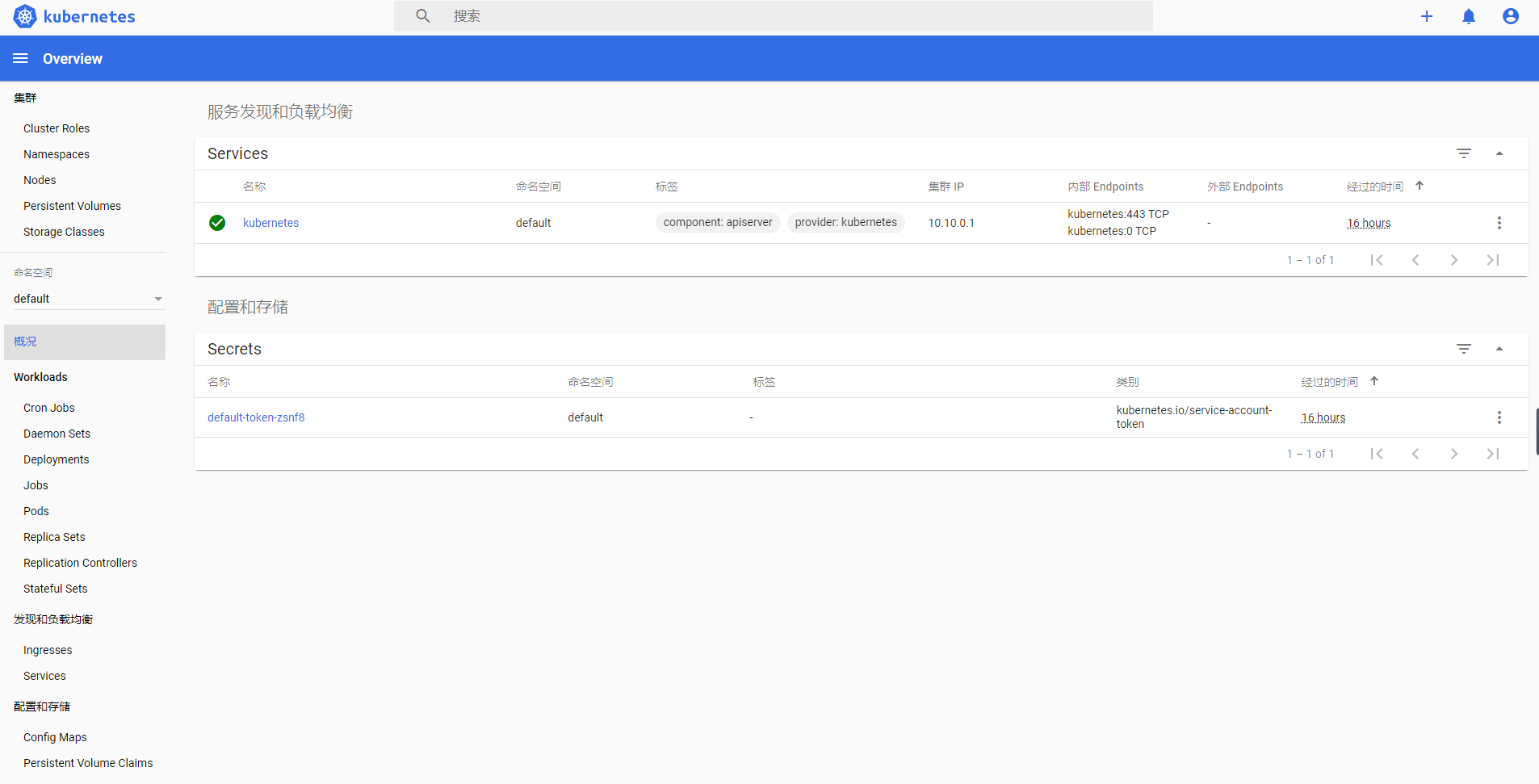

Refresh the dashboard, and the cluster's resources are now displayed.

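The installation is now complete. Worker nodes are set up almost the same way as above, except that you skip kubeadm init and instead run the join command printed earlier: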

kubeadm join 172.25.10.23:6443 --token vumdcz.2z3os5njsv4oboaz --discovery-token-ca-cert-hash sha256:89a9154ff203c6a3f851bd521bcb32bbc296d99b2a274648ffb6b80582827d9c

This works best when all nodes are on the same subnet.
