Official code location

https://github.com/tensorflow/models/blob/master/research/slim/nets/inception_v1.py

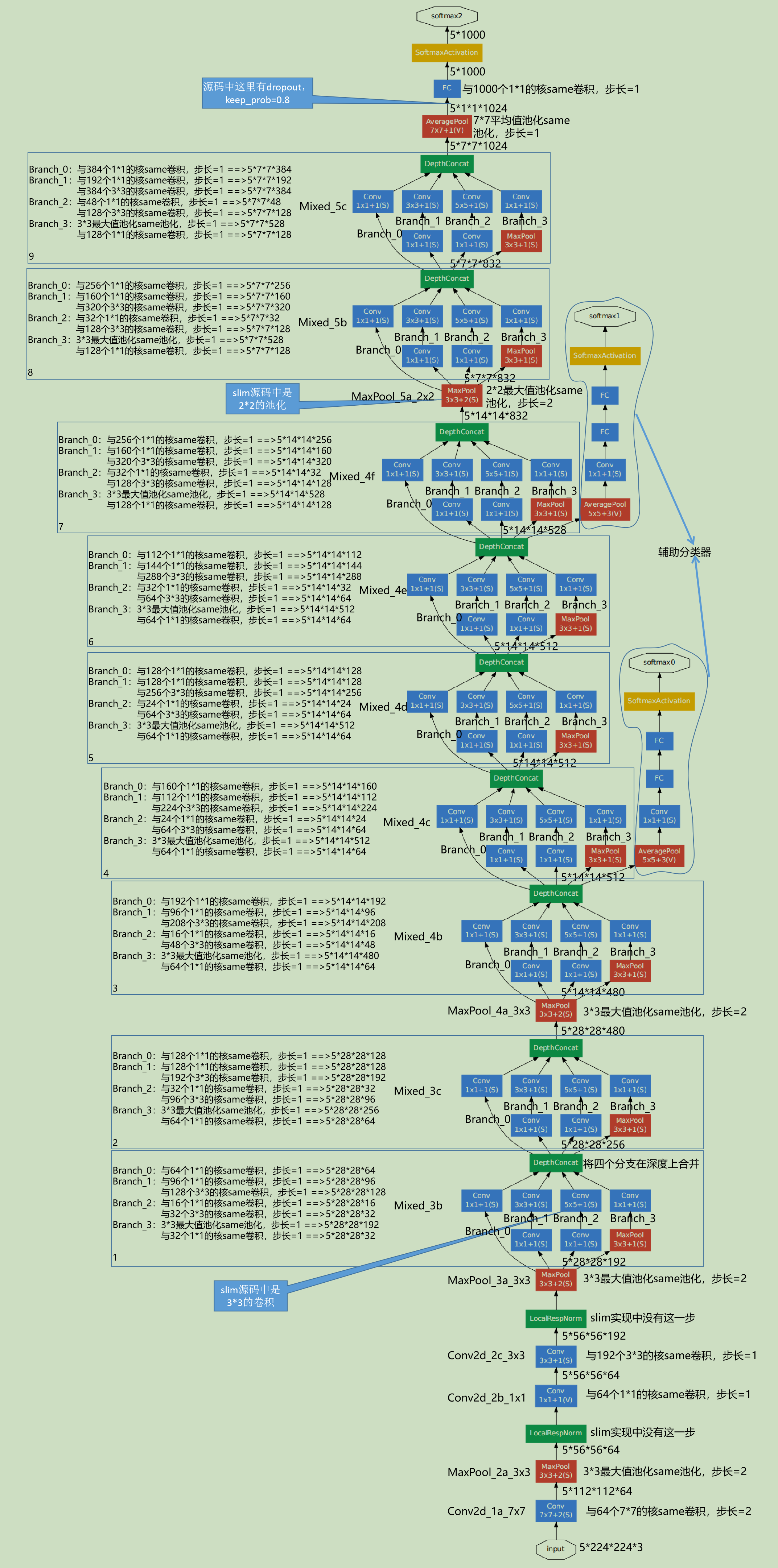

Network architecture diagram

Corresponding code

```python
# Copyright 2016 The TensorFlow Authors. All Rights Reserved.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
# ==============================================================================
"""Contains the definition for inception v1 classification network."""

from __future__ import absolute_import
from __future__ import division
from __future__ import print_function

import tensorflow as tf

from nets import inception_utils

slim = tf.contrib.slim
trunc_normal = lambda stddev: tf.truncated_normal_initializer(0.0, stddev)


def inception_v1_base(inputs,
                      final_endpoint='Mixed_5c',
                      include_root_block=True,
                      scope='InceptionV1'):
  """Defines the Inception V1 base architecture.

  This architecture is defined in:
    Going deeper with convolutions
    Christian Szegedy, Wei Liu, Yangqing Jia, Pierre Sermanet, Scott Reed,
    Dragomir Anguelov, Dumitru Erhan, Vincent Vanhoucke, Andrew Rabinovich.
    http://arxiv.org/pdf/1409.4842v1.pdf.

  Args:
    inputs: a tensor of size [batch_size, height, width, channels].
    final_endpoint: specifies the endpoint to construct the network up to. It
      can be one of ['Conv2d_1a_7x7', 'MaxPool_2a_3x3', 'Conv2d_2b_1x1',
      'Conv2d_2c_3x3', 'MaxPool_3a_3x3', 'Mixed_3b', 'Mixed_3c',
      'MaxPool_4a_3x3', 'Mixed_4b', 'Mixed_4c', 'Mixed_4d', 'Mixed_4e',
      'Mixed_4f', 'MaxPool_5a_2x2', 'Mixed_5b', 'Mixed_5c']. If
      include_root_block is False, ['Conv2d_1a_7x7', 'MaxPool_2a_3x3',
      'Conv2d_2b_1x1', 'Conv2d_2c_3x3', 'MaxPool_3a_3x3'] will not be available.
    include_root_block: If True, include the convolution and max-pooling layers
      before the inception modules. If False, excludes those layers.
    scope: Optional variable_scope.

  Returns:
    A dictionary from components of the network to the corresponding activation.

  Raises:
    ValueError: if final_endpoint is not set to one of the predefined values.
  """
  end_points = {}
  with tf.variable_scope(scope, 'InceptionV1', [inputs]):
    with slim.arg_scope(
        [slim.conv2d, slim.fully_connected],
        weights_initializer=trunc_normal(0.01)):
      with slim.arg_scope([slim.conv2d, slim.max_pool2d],
                          stride=1, padding='SAME'):
        net = inputs
        if include_root_block:
          end_point = 'Conv2d_1a_7x7'
          net = slim.conv2d(inputs, 64, [7, 7], stride=2, scope=end_point)
          end_points[end_point] = net
          if final_endpoint == end_point:
            return net, end_points
          end_point = 'MaxPool_2a_3x3'
          net = slim.max_pool2d(net, [3, 3], stride=2, scope=end_point)
          end_points[end_point] = net
          if final_endpoint == end_point:
            return net, end_points
          end_point = 'Conv2d_2b_1x1'
          net = slim.conv2d(net, 64, [1, 1], scope=end_point)
          end_points[end_point] = net
          if final_endpoint == end_point:
            return net, end_points
          end_point = 'Conv2d_2c_3x3'
          net = slim.conv2d(net, 192, [3, 3], scope=end_point)
          end_points[end_point] = net
          if final_endpoint == end_point:
            return net, end_points
          end_point = 'MaxPool_3a_3x3'
          net = slim.max_pool2d(net, [3, 3], stride=2, scope=end_point)
          end_points[end_point] = net
          if final_endpoint == end_point:
            return net, end_points

        end_point = 'Mixed_3b'
        with tf.variable_scope(end_point):
          with tf.variable_scope('Branch_0'):
            branch_0 = slim.conv2d(net, 64, [1, 1], scope='Conv2d_0a_1x1')
          with tf.variable_scope('Branch_1'):
            branch_1 = slim.conv2d(net, 96, [1, 1], scope='Conv2d_0a_1x1')
            branch_1 = slim.conv2d(branch_1, 128, [3, 3], scope='Conv2d_0b_3x3')
          with tf.variable_scope('Branch_2'):
            branch_2 = slim.conv2d(net, 16, [1, 1], scope='Conv2d_0a_1x1')
            branch_2 = slim.conv2d(branch_2, 32, [3, 3], scope='Conv2d_0b_3x3')
          with tf.variable_scope('Branch_3'):
            branch_3 = slim.max_pool2d(net, [3, 3], scope='MaxPool_0a_3x3')
            branch_3 = slim.conv2d(branch_3, 32, [1, 1], scope='Conv2d_0b_1x1')
          net = tf.concat(
              axis=3, values=[branch_0, branch_1, branch_2, branch_3])
        end_points[end_point] = net
        if final_endpoint == end_point: return net, end_points

        end_point = 'Mixed_3c'
        with tf.variable_scope(end_point):
          with tf.variable_scope('Branch_0'):
            branch_0 = slim.conv2d(net, 128, [1, 1], scope='Conv2d_0a_1x1')
          with tf.variable_scope('Branch_1'):
            branch_1 = slim.conv2d(net, 128, [1, 1], scope='Conv2d_0a_1x1')
            branch_1 = slim.conv2d(branch_1, 192, [3, 3], scope='Conv2d_0b_3x3')
          with tf.variable_scope('Branch_2'):
            branch_2 = slim.conv2d(net, 32, [1, 1], scope='Conv2d_0a_1x1')
            branch_2 = slim.conv2d(branch_2, 96, [3, 3], scope='Conv2d_0b_3x3')
          with tf.variable_scope('Branch_3'):
            branch_3 = slim.max_pool2d(net, [3, 3], scope='MaxPool_0a_3x3')
            branch_3 = slim.conv2d(branch_3, 64, [1, 1], scope='Conv2d_0b_1x1')
          net = tf.concat(
              axis=3, values=[branch_0, branch_1, branch_2, branch_3])
        end_points[end_point] = net
        if final_endpoint == end_point: return net, end_points

        end_point = 'MaxPool_4a_3x3'
        net = slim.max_pool2d(net, [3, 3], stride=2, scope=end_point)
        end_points[end_point] = net
        if final_endpoint == end_point: return net, end_points

        end_point = 'Mixed_4b'
        with tf.variable_scope(end_point):
          with tf.variable_scope('Branch_0'):
            branch_0 = slim.conv2d(net, 192, [1, 1], scope='Conv2d_0a_1x1')
          with tf.variable_scope('Branch_1'):
            branch_1 = slim.conv2d(net, 96, [1, 1], scope='Conv2d_0a_1x1')
            branch_1 = slim.conv2d(branch_1, 208, [3, 3], scope='Conv2d_0b_3x3')
          with tf.variable_scope('Branch_2'):
            branch_2 = slim.conv2d(net, 16, [1, 1], scope='Conv2d_0a_1x1')
            branch_2 = slim.conv2d(branch_2, 48, [3, 3], scope='Conv2d_0b_3x3')
          with tf.variable_scope('Branch_3'):
            branch_3 = slim.max_pool2d(net, [3, 3], scope='MaxPool_0a_3x3')
            branch_3 = slim.conv2d(branch_3, 64, [1, 1], scope='Conv2d_0b_1x1')
          net = tf.concat(
              axis=3, values=[branch_0, branch_1, branch_2, branch_3])
        end_points[end_point] = net
        if final_endpoint == end_point: return net, end_points

        end_point = 'Mixed_4c'
        with tf.variable_scope(end_point):
          with tf.variable_scope('Branch_0'):
            branch_0 = slim.conv2d(net, 160, [1, 1], scope='Conv2d_0a_1x1')
          with tf.variable_scope('Branch_1'):
            branch_1 = slim.conv2d(net, 112, [1, 1], scope='Conv2d_0a_1x1')
            branch_1 = slim.conv2d(branch_1, 224, [3, 3], scope='Conv2d_0b_3x3')
          with tf.variable_scope('Branch_2'):
            branch_2 = slim.conv2d(net, 24, [1, 1], scope='Conv2d_0a_1x1')
            branch_2 = slim.conv2d(branch_2, 64, [3, 3], scope='Conv2d_0b_3x3')
          with tf.variable_scope('Branch_3'):
            branch_3 = slim.max_pool2d(net, [3, 3], scope='MaxPool_0a_3x3')
            branch_3 = slim.conv2d(branch_3, 64, [1, 1], scope='Conv2d_0b_1x1')
          net = tf.concat(
              axis=3, values=[branch_0, branch_1, branch_2, branch_3])
        end_points[end_point] = net
        if final_endpoint == end_point: return net, end_points

        end_point = 'Mixed_4d'
        with tf.variable_scope(end_point):
          with tf.variable_scope('Branch_0'):
            branch_0 = slim.conv2d(net, 128, [1, 1], scope='Conv2d_0a_1x1')
          with tf.variable_scope('Branch_1'):
            branch_1 = slim.conv2d(net, 128, [1, 1], scope='Conv2d_0a_1x1')
            branch_1 = slim.conv2d(branch_1, 256, [3, 3], scope='Conv2d_0b_3x3')
          with tf.variable_scope('Branch_2'):
            branch_2 = slim.conv2d(net, 24, [1, 1], scope='Conv2d_0a_1x1')
            branch_2 = slim.conv2d(branch_2, 64, [3, 3], scope='Conv2d_0b_3x3')
          with tf.variable_scope('Branch_3'):
            branch_3 = slim.max_pool2d(net, [3, 3], scope='MaxPool_0a_3x3')
            branch_3 = slim.conv2d(branch_3, 64, [1, 1], scope='Conv2d_0b_1x1')
          net = tf.concat(
              axis=3, values=[branch_0, branch_1, branch_2, branch_3])
        end_points[end_point] = net
        if final_endpoint == end_point: return net, end_points

        end_point = 'Mixed_4e'
        with tf.variable_scope(end_point):
          with tf.variable_scope('Branch_0'):
            branch_0 = slim.conv2d(net, 112, [1, 1], scope='Conv2d_0a_1x1')
          with tf.variable_scope('Branch_1'):
            branch_1 = slim.conv2d(net, 144, [1, 1], scope='Conv2d_0a_1x1')
            branch_1 = slim.conv2d(branch_1, 288, [3, 3], scope='Conv2d_0b_3x3')
          with tf.variable_scope('Branch_2'):
            branch_2 = slim.conv2d(net, 32, [1, 1], scope='Conv2d_0a_1x1')
            branch_2 = slim.conv2d(branch_2, 64, [3, 3], scope='Conv2d_0b_3x3')
          with tf.variable_scope('Branch_3'):
            branch_3 = slim.max_pool2d(net, [3, 3], scope='MaxPool_0a_3x3')
            branch_3 = slim.conv2d(branch_3, 64, [1, 1], scope='Conv2d_0b_1x1')
          net = tf.concat(
              axis=3, values=[branch_0, branch_1, branch_2, branch_3])
        end_points[end_point] = net
        if final_endpoint == end_point: return net, end_points

        end_point = 'Mixed_4f'
        with tf.variable_scope(end_point):
          with tf.variable_scope('Branch_0'):
            branch_0 = slim.conv2d(net, 256, [1, 1], scope='Conv2d_0a_1x1')
          with tf.variable_scope('Branch_1'):
            branch_1 = slim.conv2d(net, 160, [1, 1], scope='Conv2d_0a_1x1')
            branch_1 = slim.conv2d(branch_1, 320, [3, 3], scope='Conv2d_0b_3x3')
          with tf.variable_scope('Branch_2'):
            branch_2 = slim.conv2d(net, 32, [1, 1], scope='Conv2d_0a_1x1')
            branch_2 = slim.conv2d(branch_2, 128, [3, 3], scope='Conv2d_0b_3x3')
          with tf.variable_scope('Branch_3'):
            branch_3 = slim.max_pool2d(net, [3, 3], scope='MaxPool_0a_3x3')
            branch_3 = slim.conv2d(branch_3, 128, [1, 1], scope='Conv2d_0b_1x1')
          net = tf.concat(
              axis=3, values=[branch_0, branch_1, branch_2, branch_3])
        end_points[end_point] = net
        if final_endpoint == end_point: return net, end_points

        end_point = 'MaxPool_5a_2x2'
        net = slim.max_pool2d(net, [2, 2], stride=2, scope=end_point)
        end_points[end_point] = net
        if final_endpoint == end_point: return net, end_points

        end_point = 'Mixed_5b'
        with tf.variable_scope(end_point):
          with tf.variable_scope('Branch_0'):
            branch_0 = slim.conv2d(net, 256, [1, 1], scope='Conv2d_0a_1x1')
          with tf.variable_scope('Branch_1'):
            branch_1 = slim.conv2d(net, 160, [1, 1], scope='Conv2d_0a_1x1')
            branch_1 = slim.conv2d(branch_1, 320, [3, 3], scope='Conv2d_0b_3x3')
          with tf.variable_scope('Branch_2'):
            branch_2 = slim.conv2d(net, 32, [1, 1], scope='Conv2d_0a_1x1')
            branch_2 = slim.conv2d(branch_2, 128, [3, 3], scope='Conv2d_0a_3x3')
          with tf.variable_scope('Branch_3'):
            branch_3 = slim.max_pool2d(net, [3, 3], scope='MaxPool_0a_3x3')
            branch_3 = slim.conv2d(branch_3, 128, [1, 1], scope='Conv2d_0b_1x1')
          net = tf.concat(
              axis=3, values=[branch_0, branch_1, branch_2, branch_3])
        end_points[end_point] = net
        if final_endpoint == end_point: return net, end_points

        end_point = 'Mixed_5c'
        with tf.variable_scope(end_point):
          with tf.variable_scope('Branch_0'):
            branch_0 = slim.conv2d(net, 384, [1, 1], scope='Conv2d_0a_1x1')
          with tf.variable_scope('Branch_1'):
            branch_1 = slim.conv2d(net, 192, [1, 1], scope='Conv2d_0a_1x1')
            branch_1 = slim.conv2d(branch_1, 384, [3, 3], scope='Conv2d_0b_3x3')
          with tf.variable_scope('Branch_2'):
            branch_2 = slim.conv2d(net, 48, [1, 1], scope='Conv2d_0a_1x1')
            branch_2 = slim.conv2d(branch_2, 128, [3, 3], scope='Conv2d_0b_3x3')
          with tf.variable_scope('Branch_3'):
            branch_3 = slim.max_pool2d(net, [3, 3], scope='MaxPool_0a_3x3')
            branch_3 = slim.conv2d(branch_3, 128, [1, 1], scope='Conv2d_0b_1x1')
          net = tf.concat(
              axis=3, values=[branch_0, branch_1, branch_2, branch_3])
        end_points[end_point] = net
        if final_endpoint == end_point: return net, end_points
    raise ValueError('Unknown final endpoint %s' % final_endpoint)


def inception_v1(inputs,
                 num_classes=1000,
                 is_training=True,
                 dropout_keep_prob=0.8,
                 prediction_fn=slim.softmax,
                 spatial_squeeze=True,
                 reuse=None,
                 scope='InceptionV1',
                 global_pool=False):
  """Defines the Inception V1 architecture.

  This architecture is defined in:

    Going deeper with convolutions
    Christian Szegedy, Wei Liu, Yangqing Jia, Pierre Sermanet, Scott Reed,
    Dragomir Anguelov, Dumitru Erhan, Vincent Vanhoucke, Andrew Rabinovich.
    http://arxiv.org/pdf/1409.4842v1.pdf.

  The default image size used to train this network is 224x224.

  Args:
    inputs: a tensor of size [batch_size, height, width, channels].
    num_classes: number of predicted classes. If 0 or None, the logits layer
      is omitted and the input features to the logits layer (before dropout)
      are returned instead.
    is_training: whether is training or not.
    dropout_keep_prob: the percentage of activation values that are retained.
    prediction_fn: a function to get predictions out of logits.
    spatial_squeeze: if True, logits is of shape [B, C], if false logits is of
        shape [B, 1, 1, C], where B is batch_size and C is number of classes.
    reuse: whether or not the network and its variables should be reused. To be
      able to reuse 'scope' must be given.
    scope: Optional variable_scope.
    global_pool: Optional boolean flag to control the avgpooling before the
      logits layer. If false or unset, pooling is done with a fixed window
      that reduces default-sized inputs to 1x1, while larger inputs lead to
      larger outputs. If true, any input size is pooled down to 1x1.

  Returns:
    net: a Tensor with the logits (pre-softmax activations) if num_classes
      is a non-zero integer, or the non-dropped-out input to the logits layer
      if num_classes is 0 or None.
    end_points: a dictionary from components of the network to the corresponding
      activation.
  """
  # Final pooling and prediction
  with tf.variable_scope(scope, 'InceptionV1', [inputs], reuse=reuse) as scope:
    with slim.arg_scope([slim.batch_norm, slim.dropout],
                        is_training=is_training):
      net, end_points = inception_v1_base(inputs, scope=scope)
      with tf.variable_scope('Logits'):
        if global_pool:
          # Global average pooling.
          net = tf.reduce_mean(net, [1, 2], keep_dims=True, name='global_pool')
          end_points['global_pool'] = net
        else:
          # Pooling with a fixed kernel size.
          net = slim.avg_pool2d(net, [7, 7], stride=1, scope='AvgPool_0a_7x7')
          end_points['AvgPool_0a_7x7'] = net
        if not num_classes:
          return net, end_points
        net = slim.dropout(net, dropout_keep_prob, scope='Dropout_0b')
        logits = slim.conv2d(net, num_classes, [1, 1], activation_fn=None,
                             normalizer_fn=None, scope='Conv2d_0c_1x1')
        if spatial_squeeze:
          logits = tf.squeeze(logits, [1, 2], name='SpatialSqueeze')

        end_points['Logits'] = logits
        end_points['Predictions'] = prediction_fn(logits, scope='Predictions')
  return logits, end_points
inception_v1.default_image_size = 224

inception_v1_arg_scope = inception_utils.inception_arg_scope
```
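To check the listing against the architecture diagram, it can help to tally the shapes by hand. The sketch below is plain Python with no TensorFlow dependency. It sums the four branch widths of each `Mixed_*` block (the `tf.concat(axis=3, ...)` call stacks them along the channel axis) and follows the spatial size of a square input through the five stride-2 layers, assuming the `padding='SAME'` rule that output size equals `ceil(input / stride)`. The names `MIXED_BRANCHES`, `STRIDE_2_LAYERS`, `mixed_output_channels`, and `spatial_size_after_base` are illustrative helpers invented here, not part of slim.

```python
import math

# Output channels of Branch_0..Branch_3 in each Mixed block,
# copied from the final slim.conv2d call of every branch in the listing.
MIXED_BRANCHES = {
    'Mixed_3b': (64, 128, 32, 32),
    'Mixed_3c': (128, 192, 96, 64),
    'Mixed_4b': (192, 208, 48, 64),
    'Mixed_4c': (160, 224, 64, 64),
    'Mixed_4d': (128, 256, 64, 64),
    'Mixed_4e': (112, 288, 64, 64),
    'Mixed_4f': (256, 320, 128, 128),
    'Mixed_5b': (256, 320, 128, 128),
    'Mixed_5c': (384, 384, 128, 128),
}

# Layers in inception_v1_base that are called with stride=2.
STRIDE_2_LAYERS = (
    'Conv2d_1a_7x7',
    'MaxPool_2a_3x3',
    'MaxPool_3a_3x3',
    'MaxPool_4a_3x3',
    'MaxPool_5a_2x2',
)


def mixed_output_channels(name):
    """Channel depth after concatenating the four branches on axis 3."""
    return sum(MIXED_BRANCHES[name])


def spatial_size_after_base(input_size=224):
    """Height/width after the base network, assuming SAME padding throughout."""
    size = input_size
    for _ in STRIDE_2_LAYERS:
        # SAME padding: output = ceil(input / stride), here stride is 2.
        size = math.ceil(size / 2)
    return size


if __name__ == '__main__':
    for block in MIXED_BRANCHES:
        print(block, mixed_output_channels(block))
    print('final spatial size:', spatial_size_after_base(224))
```

For the default 224x224 input this gives a 7x7 feature map with 1024 channels at `Mixed_5c`, which is why the non-global branch of `inception_v1` uses a fixed `[7, 7]` kernel in `AvgPool_0a_7x7`.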