昨晚安装完sbt之后发现打包的时候又出BUG了,因此决定实验中的打包操作使用本机的IDEA进行,结合虚拟机中的Spark与Scala版本,在IDEA的pom.xml中引入下面这些东西:

<?xml version="1.0" encoding="UTF-8"?> <project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd"> <modelVersion>4.0.0</modelVersion> <groupId>org.example</groupId> <artifactId>ScoreAver</artifactId> <version>1.0-SNAPSHOT</version> <properties> <spark.version>2.2.3</spark.version> <scala.version>2.11</scala.version> </properties> <dependencies> <dependency> <groupId>org.apache.spark</groupId> <artifactId>spark-core_${scala.version}</artifactId> <version>${spark.version}</version> </dependency> <dependency> <groupId>org.apache.spark</groupId> <artifactId>spark-streaming_${scala.version}</artifactId> <version>${spark.version}</version> </dependency> <dependency> <groupId>org.apache.spark</groupId> <artifactId>spark-sql_${scala.version}</artifactId> <version>${spark.version}</version> </dependency> <dependency> <groupId>org.apache.spark</groupId> <artifactId>spark-hive_${scala.version}</artifactId> <version>${spark.version}</version> </dependency> <dependency> <groupId>org.apache.spark</groupId> <artifactId>spark-mllib_${scala.version}</artifactId> <version>${spark.version}</version> </dependency> <dependency> <groupId>org.scala-tools</groupId> <artifactId>maven-scala-plugin</artifactId> <version>2.11</version> </dependency> <dependency> <groupId>org.apache.maven.plugins</groupId> <artifactId>maven-eclipse-plugin</artifactId> <version>2.5.1</version> </dependency> </dependencies> <build> <plugins> <plugin> <groupId>org.apache.maven.plugins</groupId> <artifactId>maven-assembly-plugin</artifactId> <version>2.5.5</version> <configuration> <archive> <manifest> <mainClass>Aver</mainClass> </manifest> </archive> <descriptorRefs> <descriptorRef>jar-with-dependencies</descriptorRef> </descriptorRefs> </configuration> <executions> <execution> <id>make-assembly</id> <phase>package</phase> <goals> <goal>single</goal> </goals> </execution> </executions> </plugin> <plugin> <groupId>org.scala-tools</groupId> <artifactId>maven-scala-plugin</artifactId> <executions> <execution> <goals> <goal>compile</goal> <goal>testCompile</goal> </goals> </execution> </executions> <configuration> <scalaVersion>${scala.version}</scalaVersion> <args> <arg>-target:jvm-1.5</arg> </args> </configuration> </plugin> <plugin> <artifactId>maven-compiler-plugin</artifactId> <version>3.6.0</version> <configuration> <source>1.8</source> <target>1.8</target> </configuration> </plugin> <plugin> <groupId>org.apache.maven.plugins</groupId> <artifactId>maven-surefire-plugin</artifactId> <version>2.19</version> <configuration> <skip>true</skip> </configuration> </plugin> </plugins> </build> </project>

<mainClass>Aver</mainClass>这里指定运行jar时的调用入口,与object要一致,我这里没有创建package,否则格式是:包名.类名:

import org.apache.spark.SparkContext import org.apache.spark.SparkContext._ import org.apache.spark.SparkConf object Aver { def main(args: Array[String]): Unit = { val conf = new SparkConf().setAppName("Aver") val sc = new SparkContext(conf) val scoredata = sc.textFile("file:///home/hadoop02/Downloads/StudGrade") val res = scoredata.filter(_.trim().length>0).map(line=>(line.split(",")(0).trim(),line.split(",")(1).trim().toInt)) .groupByKey() .map(x=>{ var num = 0.0 var sum = 0 for(i <- x._2){ sum = sum + i num = num + 1 } val avg = sum/num val format = f"$avg%1.2f".toDouble (x._1,format) }).collect.foreach(x=>println(x._1+" "+x._2)) } }

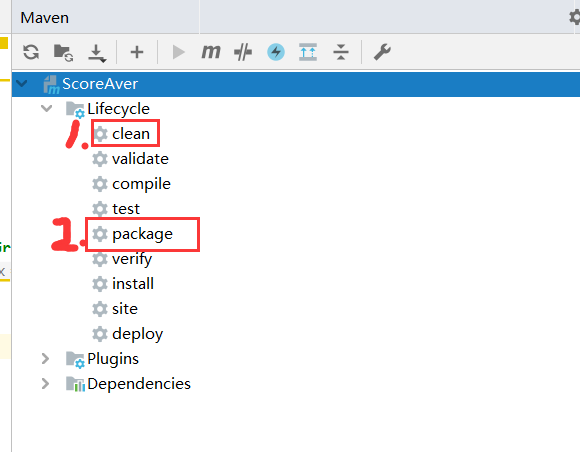

打包操作就是Maven的流程: