Source code: the crawler part

    package utils;

    import java.io.IOException;
    import java.util.ArrayList;
    import java.util.List;

    import org.apache.http.HttpEntity;
    import org.apache.http.NameValuePair;
    import org.apache.http.client.config.RequestConfig;
    import org.apache.http.client.entity.UrlEncodedFormEntity;
    import org.apache.http.client.methods.CloseableHttpResponse;
    import org.apache.http.client.methods.HttpGet;
    import org.apache.http.client.methods.HttpPost;
    import org.apache.http.client.utils.URIBuilder;
    import org.apache.http.impl.client.CloseableHttpClient;
    import org.apache.http.impl.client.HttpClients;
    import org.apache.http.impl.conn.PoolingHttpClientConnectionManager;
    import org.apache.http.message.BasicNameValuePair;
    import org.apache.http.util.EntityUtils;

    /**
     * HttpClient connection pool helper.
     */
    public class HttpClientPool {

        /**
         * Creates the connection pool manager.
         * (The original version was a void method named HttpClientPool that
         * built the manager and then discarded it; it is returned here so
         * callers can pass it to doGet/doPost.)
         */
        public static PoolingHttpClientConnectionManager createConnectionManager() {
            // Create the connection pool manager
            PoolingHttpClientConnectionManager cm = new PoolingHttpClientConnectionManager();
            // Maximum number of connections in total
            cm.setMaxTotal(100);
            // Maximum number of connections per host
            cm.setDefaultMaxPerRoute(10);
            return cm;
        }

        public static String doPost(PoolingHttpClientConnectionManager cm) throws Exception {
            // Get an HttpClient backed by the connection pool
            CloseableHttpClient httpClient = HttpClients.custom().setConnectionManager(cm).build();

            // Step 2: "type in the URL", i.e. create the HttpPost object
            HttpPost httpPost = new HttpPost("http://m.sinovision.net/newpneumonia.php");
            System.out.println("Request: " + httpPost);

            // For POST: collect the form parameters in a list
            List<NameValuePair> params = new ArrayList<NameValuePair>();
            // Placeholder (empty name and value); replace with real form fields
            params.add(new BasicNameValuePair("", ""));
            // Build the form entity: first argument is the parameters, second the encoding
            UrlEncodedFormEntity formEntity = new UrlEncodedFormEntity(params, "UTF-8");
            // Attach the form entity to the POST request
            httpPost.setEntity(formEntity);

            // Configure the request (all timeouts are in milliseconds)
            RequestConfig config = RequestConfig.custom()
                    .setConnectTimeout(1000)          // max time to establish the connection
                    .setConnectionRequestTimeout(500) // max time to obtain a connection from the pool
                    .setSocketTimeout(10 * 1000)      // max time to wait for data
                    .build();
            httpPost.setConfig(config);

            CloseableHttpResponse response = null;
            String content = null;
            try {
                // Step 3: "press Enter", i.e. send the request and get the response
                response = httpClient.execute(httpPost);
                // Parse the response only if the status code is 200
                if (response.getStatusLine().getStatusCode() == 200) {
                    HttpEntity httpEntity = response.getEntity();
                    if (httpEntity != null) {
                        content = EntityUtils.toString(httpEntity, "UTF-8");
                        System.out.println(content.length());
                    }
                } else {
                    System.out.println("Request failed: " + response);
                }
            } catch (Exception e) {
                e.printStackTrace();
            } finally {
                try {
                    // Close the response, but not the httpClient:
                    // its connections belong to the pool
                    if (response != null) {
                        response.close();
                    }
                } catch (IOException e) {
                    e.printStackTrace();
                }
            }
            return content;
        }

        public static String doGet(PoolingHttpClientConnectionManager cm) throws Exception {
            // Get an HttpClient backed by the connection pool
            CloseableHttpClient httpClient = HttpClients.custom().setConnectionManager(cm).build();

            // Build the URI
            URIBuilder uribuilder = new URIBuilder("http://m.sinovision.net/newpneumonia.php");
            // Query parameters: name + value, may be set several times.
            // Placeholder (empty name and value); replace with real parameters
            uribuilder.setParameter("", "");

            // Step 2: "type in the URL", i.e. create the HttpGet object
            HttpGet httpGet = new HttpGet(uribuilder.build());
            System.out.println("Request: " + httpGet);

            // Configure the request (all timeouts are in milliseconds).
            // The original passed 1000000000*1000000000 to each setter, which
            // overflows int and yields a negative value; the generous but
            // explicit values below are my replacement.
            RequestConfig config = RequestConfig.custom()
                    .setConnectTimeout(10 * 1000)          // max time to establish the connection
                    .setConnectionRequestTimeout(5 * 1000) // max time to obtain a connection from the pool
                    .setSocketTimeout(30 * 1000)           // max time to wait for data
                    .build();
            httpGet.setConfig(config);

            CloseableHttpResponse response = null;
            String content = null;
            try {
                // Step 3: "press Enter", i.e. send the request and get the response
                response = httpClient.execute(httpGet);
                // Parse the response only if the status code is 200
                if (response.getStatusLine().getStatusCode() == 200) {
                    HttpEntity httpEntity = response.getEntity();
                    if (httpEntity != null) {
                        content = EntityUtils.toString(httpEntity, "UTF-8");
                    }
                }
            } catch (Exception e) {
                e.printStackTrace();
            } finally {
                try {
                    // Close the response, but never the httpClient:
                    // its connections belong to the pool
                    if (response != null) {
                        response.close();
                    }
                } catch (IOException e) {
                    e.printStackTrace();
                }
            }
            return content;
        }
    }
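
A side note on the timeout values in `doGet`: the `RequestConfig` setters take an `int` number of milliseconds, and a product like `1000000000*1000000000` does not fit in an `int`. Java does not complain; the result silently wraps around to a negative number, which, as far as I know, HttpClient 4.x treats as "undefined, use the system default" rather than as a very long timeout. The small sketch below is not part of the project (the class and method names are made up for illustration). It only shows the wrap-around and one way to make it fail loudly instead:

```java
class TimeoutOverflowDemo {

    // Plain int multiplication: wraps around silently on overflow.
    static int wrappedProduct(int a, int b) {
        return a * b;
    }

    // Converts seconds to int milliseconds; throws ArithmeticException
    // instead of wrapping around if the result does not fit in an int.
    static int secondsToMillis(int seconds) {
        return Math.multiplyExact(seconds, 1000);
    }

    public static void main(String[] args) {
        int big = 1000000000;

        // What the timeout setters would actually receive: a negative int.
        System.out.println("wrapped int product: " + wrappedProduct(big, big));

        // Widening to long first gives the true product.
        System.out.println("exact long product:  " + ((long) big * big));

        // A safe way to write "10 seconds" as milliseconds.
        System.out.println("10 s in ms:          " + secondsToMillis(10));
    }
}
```

If a really long timeout is wanted, a literal such as `60 * 1000` stays well inside the `int` range.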

    package utils;

    import org.apache.http.impl.conn.PoolingHttpClientConnectionManager;
    import org.jsoup.Jsoup;
    import org.jsoup.nodes.Document;
    import org.jsoup.nodes.Element;
    import org.jsoup.select.Elements;

    import dao.dao;
    import entity.Info;

    public class Jsouputil {

        /**
         * Uses CSS selectors to extract the elements and stores each row.
         */
        public static void testSelector() throws Exception {
            // Create the connection pool manager
            PoolingHttpClientConnectionManager cm = new PoolingHttpClientConnectionManager();
            // Fetch the page HTML as a string (doGet is static, so no instance is needed)
            String content = HttpClientPool.doGet(cm);
            // doGet returns null when the request fails; Jsoup.parse would throw on null
            if (content == null) {
                System.out.println("No content fetched, nothing to parse");
                return;
            }
            // Parse the string into a Document
            Document doc = Jsoup.parse(content);

            // [attr=value]: select elements by attribute
            Elements elements = doc.select("div[class=todaydata]").select("div[class=prod]");
            System.out.println(elements.toString());

            dao dao = new dao();
            for (Element ele : elements) {
                String province = ele.select("span[class=area]").text();
                String confirmedNum = ele.select("span[class=confirm]").text();
                String deadNum = ele.select("span[class=dead]").text();
                String curedNum = ele.select("span[class=cured]").text();
                // One Info record per region, written to the database
                Info info = new Info(province, confirmedNum, deadNum, curedNum);
                dao.add(info);
            }
        }
    }

I mainly referred to https://home.cnblogs.com/u/yeyueweiliang

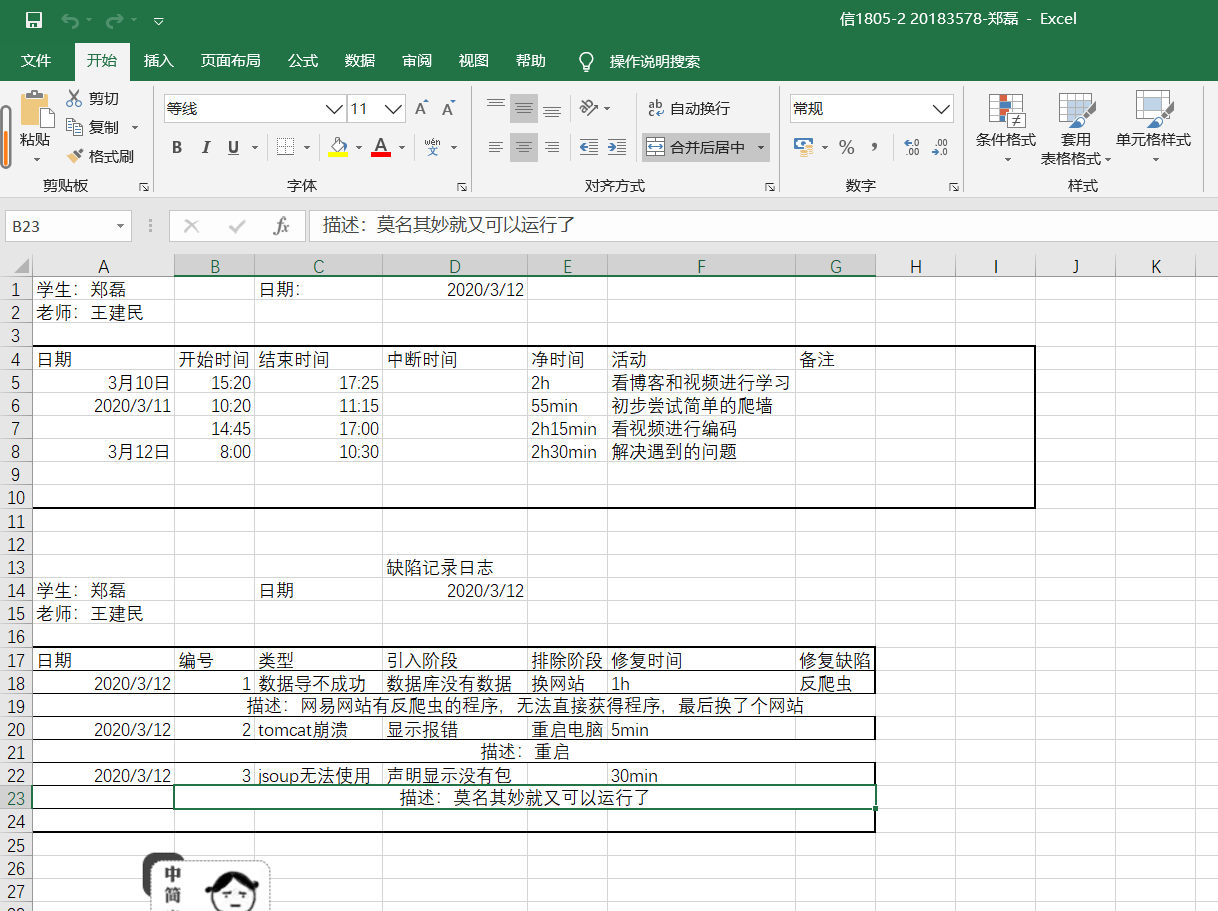

Next, the problems I ran into along the way:

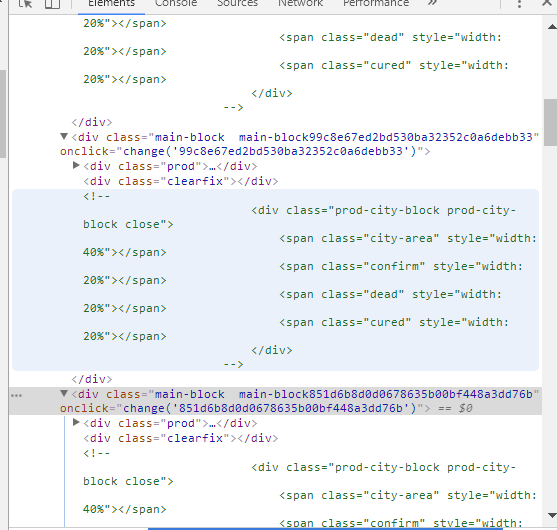

When looking for a site to crawl, I first tried this one:

https://wp.m.163.com/163/page/news/virus_report/index.html?_nw_=1&_anw_=1

It turned out that this NetEase page has anti-crawler protection, so I switched to another URL:

http://m.sinovision.net/newpneumonia.php
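
What kind of anti-crawler check the first page uses is not identified here. One thing that is often tried before giving up on a site is sending a browser-like `User-Agent` header, since some servers reject clients that do not send one. The sketch below is not from the project and may well not be enough for that particular page. To stay free of dependencies it uses the JDK's `java.net.http` (Java 11 or later) rather than Apache HttpClient, the class name and the `User-Agent` string are made up for illustration, and it only builds the request without sending anything:

```java
import java.net.URI;
import java.net.http.HttpRequest;
import java.time.Duration;

class BrowserLikeRequest {

    // Hypothetical browser-like User-Agent value, for illustration only.
    static final String USER_AGENT = "Mozilla/5.0 (Windows NT 10.0; Win64; x64)";

    // Builds (but does not send) a GET request carrying the header.
    static HttpRequest build(String url) {
        return HttpRequest.newBuilder(URI.create(url))
                .header("User-Agent", USER_AGENT)
                .timeout(Duration.ofSeconds(10)) // overall request timeout
                .GET()
                .build();
    }

    public static void main(String[] args) {
        HttpRequest request = build("http://m.sinovision.net/newpneumonia.php");
        System.out.println(request.method() + " " + request.uri());
        System.out.println(request.headers().firstValue("User-Agent").orElse("(none)"));
    }
}
```

With the Apache client used in this post, the corresponding call would be `httpGet.setHeader("User-Agent", ...)` before executing the request.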

The data sits at the following location on the page:

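Along the way, the data also failed to import into the database. It turned out that its structure differed from my original database schema, so in the end the chart would not display.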

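After that came a series of other problems, such as Tomcat crashing. All of them were solved by restarting the service, switching to another port, or rebooting the computer.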

Learning to write a crawler this time took three hours of study, two hours of practice, and two hours of troubleshooting.

The full source code is available in my GitHub repository:

https://github.com/diaolingjun/-2