1.自动编码器

自动编码器首先通过下面的映射,把输入 $xin[0,1]^{d}$映射到一个隐层 $yin[0,1]^{d^{'}}$(编码器):

$y=s(Wx+b)$

其中 $s$ 是非线性的函数,例如sigmoid. 隐层表示 $y$ ,即编码然后被映射回(通过解码器)一个重构的 $z$,形状与输入$x$ 一样:

$z=s(W^{'}y+b^{'})$

这里 $W^{'}$ 不是表示 $W$ 的转置。$z$ 应该看作在给定编码 $y$ 的条件下对 $x$ 的预测。反向映射的权重矩阵 $W^{'}$ 可能会被约束为前向映射的转置:

$W^{'}=W^{T}$,这就是所谓的tied weights, 所以这个模型的参数($W$, $b$, $b^{'}$)的优化就是使得平均重构误差最小化。

重构误差有很多方式衡量,主要取决于对输入给定的编码的分布的合适的假设。传统的平方差 $L(x,z)=||x-z||^{2}$ 可以应用到此。如果输入可以看作是位向量或者概率向量,交叉熵也可以用来衡量:

$L_{H}(x,z)=-sum_{k=1}^{d}[x_{k}log z_{k}+(1-x_{k})log(1-x_{k})]$

这就是希望编码 $y$ 是一种分布的表示,这种表示能够捕捉到输入数据变化的主元的坐标,有一点类似于PCA.

确实,如果有一层线性隐层,并且用均方差最小化的准则来训练网络,那么 $k$ 个隐单元就是学习去把输入映射到数据最开始的 $k$ 个主元的的范围之内。如果隐层是非线性的,自动编码器就不同于PCA,因为自动编码器能够捕获数据分布的不同方面。

因为 $y$ 可以看做是 $x$ 的有损失的压缩,所以很难做到对所有的输入 $x$ 都能产生很好(损失小)的压缩。优化就是要使得对于训练样本的压缩很好,并且希望对于其他输入同样有很好的压缩,但是这里的其他输入并不是指任意的输入,一般要求其他输入要和训练集是服从同一分布的。这就是一个自动编码器泛化的意义:对于与训练样本来自同一分布的测试样本,产生很小的重构误差,但是对于在输入空间随意采样的的其他输入通常产生很高的重构误差。

首先用theano来实现自动编码器----

1 class dA(object): 2 """Auto-Encoder class 3 4 一个降噪自动编码器就是去通过把一些加噪声的输入映射到隐层空间,然后再映射回输入空间来 5 重构真实的输入。 6 (1)首先把真实输入加噪声 7 (2)把加噪声的输入映射到隐层空间 8 (3)重构真实输入 9 (4)计算重构误差 10 .. math:: 11 12 ilde{x} ~ q_D( ilde{x}|x) (1) 13 14 y = s(W ilde{x} + b) (2) 15 16 x = s(W' y + b') (3) 17 18 L(x,z) = -sum_{k=1}^d [x_k log z_k + (1-x_k) log( 1-z_k)] (4) 19 20 """ 21 22 def __init__( 23 self, 24 numpy_rng, 25 theano_rng=None, 26 input=None, 27 n_visible=784, 28 n_hidden=500, 29 W=None, 30 bhid=None, 31 bvis=None 32 ): 33 """ 34 dA类的初始化:可视层单元数量,隐层单元数量,加噪声程度。重构过程还需要输入,权重 35 和偏置。 36 :type numpy_rng: numpy.random.RandomState 37 :param numpy_rng: number random generator used to generate weights 38 39 :type theano_rng: theano.tensor.shared_randomstreams.RandomStreams 40 :param theano_rng: Theano random generator; if None is given one is 41 generated based on a seed drawn from `rng` 42 43 :type input: theano.tensor.TensorType 44 :param input: a symbolic description of the input or None for 45 standalone dA 46 47 :type n_visible: int 48 :param n_visible: number of visible units 49 50 :type n_hidden: int 51 :param n_hidden: number of hidden units 52 53 :type W: theano.tensor.TensorType 54 :param W: Theano variable pointing to a set of weights that should be 55 shared belong the dA and another architecture; if dA should 56 be standalone set this to None 57 58 :type bhid: theano.tensor.TensorType 59 :param bhid: Theano variable pointing to a set of biases values (for 60 hidden units) that should be shared belong dA and another 61 architecture; if dA should be standalone set this to None 62 63 :type bvis: theano.tensor.TensorType 64 :param bvis: Theano variable pointing to a set of biases values (for 65 visible units) that should be shared belong dA and another 66 architecture; if dA should be standalone set this to None 67 68 69 """ 70 self.n_visible = n_visible 71 self.n_hidden = n_hidden 72 73 # create a Theano random generator that gives symbolic random values 74 if not theano_rng: 75 theano_rng = RandomStreams(numpy_rng.randint(2 ** 30)) 76 77 # note : W' was written as `W_prime` and b' as `b_prime` 78 if not W: 79 # W is initialized with `initial_W` which is uniformely sampled 80 # from -4*sqrt(6./(n_visible+n_hidden)) and 81 # 4*sqrt(6./(n_hidden+n_visible))the output of uniform if 82 # converted using asarray to dtype 83 # theano.config.floatX so that the code is runable on GPU 84 initial_W = numpy.asarray( 85 numpy_rng.uniform( 86 low=-4 * numpy.sqrt(6. / (n_hidden + n_visible)), 87 high=4 * numpy.sqrt(6. / (n_hidden + n_visible)), 88 size=(n_visible, n_hidden) 89 ), 90 dtype=theano.config.floatX 91 ) 92 W = theano.shared(value=initial_W, name='W', borrow=True) 93 94 if not bvis: 95 bvis = theano.shared( 96 value=numpy.zeros( 97 n_visible, 98 dtype=theano.config.floatX 99 ), 100 borrow=True 101 ) 102 103 if not bhid: 104 bhid = theano.shared( 105 value=numpy.zeros( 106 n_hidden, 107 dtype=theano.config.floatX 108 ), 109 name='b', 110 borrow=True 111 ) 112 113 self.W = W 114 # b corresponds to the bias of the hidden 115 self.b = bhid 116 # b_prime corresponds to the bias of the visible 117 self.b_prime = bvis 118 # tied weights, therefore W_prime is W transpose 119 self.W_prime = self.W.T 120 self.theano_rng = theano_rng 121 # if no input is given, generate a variable representing the input 122 if input is None: 123 # we use a matrix because we expect a minibatch of several 124 # examples, each example being a row 125 self.x = T.dmatrix(name='input') 126 else: 127 self.x = input 128 129 self.params = [self.W, self.b, self.b_prime]

在栈式自动编码器中,前一层的输出将作为后面一层的输入。

现在计算隐层表示和重构信号:

def get_hidden_values(self, input): """ Computes the values of the hidden layer """ return T.nnet.sigmoid(T.dot(input, self.W) + self.b)

def get_reconstructed_input(self, hidden): """Computes the reconstructed input given the values of the hidden layer """ return T.nnet.sigmoid(T.dot(hidden, self.W_prime) + self.b_prime)

利用这些函数计算损失和更新参数:

1 def get_cost_updates(self, corruption_level, learning_rate): 2 """ This function computes the cost and the updates for one trainng 3 step of the dA """ 4 5 tilde_x = self.get_corrupted_input(self.x, corruption_level) 6 y = self.get_hidden_values(tilde_x) 7 z = self.get_reconstructed_input(y) 8 # note : we sum over the size of a datapoint; if we are using 9 # minibatches, L will be a vector, with one entry per 10 # example in minibatch 11 L = - T.sum(self.x * T.log(z) + (1 - self.x) * T.log(1 - z), axis=1) 12 # note : L is now a vector, where each element is the 13 # cross-entropy cost of the reconstruction of the 14 # corresponding example of the minibatch. We need to 15 # compute the average of all these to get the cost of 16 # the minibatch 17 cost = T.mean(L) 18 19 # compute the gradients of the cost of the `dA` with respect 20 # to its parameters 21 gparams = T.grad(cost, self.params) 22 # generate the list of updates 23 updates = [ 24 (param, param - learning_rate * gparam) 25 for param, gparam in zip(self.params, gparams) 26 ] 27 28 return (cost, updates)

现在可以定义一个函数迭代更新参数使得重构误差最小化:

1 da = dA( 2 numpy_rng=rng, 3 theano_rng=theano_rng, 4 input=x, 5 n_visible=28 * 28, 6 n_hidden=500 7 ) 8 9 cost, updates = da.get_cost_updates( 10 corruption_level=0., 11 learning_rate=learning_rate 12 ) 13 14 train_da = theano.function( 15 [index], 16 cost, 17 updates=updates, 18 givens={ 19 x: train_set_x[index * batch_size: (index + 1) * batch_size] 20 } 21 )

如果除了重构误差最小之外没有其他约束,我们自然希望重构的数据与输入完全一样最好,即隐层的编码维度与输入数据维度一样。

然而在[Bengio07] 中的实验表明,在实际中,隐单元数目比输入层单元多(称为超完备)的非线性自动编码器能够产生更加有用的表示(这里”有用“意思是产生更低的分类误差)。

为了获得对连续输入较好的重构,单隐层的自动编码器的非线性单元需要在第一层(编码)时权重较小,这样使得隐单元的非线性数据能够处于激活函数的近似线性范围内,而解码时,需要很大的权重。

对于二元输入,同样需要最小化重构误差。由于显示或者隐式的正则化,使得解码时的权重很难达到一个较大的值,优化算法会找到只适合与训练集相似的样本的编码,这正是我们想要的。

2.降噪自动编码器(DA)

DA的思路很简单:为了使得隐层学习出更加鲁棒的表示,防止简单地学习出一个等价的表示,我们训练一个DA,使得自动编码器能能够从加噪声的的数据中重构出真实的数据。

DA主要做两件事:对输入进行编码和消除噪声的负面影响。

在[Vincent08]中,随机加噪声就是随机把输入中的一些数据置为0,因此自动编码器就是试图通过没有加噪声的数据预测出加噪声的数据。

为了把上面的自动编码器转换成DA,需要加上对输入随机加噪声的操作,加噪声的方式很多,这里只是随机将输入中的部分数据置为0.

下面做法就是生成与输入形状一样的二项分布随机数,然后与输入相乘即可:

1 from theano.tensor.shared_randomstreams import RandomStreams 2 3 def get_corrupted_input(self, input, corruption_level): 4 """ This function keeps ``1-corruption_level`` entries of the inputs the same 5 and zero-out randomly selected subset of size ``coruption_level`` 6 Note : first argument of theano.rng.binomial is the shape(size) of 7 random numbers that it should produce 8 second argument is the number of trials 9 third argument is the probability of success of any trial 10 11 this will produce an array of 0s and 1s where 1 has a probability of 12 1 - ``corruption_level`` and 0 with ``corruption_level`` 13 """ 14 return self.theano_rng.binomial(size=input.shape, n=1, p=1 - corruption_level) * input

最终DA类代码变成下面:

1 class dA(object): 2 """Denoising Auto-Encoder class (dA) 3 4 A denoising autoencoders tries to reconstruct the input from a corrupted 5 version of it by projecting it first in a latent space and reprojecting 6 it afterwards back in the input space. Please refer to Vincent et al.,2008 7 for more details. If x is the input then equation (1) computes a partially 8 destroyed version of x by means of a stochastic mapping q_D. Equation (2) 9 computes the projection of the input into the latent space. Equation (3) 10 computes the reconstruction of the input, while equation (4) computes the 11 reconstruction error. 12 13 .. math:: 14 15 ilde{x} ~ q_D( ilde{x}|x) (1) 16 17 y = s(W ilde{x} + b) (2) 18 19 x = s(W' y + b') (3) 20 21 L(x,z) = -sum_{k=1}^d [x_k log z_k + (1-x_k) log( 1-z_k)] (4) 22 23 """ 24 25 def __init__(self, numpy_rng, theano_rng=None, input=None, n_visible=784, n_hidden=500, 26 W=None, bhid=None, bvis=None): 27 """ 28 Initialize the dA class by specifying the number of visible units (the 29 dimension d of the input ), the number of hidden units ( the dimension 30 d' of the latent or hidden space ) and the corruption level. The 31 constructor also receives symbolic variables for the input, weights and 32 bias. Such a symbolic variables are useful when, for example the input is 33 the result of some computations, or when weights are shared between the 34 dA and an MLP layer. When dealing with SdAs this always happens, 35 the dA on layer 2 gets as input the output of the dA on layer 1, 36 and the weights of the dA are used in the second stage of training 37 to construct an MLP. 38 39 :type numpy_rng: numpy.random.RandomState 40 :param numpy_rng: number random generator used to generate weights 41 42 :type theano_rng: theano.tensor.shared_randomstreams.RandomStreams 43 :param theano_rng: Theano random generator; if None is given one is generated 44 based on a seed drawn from `rng` 45 46 :type input: theano.tensor.TensorType 47 :paran input: a symbolic description of the input or None for standalone 48 dA 49 50 :type n_visible: int 51 :param n_visible: number of visible units 52 53 :type n_hidden: int 54 :param n_hidden: number of hidden units 55 56 :type W: theano.tensor.TensorType 57 :param W: Theano variable pointing to a set of weights that should be 58 shared belong the dA and another architecture; if dA should 59 be standalone set this to None 60 61 :type bhid: theano.tensor.TensorType 62 :param bhid: Theano variable pointing to a set of biases values (for 63 hidden units) that should be shared belong dA and another 64 architecture; if dA should be standalone set this to None 65 66 :type bvis: theano.tensor.TensorType 67 :param bvis: Theano variable pointing to a set of biases values (for 68 visible units) that should be shared belong dA and another 69 architecture; if dA should be standalone set this to None 70 71 72 """ 73 self.n_visible = n_visible 74 self.n_hidden = n_hidden 75 76 # create a Theano random generator that gives symbolic random values 77 if not theano_rng : 78 theano_rng = RandomStreams(rng.randint(2 ** 30)) 79 80 # note : W' was written as `W_prime` and b' as `b_prime` 81 if not W: 82 # W is initialized with `initial_W` which is uniformely sampled 83 # from -4.*sqrt(6./(n_visible+n_hidden)) and 4.*sqrt(6./(n_hidden+n_visible)) 84 # the output of uniform if converted using asarray to dtype 85 # theano.config.floatX so that the code is runable on GPU 86 initial_W = numpy.asarray(numpy_rng.uniform( 87 low=-4 * numpy.sqrt(6. / (n_hidden + n_visible)), 88 high=4 * numpy.sqrt(6. / (n_hidden + n_visible)), 89 size=(n_visible, n_hidden)), dtype=theano.config.floatX) 90 W = theano.shared(value=initial_W, name='W') 91 92 if not bvis: 93 bvis = theano.shared(value = numpy.zeros(n_visible, 94 dtype=theano.config.floatX), name='bvis') 95 96 if not bhid: 97 bhid = theano.shared(value=numpy.zeros(n_hidden, 98 dtype=theano.config.floatX), name='bhid') 99 100 self.W = W 101 # b corresponds to the bias of the hidden 102 self.b = bhid 103 # b_prime corresponds to the bias of the visible 104 self.b_prime = bvis 105 # tied weights, therefore W_prime is W transpose 106 self.W_prime = self.W.T 107 self.theano_rng = theano_rng 108 # if no input is given, generate a variable representing the input 109 if input == None: 110 # we use a matrix because we expect a minibatch of several examples, 111 # each example being a row 112 self.x = T.dmatrix(name='input') 113 else: 114 self.x = input 115 116 self.params = [self.W, self.b, self.b_prime] 117 118 def get_corrupted_input(self, input, corruption_level): 119 """ This function keeps ``1-corruption_level`` entries of the inputs the same 120 and zero-out randomly selected subset of size ``coruption_level`` 121 Note : first argument of theano.rng.binomial is the shape(size) of 122 random numbers that it should produce 123 second argument is the number of trials 124 third argument is the probability of success of any trial 125 126 this will produce an array of 0s and 1s where 1 has a probability of 127 1 - ``corruption_level`` and 0 with ``corruption_level`` 128 """ 129 return self.theano_rng.binomial(size=input.shape, n=1, p=1 - corruption_level) * input 130 131 132 def get_hidden_values(self, input): 133 """ Computes the values of the hidden layer """ 134 return T.nnet.sigmoid(T.dot(input, self.W) + self.b) 135 136 def get_reconstructed_input(self, hidden ): 137 """ Computes the reconstructed input given the values of the hidden layer """ 138 return T.nnet.sigmoid(T.dot(hidden, self.W_prime) + self.b_prime) 139 140 def get_cost_updates(self, corruption_level, learning_rate): 141 """ This function computes the cost and the updates for one trainng 142 step of the dA """ 143 144 tilde_x = self.get_corrupted_input(self.x, corruption_level) 145 y = self.get_hidden_values( tilde_x) 146 z = self.get_reconstructed_input(y) 147 # note : we sum over the size of a datapoint; if we are using minibatches, 148 # L will be a vector, with one entry per example in minibatch 149 L = -T.sum(self.x * T.log(z) + (1 - self.x) * T.log(1 - z), axis=1 ) 150 # note : L is now a vector, where each element is the cross-entropy cost 151 # of the reconstruction of the corresponding example of the 152 # minibatch. We need to compute the average of all these to get 153 # the cost of the minibatch 154 cost = T.mean(L) 155 156 # compute the gradients of the cost of the `dA` with respect 157 # to its parameters 158 gparams = T.grad(cost, self.params) 159 # generate the list of updates 160 updates = [] 161 for param, gparam in zip(self.params, gparams): 162 updates.append((param, param - learning_rate * gparam)) 163 164 return (cost, updates)

3.训练

为了视觉上看出,学习出来的权重到底是什么样子,将使用下面代码:

1 import numpy 2 3 4 def scale_to_unit_interval(ndar, eps=1e-8): 5 """ Scales all values in the ndarray ndar to be between 0 and 1 """ 6 ndar = ndar.copy() 7 ndar -= ndar.min() 8 ndar *= 1.0 / (ndar.max() + eps) 9 return ndar 10 11 12 def tile_raster_images(X, img_shape, tile_shape, tile_spacing=(0, 0), 13 scale_rows_to_unit_interval=True, 14 output_pixel_vals=True): 15 """ 16 Transform an array with one flattened image per row, into an array in 17 which images are reshaped and layed out like tiles on a floor. 18 19 This function is useful for visualizing datasets whose rows are images, 20 and also columns of matrices for transforming those rows 21 (such as the first layer of a neural net). 22 23 :type X: a 2-D ndarray or a tuple of 4 channels, elements of which can 24 be 2-D ndarrays or None; 25 :param X: a 2-D array in which every row is a flattened image. 26 27 :type img_shape: tuple; (height, width) 28 :param img_shape: the original shape of each image 29 30 :type tile_shape: tuple; (rows, cols) 31 :param tile_shape: the number of images to tile (rows, cols) 32 33 :param output_pixel_vals: if output should be pixel values (i.e. int8 34 values) or floats 35 36 :param scale_rows_to_unit_interval: if the values need to be scaled before 37 being plotted to [0,1] or not 38 39 40 :returns: array suitable for viewing as an image. 41 (See:`Image.fromarray`.) 42 :rtype: a 2-d array with same dtype as X. 43 44 """ 45 46 assert len(img_shape) == 2 47 assert len(tile_shape) == 2 48 assert len(tile_spacing) == 2 49 50 # The expression below can be re-written in a more C style as 51 # follows : 52 # 53 # out_shape = [0,0] 54 # out_shape[0] = (img_shape[0]+tile_spacing[0])*tile_shape[0] - 55 # tile_spacing[0] 56 # out_shape[1] = (img_shape[1]+tile_spacing[1])*tile_shape[1] - 57 # tile_spacing[1] 58 out_shape = [ 59 (ishp + tsp) * tshp - tsp 60 for ishp, tshp, tsp in zip(img_shape, tile_shape, tile_spacing) 61 ] 62 63 if isinstance(X, tuple): 64 assert len(X) == 4 65 # Create an output numpy ndarray to store the image 66 if output_pixel_vals: 67 out_array = numpy.zeros((out_shape[0], out_shape[1], 4), 68 dtype='uint8') 69 else: 70 out_array = numpy.zeros((out_shape[0], out_shape[1], 4), 71 dtype=X.dtype) 72 73 #colors default to 0, alpha defaults to 1 (opaque) 74 if output_pixel_vals: 75 channel_defaults = [0, 0, 0, 255] 76 else: 77 channel_defaults = [0., 0., 0., 1.] 78 79 for i in xrange(4): 80 if X[i] is None: 81 # if channel is None, fill it with zeros of the correct 82 # dtype 83 dt = out_array.dtype 84 if output_pixel_vals: 85 dt = 'uint8' 86 out_array[:, :, i] = numpy.zeros( 87 out_shape, 88 dtype=dt 89 ) + channel_defaults[i] 90 else: 91 # use a recurrent call to compute the channel and store it 92 # in the output 93 out_array[:, :, i] = tile_raster_images( 94 X[i], img_shape, tile_shape, tile_spacing, 95 scale_rows_to_unit_interval, output_pixel_vals) 96 return out_array 97 98 else: 99 # if we are dealing with only one channel 100 H, W = img_shape 101 Hs, Ws = tile_spacing 102 103 # generate a matrix to store the output 104 dt = X.dtype 105 if output_pixel_vals: 106 dt = 'uint8' 107 out_array = numpy.zeros(out_shape, dtype=dt) 108 109 for tile_row in xrange(tile_shape[0]): 110 for tile_col in xrange(tile_shape[1]): 111 if tile_row * tile_shape[1] + tile_col < X.shape[0]: 112 this_x = X[tile_row * tile_shape[1] + tile_col] 113 if scale_rows_to_unit_interval: 114 # if we should scale values to be between 0 and 1 115 # do this by calling the `scale_to_unit_interval` 116 # function 117 this_img = scale_to_unit_interval( 118 this_x.reshape(img_shape)) 119 else: 120 this_img = this_x.reshape(img_shape) 121 # add the slice to the corresponding position in the 122 # output array 123 c = 1 124 if output_pixel_vals: 125 c = 255 126 out_array[ 127 tile_row * (H + Hs): tile_row * (H + Hs) + H, 128 tile_col * (W + Ws): tile_col * (W + Ws) + W 129 ] = this_img * c 130 return out_array

所以整个训练代码如下:

""" This tutorial introduces denoising auto-encoders (dA) using Theano. Denoising autoencoders are the building blocks for SdA. They are based on auto-encoders as the ones used in Bengio et al. 2007. An autoencoder takes an input x and first maps it to a hidden representation y = f_{ heta}(x) = s(Wx+b), parameterized by heta={W,b}. The resulting latent representation y is then mapped back to a "reconstructed" vector z in [0,1]^d in input space z = g_{ heta'}(y) = s(W'y + b'). The weight matrix W' can optionally be constrained such that W' = W^T, in which case the autoencoder is said to have tied weights. The network is trained such that to minimize the reconstruction error (the error between x and z). For the denosing autoencoder, during training, first x is corrupted into ilde{x}, where ilde{x} is a partially destroyed version of x by means of a stochastic mapping. Afterwards y is computed as before (using ilde{x}), y = s(W ilde{x} + b) and z as s(W'y + b'). The reconstruction error is now measured between z and the uncorrupted input x, which is computed as the cross-entropy : - sum_{k=1}^d[ x_k log z_k + (1-x_k) log( 1-z_k)] References : - P. Vincent, H. Larochelle, Y. Bengio, P.A. Manzagol: Extracting and Composing Robust Features with Denoising Autoencoders, ICML'08, 1096-1103, 2008 - Y. Bengio, P. Lamblin, D. Popovici, H. Larochelle: Greedy Layer-Wise Training of Deep Networks, Advances in Neural Information Processing Systems 19, 2007 """ import os import sys import time import numpy import theano import theano.tensor as T from theano.tensor.shared_randomstreams import RandomStreams from logistic_sgd import load_data from utils import tile_raster_images try: import PIL.Image as Image except ImportError: import Image # start-snippet-1 class dA(object): """Denoising Auto-Encoder class (dA) A denoising autoencoders tries to reconstruct the input from a corrupted version of it by projecting it first in a latent space and reprojecting it afterwards back in the input space. Please refer to Vincent et al.,2008 for more details. If x is the input then equation (1) computes a partially destroyed version of x by means of a stochastic mapping q_D. Equation (2) computes the projection of the input into the latent space. Equation (3) computes the reconstruction of the input, while equation (4) computes the reconstruction error. .. math:: ilde{x} ~ q_D( ilde{x}|x) (1) y = s(W ilde{x} + b) (2) x = s(W' y + b') (3) L(x,z) = -sum_{k=1}^d [x_k log z_k + (1-x_k) log( 1-z_k)] (4) """ def __init__( self, numpy_rng, theano_rng=None, input=None, n_visible=784, n_hidden=500, W=None, bhid=None, bvis=None ): """ Initialize the dA class by specifying the number of visible units (the dimension d of the input ), the number of hidden units ( the dimension d' of the latent or hidden space ) and the corruption level. The constructor also receives symbolic variables for the input, weights and bias. Such a symbolic variables are useful when, for example the input is the result of some computations, or when weights are shared between the dA and an MLP layer. When dealing with SdAs this always happens, the dA on layer 2 gets as input the output of the dA on layer 1, and the weights of the dA are used in the second stage of training to construct an MLP. :type numpy_rng: numpy.random.RandomState :param numpy_rng: number random generator used to generate weights :type theano_rng: theano.tensor.shared_randomstreams.RandomStreams :param theano_rng: Theano random generator; if None is given one is generated based on a seed drawn from `rng` :type input: theano.tensor.TensorType :param input: a symbolic description of the input or None for standalone dA :type n_visible: int :param n_visible: number of visible units :type n_hidden: int :param n_hidden: number of hidden units :type W: theano.tensor.TensorType :param W: Theano variable pointing to a set of weights that should be shared belong the dA and another architecture; if dA should be standalone set this to None :type bhid: theano.tensor.TensorType :param bhid: Theano variable pointing to a set of biases values (for hidden units) that should be shared belong dA and another architecture; if dA should be standalone set this to None :type bvis: theano.tensor.TensorType :param bvis: Theano variable pointing to a set of biases values (for visible units) that should be shared belong dA and another architecture; if dA should be standalone set this to None """ self.n_visible = n_visible self.n_hidden = n_hidden # create a Theano random generator that gives symbolic random values if not theano_rng: theano_rng = RandomStreams(numpy_rng.randint(2 ** 30)) # note : W' was written as `W_prime` and b' as `b_prime` if not W: # W is initialized with `initial_W` which is uniformely sampled # from -4*sqrt(6./(n_visible+n_hidden)) and # 4*sqrt(6./(n_hidden+n_visible))the output of uniform if # converted using asarray to dtype # theano.config.floatX so that the code is runable on GPU initial_W = numpy.asarray( numpy_rng.uniform( low=-4 * numpy.sqrt(6. / (n_hidden + n_visible)), high=4 * numpy.sqrt(6. / (n_hidden + n_visible)), size=(n_visible, n_hidden) ), dtype=theano.config.floatX ) W = theano.shared(value=initial_W, name='W', borrow=True) if not bvis: bvis = theano.shared( value=numpy.zeros( n_visible, dtype=theano.config.floatX ), borrow=True ) if not bhid: bhid = theano.shared( value=numpy.zeros( n_hidden, dtype=theano.config.floatX ), name='b', borrow=True ) self.W = W # b corresponds to the bias of the hidden self.b = bhid # b_prime corresponds to the bias of the visible self.b_prime = bvis # tied weights, therefore W_prime is W transpose self.W_prime = self.W.T self.theano_rng = theano_rng # if no input is given, generate a variable representing the input if input is None: # we use a matrix because we expect a minibatch of several # examples, each example being a row self.x = T.dmatrix(name='input') else: self.x = input self.params = [self.W, self.b, self.b_prime] # end-snippet-1 def get_corrupted_input(self, input, corruption_level): """This function keeps ``1-corruption_level`` entries of the inputs the same and zero-out randomly selected subset of size ``coruption_level`` Note : first argument of theano.rng.binomial is the shape(size) of random numbers that it should produce second argument is the number of trials third argument is the probability of success of any trial this will produce an array of 0s and 1s where 1 has a probability of 1 - ``corruption_level`` and 0 with ``corruption_level`` The binomial function return int64 data type by default. int64 multiplicated by the input type(floatX) always return float64. To keep all data in floatX when floatX is float32, we set the dtype of the binomial to floatX. As in our case the value of the binomial is always 0 or 1, this don't change the result. This is needed to allow the gpu to work correctly as it only support float32 for now. """ return self.theano_rng.binomial(size=input.shape, n=1, p=1 - corruption_level, dtype=theano.config.floatX) * input def get_hidden_values(self, input): """ Computes the values of the hidden layer """ return T.nnet.sigmoid(T.dot(input, self.W) + self.b) def get_reconstructed_input(self, hidden): """Computes the reconstructed input given the values of the hidden layer """ return T.nnet.sigmoid(T.dot(hidden, self.W_prime) + self.b_prime) def get_cost_updates(self, corruption_level, learning_rate): """ This function computes the cost and the updates for one trainng step of the dA """ tilde_x = self.get_corrupted_input(self.x, corruption_level) y = self.get_hidden_values(tilde_x) z = self.get_reconstructed_input(y) # note : we sum over the size of a datapoint; if we are using # minibatches, L will be a vector, with one entry per # example in minibatch L = - T.sum(self.x * T.log(z) + (1 - self.x) * T.log(1 - z), axis=1) # note : L is now a vector, where each element is the # cross-entropy cost of the reconstruction of the # corresponding example of the minibatch. We need to # compute the average of all these to get the cost of # the minibatch cost = T.mean(L) # compute the gradients of the cost of the `dA` with respect # to its parameters gparams = T.grad(cost, self.params) # generate the list of updates updates = [ (param, param - learning_rate * gparam) for param, gparam in zip(self.params, gparams) ] return (cost, updates) def test_dA(learning_rate=0.1, training_epochs=15, dataset='mnist.pkl.gz', batch_size=20, output_folder='dA_plots'): """ This demo is tested on MNIST :type learning_rate: float :param learning_rate: learning rate used for training the DeNosing AutoEncoder :type training_epochs: int :param training_epochs: number of epochs used for training :type dataset: string :param dataset: path to the picked dataset """ datasets = load_data(dataset) train_set_x, train_set_y = datasets[0] # compute number of minibatches for training, validation and testing n_train_batches = train_set_x.get_value(borrow=True).shape[0] / batch_size # allocate symbolic variables for the data index = T.lscalar() # index to a [mini]batch x = T.matrix('x') # the data is presented as rasterized images if not os.path.isdir(output_folder): os.makedirs(output_folder) os.chdir(output_folder) #################################### # BUILDING THE MODEL NO CORRUPTION # #################################### rng = numpy.random.RandomState(123) theano_rng = RandomStreams(rng.randint(2 ** 30)) da = dA( numpy_rng=rng, theano_rng=theano_rng, input=x, n_visible=28 * 28, n_hidden=500 ) cost, updates = da.get_cost_updates( corruption_level=0., learning_rate=learning_rate ) train_da = theano.function( [index], cost, updates=updates, givens={ x: train_set_x[index * batch_size: (index + 1) * batch_size] } ) start_time = time.clock() ############ # TRAINING # ############ # go through training epochs for epoch in xrange(training_epochs): # go through trainng set c = [] for batch_index in xrange(n_train_batches): c.append(train_da(batch_index)) print 'Training epoch %d, cost ' % epoch, numpy.mean(c) end_time = time.clock() training_time = (end_time - start_time) print >> sys.stderr, ('The no corruption code for file ' + os.path.split(__file__)[1] + ' ran for %.2fm' % ((training_time) / 60.)) image = Image.fromarray( tile_raster_images(X=da.W.get_value(borrow=True).T, img_shape=(28, 28), tile_shape=(10, 10), tile_spacing=(1, 1))) image.save('filters_corruption_0.png') ##################################### # BUILDING THE MODEL CORRUPTION 30% # ##################################### rng = numpy.random.RandomState(123) theano_rng = RandomStreams(rng.randint(2 ** 30)) da = dA( numpy_rng=rng, theano_rng=theano_rng, input=x, n_visible=28 * 28, n_hidden=500 ) cost, updates = da.get_cost_updates( corruption_level=0.3, learning_rate=learning_rate ) train_da = theano.function( [index], cost, updates=updates, givens={ x: train_set_x[index * batch_size: (index + 1) * batch_size] } ) start_time = time.clock() ############ # TRAINING # ############ # go through training epochs for epoch in xrange(training_epochs): # go through trainng set c = [] for batch_index in xrange(n_train_batches): c.append(train_da(batch_index)) print 'Training epoch %d, cost ' % epoch, numpy.mean(c) end_time = time.clock() training_time = (end_time - start_time) print >> sys.stderr, ('The 30% corruption code for file ' + os.path.split(__file__)[1] + ' ran for %.2fm' % (training_time / 60.)) image = Image.fromarray(tile_raster_images( X=da.W.get_value(borrow=True).T, img_shape=(28, 28), tile_shape=(10, 10), tile_spacing=(1, 1))) image.save('filters_corruption_30.png') os.chdir('../') if __name__ == '__main__': test_dA()

... loading data Training epoch 0, cost 63.2891694201 Training epoch 1, cost 55.7866565443 Training epoch 2, cost 54.7631168984 Training epoch 3, cost 54.2420533514 Training epoch 4, cost 53.888670659 Training epoch 5, cost 53.6203505434 Training epoch 6, cost 53.4037459012 Training epoch 7, cost 53.2219976788 Training epoch 8, cost 53.0658010178 Training epoch 9, cost 52.9295596873 Training epoch 10, cost 52.8094163525 Training epoch 11, cost 52.7024367362 Training epoch 12, cost 52.606310148 Training epoch 13, cost 52.5191693641 Training epoch 14, cost 52.4395240004 The no corruption code for file dA.py ran for 10.21m Training epoch 0, cost 81.7714190632 Training epoch 1, cost 73.4285756365 Training epoch 2, cost 70.8632686268 Training epoch 3, cost 69.3396642015 Training epoch 4, cost 68.4134660704 Training epoch 5, cost 67.723705304 Training epoch 6, cost 67.2401360252 Training epoch 7, cost 66.849303071 Training epoch 8, cost 66.5663948395 Training epoch 9, cost 66.3591257941 Training epoch 10, cost 66.1336658308 Training epoch 11, cost 65.9893924612 Training epoch 12, cost 65.8344131768 Training epoch 13, cost 65.7185348901 Training epoch 14, cost 65.6010749532 The 30% corruption code for file dA.py ran for 10.37m

没有加噪声训练出的权重可视化结果:

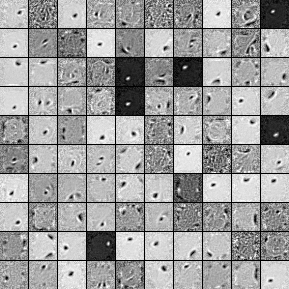

加了30%噪声训练出的权重可视化结果: