1. Read the email dataset file and extract the email text and the labels.

List

NumPy array
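A minimal sketch of this step, assuming a tab-separated file with the label in the first column and the email text in the second. The file layout and the names `read_emails`, `texts` and `labels` are assumptions for illustration; an in-memory sample stands in for the real dataset file.

```python
import csv
import io

def read_emails(handle):
    """Return (texts, labels) from a stream of label<TAB>text rows."""
    texts, labels = [], []
    reader = csv.reader(handle, delimiter="\t", quoting=csv.QUOTE_NONE)
    for row in reader:
        if len(row) < 2:
            continue  # skip blank or malformed rows
        labels.append(row[0])
        texts.append(row[1])
    return texts, labels

# Hypothetical sample standing in for the dataset file.
sample = io.StringIO("ham\tSee you at lunch\nspam\tWin a free prize now\n")
texts, labels = read_emails(sample)
print(labels)
print(texts)
```

Both results are plain lists; if NumPy arrays are preferred, `np.array(labels)` converts them.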

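2. Email preprocessing

- Split each email into sentences

- Tokenize each sentence into words

- Normalize case, strip punctuation, and drop very short words

- Lemmatization: plurals, tenses, comparatives

- Join the words back into a string

2.1 Implementing it with traditional methods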
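One way the steps above might look without any NLP library, using only the standard library. The function name `simple_preprocess` and the `min_len` threshold are assumptions; lemmatization is left out here because it needs a dictionary such as WordNet.

```python
import re
import string

def simple_preprocess(text, min_len=3):
    """Lowercase, strip punctuation, drop short words, rejoin into one string."""
    # Split into sentences on '.', '!' or '?' followed by whitespace.
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    kept = []
    for sentence in sentences:
        for token in sentence.split():
            word = token.lower().strip(string.punctuation)
            if len(word) >= min_len:
                kept.append(word)
    return " ".join(kept)

print(simple_preprocess("Hello there! I am WINNING a prize, OK?"))
# -> hello there winning prize
```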

2.2 Installing and using the nltk library

pip install nltk

import nltk

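nltk.download()  # change the server address to http://www.nltk.org/nltk_data/

or

Download the gh-pages branch from https://github.com/nltk/nltk_data; the Packages folder inside it holds the resources we need.

Rename the Packages folder to nltk_data.

or

Netdisk link: https://pan.baidu.com/s/1iJGCrz4fW3uYpuquB5jbew Extraction code: o5ea

Place it in the user's home directory.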

----------------------------------

Once installation is complete, the following commands show the nltk version:

import nltk

print(nltk.__version__)

2.1 Tokenization with the nltk library

nltk.sent_tokenize(text)  # split the text into sentences

nltk.word_tokenize(sent)  # split a sentence into words

2.2 punkt and stop words

from nltk.corpus import stopwords

stops=stopwords.words('english')

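*If you are prompted to download punkt:

nltk.download('punkt')

or download punkt.zip

https://pan.baidu.com/s/1OwLB0O8fBWkdLx8VJ-9uNQ Password: mema

Copy it to the directory named in the failure message, C:\Users\Administrator\AppData\Roaming\nltk_data\tokenizers, and unzip it there.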
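Once the stop word list is available, filtering tokens against it is a membership test. The sketch below uses a small hand-written set in place of `stopwords.words('english')` so that it runs without the NLTK data; the helper name `remove_stopwords` is an assumption.

```python
# Hand-written subset standing in for stopwords.words('english').
stops = {"the", "a", "is", "to", "and", "of", "in"}

def remove_stopwords(tokens, stops):
    """Keep only tokens whose lowercase form is not a stop word."""
    return [t for t in tokens if t.lower() not in stops]

tokens = ["The", "prize", "is", "waiting", "in", "London"]
print(remove_stopwords(tokens, stops))
# -> ['prize', 'waiting', 'London']
```

Converting the list to a set first keeps each lookup constant-time, which matters when every email is filtered.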

2.3 Part-of-speech tagging with NLTK

nltk.pos_tag(tokens)

2.4 Lemmatisation

from nltk.stem import WordNetLemmatizer

lemmatizer = WordNetLemmatizer()

lemmatizer.lemmatize('leaves')  # pos defaults to noun

lemmatizer.lemmatize('best', pos='a')

lemmatizer.lemmatize('made', pos='v')

In general, tokenize and POS-tag the text first, then lemmatize each word according to its part of speech.
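The tags returned by `nltk.pos_tag` are Penn Treebank tags, while `lemmatize` expects a WordNet POS letter, so a small mapping is needed between the two. The helper below is a sketch; the name `penn_to_wordnet` and the choice to fall back to noun are assumptions.

```python
def penn_to_wordnet(tag):
    """Map a Penn Treebank tag to a WordNet POS letter."""
    if tag.startswith("J"):
        return "a"  # adjective
    if tag.startswith("V"):
        return "v"  # verb
    if tag.startswith("R"):
        return "r"  # adverb
    return "n"      # noun, also the fallback for every other tag

# Tags are written by hand here; in practice they come from nltk.pos_tag(tokens).
tagged = [("leaves", "NNS"), ("best", "JJS"), ("made", "VBN")]
for word, tag in tagged:
    print(word, penn_to_wordnet(tag))
    # With NLTK installed: lemmatizer.lemmatize(word, pos=penn_to_wordnet(tag))
```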

2.5 Writing the preprocessing function

def preprocessing(text):

sms_data.append(preprocessing(line[1]))  # preprocess each email

3. Training and test sets
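A minimal sketch of a shuffled split, assuming the texts and labels are parallel lists. The function name `split_train_test`, the 20% test ratio and the fixed seed are assumptions chosen for illustration.

```python
import random

def split_train_test(texts, labels, test_ratio=0.2, seed=0):
    """Shuffle indices once, then cut them into test and train parts."""
    indices = list(range(len(texts)))
    random.Random(seed).shuffle(indices)
    n_test = int(len(indices) * test_ratio)
    test_idx, train_idx = indices[:n_test], indices[n_test:]
    x_train = [texts[i] for i in train_idx]
    x_test = [texts[i] for i in test_idx]
    y_train = [labels[i] for i in train_idx]
    y_test = [labels[i] for i in test_idx]
    return x_train, x_test, y_train, y_test

# Hypothetical data: ten emails with alternating labels.
texts = ["mail %d" % i for i in range(10)]
labels = [i % 2 for i in range(10)]
x_train, x_test, y_train, y_test = split_train_test(texts, labels)
print(len(x_train), len(x_test))
# -> 8 2
```

Shuffling indices rather than the lists themselves keeps each text paired with its label.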

4. Word vectors

5. Model

Code:

import numpy as np

def loadDataSet():
    postingList = [
        ['my','dog','has','flea','problems','help','please'],
        ['maybe','not','take','him','to','dog','park','stupid'],
        ['my','dalmation','is','so','cute','I','love','him'],
        ['stop','posting','stupid','worthless','garbage'],
        ['mr','licks','ate','my','steak','how','to','stop','him'],
        ['quit','buying','worthless','dog','food','stupid']
    ]
    classVec = [0,1,0,1,0,1]  # 1: abusive text, 0: normal speech
    return postingList, classVec

def createVocabList(dataSet):
    vocabSet = set()  # start with an empty set
    for document in dataSet:
        vocabSet = vocabSet | set(document)  # union of the two sets, which removes duplicates
    return list(vocabSet)

def setOfWords2Vec(vocabList, inputSet):  # build a simple 0/1 word vector for one document
    returnVec = [0] * len(vocabList)
    for word in inputSet:
        if word in vocabList:
            returnVec[vocabList.index(word)] = 1
        else:
            print("the word: %s is not in my Vocabulary!" % word)
    return returnVec

# Training function
def trainNB0(trainMatrix, trainCategory):
    # trainMatrix: document matrix of 0/1 word indicators
    # trainCategory: vector of class labels
    numTrainDocs = len(trainMatrix)
    numWords = len(trainMatrix[0])
    pAbusive = sum(trainCategory) / float(numTrainDocs)  # P(c1)
    p0Num = np.zeros(numWords)
    p1Num = np.zeros(numWords)
    p0Denom = 0.0
    p1Denom = 0.0
    # len(trainMatrix) == len(trainCategory)
    for i in range(numTrainDocs):
        if trainCategory[i] == 1:
            p1Num += trainMatrix[i]
            p1Denom += sum(trainMatrix[i])
        else:
            p0Num += trainMatrix[i]
            p0Denom += sum(trainMatrix[i])
    p0Vect = p0Num / p0Denom  # P(w_i|c0) in vector form
    p1Vect = p1Num / p1Denom  # P(w_i|c1) in vector form
    return p0Vect, p1Vect, pAbusive

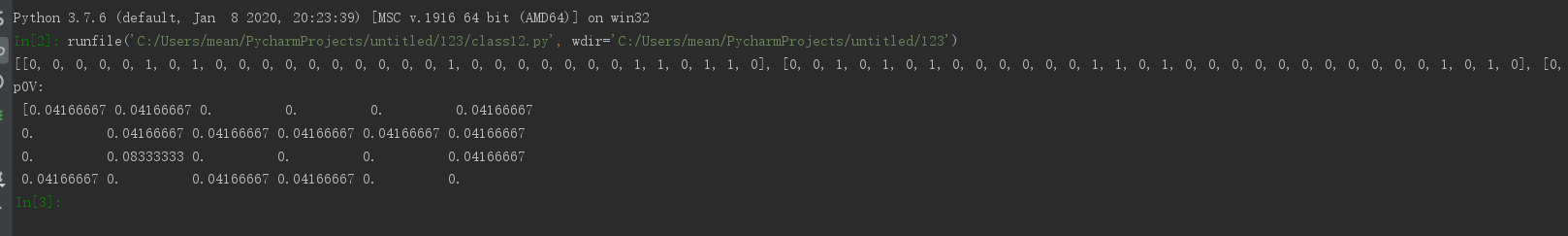

listPosts, listClasses = loadDataSet()
myVocabList = createVocabList(listPosts)
trainMat = []
for postinDoc in listPosts:
    trainMat.append(setOfWords2Vec(myVocabList, postinDoc))
print(trainMat)
p0V, p1V, pAb = trainNB0(trainMat, listClasses)
print("p0V:\n", p0V)
print("p1V:\n", p1V)
print("pAb:\n", pAb)
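The training function above only estimates probabilities. A classification step could look like the pure-Python sketch below, which is not the original code: it swaps in add-one (Laplace) smoothing and log probabilities so that unseen words do not zero out a class and long products do not underflow. The names `train_nb` and `classify_nb` are assumptions.

```python
import math

def train_nb(docs, labels):
    """Return (vocab, per-class log-likelihoods, log-priors) with add-one smoothing."""
    vocab = sorted({w for doc in docs for w in doc})
    log_prior, log_like = {}, {}
    for c in sorted(set(labels)):
        class_docs = [set(d) for d, y in zip(docs, labels) if y == c]
        log_prior[c] = math.log(len(class_docs) / len(docs))
        counts = {w: 1 for w in vocab}  # every word starts at 1 (smoothing)
        for d in class_docs:
            for w in d:                 # set-of-words: once per document
                counts[w] += 1
        total = sum(counts.values())
        log_like[c] = {w: math.log(counts[w] / total) for w in vocab}
    return vocab, log_like, log_prior

def classify_nb(words, vocab, log_like, log_prior):
    """Pick the class with the highest log-posterior; unknown words are ignored."""
    known = set(words) & set(vocab)
    scores = {c: log_prior[c] + sum(log_like[c][w] for w in known)
              for c in log_prior}
    return max(scores, key=scores.get)

posts = [
    ['my', 'dog', 'has', 'flea', 'problems', 'help', 'please'],
    ['maybe', 'not', 'take', 'him', 'to', 'dog', 'park', 'stupid'],
    ['my', 'dalmation', 'is', 'so', 'cute', 'I', 'love', 'him'],
    ['stop', 'posting', 'stupid', 'worthless', 'garbage'],
    ['mr', 'licks', 'ate', 'my', 'steak', 'how', 'to', 'stop', 'him'],
    ['quit', 'buying', 'worthless', 'dog', 'food', 'stupid'],
]
classes = [0, 1, 0, 1, 0, 1]  # 1: abusive text, 0: normal speech

vocab, log_like, log_prior = train_nb(posts, classes)
print(classify_nb(['love', 'my', 'dalmation'], vocab, log_like, log_prior))  # -> 0
print(classify_nb(['stupid', 'garbage'], vocab, log_like, log_prior))        # -> 1
```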

Output: