1. What is Hive

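Hive is a data warehouse infrastructure built on top of Hadoop. It provides a set of tools for extract-transform-load (ETL) work and a mechanism for storing, querying, and analyzing large-scale data kept in Hadoop. Hive defines a simple SQL-like query language called QL, which lets users who know SQL query the data. The language also lets developers who know MapReduce plug in custom mappers and reducers for complex analysis that the built-in mappers and reducers cannot handle.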

Hive is a SQL parsing engine: it translates SQL statements into M/R jobs and runs them on Hadoop.

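A Hive table is really just an HDFS directory and its files, with one folder per table name. For a partitioned table, each partition value is a subfolder, and M/R jobs can read this data directly.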
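The table-to-directory mapping can be sketched in a few lines of Python. This is only an illustration: the warehouse root `/user/hive/warehouse` is assumed to be the default location, and the helper names `table_dir` and `partition_dir` are made up for this example rather than taken from Hive.

```python
from posixpath import join

# Assumed default warehouse root; a real cluster may configure another path.
WAREHOUSE = "/user/hive/warehouse"


def table_dir(table):
    """Directory that holds the files of a table in the default database."""
    return join(WAREHOUSE, table)


def partition_dir(table, partitions):
    """Each partition column adds one key=value subdirectory under the table."""
    parts = ["%s=%s" % (key, value) for key, value in partitions]
    return join(table_dir(table), *parts)


if __name__ == "__main__":
    print(table_dir("trade_detail"))
    print(partition_dir("book", [("pubdate", "2010-08-22")]))
```

An M/R job that wants a single partition only has to read the files under that one subdirectory.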

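Hive needs metadata at runtime (the mapping from the real data to the logical tables), and it stores that metadata in a relational database. The Derby database bundled with Hive is not a good fit here (in embedded mode it allows only one session at a time), so we use MySQL.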

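Put bluntly, Hive exists so that people can be lazy: instead of writing MapReduce programs you write SQL statements and let Hive turn them into MapReduce programs.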

2. Installing and configuring Hive

Hive only needs to be installed on one node.

2.1 Upload the tar package

2.2 Extract it

tar -zxvf hive-0.9.0.tar.gz -C /cloud/

2.3 Configure the MySQL metastore (switch to the root user)

Set the HIVE_HOME environment variable.

List any previously installed MySQL packages:

rpm -qa | grep mysql

Force-remove the conflicting package, then install the MySQL server and client:

rpm -e mysql-libs-5.1.66-2.el6_3.i686 --nodeps

rpm -ivh MySQL-server-5.1.73-1.glibc23.i386.rpm

rpm -ivh MySQL-client-5.1.73-1.glibc23.i386.rpm

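Run the setup script to configure MySQL:

/usr/bin/mysql_secure_installation

(Note: remove the anonymous users and allow remote connections.)

Log in to MySQL:

mysql -u root -p

Note: if the machine has internet access, you can simply run sudo yum install mysql-server.

For more on installing MySQL, see: http://www.cnblogs.com/DarrenChan/p/6518304.html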

2.4 Configure Hive

cp hive-default.xml.template hive-site.xml

Edit hive-site.xml

Change the following four properties:

<configuration>

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://weekend01:3306/hive?createDatabaseIfNotExist=true</value>

<description>JDBC connect string for a JDBC metastore</description>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

<description>Driver class name for a JDBC metastore</description>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>root</value>

<description>username to use against metastore database</description>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>123456</value>

<description>password to use against metastore database</description>

</property>

</configuration>
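If you would rather generate a minimal hive-site.xml than edit the copied template by hand, the four properties above can be written out with Python's standard library. This is a sketch, not part of the original procedure: the function name `build_hive_site` is made up, and the values are just the ones used in this walkthrough.

```python
import xml.etree.ElementTree as ET

# The four metastore settings from this walkthrough (host, user and
# password are the example values used above, not recommendations).
METASTORE_PROPS = {
    "javax.jdo.option.ConnectionURL":
        "jdbc:mysql://weekend01:3306/hive?createDatabaseIfNotExist=true",
    "javax.jdo.option.ConnectionDriverName": "com.mysql.jdbc.Driver",
    "javax.jdo.option.ConnectionUserName": "root",
    "javax.jdo.option.ConnectionPassword": "123456",
}


def build_hive_site(props):
    """Return a <configuration> document with one <property> per setting."""
    root = ET.Element("configuration")
    for name, value in props.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    print(build_hive_site(METASTORE_PROPS))
```

Building the file through an XML library rather than string concatenation means special characters in a value (for example `&` in a longer JDBC URL) are escaped automatically.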

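2.5 Once Hive and MySQL are installed, copy the MySQL connector JAR into $HIVE_HOME/lib

If you hit a permissions problem, grant access in MySQL (run this on the machine where MySQL is installed):

mysql -uroot -p

# (Run the statements below. *.* means all tables in all databases; % means any IP address or host may connect.)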

GRANT ALL PRIVILEGES ON *.* TO 'root'@'%' IDENTIFIED BY '123456' WITH GRANT OPTION;

FLUSH PRIVILEGES;

If the jline versions conflict, copy jline-2.12.jar from Hive's lib directory and use it to replace the copy in Hadoop:

/home/hadoop/app/hadoop-2.4.1/share/hadoop/yarn/lib/jline-0.9.94.jar

2.6 Create tables (internal tables by default)

Go to bin/ and start Hive with ./hive.

create table trade_detail(id bigint, account string, income double, expenses double, time string) row format delimited fields terminated by ' ';

Create a partitioned table:

create table td_part(id bigint, account string, income double, expenses double, time string) partitioned by (logdate string) row format delimited fields terminated by ' ';

Create an external table:

create external table td_ext(id bigint, account string, income double, expenses double, time string) row format delimited fields terminated by ' ' location '/td_ext';

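2.7 Creating partitioned tables

Regular table or partitioned table: create a partitioned table when large amounts of data keep being added.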

create table book (id bigint, name string) partitioned by (pubdate string) row format delimited fields terminated by ' ';

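Loading data into a partitioned table (when the data is already on HDFS, omit local; if the table is an internal table, loading from HDFS moves the files into Hive's directory for that table):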

load data local inpath './book.txt' overwrite into table book partition (pubdate='2010-08-22');

load data local inpath '/root/data.am' into table beauties partition (nation="USA");
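The difference between loading with and without local can be mimicked on an ordinary filesystem. The sketch below is a toy model, not Hive code: `load_data` is a made-up helper, a local directory stands in for the table's HDFS directory, and it assumes the target directory contains only plain files.

```python
import os
import shutil


def load_data(src, table_dir, local, overwrite=False):
    """Toy model of LOAD DATA: LOCAL copies the file, otherwise it is moved."""
    os.makedirs(table_dir, exist_ok=True)
    if overwrite:
        # OVERWRITE replaces whatever the table or partition held before.
        for name in os.listdir(table_dir):
            os.remove(os.path.join(table_dir, name))
    dest = os.path.join(table_dir, os.path.basename(src))
    if local:
        shutil.copy(src, dest)   # the source file stays where it was
    else:
        shutil.move(src, dest)   # the source file is "cut" into the table
    return dest
```

After a non-local load the source path no longer exists, which is the "cut" behaviour mentioned above for internal tables.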

select nation, avg(size) from beauties group by nation order by avg(size);
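For readers new to SQL aggregation, here is roughly what that group by / avg / order by query computes, written as plain Python. The rows in the usage line are invented purely for illustration, and `avg_by_key` is a hypothetical helper name.

```python
from collections import defaultdict


def avg_by_key(rows):
    """Group (key, value) rows by key, average the values, sort by average."""
    sums = defaultdict(lambda: [0.0, 0])   # key -> [running total, row count]
    for key, value in rows:
        sums[key][0] += value
        sums[key][1] += 1
    averages = [(key, total / count) for key, (total, count) in sums.items()]
    return sorted(averages, key=lambda pair: pair[1])


if __name__ == "__main__":
    # Invented sample rows: (nation, size)
    print(avg_by_key([("USA", 34.0), ("China", 32.0), ("USA", 36.0)]))
```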

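3. Problems encountered during installation

Using the database bundled with Hive caused no real trouble, but connecting Hive to MySQL produced a string of baffling errors. After trying many fixes found online I finally got it working. Here is a summary:

Problem 1: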

FAILED: Error in metadata: java.lang.RuntimeException: Unable to instantiate org.apache.hadoop.hive.metastore.HiveMetaStoreClient

FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.DDLTask

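First, the things to check while troubleshooting:

1. Is Hadoop fully started?

Use the jps command to check that all the expected Hadoop processes are running.

2. Is Hadoop safe mode turned off? (get reports the current state; leave turns safe mode off)

hadoop dfsadmin -safemode enter | leave | get | wait

3. Is MySQL installed correctly?

Log in to mysql and run show databases; as a test.

4. Is Hive installed correctly?

Before changing the metastore database (while it is still Derby), check that Hive itself works:

show tables;

5. Are the required JARs in place?

Check that the matching mysql-connector-java-*-bin.jar is in the hive/lib directory, then restart Hive.

6. Was hive-site.xml edited correctly?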
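The first check in the list above (reading jps output) is easy to automate. The sketch below parses text in the "pid name" form that jps prints and reports which daemons are missing. The expected set is an assumption for a single-node Hadoop 2 setup, so adjust it to the roles each of your nodes actually runs; `missing_daemons` is a made-up helper name.

```python
# Assumed daemon set for a single-node Hadoop 2 install; adjust per node role.
EXPECTED = {"NameNode", "DataNode", "ResourceManager", "NodeManager"}


def missing_daemons(jps_output, expected=EXPECTED):
    """Return the expected process names that do not appear in jps output."""
    running = set()
    for line in jps_output.splitlines():
        fields = line.split()
        if len(fields) >= 2:          # each line looks like "<pid> <name>"
            running.add(fields[1])
    return sorted(set(expected) - running)


if __name__ == "__main__":
    sample = "2481 NameNode\n2570 DataNode\n3012 Jps\n"
    print(missing_daemons(sample))
```

On a real node the input would come from running jps, for example through `subprocess.run(["jps"], capture_output=True, text=True).stdout`.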

If none of the above solves the problem, start Hive with the following command to print debug logs to the console (this works well in practice):

./hive -hiveconf hive.root.logger=DEBUG,console

hive> show tables;

One common error looks like this (different problems produce different logs, of course):

Caused by: javax.jdo.JDOFatalDataStoreException: Access denied for user 'root'@'weekend01' (using password: YES)

NestedThrowables:

java.sql.SQLException: Access denied for user 'root'@'weekend01' (using password: YES)...

This happens because the privileges were not granted correctly when the MySQL account for Hive was set up: 'root'@'weekend01' lacks the required privileges.

Fix:

GRANT ALL PRIVILEGES ON *.* TO 'root'@'weekend01' IDENTIFIED BY '123456'; -- the last value is the password

flush privileges;

After running these two statements, restart Hive and run show tables again to test.
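The user and host that need the grant can be read straight out of the error message. The sketch below pulls them out with a regular expression and builds the same style of GRANT statement used above. `grant_for` is a hypothetical helper, and the password is whatever you pass in; nothing here talks to MySQL.

```python
import re

# Tolerates the missing space in "user'root'" seen in some log output.
ACCESS_DENIED = re.compile(r"Access denied for user\s*'([^']+)'@'([^']+)'")


def grant_for(error_text, password):
    """Build a GRANT statement for the account named in an access-denied error."""
    match = ACCESS_DENIED.search(error_text)
    if match is None:
        return None
    user, host = match.groups()
    return ("GRANT ALL PRIVILEGES ON *.* TO '%s'@'%s' IDENTIFIED BY '%s';"
            % (user, host, password))


if __name__ == "__main__":
    log_line = ("java.sql.SQLException: Access denied for user "
                "'root'@'weekend01' (using password: YES)")
    print(grant_for(log_line, "123456"))
```

The statement is only printed; you would still paste it into the mysql client and follow it with flush privileges;.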

Problem 2:

Testing again produced a new error:

version information not found

Caused by: MetaException(message:Version information not found in metastore. )

Fix:

1. Check that mysql-connector-java-5.1.26-bin.jar is in the hive/lib directory. Setting its permissions to 777 is also suggested (chmod 777 mysql-connector-java-5.1.26-bin.jar).

2. In conf/hive-site.xml, set "hive.metastore.schema.verification" to false. This resolves "Caused by: MetaException(message:Version information not found in metastore. )".

4. The Hive Thrift service

How to start it (assuming it runs on weekend110):

Run in the foreground: bin/hiveserver2

Run in the background: nohup bin/hiveserver2 1>/var/log/hiveserver.log 2>/var/log/hiveserver.err &

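Once it is running, you can connect from another node with beeline.

Option (1)

Run hive/bin/beeline and press Enter to get the beeline prompt.

Then connect to hiveserver2:

beeline> !connect jdbc:hive2://weekend110:10000

(weekend110 is the hostname of the machine running hiveserver2; the default port is 10000.)

Option (2)

Or connect at startup: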

bin/beeline -u jdbc:hive2://weekend110:10000 -n hadoop

After that you can run SQL queries as usual.
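If beeline cannot connect, a quick first step is to check whether the hiveserver2 port is reachable from the client node at all. The sketch below uses only Python's standard socket module. It checks TCP reachability and nothing more, so a True result does not prove that hiveserver2 is healthy; `port_open` is a made-up helper name.

```python
import socket


def port_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    # Hostname and port taken from the beeline examples above.
    print(port_open("weekend110", 10000))
```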

5. A simple test

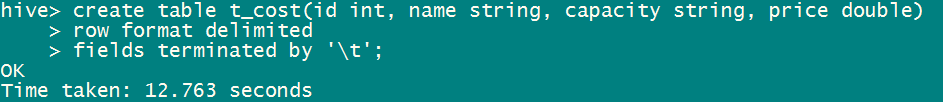

First we run the create table statement:

create table t_cost(id int, name string, capacity string, price double)

row format delimited

fields terminated by ' ';

Then load data in the matching format (the data is on the local filesystem, so local is required; besides into, you can also write overwrite into):

load data local inpath '/home/hadoop/hivedata/cost.data' into table t_cost;

The contents of cost.data:

10001 iPhone7 64G 5888

10002 SamsungNote7 64G 5888

10001 HuaweiMate9 64G 4999

10002 MiMix 64G 3999

10001 Nexus6 64G 5888

10002 MeizuMx5 64G 2888

10001 LGG6 64G 4888

10002 SonyZ6 64G 5888
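To see how each line of cost.data lines up with the t_cost columns, here is a small Python sketch that splits a line on the delimiter and casts each field to the declared type. It is a simplified model of what the delimited row format does, not Hive's actual reader. `SCHEMA`, `cast_or_null` and `parse_line` are names invented for this example, and the None-on-failure behaviour mirrors Hive returning NULL for a field that does not fit its column type.

```python
# Column names and types mirror the t_cost table definition above.
SCHEMA = [("id", int), ("name", str), ("capacity", str), ("price", float)]


def cast_or_null(cast, raw):
    """Cast a raw field; an unparseable value becomes None (like a NULL)."""
    try:
        return cast(raw)
    except ValueError:
        return None


def parse_line(line, sep=" "):
    """Split one delimited line and cast each field to its column type."""
    fields = line.rstrip("\n").split(sep)
    return tuple(cast_or_null(cast, raw)
                 for (_, cast), raw in zip(SCHEMA, fields))


if __name__ == "__main__":
    print(parse_line("10001 iPhone7 64G 5888"))
```

The price column is declared double, which is why a value written as 5888 in the file comes back as 5888.0 when queried.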

Run a query:

hive> select * from t_cost;

OK

10001 iPhone7 64G 5888.0

10002 SamsungNote7 64G 5888.0

10001 HuaweiMate9 64G 4999.0

10002 MiMix 64G 3999.0

10001 Nexus6 64G 5888.0

10002 MeizuMx5 64G 2888.0

10001 LGG6 64G 4888.0

10002 SonyZ6 64G 5888.0

Time taken: 2.22 seconds, Fetched: 8 row(s)

Count the rows (this launches a MapReduce job; in fact, apart from select *, most queries run MapReduce):

hive> select count(*) from t_cost;

Total MapReduce jobs = 1

Launching Job 1 out of 1

Number of reduce tasks determined at compile time: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapred.reduce.tasks=<number>

Starting Job = job_1489463215771_0001, Tracking URL = http://weekend03:8088/proxy/application_1489463215771_0001/

Kill Command = /home/hadoop/app/hadoop-2.4.1/bin/hadoop job -kill job_1489463215771_0001

Hadoop job information for Stage-1: number of mappers: 1; number of reducers: 1

2017-03-14 01:07:46,096 Stage-1 map = 0%, reduce = 0%

2017-03-14 01:08:11,953 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 5.48 sec

2017-03-14 01:08:12,985 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 5.48 sec

2017-03-14 01:08:14,018 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 5.48 sec

2017-03-14 01:08:15,053 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 5.48 sec

2017-03-14 01:08:16,084 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 5.48 sec

2017-03-14 01:08:17,123 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 5.48 sec

2017-03-14 01:08:18,155 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 5.48 sec

2017-03-14 01:08:19,195 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 5.48 sec

2017-03-14 01:08:20,222 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 5.48 sec

2017-03-14 01:08:21,256 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 5.48 sec

2017-03-14 01:08:22,291 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 5.48 sec

2017-03-14 01:08:23,320 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 5.48 sec

2017-03-14 01:08:24,351 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 5.48 sec

2017-03-14 01:08:25,385 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 5.48 sec

2017-03-14 01:08:26,420 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 5.48 sec

2017-03-14 01:08:27,453 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 5.48 sec

2017-03-14 01:08:28,487 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 5.48 sec

2017-03-14 01:08:29,518 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 5.48 sec

2017-03-14 01:08:30,545 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 5.48 sec

2017-03-14 01:08:31,583 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 5.48 sec

2017-03-14 01:08:32,613 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 5.48 sec

2017-03-14 01:08:33,644 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 5.48 sec

2017-03-14 01:08:34,675 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 5.48 sec

2017-03-14 01:08:35,710 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 5.48 sec

2017-03-14 01:08:36,738 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 5.48 sec

2017-03-14 01:08:37,778 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 5.48 sec

2017-03-14 01:08:38,805 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 5.48 sec

2017-03-14 01:08:39,834 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 5.48 sec

2017-03-14 01:08:40,862 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 5.48 sec

2017-03-14 01:08:41,890 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 5.48 sec

2017-03-14 01:08:42,925 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 5.48 sec

2017-03-14 01:08:43,953 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 5.48 sec

2017-03-14 01:08:44,987 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 5.48 sec

2017-03-14 01:08:46,053 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 5.48 sec

2017-03-14 01:08:47,084 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 5.48 sec

2017-03-14 01:08:48,116 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 5.48 sec

2017-03-14 01:08:49,152 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 5.48 sec

2017-03-14 01:08:50,186 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 7.29 sec

2017-03-14 01:08:51,245 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 7.29 sec

2017-03-14 01:08:52,291 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 7.29 sec

MapReduce Total cumulative CPU time: 7 seconds 290 msec

Ended Job = job_1489463215771_0001

MapReduce Jobs Launched:

Job 0: Map: 1 Reduce: 1 Cumulative CPU: 7.29 sec HDFS Read: 388 HDFS Write: 2 SUCCESS

Total MapReduce CPU Time Spent: 7 seconds 290 msec

OK

8

Time taken: 127.847 seconds, Fetched: 1 row(s)
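Conceptually, the count(*) job above has a map step that emits a 1 for every row and a reduce step that adds the ones up. The sketch below shows that idea in plain Python. It is a teaching model only: the plan Hive really generates is more involved, and `map_phase` and `reduce_phase` are made-up names.

```python
def map_phase(rows):
    """Emit one ("count", 1) pair per input row."""
    for _ in rows:
        yield ("count", 1)


def reduce_phase(pairs):
    """Sum the values for each key."""
    totals = {}
    for key, value in pairs:
        totals[key] = totals.get(key, 0) + value
    return totals


if __name__ == "__main__":
    rows = ["row %d" % i for i in range(8)]   # stand-in for the 8 table rows
    print(reduce_phase(map_phase(rows)))
```

A plain select * needs neither step because Hive can simply read the files back, which fits the observation above that it returned without launching a job.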

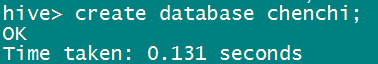

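Next, we create a new database:

Then we create another table in that database:

Having created all of this, I was curious how it is stored in HDFS.

From the screenshots above we can see that the default database lives under /user/hive/warehouse, because our table t_cost is there. The chenchi database we created is in there too: creating a database just creates a folder under that directory, and that folder holds the tables you create in it. A table is likewise just a directory, and loading data into a table simply uploads files into that directory. Even if you upload correctly formatted data into the directory by the most basic means, queries will still return it.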
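The "a table is just a directory" idea can be tried out on a local filesystem. In the sketch below, a table scan means reading every file found in the table's directory, so a file dropped in by any means shows up in the results. This is a toy model with a local directory standing in for HDFS, and `scan_table` is a made-up helper name.

```python
import os


def scan_table(table_dir, sep=" "):
    """Read every file in the table directory and return all rows."""
    rows = []
    for name in sorted(os.listdir(table_dir)):
        path = os.path.join(table_dir, name)
        if os.path.isfile(path):
            with open(path) as handle:
                for line in handle:
                    if line.strip():
                        rows.append(line.rstrip("\n").split(sep))
    return rows
```

Copying a second data file into the directory and calling `scan_table` again returns the rows of both files, without any load statement in between.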

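That raises the next question: how exactly does Hive integrate with MySQL, and how are tables and databases recorded in MySQL? Opening the hive database in a MySQL client shows many tables, and the relationships among them answer the question.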
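As a rough picture of those relationships, the sketch below rebuilds a heavily simplified subset of the metastore in an in-memory SQLite database. The table and column names (DBS, TBLS, SDS) follow the metastore schema as I understand it, but most columns are omitted, and the inserted rows and path-only locations are illustrative assumptions rather than a dump of a real metastore.

```python
import sqlite3


def build_demo_metastore():
    """Create a tiny in-memory stand-in for three metastore tables."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE DBS  (DB_ID INTEGER PRIMARY KEY, NAME TEXT,
                           DB_LOCATION_URI TEXT);
        CREATE TABLE SDS  (SD_ID INTEGER PRIMARY KEY, LOCATION TEXT);
        CREATE TABLE TBLS (TBL_ID INTEGER PRIMARY KEY, DB_ID INTEGER,
                           SD_ID INTEGER, TBL_NAME TEXT, TBL_TYPE TEXT);
        -- Illustrative rows only.
        INSERT INTO DBS  VALUES (1, 'default', '/user/hive/warehouse');
        INSERT INTO SDS  VALUES (1, '/user/hive/warehouse/t_cost');
        INSERT INTO TBLS VALUES (1, 1, 1, 't_cost', 'MANAGED_TABLE');
    """)
    return conn


def table_location(conn, database, table):
    """Follow TBLS -> DBS and TBLS -> SDS to find where a table's data lives."""
    row = conn.execute(
        "SELECT s.LOCATION FROM TBLS t "
        "JOIN DBS d ON t.DB_ID = d.DB_ID "
        "JOIN SDS s ON t.SD_ID = s.SD_ID "
        "WHERE d.NAME = ? AND t.TBL_NAME = ?",
        (database, table),
    ).fetchone()
    return row[0] if row else None


if __name__ == "__main__":
    print(table_location(build_demo_metastore(), "default", "t_cost"))
```

The point of the model is that MySQL holds only names, types and locations, while the rows themselves stay in the HDFS directory that the location points to.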