Everybody has had the experience of not recognising someone they know: changes in pose, illumination and expression all make the task tricky. So it’s not surprising that computer vision systems have similar problems. Indeed, no computer vision system matches human performance in difficult conditions, despite years of work by computer scientists all over the world.

That’s not to say that face recognition systems are poor. Far from it. The best systems can beat human performance in ideal conditions. But their performance drops dramatically as conditions get worse. So computer scientists would dearly love to develop an algorithm that can take the crown in the most challenging conditions too.

Today, Chaochao Lu and Xiaoou Tang at the Chinese University of Hong Kong say they’ve done just that. These guys have developed a face recognition algorithm called GaussianFace that outperforms humans for the first time.

The new system could finally make human-level face verification available in applications ranging from smart phone and computer game log-ons to security and passport control.

The first task in any programme of automated face verification is to build a decent dataset to test the algorithm with. That requires images of a wide variety of faces with complex variations in pose, lighting and expression as well as race, ethnicity, age and gender. Then there is clothing, hair styles, make up and so on.

As luck would have it, there is just such a dataset, known as the Labeled Faces in the Wild (LFW) benchmark. This consists of over 13,000 images of the faces of almost 6,000 public figures collected off the web. Crucially, many of these people appear in more than one image, so the database can supply pairs of different photos of the same person.

There are various other databases, but Labeled Faces in the Wild is well known amongst computer scientists as a challenging benchmark.

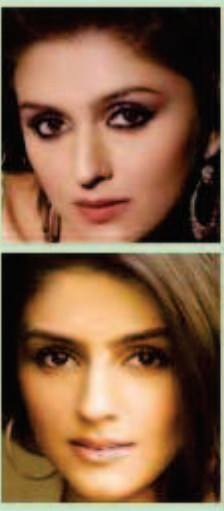

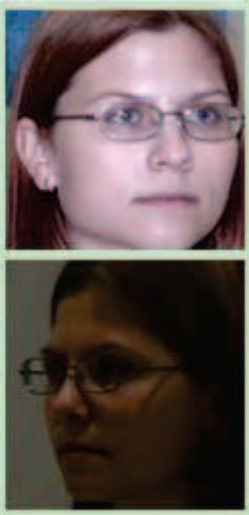

The task in face verification is to compare two images and determine whether they show the same person. (Try identifying which of the image pairs shown here are correct matches.)

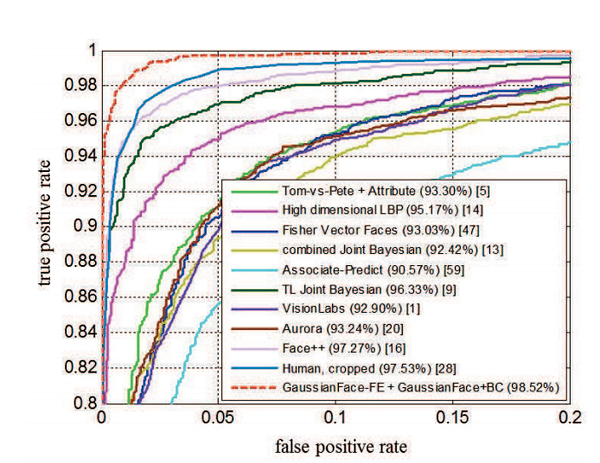

Humans can do this with an accuracy of 97.53 per cent on this database. But no algorithm has come close to matching this performance.

Until now. The new algorithm works by normalising each face into a 150 x 120 pixel image, transforming it so that five image landmarks line up: the positions of both eyes, the nose and the two corners of the mouth.
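
To make the normalisation step concrete, here is a minimal sketch of landmark-based alignment. It is not the authors' code. It assumes a similarity transform (rotation, uniform scaling and shift), assumes the image is 120 pixels wide and 150 tall, and uses a made-up `TEMPLATE` of target landmark positions; none of these details come from the article. Points are treated as complex numbers so that the least-squares fit has a simple closed form.

```python
# Hypothetical target positions (x, y) of the five landmarks in a
# 120-wide by 150-tall image: left eye, right eye, nose, mouth corners.
TEMPLATE = [(40.0, 55.0), (80.0, 55.0), (60.0, 80.0), (45.0, 105.0), (75.0, 105.0)]


def fit_similarity(src, dst):
    """Least-squares similarity transform taking src points onto dst points.

    Each point (x, y) is treated as the complex number x + iy, so the
    transform is w = a * z + b, where a encodes rotation and scale and
    b encodes the shift.
    """
    zs = [complex(x, y) for x, y in src]
    ws = [complex(x, y) for x, y in dst]
    n = len(zs)
    z_mean = sum(zs) / n
    w_mean = sum(ws) / n
    numerator = sum((w - w_mean) * (z - z_mean).conjugate() for z, w in zip(zs, ws))
    denominator = sum(abs(z - z_mean) ** 2 for z in zs)
    a = numerator / denominator
    b = w_mean - a * z_mean
    return a, b


def apply_similarity(a, b, point):
    """Map a single (x, y) point through the fitted transform."""
    w = a * complex(*point) + b
    return (w.real, w.imag)
```

Given five detected landmarks in a photo, `fit_similarity(detected, TEMPLATE)` returns the transform that best moves them onto the template. Applying the same transform to every pixel coordinate, with interpolation, would produce the normalised face image.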

It then divides each image into overlapping patches of 25 x 25 pixels and describes each patch using a mathematical object known as a vector which captures its basic features. Having done that, the algorithm is ready to compare the images looking for similarities.
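
The patch step can be sketched in a few lines. The article gives the patch size (25 x 25) but not how far apart the patches are, so the `stride` of 5 pixels below is an assumption. The descriptor is also a stand-in: a plain histogram of grey levels, chosen only because it is easy to read, not because it is what GaussianFace uses.

```python
def patch_origins(height, width, patch=25, stride=5):
    """Top-left corners (row, col) of overlapping square patches.

    Only patches that fit entirely inside the image are returned.
    The stride is an assumed value, not one given in the article.
    """
    rows = range(0, height - patch + 1, stride)
    cols = range(0, width - patch + 1, stride)
    return [(r, c) for r in rows for c in cols]


def histogram_descriptor(image, r, c, patch=25, bins=8):
    """Stand-in descriptor: normalised histogram of grey levels (0..255).

    `image` is a list of rows, each a list of integer pixel values.
    """
    counts = [0] * bins
    for row in image[r:r + patch]:
        for value in row[c:c + patch]:
            counts[value * bins // 256] += 1
    total = patch * patch
    return [n / total for n in counts]


def describe_face(image, patch=25, stride=5):
    """One descriptor vector per patch, in row-major patch order."""
    height, width = len(image), len(image[0])
    return [
        histogram_descriptor(image, r, c, patch)
        for r, c in patch_origins(height, width, patch, stride)
    ]
```

Because the stride is smaller than the patch size, neighbouring patches share most of their pixels, which is what "overlapping" means here. A smaller stride gives more patches and a longer description of each face.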

But first it needs to know what to look for. This is where the training data set comes in. The usual approach is to use a single dataset to train the algorithm and to use a sample of images from the same dataset to test the algorithm on.

But when the algorithm is faced with images that are entirely different from the training set, it often fails. “When the [image] distribution changes, these methods may suffer a large performance drop,” say Lu and Tang.

Instead, they’ve trained GaussianFace on four entirely different datasets with very different images. For example, one of these datasets is known as the Multi-PIE database and consists of face images of 337 subjects from 15 different viewpoints under 19 different conditions of illumination taken in four photo sessions. Another is a database called Life Photos which contains about 10 images of each of 400 different people.

Having trained the algorithm on these datasets, they finally let it loose on the Labeled Faces in the Wild database. The goal is to identify matched pairs and to spot mismatched pairs too.
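
Scoring a verification test of this kind is simple to sketch. The example below assumes each face has already been turned into a feature vector. It uses cosine similarity with a fixed `threshold` as a stand-in decision rule; that rule and the threshold value are illustrative assumptions, not the GaussianFace model, which the article does not describe in detail.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two feature vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def verify(u, v, threshold=0.8):
    """Declare 'same person' when the similarity reaches the threshold."""
    return cosine_similarity(u, v) >= threshold


def verification_accuracy(pairs, labels, threshold=0.8):
    """Fraction of pairs judged correctly.

    `pairs` is a list of (vector, vector) tuples and `labels` holds True
    for matched pairs and False for mismatched ones.
    """
    correct = sum(
        verify(u, v, threshold) == same for (u, v), same in zip(pairs, labels)
    )
    return correct / len(labels)
```

Both kinds of mistake count against the score: calling two different people a match, and failing to match two photos of the same person.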

Remember that humans can do this with an accuracy of 97.53 per cent. “Our GaussianFace model can improve the accuracy to 98.52%, which for the first time beats the human-level performance,” say Lu and Tang.
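
A gap of about one percentage point can look small, so it helps to turn the two accuracies into error rates. The short calculation below uses only the two figures quoted above; the function names are just for illustration.

```python
human_accuracy = 97.53          # per cent, as quoted in the article
gaussianface_accuracy = 98.52   # per cent, as quoted in the article


def error_rate(accuracy_percent):
    """Percentage of pairs judged wrongly."""
    return 100.0 - accuracy_percent


def relative_error_reduction(baseline_accuracy, new_accuracy):
    """Share of the baseline's errors that the new method removes."""
    baseline_error = error_rate(baseline_accuracy)
    new_error = error_rate(new_accuracy)
    return (baseline_error - new_error) / baseline_error


reduction = relative_error_reduction(human_accuracy, gaussianface_accuracy)
print(f"Relative reduction in error rate: {reduction:.1%}")
```

On these figures the error rate falls from 2.47 per cent to 1.48 per cent, so the algorithm makes roughly 40 per cent fewer mistakes than the human benchmark.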

That’s an impressive result because of the wide variety of extreme conditions in these photos.

Lu and Tang point out that there are many challenges ahead, however. Humans can use all kinds of additional cues to do this task, such as neck and shoulder configuration. “Surpassing the human-level performance may only be symbolically significant,” they say.

Other problems are the time it takes to train the new algorithm, the amount of memory it requires and the running time needed to identify matches. Some of that can be tackled by parallelising the algorithm and using a number of bespoke computer processing techniques.

Nevertheless, accurate automated face recognition is coming and, on this evidence, sooner rather than later.

Ref: arxiv.org/abs/1404.3840 : Surpassing Human-Level Face Verification Performance on LFW with GaussianFace


from: https://medium.com/the-physics-arxiv-blog/the-face-recognition-algorithm-that-finally-outperforms-humans-2c567adbf7fc#.nvqi6691o