1. Mean Squared Error (MSE)

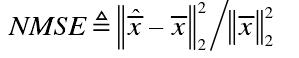

Normalized mean squared error (NMSE)

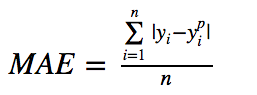
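NMSE is defined in more than one way in practice, so the following is only a minimal sketch under a stated assumption: the sum of squared errors is divided by the sum of squared deviations of the true values from their mean. The formula in the image above may use a different normalizer, and the function name `nmse` is illustrative.

```python
import numpy as np

def nmse(true, pred):
    # Assumed definition: sum of squared errors divided by the sum of
    # squared deviations of the true values from their mean.
    true = np.asarray(true, dtype=float)
    pred = np.asarray(pred, dtype=float)
    return np.sum((true - pred) ** 2) / np.sum((true - true.mean()) ** 2)

print(nmse([0, 1, 2, 3, 4], [0, 0, 1, 5, -11]))
```

Under this definition, a model that always predicts the mean of the true values scores exactly 1, so values below 1 indicate an improvement over that baseline.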

2. Mean Absolute Error (MAE)

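```python
import numpy as np

# scikit-learn provides equivalent functions
from sklearn.metrics import mean_squared_error
from sklearn.metrics import mean_absolute_error

# true: array of true target values
# pred: array of predicted values

def mse(true, pred):
    # Mean of the squared errors
    return np.mean((np.asarray(true) - np.asarray(pred)) ** 2)

def mae(true, pred):
    # Mean of the absolute errors
    return np.mean(np.abs(np.asarray(true) - np.asarray(pred)))
```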

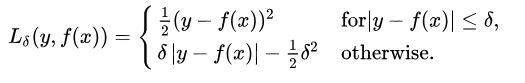

3. Huber loss

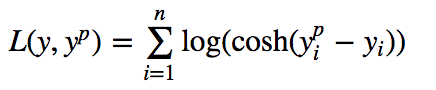
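To make the two regimes of the Huber loss concrete, the sketch below evaluates the per-sample loss for one small and one large error, using the same piecewise rule as the `huber` function in this article. The helper name `huber_per_sample` and the sample errors are illustrative.

```python
import numpy as np

def huber_per_sample(error, delta):
    # Quadratic for |error| < delta, linear beyond it.
    error = np.asarray(error, dtype=float)
    return np.where(np.abs(error) < delta,
                    0.5 * error ** 2,
                    delta * np.abs(error) - 0.5 * delta ** 2)

errors = np.array([0.5, 15.0])
print(huber_per_sample(errors, delta=1.0))  # Huber loss per sample
print(0.5 * errors ** 2)                    # half squared error, for comparison
```

For the small error the two rows agree. For the large error the Huber loss grows linearly instead of quadratically, which is what limits the influence of outliers.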

4. Log-Cosh loss

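```python
# Huber loss, averaged over the samples
def huber(true, pred, delta):
    error = np.asarray(true) - np.asarray(pred)
    loss = np.where(np.abs(error) < delta,
                    0.5 * error ** 2,
                    delta * np.abs(error) - 0.5 * delta ** 2)
    return np.mean(loss)

# Log-Cosh loss, averaged over the samples
def logcosh(true, pred):
    error = np.asarray(pred) - np.asarray(true)
    return np.mean(np.log(np.cosh(error)))
```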
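One practical caveat: `np.cosh` overflows in float64 once the absolute error exceeds roughly 710, so `np.log(np.cosh(x))` returns `inf`. A possible workaround, sketched here rather than taken from the original code, uses the identity log(cosh(x)) = |x| + log(1 + exp(-2|x|)) - log(2). The name `logcosh_stable` is illustrative.

```python
import numpy as np

def logcosh_stable(true, pred):
    # log(cosh(x)) = |x| + log(1 + exp(-2|x|)) - log(2); this form never
    # evaluates cosh(x) directly, so large errors do not overflow.
    x = np.abs(np.asarray(pred, dtype=float) - np.asarray(true, dtype=float))
    return np.mean(x + np.log1p(np.exp(-2.0 * x)) - np.log(2.0))

print(logcosh_stable([0, 1, 2, 3, 4], [0, 0, 1, 5, -11]))
print(logcosh_stable([0.0], [1000.0]))  # stays finite
```

For moderate errors this agrees with the direct `np.log(np.cosh(...))` form up to floating-point rounding.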

5. Example

```python
import math
import numpy as np

true = [0, 1, 2, 3, 4]
pred = [0, 0, 1, 5, -11]

# RMSE via sklearn (stored under a new name so the mse() function is not overwritten)
mse_sk = mean_squared_error(true, pred)
print("RMSE: ", math.sqrt(mse_sk))

# RMSE via the hand-written mse(), accumulated sample by sample
loss = 0
for i, j in zip(true, pred):
    loss += mse(i, j)
rmse_loss = math.sqrt(loss / len(true))
print("RMSE: ", rmse_loss)

# MAE via sklearn (again under a new name so mae() stays callable)
mae_sk = mean_absolute_error(true, pred)
print("MAE: ", mae_sk)

# MAE via the hand-written mae()
loss = 0
for i, j in zip(true, pred):
    loss += mae(i, j)
mae_loss = loss / len(true)
print("MAE: ", mae_loss)

# Huber with delta = 1
loss = 0
for i, j in zip(true, pred):
    loss += huber(i, j, 1)
loss = loss / len(true)
print("Huber: ", loss)

# Log-Cosh
loss = 0
for i, j in zip(true, pred):
    loss += logcosh(i, j)
loss = loss / len(true)
print("Log-Cosh: ", loss)
```

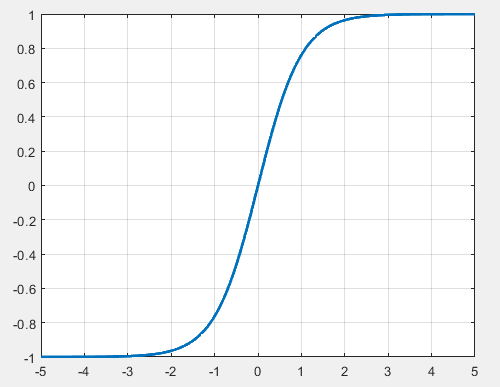
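The per-sample loops in the example can also be written as array operations. The following is a sketch under the assumption that every loss is averaged over the samples and that `delta = 1`, as in the example. The variable names ending in `_val` are illustrative.

```python
import numpy as np

true = np.array([0, 1, 2, 3, 4], dtype=float)
pred = np.array([0, 0, 1, 5, -11], dtype=float)
error = true - pred
delta = 1.0

# Each metric is a mean over the per-sample losses
rmse_val = np.sqrt(np.mean(error ** 2))
mae_val = np.mean(np.abs(error))
huber_val = np.mean(np.where(np.abs(error) < delta,
                             0.5 * error ** 2,
                             delta * np.abs(error) - 0.5 * delta ** 2))
logcosh_val = np.mean(np.log(np.cosh(error)))

print("RMSE: ", rmse_val)
print("MAE: ", mae_val)
print("Huber: ", huber_val)
print("Log-Cosh: ", logcosh_val)
```

On this data the single large error (4 versus -11) pushes RMSE to about 6.80, while MAE (3.8), Huber (3.4) and Log-Cosh (about 3.30) are affected far less.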

6. tanh

In Python, call np.tanh() directly to compute it.
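As a quick check, the sketch below compares `np.tanh` with the definition (e^x - e^-x) / (e^x + e^-x). It also compares it with a finite-difference derivative of log(cosh(x)), since tanh is the derivative of log(cosh(x)) and therefore gives the gradient of the Log-Cosh loss with respect to the error. The sample points and step size `h` are arbitrary choices.

```python
import numpy as np

x = np.array([-2.0, 0.0, 0.5, 2.0])

# tanh computed from its definition
tanh_manual = (np.exp(x) - np.exp(-x)) / (np.exp(x) + np.exp(-x))
print(np.tanh(x))
print(np.allclose(np.tanh(x), tanh_manual))

# Central finite difference of log(cosh(x)), compared with tanh(x)
h = 1e-6
numeric_grad = (np.log(np.cosh(x + h)) - np.log(np.cosh(x - h))) / (2 * h)
print(np.allclose(numeric_grad, np.tanh(x), atol=1e-6))
```

Because tanh is bounded between -1 and 1, the Log-Cosh gradient stays bounded even for very large errors.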