Fiddler抓包工具

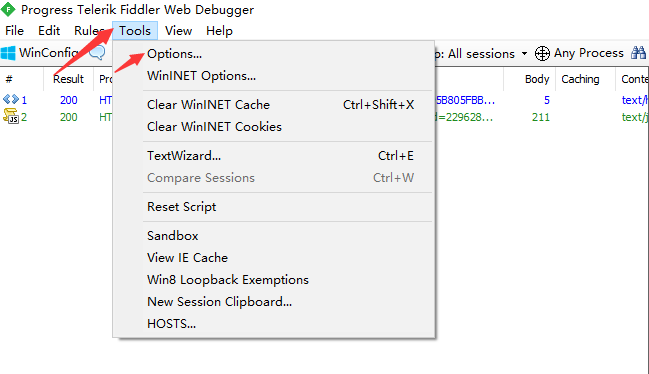

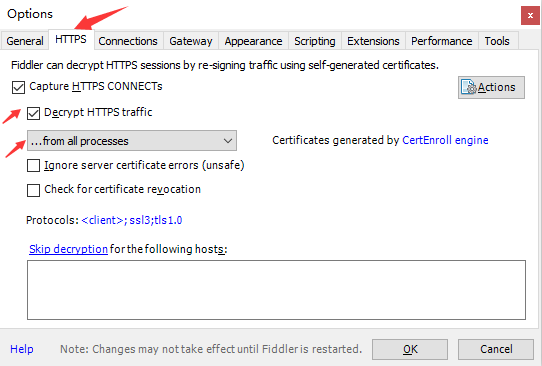

配置Fiddler

- 添加证书信任,Tools - Options - HTTPS,勾选 Decrypt Https Traffic 后弹出窗口,一路确认

- ...from browsers only 设置只抓取浏览器的数据包

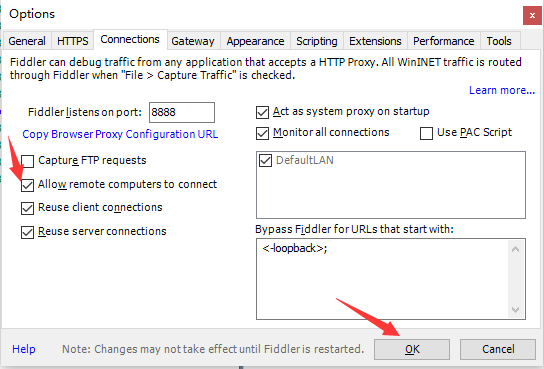

- Tools - Options - Connections,设置监听端口(默认为8888)

- 关闭Fiddler,再打开Fiddler,配置完成后重启Fiddler(重要)

配置浏览器代理

1、安装Proxy SwitchyOmega插件

2、浏览器右上角:SwitchyOmega->选项->新建情景模式->AID1901(名字)->创建

输入 :HTTP:// 127.0.0.1 8888

点击 :应用选项

3、点击右上角SwitchyOmega可切换代理

Fiddler常用菜单

1、Inspector :查看数据包详细内容

整体分为请求和响应两部分

2、常用菜单

Headers :请求头信息

WebForms: POST请求Form表单数据 :<body>

GET请求查询参数: <QueryString>

Raw 将整个请求显示为纯文本

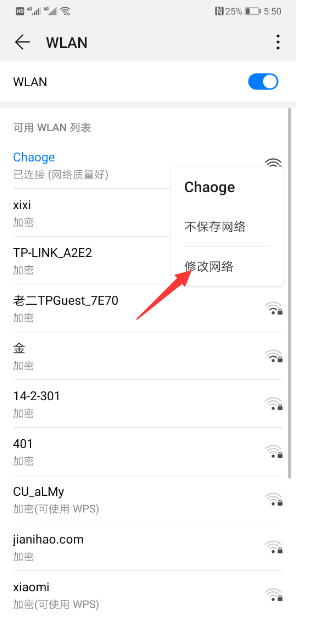

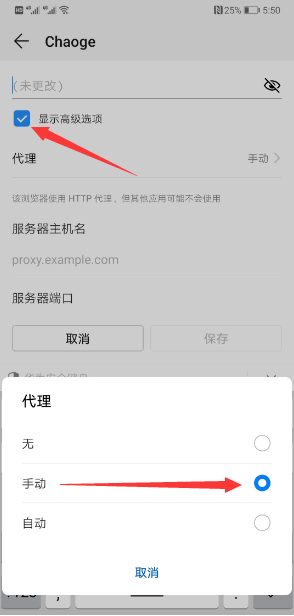

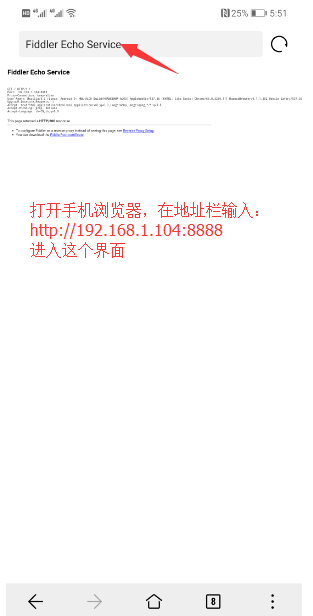

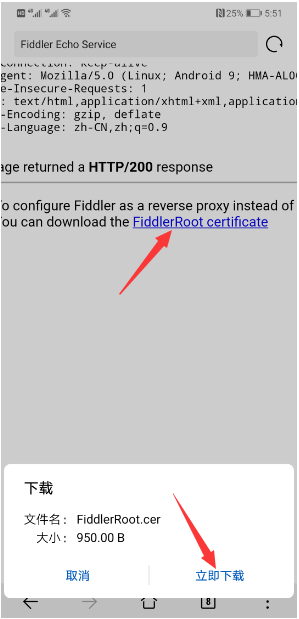

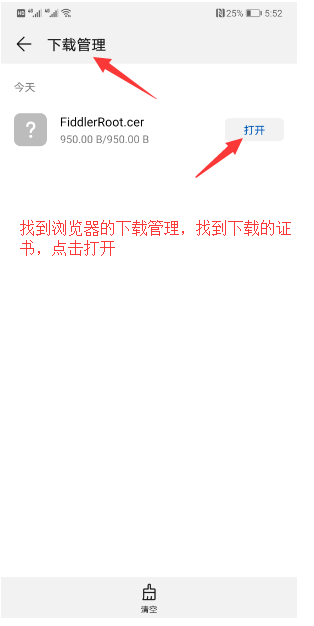

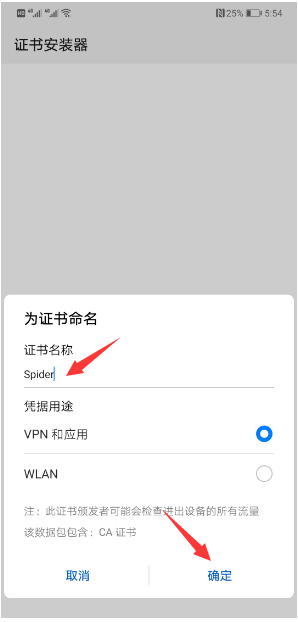

手机设置

正常版

1、设置手机

2、设置Fiddler

最后重启Fiddler。

如果遇到问题

在计算机中win+R,输入regedit打开注册表,找到fiddler,右键新建点击OWORD(64位)值Q,

名称:80;类型:REG_DWORD;数据:0x00000000(0)

Fiddler在上面的设置下,加上:菜单栏Rules-->Customize Rules...-->重启Fiddler

移动端app数据抓取

有道翻译手机版破解案例

import requests from lxml import etree word = input('请输入要翻译的单词:') url = 'http://m.youdao.com/translate' data = { 'inputtext': word, 'type': 'AUTO', } html = requests.post(url,data=data).text parse_html = etree.HTML(html) result = parse_html.xpath('//ul[@id="translateResult"]/li/text()')[0] print(result)

途牛旅游

目标:完成途牛旅游爬取系统,输入出发地、目的地,输入时间,抓取热门景点信息及相关评论

地址

1、地址: http://www.tuniu.com/

2、热门 - 搜索

3、选择: 相关目的地、出发城市、出游时间(出发时间和结束时间)点击确定

4、示例地址如下:

http://s.tuniu.com/search_complex/whole-sh-0-%E7%83%AD%E9%97%A8/list-a{触发时间}_{结束时间}-{出发城市}-{相关目的地}/

项目实现

1、创建项目

scrapy startproject Tuniu

cd Tuniu

scrapy genspider tuniu tuniu.com

2、定义要抓取的数据结构 - items.py

# 一级页面 # 标题 + 链接 + 价格 + 满意度 + 出游人数 + 点评人数 + 推荐景点 + 供应商 title = scrapy.Field() link = scrapy.Field() price = scrapy.Field() satisfaction = scrapy.Field() travelNum = scrapy.Field() reviewNum = scrapy.Field() recommended = scrapy.Field() supplier = scrapy.Field() # 二级页面 # 优惠券 + 产品评论 coupons = scrapy.Field() cp_comments = scrapy.Field()

3、爬虫文件数据分析与提取

页面地址分析

http://s.tuniu.com/search_complex/whole-sh-0-热门/list-a20190828_20190930-l200-m3922/

# 分析

list-a{出发时间_结束时间-出发城市-相关目的地}/

# 如何解决?

提取 出发城市及目的地城市的字典,key为城市名称,value为其对应的编码

# 提取字典,定义config.py存放

代码实现

# -*- coding: utf-8 -*- import scrapy from ..config import * from ..items import TuniuItem import json class TuniuSpider(scrapy.Spider): name = 'tuniu' allowed_domains = ['tuniu.com'] def start_requests(self): s_city = input('出发城市:') d_city = input('相关目的地:') start_time = input('出发时间(20190828):') end_time = input('结束时间(例如20190830):') s_city = src_citys[s_city] d_city = dst_citys[d_city] url = 'http://s.tuniu.com/search_complex/whole-sh-0-%E7%83%AD%E9%97%A8/list-a{}_{}-{}-{}'.format(start_time,end_time,s_city, d_city) yield scrapy.Request(url, callback=self.parse) def parse(self, response): # 提取所有景点的li节点信息列表 items = response.xpath('//ul[@class="thebox clearfix"]/li') for item in items: # 此处是否应该在for循环内创建? tuniuItem = TuniuItem() # 景点标题 + 链接 + 价格 tuniuItem['title'] = item.xpath('.//span[@class="main-tit"]/@name').get() tuniuItem['link'] = 'http:' + item.xpath('./div/a/@href').get() tuniuItem['price'] = int(item.xpath('.//div[@class="tnPrice"]/em/text()').get()) # 判断是否为新产品 isnews = item.xpath('.//div[@class="new-pro"]').extract() if not len(isnews): # 满意度 + 出游人数 + 点评人数 tuniuItem['satisfaction'] = item.xpath('.//div[@class="comment-satNum"]//i/text()').get() tuniuItem['travelNum'] = item.xpath('.//p[@class="person-num"]/i/text()').get() tuniuItem['reviewNum'] = item.xpath('.//p[@class="person-comment"]/i/text()').get() else: tuniuItem['satisfaction'] = '新产品' tuniuItem['travelNum'] = '新产品' tuniuItem['reviewNum'] = '新产品' # 包含景点+供应商 tuniuItem['recommended'] = item.xpath('.//span[@class="overview-scenery"]/text()').extract() tuniuItem['supplier'] = item.xpath('.//span[@class="brand"]/span/text()').extract() yield scrapy.Request(tuniuItem['link'], callback=self.item_info, meta={'item': tuniuItem}) # 解析二级页面 def item_info(self, response): tuniuItem = response.meta['item'] # 优惠信息 coupons = ','.join(response.xpath('//div[@class="detail-favor-coupon-desc"]/@title').extract()) tuniuItem['coupons'] = coupons # 想办法获取评论的地址 # 产品点评 + 酒店点评 + 景点点评 productId = response.url.split('/')[-1] # 产品点评 cpdp_url = 'http://www.tuniu.com/papi/tour/comment/product?productId={}'.format(productId) yield scrapy.Request(cpdp_url, callback=self.cpdp_func, meta={'item': tuniuItem}) # 解析产品点评 def cpdp_func(self, response): tuniuItem = response.meta['item'] html = json.loads(response.text) comment = {} for s in html['data']['list']: comment[s['realName']] = s['content'] tuniuItem['cp_comments'] = comment yield tuniuItem

4、管道文件处理 pipelines.py

print(dict(item))

5、设置settings.py

获取出发城市和目的地城市的编号

tools.py

# 出发城市 # 基准xpath表达式 //*//*[@id="niuren_list"]/div[2]/div[1]/div[2]/div[1]/div/div[1]/dl/dd/ul/li[contains(@class,"filter_input")]/a name : ./text() code : ./@href [0].split('/')[-1].split('-')[-1] # 目的地城市 # 基准xpath表达式 //*[@id="niuren_list"]/div[2]/div[1]/div[2]/div[1]/div/div[3]/dl/dd/ul/li[contains(@class,"filter_input")]/a name : ./text() code : ./@href [0].split('/')[-1].split('-')[-1]

代码实现

import requests from lxml import etree url = 'http://s.tuniu.com/search_complex/whole-sh-0-%E7%83%AD%E9%97%A8/' headers = {'User-Agent':'Mozilla/5.0'} html = requests.get(url,headers=headers).text parse_html = etree.HTML(html) # 获取出发地字典 # 基准xpath li_list = parse_html.xpath('//*[@id="niuren_list"]/div[2]/div[1]/div[2]/div[1]/div/div[3]/dl/dd/ul/li[contains(@class,"filter_input")]/a') src_citys = {} dst_citys = {} for li in li_list: city_name_list = li.xpath('./text()') city_code_list = li.xpath('./@href') if city_name_list and city_code_list: city_name = city_name_list[0].strip() city_code = city_code_list[0].split('/')[-1].split('-')[-1] src_citys[city_name] = city_code print(src_citys) # 获取目的地字典 li_list = parse_html.xpath('//*[@id="niuren_list"]/div[2]/div[1]/div[2]/div[1]/div/div[1]/dl/dd/ul/li[contains(@class,"filter_input")]/a') for li in li_list: city_name_list = li.xpath('./text()') city_code_list = li.xpath('./@href') if city_name_list and city_code_list: city_name = city_name_list[0].strip() city_code = city_code_list[0].split('/')[-1].split('-')[-1] dst_citys[city_name] = city_code print(dst_citys)