Spark is tied to Hadoop, so before installing Spark, pick a Spark build that matches the Hadoop version already installed. Otherwise you will hit an error such as “Server IPC version X cannot communicate with client version Y”.

I have Hadoop 2.4.0 installed, so I chose spark-1.2.0-bin-hadoop2.4.tgz. Note that Spark and Scala also have version compatibility issues; see the problem I recorded in another post of mine.
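As a rough illustration of the matching rule, the sketch below compares the Hadoop line embedded in a prebuilt Spark package name with an installed Hadoop version. The function names are hypothetical helpers invented for this example, and matching on the file name is only a heuristic, not an official compatibility check.

```shell
# Hypothetical helper: extract the Hadoop line a prebuilt Spark package targets,
# e.g. spark-1.2.0-bin-hadoop2.4.tgz -> 2.4
spark_pkg_hadoop_line() {
  printf '%s\n' "$1" | sed -n 's/.*-bin-hadoop\([0-9][0-9]*\.[0-9][0-9]*\).*/\1/p'
}

# Hypothetical helper: reduce a full Hadoop version to major.minor, e.g. 2.4.0 -> 2.4
hadoop_major_minor() {
  printf '%s\n' "$1" | cut -d. -f1,2
}

# Succeeds when the package name and the Hadoop version agree on major.minor.
versions_match() {
  [ "$(spark_pkg_hadoop_line "$1")" = "$(hadoop_major_minor "$2")" ]
}

# The versions used in this post.
if versions_match "spark-1.2.0-bin-hadoop2.4.tgz" "2.4.0"; then
  echo "match"
else
  echo "mismatch"
fi
```

The installed Hadoop version can be read from the first line printed by `hadoop version` and passed as the second argument.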

Official documentation: http://spark.apache.org/docs/latest/spark-standalone.html#cluster-launch-scripts

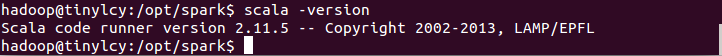

Spark depends on Scala, so Scala must be installed first. I downloaded scala-2.11.5.tgz. Set the Scala environment variables:

export SCALA_HOME=/opt/scala/scala-2.11.5

export PATH=$PATH:$SCALA_HOME/bin

After editing, reload the environment variables and check the Scala version.
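One way to confirm the exports took effect is to test whether the Scala bin directory is actually on PATH. This is a minimal sketch; `path_contains` is a hypothetical helper, and the fallback path is simply the value exported above.

```shell
# Hypothetical helper: succeed if directory $2 is an entry of the
# colon-separated list $1.
path_contains() {
  case ":$1:" in
    *":$2:"*) return 0 ;;
    *) return 1 ;;
  esac
}

# Fall back to the path used in this post if SCALA_HOME is not set yet.
SCALA_HOME=${SCALA_HOME:-/opt/scala/scala-2.11.5}

if path_contains "$PATH" "$SCALA_HOME/bin"; then
  echo "scala bin is on PATH"
else
  echo "scala bin is missing from PATH; reload the file that holds the exports"
fi
```

The post does not say which file holds the exports; `~/.bashrc` and `/etc/profile` are common choices. After running `source` on that file, `scala -version` should report the installed version.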

Then set the Spark environment variables:

export SPARK_HOME=/opt/spark

export PATH=$PATH:$SPARK_HOME/bin

Reload the environment variables again so the change takes effect.

In the ${SPARK_HOME}/conf directory, do the following:

cp spark-env.sh.template spark-env.sh

Edit spark-env.sh and append the following at the end of the file (adjust the paths to your own setup):

export SCALA_HOME=/opt/scala/scala-2.11.5

export SPARK_MASTER_IP=127.0.0.1

export SPARK_WORKER_MEMORY=2G

export JAVA_HOME=/usr/lib/jvm/jdk1.7.0_75

export HADOOP_HOME=/usr/local/hadoop

export HADOOP_CONF_DIR=/usr/local/hadoop/etc/hadoop

Configuration is complete.
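Since the paths in spark-env.sh depend on the local layout, a quick check that each one exists can catch typos before the first start. This is a sketch only; `missing_dirs` is a hypothetical helper, and the paths are the ones from this post.

```shell
# Hypothetical helper: print every argument that is not an existing directory
# and return non-zero if at least one is missing.
missing_dirs() {
  status=0
  for dir in "$@"; do
    if [ ! -d "$dir" ]; then
      echo "missing: $dir"
      status=1
    fi
  done
  return "$status"
}

# Paths copied from the spark-env.sh lines above; adjust to the local layout.
missing_dirs \
  /opt/scala/scala-2.11.5 \
  /usr/lib/jvm/jdk1.7.0_75 \
  /usr/local/hadoop \
  /usr/local/hadoop/etc/hadoop || echo "fix spark-env.sh before starting Spark"
```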

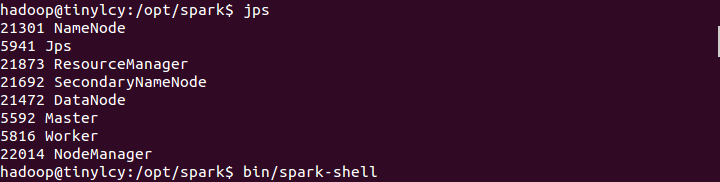

Before starting Spark, start Hadoop:

hadoop@tinylcy:/usr/local/hadoop$ sbin/start-all.sh

Then start Spark:

hadoop@tinylcy:/opt/spark$ sbin/start-all.sh
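To confirm that both start scripts brought their daemons up, the JDK's `jps` tool lists running Java processes by name. The sketch below filters that listing for a set of expected names; `missing_daemons` is a hypothetical helper, and the names checked here (NameNode and DataNode for HDFS, Master and Worker for Spark standalone) are an assumption about a single-node setup like the one in this post.

```shell
# Hypothetical helper: read `jps` output ("<pid> <name>" per line) on stdin and
# print each expected daemon name that does not appear in it.
missing_daemons() {
  names=$(awk '{ print $2 }')
  for d in "$@"; do
    printf '%s\n' "$names" | grep -qx "$d" || echo "$d"
  done
}

# Only runs when jps is available; prints nothing if every daemon is up.
if command -v jps >/dev/null 2>&1; then
  jps | missing_daemons NameNode DataNode Master Worker
fi
```

An empty result means every listed daemon was found; any name printed points to a daemon whose log is worth checking.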

From the Spark installation directory, launch the interactive shell in bin:

hadoop@tinylcy:/opt/spark$ bin/spark-shell

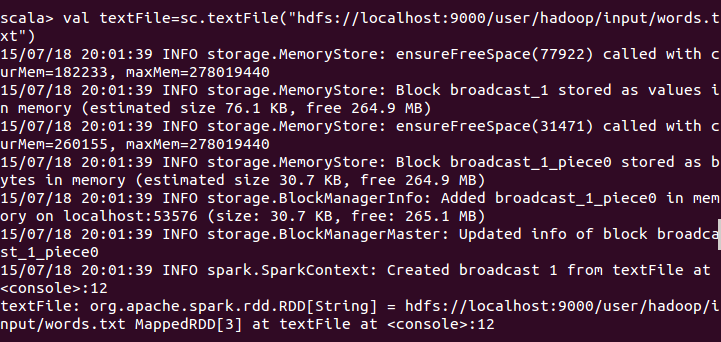

Test:

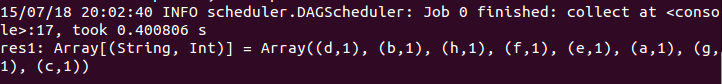

scala> val textFile=sc.textFile("hdfs://localhost:9000/user/hadoop/input/words.txt")

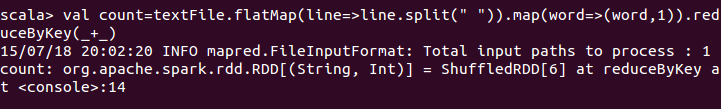

scala> val count=textFile.flatMap(line=>line.split(" ")).map(word=>(word,1)).reduceByKey(_+_)

scala> count.collect()
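To cross-check the word counts outside Spark, the same computation can be sketched with standard Unix tools: splitting into one word per line plays the role of `flatMap`, and tallying per word plays the role of `map` plus `reduceByKey`. `count_words` is a hypothetical helper. Unlike `split(" ")` in Scala, this version squeezes repeated spaces and skips empty tokens, so the results can differ slightly on such input.

```shell
# Hypothetical helper: count words read from stdin.
# Step 1 (tr): put each space-separated word on its own line.
# Step 2 (awk): tally occurrences per word, skipping empty lines.
# Step 3 (sort): order the output by word for stable comparison.
count_words() {
  tr -s ' ' '\n' |
    awk 'NF { c[$0]++ } END { for (w in c) print w, c[w] }' |
    LC_ALL=C sort
}

# Small local demonstration.
printf 'a b a\nb c\n' | count_words

# Against the file used in this post (requires a running HDFS):
#   hdfs dfs -cat /user/hadoop/input/words.txt | count_words
```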

Another example:

scala> val data=Array(1,2,3,4,5) // create data
data: Array[Int] = Array(1, 2, 3, 4, 5)
scala> val distData=sc.parallelize(data) // turn data into an RDD
distData: org.apache.spark.rdd.RDD[Int] = ParallelCollectionRDD[0] at parallelize at <console>:14
scala> distData.reduce(_+_) // run a computation on the RDD: sum the elements of data
15/07/19 14:37:56 INFO spark.SparkContext: Starting job: reduce at <console>:17
15/07/19 14:37:56 INFO scheduler.DAGScheduler: Got job 0 (reduce at <console>:17) with 4 output partitions (allowLocal=false)
15/07/19 14:37:56 INFO scheduler.DAGScheduler: Final stage: Stage 0(reduce at <console>:17)
15/07/19 14:37:56 INFO scheduler.DAGScheduler: Parents of final stage: List()
15/07/19 14:37:56 INFO scheduler.DAGScheduler: Missing parents: List()
15/07/19 14:37:56 INFO scheduler.DAGScheduler: Submitting Stage 0 (ParallelCollectionRDD[0] at parallelize at <console>:14), which has no missing parents
15/07/19 14:37:56 WARN util.SizeEstimator: Failed to check whether UseCompressedOops is set; assuming yes
15/07/19 14:37:56 INFO storage.MemoryStore: ensureFreeSpace(1184) called with curMem=0, maxMem=278019440
15/07/19 14:37:56 INFO storage.MemoryStore: Block broadcast_0 stored as values in memory (estimated size 1184.0 B, free 265.1 MB)
15/07/19 14:37:56 INFO storage.MemoryStore: ensureFreeSpace(912) called with curMem=1184, maxMem=278019440
15/07/19 14:37:56 INFO storage.MemoryStore: Block broadcast_0_piece0 stored as bytes in memory (estimated size 912.0 B, free 265.1 MB)
15/07/19 14:37:56 INFO storage.BlockManagerInfo: Added broadcast_0_piece0 in memory on localhost:47649 (size: 912.0 B, free: 265.1 MB)
15/07/19 14:37:56 INFO storage.BlockManagerMaster: Updated info of block broadcast_0_piece0
15/07/19 14:37:56 INFO spark.SparkContext: Created broadcast 0 from broadcast at DAGScheduler.scala:838
15/07/19 14:37:56 INFO scheduler.DAGScheduler: Submitting 4 missing tasks from Stage 0 (ParallelCollectionRDD[0] at parallelize at <console>:14)
15/07/19 14:37:56 INFO scheduler.TaskSchedulerImpl: Adding task set 0.0 with 4 tasks
15/07/19 14:37:56 INFO scheduler.TaskSetManager: Starting task 0.0 in stage 0.0 (TID 0, localhost, PROCESS_LOCAL, 1204 bytes)
15/07/19 14:37:56 INFO scheduler.TaskSetManager: Starting task 1.0 in stage 0.0 (TID 1, localhost, PROCESS_LOCAL, 1204 bytes)
15/07/19 14:37:56 INFO scheduler.TaskSetManager: Starting task 2.0 in stage 0.0 (TID 2, localhost, PROCESS_LOCAL, 1204 bytes)
15/07/19 14:37:56 INFO scheduler.TaskSetManager: Starting task 3.0 in stage 0.0 (TID 3, localhost, PROCESS_LOCAL, 1208 bytes)
15/07/19 14:37:56 INFO executor.Executor: Running task 3.0 in stage 0.0 (TID 3)
15/07/19 14:37:56 INFO executor.Executor: Running task 1.0 in stage 0.0 (TID 1)
15/07/19 14:37:56 INFO executor.Executor: Running task 2.0 in stage 0.0 (TID 2)
15/07/19 14:37:56 INFO executor.Executor: Running task 0.0 in stage 0.0 (TID 0)
15/07/19 14:37:56 INFO executor.Executor: Finished task 0.0 in stage 0.0 (TID 0). 727 bytes result sent to driver
15/07/19 14:37:56 INFO executor.Executor: Finished task 1.0 in stage 0.0 (TID 1). 727 bytes result sent to driver
15/07/19 14:37:56 INFO executor.Executor: Finished task 3.0 in stage 0.0 (TID 3). 727 bytes result sent to driver
15/07/19 14:37:56 INFO executor.Executor: Finished task 2.0 in stage 0.0 (TID 2). 727 bytes result sent to driver
15/07/19 14:37:56 INFO scheduler.TaskSetManager: Finished task 0.0 in stage 0.0 (TID 0) in 60 ms on localhost (1/4)
15/07/19 14:37:56 INFO scheduler.TaskSetManager: Finished task 3.0 in stage 0.0 (TID 3) in 56 ms on localhost (2/4)
15/07/19 14:37:56 INFO scheduler.TaskSetManager: Finished task 1.0 in stage 0.0 (TID 1) in 57 ms on localhost (3/4)
15/07/19 14:37:56 INFO scheduler.TaskSetManager: Finished task 2.0 in stage 0.0 (TID 2) in 58 ms on localhost (4/4)
15/07/19 14:37:56 INFO scheduler.TaskSchedulerImpl: Removed TaskSet 0.0, whose tasks have all completed, from pool
15/07/19 14:37:56 INFO scheduler.DAGScheduler: Stage 0 (reduce at <console>:17) finished in 0.077 s
15/07/19 14:37:56 INFO scheduler.DAGScheduler: Job 0 finished: reduce at <console>:17, took 0.342516 s
res0: Int = 15 // the result
scala>