Requirements

The page http://lishi.tianqi.com/kunming/201802.html shows Kunming's weather for February 2018. The goal is to scrape that data from the page and store it in a MySQL database.
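The page URL appears to encode the city (`kunming`) and the month as `yyyymm`. If that pattern also holds for other cities and months (an assumption inferred from this single URL, not something the site documents here), the URLs for a range of months could be generated as in this minimal sketch. `month_url` and `month_urls` are hypothetical helper names.

```python
from datetime import date
from typing import List

# Hypothetical helpers: they ASSUME every month page follows the
# http://lishi.tianqi.com/<city>/<yyyymm>.html pattern seen in the URL above.
BASE = "http://lishi.tianqi.com"


def month_url(city: str, year: int, month: int) -> str:
    """Build the history-page URL for one city and one month."""
    if not 1 <= month <= 12:
        raise ValueError("month must be in 1..12")
    return f"{BASE}/{city}/{year:04d}{month:02d}.html"


def month_urls(city: str, start: date, end: date) -> List[str]:
    """Return URLs for every month from start to end, inclusive."""
    urls = []
    y, m = start.year, start.month
    while (y, m) <= (end.year, end.month):
        urls.append(month_url(city, y, m))
        m += 1
        if m > 12:  # roll over into January of the next year
            y, m = y + 1, 1
    return urls


print(month_url("kunming", 2018, 2))
# http://lishi.tianqi.com/kunming/201802.html
```

Each generated URL could then be fetched in turn by the scraper, ideally with a pause between requests.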

Implementation

```python
# -*- coding: utf-8 -*-
import random
import urllib.request

import pymysql
from bs4 import BeautifulSoup

# Browser User-Agent strings; one is picked at random for the request
user_agent = [
    'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/54.0.2840.87 Safari/537.36',
    'Mozilla/5.0 (X11; U; Linux x86_64; zh-CN; rv:1.9.2.10) Gecko/20100922 Ubuntu/10.10 (maverick) Firefox/3.6.10',
    'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.11 (KHTML, like Gecko) Chrome/23.0.1271.64 Safari/537.11',
    'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/30.0.1599.101 Safari/537.36',
    'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.1 (KHTML, like Gecko) Chrome/21.0.1180.71 Safari/537.1 LBBROWSER',
    'Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; WOW64; Trident/5.0; SLCC2; .NET CLR 2.0.50727; .NET CLR 3.5.30729; .NET CLR 3.0.30729; Media Center PC 6.0; .NET4.0C; .NET4.0E; QQBrowser/7.0.3698.400)',
]
# 'Accept-Encoding: gzip' is left out: urllib does not decompress gzip responses
headers = {
    'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,*/*;q=0.8',
    'Accept-Language': 'zh-CN,zh;q=0.8',
    'User-Agent': random.choice(user_agent),
}

print("Connecting to the MySQL server")
# PyMySQL 1.0+ accepts only keyword arguments here
db = pymysql.connect(host="192.168.6.128", user="root", password="root",
                     database="test_db", charset="utf8")
print("****** Connected ******")
cursor = db.cursor()

# Recreate the target table on every run
cursor.execute("DROP TABLE IF EXISTS TB")
sql = """CREATE TABLE TB(DT_DATE VARCHAR(10), HIGH_TEMP INT, LOW_TEMP INT,
         WEATHER VARCHAR(40), WIND VARCHAR(40), WIND_TAIL VARCHAR(10))"""
cursor.execute(sql)

url = "http://lishi.tianqi.com/kunming/201802.html"
# Attach the headers built above (the request previously went out without them)
request = urllib.request.Request(url, headers=headers)
index = urllib.request.urlopen(request).read()
# Name the parser explicitly to avoid bs4's "no parser specified" warning
index_soup = BeautifulSoup(index, "html.parser")

# class_="" matches only <ul> tags without a class, which skips the class="t1" header row
uls = index_soup.find("div", class_="tqtongji2").find_all("ul", class_="")
insert_tb = "INSERT INTO TB VALUES (%s, %s, %s, %s, %s, %s)"
for ul in uls:
    # The <li> cells of one row, in the same order as the table columns
    li = ul.find_all("li")
    V_DT_DATE = li[0].text.strip()
    V_HIGH_TEMP = li[1].text.strip()
    V_LOW_TEMP = li[2].text.strip()
    V_WEATHER = li[3].text.strip()
    V_WIND = li[4].text.strip()
    V_WIND_TAIL = li[5].text.strip()
    print(V_WIND)
    data = (V_DT_DATE, V_HIGH_TEMP, V_LOW_TEMP, V_WEATHER, V_WIND, V_WIND_TAIL)
    cursor.execute(insert_tb, data)

db.commit()
print("Data scraped and stored in MySQL")
db.close()
```

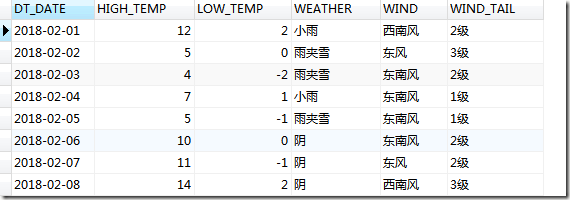
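The parse-and-store logic can also be exercised offline. This minimal sketch makes two substitutions so it runs without network access or a MySQL server: it swaps BeautifulSoup for the standard library's `html.parser.HTMLParser`, and it uses an in-memory `sqlite3` database in place of MySQL (sqlite3 uses `?` placeholders where PyMySQL uses `%s`). `SAMPLE_HTML` is hypothetical markup that only mimics the `div.tqtongji2` / `<ul>` / `<li>` structure the scraper relies on; its values are placeholders, not real Kunming readings, and the live page may be laid out differently. `RowParser` and `store` are illustrative names, and the `int()` conversion assumes the temperature cells hold bare numbers.

```python
import sqlite3
from html.parser import HTMLParser

# HYPOTHETICAL markup mimicking the expected page structure; placeholder values only
SAMPLE_HTML = """
<div class="tqtongji2">
  <ul class="t1"><li>Date</li><li>High</li><li>Low</li><li>Weather</li><li>Wind</li><li>Force</li></ul>
  <ul><li>2018-02-01</li><li>10</li><li>1</li><li>sunny</li><li>SW wind</li><li>level 2</li></ul>
  <ul><li>2018-02-02</li><li>12</li><li>3</li><li>cloudy</li><li>W wind</li><li>level 3</li></ul>
</div>
"""


class RowParser(HTMLParser):
    """Collect the <li> texts of each class-less <ul> inside div.tqtongji2.

    Assumes no nested <div> inside div.tqtongji2.
    """

    def __init__(self):
        super().__init__()
        self.in_div = self.in_row = self.in_li = False
        self.rows, self._cells, self._text = [], [], []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "div" and attrs.get("class") == "tqtongji2":
            self.in_div = True
        elif tag == "ul" and self.in_div and not attrs.get("class"):
            self.in_row, self._cells = True, []  # data row (header has class="t1")
        elif tag == "li" and self.in_row:
            self.in_li, self._text = True, []

    def handle_endtag(self, tag):
        if tag == "li" and self.in_li:
            self._cells.append("".join(self._text).strip())
            self.in_li = False
        elif tag == "ul" and self.in_row:
            self.rows.append(tuple(self._cells))
            self.in_row = False
        elif tag == "div" and self.in_div:
            self.in_div = False

    def handle_data(self, data):
        if self.in_li:
            self._text.append(data)


def store(rows):
    """Load parsed rows into an in-memory sqlite3 table shaped like TB."""
    conn = sqlite3.connect(":memory:")
    conn.execute("""CREATE TABLE TB(DT_DATE VARCHAR(10), HIGH_TEMP INT, LOW_TEMP INT,
                    WEATHER VARCHAR(40), WIND VARCHAR(40), WIND_TAIL VARCHAR(10))""")
    conn.executemany("INSERT INTO TB VALUES (?, ?, ?, ?, ?, ?)",
                     [(r[0], int(r[1]), int(r[2]), r[3], r[4], r[5]) for r in rows])
    conn.commit()
    return conn


parser = RowParser()
parser.feed(SAMPLE_HTML)
db = store(parser.rows)
for row in db.execute("SELECT DT_DATE, HIGH_TEMP, LOW_TEMP FROM TB ORDER BY DT_DATE"):
    print(row)
# ('2018-02-01', 10, 1)
# ('2018-02-02', 12, 3)
```

Keeping parsing separate from storage like this makes it easy to check the row extraction against a saved copy of the page before writing anything to MySQL.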

After running the scraper program, querying table TB in the database gives the following result: