I. Using the notification script

1. Using the sample script

a. Script contents

    #!/bin/bash
    #
    # Address that receives the notification mail.
    contact='root@localhost'

    # Mail a short message announcing the VRRP state ($1) this host has entered.
    notify() {
        local mailsubject="$(hostname) to be $1,vip floating"
        local mailbody="$(date +'%F%T'):vrrp transltion,$(hostname) changed to be $1"
        echo "$mailbody" | mail -s "$mailsubject" "$contact"
    }

    # keepalived passes the new state as the first argument.
    case $1 in
    master)
        notify master
        ;;
    backup)
        notify backup
        ;;
    fault)
        notify fault
        ;;
    *)
        echo "Usage: $(basename $0) {master|backup|fault}"
        exit 1
        ;;
    esac

b. Deploying the script

1) On node1

    [root@node1 /]# cat /etc/keepalived/notify.sh
    #!/bin/bash
    #
    contact='root@localhost'

    notify(){
        mailsubject="$(hostname) to be $1,vip floating"
        mailbody="$(date +'%F%T'):vrrp transltion,$(hostname) changed to be $1"
        echo "$mailbody" |mail -s "$mailsubject" $contact
    }

    case $1 in
    master)
        notify master
        ;;
    backup)
        notify backup
        ;;
    fault)
        notify fault
        ;;
    *)
        echo "Usage: $(basename $0) {master|backup|fault}"
        exit 1
        ;;
    esac

Make the script executable and run it once by hand:

    [root@node1 /]# chmod +x /etc/keepalived/notify.sh
    [root@node1 /]# bash -x /etc/keepalived/notify.sh master
    + contact=root@localhost
    + case $1 in
    + notify master
    ++ hostname
    + mailsubject='node1 to be master,vip floating'
    ++ date +%F%T
    ++ hostname
    + mailbody='2021-02-1814:13:21:vrrp transltion,node1 changed to be master'
    + echo '2021-02-1814:13:21:vrrp transltion,node1 changed to be master'
    + mail -s 'node1 to be master,vip floating' root@localhost
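In the trace above, `date +'%F%T'` prints the date and the time with nothing between them (`2021-02-1814:13:21`). As a small sketch, and not part of the original script, a helper such as the hypothetical `format_ts` below puts a space between the two fields. It assumes GNU `date`; the `-d @SECONDS` option is used only so that the example is reproducible.

```shell
#!/bin/bash
# Hypothetical helper (not in the original notify.sh): format an epoch
# timestamp as "YYYY-MM-DD HH:MM:SS" in UTC. Assumes GNU date.
format_ts() {
    date -u -d "@$1" +'%F %T'
}

# Fixed epoch value so the output is reproducible.
format_ts 0    # prints: 1970-01-01 00:00:00
```

Inside `notify()`, the same format string could be used directly as `$(date +'%F %T')`.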

2) Copy the script to node2

[root@node1 /]# scp -p /etc/keepalived/notify.sh root@192.168.10.42:/etc/keepalived/

2. Configuring the notification script in the configuration file

a. Configuration on node1

    [root@node1 /]# cat /etc/keepalived/keepalived.conf
    global_defs {
        notification_email {
            root@localhost
        }
        notification_email_from keepalived@localhost
        smtp_server 127.0.0.1
        smtp_connect_timeout 30
        router_id node1
        vrrp_mcast_group4 224.1.101.33
    }

    vrrp_instance VI_1 {
        state MASTER
        priority 100
        interface ens33
        virtual_router_id 51
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass w0KE4b81
        }
        virtual_ipaddress {
            192.168.10.100/24 dev ens33 label ens33:0
        }
        notify_master "/etc/keepalived/notify.sh master"
        notify_backup "/etc/keepalived/notify.sh backup"
        notify_fault "/etc/keepalived/notify.sh fault"
    }

b. Configuration on node2

    [root@node2 keepalived]# cat /etc/keepalived/keepalived.conf
    global_defs {
        notification_email {
            root@localhost
        }
        notification_email_from keepalived@localhost
        smtp_server 127.0.0.1
        smtp_connect_timeout 30
        router_id node2
        vrrp_mcast_group4 224.1.101.33
    }

    vrrp_instance VI_1 {
        state BACKUP
        priority 96
        interface ens33
        virtual_router_id 51
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass w0KE4b81
        }
        virtual_ipaddress {
            192.168.10.100/24 dev ens33 label ens33:0
        }
        notify_master "/etc/keepalived/notify.sh master"
        notify_backup "/etc/keepalived/notify.sh backup"
        notify_fault "/etc/keepalived/notify.sh fault"
    }
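Besides `notify_master`, `notify_backup` and `notify_fault`, keepalived also offers a generic `notify` directive that calls one script for every transition and appends the object type (`GROUP` or `INSTANCE`), its name, and the target state as arguments (recent releases append the priority as well). The sketch below shows how such a script might dispatch on those arguments. The function name `handle_notify` and the message format are illustrative assumptions, and the message is printed rather than mailed.

```shell
#!/bin/bash
# Sketch of a handler for keepalived's generic "notify" directive.
# handle_notify and its message format are illustrative assumptions.
# Arguments: $1 = GROUP|INSTANCE, $2 = group or instance name, $3 = target state.
handle_notify() {
    local type=$1 name=$2 state=$3
    case $state in
    MASTER|BACKUP|FAULT)
        # Lower-case the state so it matches the wording used by notify.sh.
        echo "$type $name changed to be $(echo "$state" | tr 'A-Z' 'a-z')"
        ;;
    *)
        echo "unhandled state: $state" >&2
        return 1
        ;;
    esac
}

handle_notify INSTANCE VI_1 MASTER    # prints: INSTANCE VI_1 changed to be master
```

A real script would end with `handle_notify "$@"` and be referenced from `vrrp_instance` with a single `notify` line pointing at it; the script path is up to the administrator.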

c. Testing

1) Stop the keepalived service on both node1 and node2, then start it on node2 only. node2 now becomes the master and holds the VIP 192.168.10.100:

    [root@node2 keepalived]# systemctl start keepalived
    [root@node2 keepalived]# ip a
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
        link/ether 00:0c:29:d3:d4:07 brd ff:ff:ff:ff:ff:ff
        inet 192.168.10.42/24 brd 192.168.10.255 scope global noprefixroute ens33
           valid_lft forever preferred_lft forever
        inet 192.168.10.100/24 scope global secondary ens33:0
           valid_lft forever preferred_lft forever
        inet6 fe80::20c:29ff:fed3:d407/64 scope link
           valid_lft forever preferred_lft forever
    [root@node2 keepalived]#

2) node2 has gone from backup to master, as its mailbox shows:

    [root@node2 keepalived]# systemctl start keepalived.service
    [root@node2 keepalived]# ip a
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
        link/ether 00:0c:29:d3:d4:07 brd ff:ff:ff:ff:ff:ff
        inet 192.168.10.42/24 brd 192.168.10.255 scope global noprefixroute ens33
           valid_lft forever preferred_lft forever
        inet 192.168.10.100/24 scope global secondary ens33:0
           valid_lft forever preferred_lft forever
        inet6 fe80::20c:29ff:fed3:d407/64 scope link
           valid_lft forever preferred_lft forever
    [root@node2 keepalived]# mail
    Heirloom Mail version 12.5 7/5/10.  Type ? for help.
    "/var/spool/mail/root": 2 messages 2 new
    >N  1 root                  Thu Feb 18 15:04  18/671   "node2 to be backup,vip floating"
     N  2 root                  Thu Feb 18 15:04  18/671   "node2 to be master,vip floating"
    &

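3) Start the keepalived service on node1 again. node1 has the higher priority (100 versus 96 on node2), so it takes over as master.

The mail notification on node1: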

    [root@node1 /]# systemctl start keepalived
    [root@node1 /]# ip a
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
        link/ether 00:0c:29:04:17:d9 brd ff:ff:ff:ff:ff:ff
        inet 192.168.10.41/24 brd 192.168.10.255 scope global noprefixroute ens33
           valid_lft forever preferred_lft forever
        inet 192.168.10.100/24 scope global secondary ens33:0
           valid_lft forever preferred_lft forever
        inet6 fe80::a4b:2160:4a8b:aa1f/64 scope link noprefixroute
           valid_lft forever preferred_lft forever
    [root@node1 /]# mail
    Heirloom Mail version 12.5 7/5/10.  Type ? for help.
    "/var/spool/mail/root": 6 messages 1 new 6 unread
     U  1 root                  Thu Feb 18 14:11  19/663   "node1 to be master,vip floating"
     U  2 root                  Thu Feb 18 14:11  19/663   "node1 to be master,vip floating"
     U  3 root                  Thu Feb 18 14:12  19/681   "node1 to be master,vip floating"
     U  4 root                  Thu Feb 18 14:12  19/681   "node1 to be master,vip floating"
     U  5 root                  Thu Feb 18 14:13  19/681   "node1 to be master,vip floating"
    >N  6 root                  Thu Feb 18 15:06  18/671   "node1 to be master,vip floating"
    &

The mail notification on node2:

    [root@node2 keepalived]# mail
    Heirloom Mail version 12.5 7/5/10.  Type ? for help.
    "/var/spool/mail/root": 3 messages 1 new 3 unread
     U  1 root                  Thu Feb 18 15:04  19/681   "node2 to be backup,vip floating"
     U  2 root                  Thu Feb 18 15:04  19/681   "node2 to be master,vip floating"
    >N  3 root                  Thu Feb 18 15:06  18/671   "node2 to be backup,vip floating"
    &
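Reading the `ip a` output by eye is enough for this walkthrough, but the same check can be scripted. The sketch below is one possible way to decide whether a node currently holds the VIP. The helper name `has_vip` is hypothetical, and it parses `ip -o -4 addr show` style text from standard input so that it can be tried on sample data first.

```shell
#!/bin/bash
# Hypothetical helper: succeed if the given IPv4 address appears in
# "ip -o -4 addr show" style output read from standard input.
has_vip() {
    local vip=$1
    # Field 4 of "ip -o -4 addr" output is ADDRESS/PREFIX.
    awk -v vip="$vip" '{ split($4, a, "/"); if (a[1] == vip) found = 1 }
                       END { exit found ? 0 : 1 }'
}

# Sample lines modelled on node2's addresses from the walkthrough.
sample='2: ens33    inet 192.168.10.42/24 brd 192.168.10.255 scope global noprefixroute ens33
2: ens33    inet 192.168.10.100/24 scope global secondary ens33:0'

if echo "$sample" | has_vip 192.168.10.100; then
    echo "VIP present: this node is master"
fi
```

On a live node the input would come from `ip -o -4 addr show dev ens33 | has_vip 192.168.10.100`.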

II. Virtual servers

1. Configuration parameters

a. Configuration block

    virtual_server IP port |
    virtual_server fwmark int
    {
        ...
        real_server {
            ...
        }
        ...
    }

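b. Common parameters:

delay_loop <INT>: the interval between health-check polls of the service;

lb_algo rr | wrr | lc | wlc | lblc | sh | dh: the scheduling method;

lb_kind NAT | DR | TUN: the cluster (forwarding) type;

persistence_timeout <INT>: how long a persistent connection lasts;

protocol TCP: the service protocol; older keepalived releases support only TCP, while newer ones also accept UDP and SCTP;

sorry_server <IPADDR> <PORT>: the address of the fallback server;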

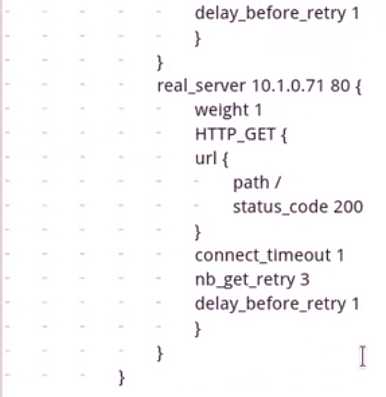

    real_server <IPADDR> <PORT>
    {
        weight <INT>
        notify_up <STRING> | <QUOTED-STRING>
        notify_down <STRING> | <QUOTED-STRING>
        HTTP_GET | SSL_GET | TCP_CHECK | SMTP_CHECK | MISC_CHECK {...}: the health-check method for this host;
    }

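HTTP_GET | SSL_GET: application-layer checks

    HTTP_GET | SSL_GET {
        url {
            path <URL_PATH>: the URL to monitor;
            status_code <INT>: the response code that counts as healthy;
            digest <STRING>: the checksum of the response body that counts as healthy;
        }
        nb_get_retry <INT>: the number of retries;
        delay_before_retry <INT>: the delay before each retry;
        connect_ip <IP ADDRESS>: the IP address of this RS that the health check connects to;
        connect_port <PORT>: the port of this RS that the health check connects to;
        bindto <IP ADDRESS>: the source address used for the health-check request;
        bind_port <PORT>: the source port used for the health-check request;
        connect_timeout <INTEGER>: the timeout for the connection request
    }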
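To make the interplay of `status_code`, `nb_get_retry`, `delay_before_retry` and `connect_timeout` concrete, here is a rough shell approximation of an HTTP_GET check. It is a sketch under stated assumptions and not keepalived's own logic: `fetch_status` and `http_check` are hypothetical names, `curl` is assumed to be installed, and the attempt count is treated as the total number of tries, which may differ from how keepalived counts retries internally.

```shell
#!/bin/bash
# Rough shell approximation of an HTTP_GET check (not keepalived's own code).
# fetch_status prints the HTTP status code for a URL; assumes curl is installed.
fetch_status() {
    curl -s -o /dev/null --connect-timeout "${CONNECT_TIMEOUT:-3}" -w '%{http_code}' "$1"
}

# http_check URL EXPECTED_CODE ATTEMPTS DELAY
# Succeeds as soon as one attempt returns EXPECTED_CODE; fails once ATTEMPTS
# tries have all returned something else, sleeping DELAY seconds between tries.
http_check() {
    local url=$1 expected=$2 attempts=$3 delay=$4 i=1
    while [ "$i" -le "$attempts" ]; do
        [ "$(fetch_status "$url")" = "$expected" ] && return 0
        # Wait before the next attempt, but not after the last one.
        if [ "$i" -lt "$attempts" ]; then sleep "$delay"; fi
        i=$((i + 1))
    done
    return 1
}
```

With the values used later in this walkthrough, a call would look like `http_check http://192.168.10.43/index.html 200 3 2 && echo healthy`.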

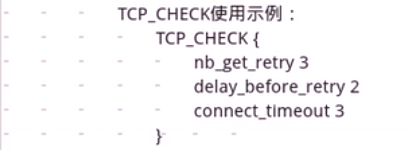

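    TCP_CHECK {
        connect_ip <IP ADDRESS>: the IP address of this RS that the health check connects to;
        connect_port <PORT>: the port of this RS that the health check connects to;
        bindto <IP ADDRESS>: the source address used for the health-check request;
        bind_port <PORT>: the source port used for the health-check request;
        connect_timeout <INTEGER>: the timeout for the connection request
    }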
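A TCP_CHECK only verifies that a TCP connection to the real server can be opened within `connect_timeout`. The sketch below imitates that idea in shell; it is an approximation and not keepalived's implementation. The function name `tcp_check` is hypothetical, and it assumes bash's `/dev/tcp` redirection and the coreutils `timeout` command are available.

```shell
#!/bin/bash
# Rough shell approximation of a TCP_CHECK (not keepalived's own code).
# tcp_check HOST PORT TIMEOUT: succeed if a TCP connection can be opened
# within TIMEOUT seconds. Relies on bash's /dev/tcp redirection and on the
# coreutils "timeout" command.
tcp_check() {
    local host=$1 port=$2 secs=$3
    # The inner bash receives HOST as $0 and PORT as $1.
    timeout "$secs" bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$host" "$port" 2>/dev/null
}
```

For the real server used later in this walkthrough, a call would look like `tcp_check 192.168.10.44 80 3 && echo "port open"`.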

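c. A highly available IPVS cluster example

2. Hands-on

a. We now build a DR-type cluster: two directors run keepalived as a high-availability pair (a director has a DIP), and there are two real servers (RS), each with its own RIP plus the VIP.

b. node1 and node2 play the two directors (one master, one backup), and two more hosts, node3 and node4, act as the real servers.

1) Install an HTTP service on both node3 and node4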

    [root@node3 ~]# curl 192.168.10.43
    <h1>RealServer 1</h1>
    [root@node3 ~]# curl 192.168.10.44
    <h1>RealServer 2</h1>

2) Next, write a script that sets up node3 and node4 as real servers. The script is identical on both nodes.

    [root@node3 ~]# cat /root/setrs.sh
    #!/bin/bash
    #
    vip='192.168.10.100'
    netmask='255.255.255.255'
    iface='lo:0'

    case $1 in
    start)
        echo 1 > /proc/sys/net/ipv4/conf/all/arp_ignore
        echo 1 > /proc/sys/net/ipv4/conf/lo/arp_ignore
        echo 2 > /proc/sys/net/ipv4/conf/all/arp_announce
        echo 2 > /proc/sys/net/ipv4/conf/lo/arp_announce

        ifconfig $iface $vip netmask $netmask broadcast $vip up
        route add -host $vip dev $iface
        ;;
    stop)
        ifconfig $iface down

        echo 0 > /proc/sys/net/ipv4/conf/all/arp_ignore
        echo 0 > /proc/sys/net/ipv4/conf/lo/arp_ignore
        echo 0 > /proc/sys/net/ipv4/conf/all/arp_announce
        echo 0 > /proc/sys/net/ipv4/conf/lo/arp_announce
        ;;
    *)
        exit 1
    esac
    [root@node3 ~]# bash -x setrs.sh start
    + vip=192.168.10.100
    + netmask=255.255.255.255
    + iface=lo:0
    + case $1 in
    + echo 1
    + echo 1
    + echo 2
    + echo 2
    + ifconfig lo:0 192.168.10.100 netmask 255.255.255.255 broadcast 192.168.10.100 up
    + route add -host 192.168.10.100 dev lo:0
    [root@node3 ~]# ifconfig
    ens33: flags=4163<UP,BROADCAST,RUNNING,MULTICAST>  mtu 1500
            inet 192.168.10.43  netmask 255.255.255.0  broadcast 192.168.10.255
            inet6 fe80::20c:29ff:fe64:5246  prefixlen 64  scopeid 0x20<link>
            ether 00:0c:29:64:52:46  txqueuelen 1000  (Ethernet)
            RX packets 16065  bytes 18040901 (17.2 MiB)
            RX errors 0  dropped 0  overruns 0  frame 0
            TX packets 4394  bytes 453747 (443.1 KiB)
            TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

    lo: flags=73<UP,LOOPBACK,RUNNING>  mtu 65536
            inet 127.0.0.1  netmask 255.0.0.0
            inet6 ::1  prefixlen 128  scopeid 0x10<host>
            loop  txqueuelen 1000  (Local Loopback)
            RX packets 112  bytes 9528 (9.3 KiB)
            RX errors 0  dropped 0  overruns 0  frame 0
            TX packets 112  bytes 9528 (9.3 KiB)
            TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

    lo:0: flags=73<UP,LOOPBACK,RUNNING>  mtu 65536
            inet 192.168.10.100  netmask 255.255.255.255
            loop  txqueuelen 1000  (Local Loopback)

    [root@node3 ~]# route -n
    Kernel IP routing table
    Destination     Gateway         Genmask         Flags Metric Ref    Use Iface
    0.0.0.0         192.168.10.254  0.0.0.0         UG    100    0        0 ens33
    192.168.10.0    0.0.0.0         255.255.255.0   U     100    0        0 ens33
    192.168.10.100  0.0.0.0         255.255.255.255 UH    0      0        0 lo
    [root@node3 ~]#

Configure node4 in the same way as node3.
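The `start` branch of `setrs.sh` can also be written with `sysctl` and `ip` instead of writing to `/proc` and calling `ifconfig`. The sketch below is one possible translation and not a drop-in replacement: the helper names `run` and `rs_start` and the `DRY_RUN` switch are assumptions of this example, and the explicit host route from the original script is left out on the assumption that the /32 address on `lo` is sufficient. With `DRY_RUN=1` the commands are only printed, so nothing on the system changes.

```shell
#!/bin/bash
# Sketch of setrs.sh's "start" branch using sysctl and iproute2 instead of
# echo > /proc and ifconfig. With DRY_RUN=1 (the default here) the commands
# are only printed, so the sketch can be inspected without root privileges.
vip='192.168.10.100'
DRY_RUN=${DRY_RUN:-1}

# Print the command in dry-run mode, execute it otherwise.
run() {
    if [ "$DRY_RUN" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

rs_start() {
    # Answer ARP requests only for addresses configured on the receiving interface.
    run sysctl -w net.ipv4.conf.all.arp_ignore=1
    run sysctl -w net.ipv4.conf.lo.arp_ignore=1
    # Always pick the best local source address for ARP announcements.
    run sysctl -w net.ipv4.conf.all.arp_announce=2
    run sysctl -w net.ipv4.conf.lo.arp_announce=2
    # Bind the VIP to the loopback interface with a /32 mask.
    run ip addr add "$vip/32" dev lo label lo:0
}

rs_start
```

Running it with `DRY_RUN=0` as root would apply the settings for real.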

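c. Now generate the rules on the two directors (node1 and node2). Note that keepalived creates the IPVS rules by itself. ipvsadm does not have to be installed for this, because keepalived calls the corresponding API to generate the rules.

1) Configure node1 and node2 so that keepalived generates the LVS rules.

On node1: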

    [root@node1 /]# cat /etc/keepalived/keepalived.conf
    global_defs {
        notification_email {
            root@localhost
        }
        notification_email_from keepalived@localhost
        smtp_server 127.0.0.1
        smtp_connect_timeout 30
        router_id node1
        vrrp_mcast_group4 224.1.101.33
    }

    vrrp_instance VI_1 {
        state MASTER
        priority 100
        interface ens33
        virtual_router_id 51
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass w0KE4b81
        }
        virtual_ipaddress {
            192.168.10.100/24 dev ens33 label ens33:0
        }
        notify_master "/etc/keepalived/notify.sh master"
        notify_backup "/etc/keepalived/notify.sh backup"
        notify_fault "/etc/keepalived/notify.sh fault"
    }

    virtual_server 192.168.10.100 80 {
        delay_loop 1                    # run the health check every 1s
        lb_algo wrr                     # scheduling algorithm: wrr
        lb_kind DR                      # forwarding type: DR
        protocol TCP                    # protocol: TCP
        sorry_server 127.0.0.1 80       # when every backend is down, fall back to port 80 on this host; nginx can be installed on the director as the sorry server
        real_server 192.168.10.43 80 {
            weight 1                    # weight: 1
            HTTP_GET {                  # health check method: HTTP_GET
                url {
                    path /index.html    # request the home page
                    status_code 200     # a 200 response means the check passed
                }
                nb_get_retry 3          # try the check 3 times; three failures mean unhealthy
                delay_before_retry 2    # wait 2s before each retry
                connect_timeout 3       # connection timeout: 3s
            }
        }
        real_server 192.168.10.44 80 {
            weight 1                    # weight: 1
            HTTP_GET {                  # health check method: HTTP_GET
                url {
                    path /index.html    # request the home page
                    status_code 200     # a 200 response means the check passed
                }
                nb_get_retry 3          # try the check 3 times; three failures mean unhealthy
                delay_before_retry 2    # wait 2s before each retry
                connect_timeout 3       # connection timeout: 3s
            }
        }
    }

On node2:

    [root@node2 keepalived]# cat /etc/keepalived/keepalived.conf
    global_defs {
        notification_email {
            root@localhost
        }
        notification_email_from keepalived@localhost
        smtp_server 127.0.0.1
        smtp_connect_timeout 30
        router_id node2
        vrrp_mcast_group4 224.1.101.33
    }

    vrrp_instance VI_1 {
        state BACKUP
        priority 96
        interface ens33
        virtual_router_id 51
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass w0KE4b81
        }
        virtual_ipaddress {
            192.168.10.100/24 dev ens33 label ens33:0
        }
        notify_master "/etc/keepalived/notify.sh master"
        notify_backup "/etc/keepalived/notify.sh backup"
        notify_fault "/etc/keepalived/notify.sh fault"
    }

    virtual_server 192.168.10.100 80 {
        delay_loop 1                    # run the health check every 1s
        lb_algo wrr                     # scheduling algorithm: wrr
        lb_kind DR                      # forwarding type: DR
        protocol TCP                    # protocol: TCP
        sorry_server 127.0.0.1 80       # when every backend is down, fall back to port 80 on this host; nginx can be installed on the director as the sorry server
        real_server 192.168.10.43 80 {
            weight 1                    # weight: 1
            HTTP_GET {                  # health check method: HTTP_GET
                url {
                    path /index.html    # request the home page
                    status_code 200     # a 200 response means the check passed
                }
                nb_get_retry 3          # try the check 3 times; three failures mean unhealthy
                delay_before_retry 2    # wait 2s before each retry
                connect_timeout 3       # connection timeout: 3s
            }
        }
        real_server 192.168.10.44 80 {
            weight 1                    # weight: 1
            HTTP_GET {                  # health check method: HTTP_GET
                url {
                    path /index.html    # request the home page
                    status_code 200     # a 200 response means the check passed
                }
                nb_get_retry 3          # try the check 3 times; three failures mean unhealthy
                delay_before_retry 2    # wait 2s before each retry
                connect_timeout 3       # connection timeout: 3s
            }
        }
    }

2) Start the keepalived service on node1 and node2, then inspect the generated rules with ipvsadm.

On node1:

    [root@node1 /]# ipvsadm -ln
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
    TCP  192.168.10.100:80 wrr
      -> 192.168.10.43:80             Route   1      0          1
      -> 192.168.10.44:80             Route   1      0          1

On node2:

    [root@node2 keepalived]# ipvsadm -ln
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
    TCP  192.168.10.100:80 wrr
      -> 192.168.10.43:80             Route   1      0          0
      -> 192.168.10.44:80             Route   1      0          0
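For comparison, the table that keepalived built here could be created by hand with a few `ipvsadm` commands. The sketch below only prints those commands, so it is safe to run anywhere. It is an illustration of what the rules mean, not what keepalived executes, and the function name `ipvs_commands` is made up for this example.

```shell
#!/bin/bash
# Print the ipvsadm commands that would build roughly the same table by hand.
# This is an illustration only: keepalived does not run ipvsadm.
vip='192.168.10.100'
port=80
real_servers='192.168.10.43 192.168.10.44'

ipvs_commands() {
    local rs
    # -A: add a virtual service, -t: TCP service address, -s: scheduler
    echo "ipvsadm -A -t $vip:$port -s wrr"
    for rs in $real_servers; do
        # -a: add a real server, -r: its address, -g: direct routing, -w: weight
        echo "ipvsadm -a -t $vip:$port -r $rs:$port -g -w 1"
    done
}

ipvs_commands
```

The `-g` flag corresponds to the `Route` entry in the Forward column and to `lb_kind DR` in the keepalived configuration.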

3) Access the VIP; the requests are balanced across the backend hosts:

    [root@node2 keepalived]# curl 192.168.10.100
    <h1>RealServer 2</h1>
    [root@node2 keepalived]# curl 192.168.10.100
    <h1>RealServer 1</h1>
    [root@node2 keepalived]# curl 192.168.10.100
    <h1>RealServer 2</h1>
    [root@node2 keepalived]# curl 192.168.10.100
    <h1>RealServer 1</h1>
    [root@node2 keepalived]# curl 192.168.10.100
    <h1>RealServer 2</h1>
    [root@node2 keepalived]# curl 192.168.10.100
    <h1>RealServer 1</h1>
    [root@node2 keepalived]# curl 192.168.10.100
    <h1>RealServer 2</h1>
    [root@node2 keepalived]# curl 192.168.10.100
    <h1>RealServer 1</h1>
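With equal weights, wrr alternates between the two real servers, which is what the eight responses above show. Counting responses by hand gets tedious for longer runs, so a small tally helper can summarise them. The sketch below uses a hypothetical function name, `tally_responses`, and is fed sample lines modelled on the output above rather than live requests.

```shell
#!/bin/bash
# Count how many times each distinct line appears on standard input and
# print "COUNT LINE", sorted by the line text.
tally_responses() {
    awk '{ count[$0]++ } END { for (line in count) print count[line], line }' |
        LC_ALL=C sort -k2
}

# Sample input modelled on the eight curl responses above.
for i in 1 2 3 4; do
    echo '<h1>RealServer 2</h1>'
    echo '<h1>RealServer 1</h1>'
done | tally_responses
```

Against the live cluster, the input could come from `for i in $(seq 8); do curl -s 192.168.10.100; done | tally_responses`.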

4) Stop the httpd service on node3; the entry for that real server is removed from the rules automatically:

    [root@node2 ~]# ipvsadm -ln
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
    TCP  192.168.10.100:80 wrr
      -> 192.168.10.44:80             Route   1      0          0
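To confirm from a script which real servers are still in rotation, the `ipvsadm -ln` listing can be filtered down to the real server lines. The following is a minimal sketch: the helper name `list_real_servers` is hypothetical, and it reads the listing from standard input so it can be tried on the sample text below.

```shell
#!/bin/bash
# Print the address:port of every real server found in "ipvsadm -ln" output
# read from standard input. The header line is skipped because its second
# field is not a numeric address:port pair.
list_real_servers() {
    awk '$1 == "->" && $2 ~ /^[0-9.]+:[0-9]+$/ { print $2 }'
}

# Sample modelled on the output above, after httpd on node3 was stopped.
sample='IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
TCP  192.168.10.100:80 wrr
  -> 192.168.10.44:80             Route   1      0          0'

echo "$sample" | list_real_servers    # prints: 192.168.10.44:80
```

On a director, the same filter would be applied as `ipvsadm -ln | list_real_servers`.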

d. Now configure HTTP_GET as the check method for node3 and TCP_CHECK as the check method for node4

1) The configuration on node1 and node2 is as follows (node1 shown)

    [root@node1 ~]# cat /etc/keepalived/keepalived.conf
    global_defs {
        notification_email {
            root@localhost
        }
        notification_email_from keepalived@localhost
        smtp_server 127.0.0.1
        smtp_connect_timeout 30
        router_id node1
        vrrp_mcast_group4 224.1.101.33
    }

    vrrp_instance VI_1 {
        state MASTER
        priority 100
        interface ens33
        virtual_router_id 51
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass w0KE4b81
        }
        virtual_ipaddress {
            192.168.10.100/24 dev ens33 label ens33:0
        }
        notify_master "/etc/keepalived/notify.sh master"
        notify_backup "/etc/keepalived/notify.sh backup"
        notify_fault "/etc/keepalived/notify.sh fault"
    }

    virtual_server 192.168.10.100 80 {
        delay_loop 1                    # run the health check every 1s
        lb_algo wrr                     # scheduling algorithm: wrr
        lb_kind DR                      # forwarding type: DR
        protocol TCP                    # protocol: TCP
        sorry_server 127.0.0.1 80       # when every backend is down, fall back to port 80 on this host; nginx can be installed on the director as the sorry server
        real_server 192.168.10.43 80 {
            weight 1                    # weight: 1
            HTTP_GET {                  # health check method: HTTP_GET
                url {
                    path /index.html    # request the home page
                    status_code 200     # a 200 response means the check passed
                }
                nb_get_retry 3          # try the check 3 times; three failures mean unhealthy
                delay_before_retry 2    # wait 2s before each retry
                connect_timeout 3       # connection timeout: 3s
            }
        }
        real_server 192.168.10.44 80 {
            weight 1                    # weight: 1
            TCP_CHECK {                 # health check method: TCP_CHECK
                nb_get_retry 3          # try the check 3 times; three failures mean unhealthy
                delay_before_retry 2    # wait 2s before each retry
                connect_timeout 3       # connection timeout: 3s
            }
        }
    }