如果我们需要优化kernel程序,我们必须知道一些GPU的底层知识,本文简单介绍一下GPU内存相关和线程调度知识,并且用一个小示例演示如何简单根据内存结构优化。

一、GPU总线寻址和合并内存访问

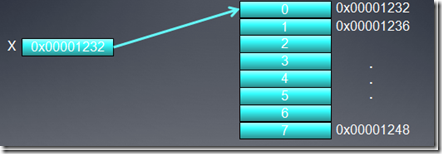

假设X指向一个32位整数数组的指针,数组首地址是0x00001232,那么一个线程需要访问第0个成员时是也许是如下访问的:

int tmp = X[0]假设内存总线宽度是256位,内存访问时必须和总线宽度对齐,所以内存只能访问0x00000020,0x00000040这种地址(0x20=256位),如果要访问0x00001232,那么内存必须同时获取0x00001220-0x0000123f的数据,一次获取了32字节的数据,但是我们有用的只有4字节,这就造成了28个字节的浪费。

事实上,GPU为了利用总线带宽,它会合并内存访问,尽量将多个线程读取内存合并到一起进行访问,例如我们有16个线程,每个线程访问4字节,总共需要访问0x00001232-0x00001272,如果不合并内存访问,那么他需要访问内存16次,每次浪费28字节空间;如果合并内存访问,它第一次访问0x00001220-0x0000123f,第二次访问0x00001240-0x0000125f,第三次访问0x00001260-0x0000133f,总共只需要访问三次,这样可以大大减少内存访问次数。优化kernel的性能。

二、性能优化

考虑一个矩阵相乘的问题,一个MXP的矩阵A,和一个P*N的矩阵B相乘得到MXN的C矩阵,在CPU中计算的代码入下:

#define M 1024 #define P 512 #define N 2048 void RunAsCpu( const float *A, const float *B, float* C) { for (int i = 0; i < M; i++) { for (int j = 0; j < N; j++) { C[i*N + j] = 0.0; for (int k = 0; k < P; k++) { C[i*N + j] += A[i*P + k] * B[k*N + j]; } } } }如果使用GPU运行,那么通过降维操作,创建M*N个线程,第一个维度大小的M,第二个维度大小为N,kernel中代码可能如下:

__kernel void RunAsGpu_1( __global float *A, __global float *B, int M, int N, int P, __global float* C) { int x = get_global_id(0); int y = get_global_id(1); float sum = 0; for(int i = 0;i<P;i++) { sum += A[x*P + i]*B[i*N + y]; } C[x*N + y] = sum; }此时,如果思考一下,可能会发现,还有第二种方案,即第一个维度大小的N,第二个维度大小为M

这两个kernel运行结果是一样的,那运行效率有什么不同呢?host文件用如下代码,然后运行一下看看效果:__kernel void RunAsGpu_2( __global float *A, __global float *B, int M, int N, int P, __global float* C) { int x = get_global_id(0); int y = get_global_id(1); float sum = 0; for(int i = 0;i<P;i++) { sum += A[y*P + i]*B[i*N + x]; } C[y*N + x] = sum; }#include <iostream> #include <CL/cl.h> #include <cassert> #include <windows.h> #include <ctime> using namespace std; #define M 1024 #define P 512 #define N 2048 void RunAsCpu( const float *A, const float *B, float* C) { for (int i = 0; i < M; i++) { for (int j = 0; j < N; j++) { C[i*N + j] = 0.0; for (int k = 0; k < P; k++) { C[i*N + j] += A[i*P + k] * B[k*N + j]; } } } } //计时函数 double time_stamp() { LARGE_INTEGER curclock; LARGE_INTEGER freq; if ( !QueryPerformanceCounter(&curclock) || !QueryPerformanceFrequency(&freq) ) { return -1; } return double(curclock.QuadPart) / freq.QuadPart; } #define OPENCL_CHECK_ERRORS(ERR) if(ERR != CL_SUCCESS) { cerr << "OpenCL error with code " << ERR << " happened in file " << __FILE__ << " at line " << __LINE__ << ". Exiting... "; exit(1); } int main(int argc, const char** argv) { cl_int error = 0; // Used to handle error codes cl_context context; cl_command_queue queue; cl_device_id device; // 遍历系统中所有OpenCL平台 cl_uint num_of_platforms = 0; // 得到平台数目 error = clGetPlatformIDs(0, 0, &num_of_platforms); OPENCL_CHECK_ERRORS(error); cout << "可用平台数: " << num_of_platforms << endl; cl_platform_id* platforms = new cl_platform_id[num_of_platforms]; // 得到所有平台的ID error = clGetPlatformIDs(num_of_platforms, platforms, 0); OPENCL_CHECK_ERRORS(error); //遍历平台,选择一个Intel平台的 cl_uint selected_platform_index = num_of_platforms; for (cl_uint i = 0; i < num_of_platforms; ++i) { size_t platform_name_length = 0; error = clGetPlatformInfo( platforms[i], CL_PLATFORM_NAME, 0, 0, &platform_name_length ); OPENCL_CHECK_ERRORS(error); // 调用两次,第一次是得到名称的长度 char* platform_name = new char[platform_name_length]; error = clGetPlatformInfo( platforms[i], CL_PLATFORM_NAME, platform_name_length, platform_name, 0 ); OPENCL_CHECK_ERRORS(error); cout << " [" << i << "] " << platform_name; if ( strstr(platform_name, "Intel") && selected_platform_index == num_of_platforms // have not selected yet ) { cout << " [Selected]"; selected_platform_index = i; } cout << endl; delete[] platform_name; } if (selected_platform_index == num_of_platforms) { cerr << "没有找到Intel平台 "; return 1; } // Device cl_platform_id platform = platforms[selected_platform_index]; error = clGetDeviceIDs(platform, CL_DEVICE_TYPE_GPU, 1, &device, NULL); OPENCL_CHECK_ERRORS(error) // Context context = clCreateContext(0, 1, &device, NULL, NULL, &error); OPENCL_CHECK_ERRORS(error) // Command-queue CL_QUEUE_PROFILING_ENABLE开启才能计时 queue = clCreateCommandQueue(context, device, CL_QUEUE_PROFILING_ENABLE, &error); OPENCL_CHECK_ERRORS(error) //下面初始化测试数据(主机数据) float* A_h = new float[M*P]; float* B_h = new float[P*N]; float* C_h = new float[M*N]; //srand((unsigned)time(NULL)); srand(100); for (int i = 0; i < M*P; i++) A_h[i] = rand() % 50; for (int i = 0; i < P*N; i++) B_h[i] = rand() % 50; //初始化设备数据 // 标志位表示数据只读,并且从nums1_h和nums2_h复制数据 cl_mem A_d = clCreateBuffer(context, CL_MEM_READ_ONLY | CL_MEM_COPY_HOST_PTR, sizeof(float)*M*P, A_h, &error); OPENCL_CHECK_ERRORS(error) cl_mem B_d = clCreateBuffer(context, CL_MEM_READ_ONLY | CL_MEM_COPY_HOST_PTR, sizeof(float)*P*N, B_h, &error); OPENCL_CHECK_ERRORS(error) cl_mem C_d = clCreateBuffer(context, CL_MEM_WRITE_ONLY, sizeof(float)*M*N, NULL, &error); OPENCL_CHECK_ERRORS(error) cout << "CPU 运行开始:" << time_stamp() << endl; RunAsCpu(A_h, B_h, C_h); cout << "CPU 运行结束:" << time_stamp() << endl; //读取OpenCLSum.cl文件内容 FILE* fp = fopen("OpenCLMulMatrix.cl", "rb"); fseek(fp, 0, SEEK_END); size_t src_size = ftell(fp); fseek(fp, 0, SEEK_SET); const char* source = new char[src_size]; fread((void*)source, 1, src_size, fp); fclose(fp); //创建编译运行kernel函数 cl_program program = clCreateProgramWithSource(context, 1, &source, &src_size, &error); OPENCL_CHECK_ERRORS(error) delete[] source; // Builds the program error = clBuildProgram(program, 1, &device, NULL, NULL, NULL); OPENCL_CHECK_ERRORS(error) // Shows the log char* build_log; size_t log_size; // First call to know the proper size clGetProgramBuildInfo(program, device, CL_PROGRAM_BUILD_LOG, 0, NULL, &log_size); build_log = new char[log_size + 1]; // Second call to get the log clGetProgramBuildInfo(program, device, CL_PROGRAM_BUILD_LOG, log_size, build_log, NULL); build_log[log_size] = '�'; cout << build_log << endl; delete[] build_log; // Extracting the kernel cl_kernel run_as_gpu_1 = clCreateKernel(program, "RunAsGpu_1", &error); OPENCL_CHECK_ERRORS(error) //设置kernel参数 cl_int M_d = M; cl_int P_d = P; cl_int N_d = N; error = clSetKernelArg(run_as_gpu_1, 0, sizeof(cl_mem), &A_d); error |= clSetKernelArg(run_as_gpu_1, 1, sizeof(cl_mem), &B_d); error |= clSetKernelArg(run_as_gpu_1, 2, sizeof(int), &M_d); error |= clSetKernelArg(run_as_gpu_1, 3, sizeof(int), &N_d); error |= clSetKernelArg(run_as_gpu_1, 4, sizeof(int), &P_d); error |= clSetKernelArg(run_as_gpu_1, 5, sizeof(cl_mem), &C_d); OPENCL_CHECK_ERRORS(error) // 启动kernel size_t globalws_1[2] = { M,N }; cl_event ev; error = clEnqueueNDRangeKernel(queue, run_as_gpu_1, 2, NULL, globalws_1, NULL, 0, NULL, &ev); clFinish(queue); OPENCL_CHECK_ERRORS(error) //计算kerenl执行时间 cl_ulong startTime, endTime; clGetEventProfilingInfo(ev, CL_PROFILING_COMMAND_START, sizeof(cl_ulong), &startTime, NULL); clGetEventProfilingInfo(ev, CL_PROFILING_COMMAND_END, sizeof(cl_ulong), &endTime, NULL); cl_ulong kernelExecTimeNs = endTime - startTime; printf("Gpu_1运行时间 :%8.6f ms ", kernelExecTimeNs*1e-6); //取得kernel返回值 float* gpu_C_1 = new float[M*N]; clEnqueueReadBuffer(queue, C_d, CL_TRUE, 0, M*N*sizeof(float), gpu_C_1, 0, NULL, NULL); assert(memcmp(C_h, gpu_C_1, M*N * sizeof(float)) == 0); // Extracting the kernel cl_kernel run_as_gpu_2 = clCreateKernel(program, "RunAsGpu_2", &error); OPENCL_CHECK_ERRORS(error) //设置kernel参数 error = clSetKernelArg(run_as_gpu_2, 0, sizeof(cl_mem), &A_d); error |= clSetKernelArg(run_as_gpu_2, 1, sizeof(cl_mem), &B_d); error |= clSetKernelArg(run_as_gpu_2, 2, sizeof(int), &M_d); error |= clSetKernelArg(run_as_gpu_2, 3, sizeof(int), &N_d); error |= clSetKernelArg(run_as_gpu_2, 4, sizeof(int), &P_d); error |= clSetKernelArg(run_as_gpu_2, 5, sizeof(cl_mem), &C_d); OPENCL_CHECK_ERRORS(error) // 启动kernel size_t globalws_2[2] = { N,M }; error = clEnqueueNDRangeKernel(queue, run_as_gpu_2, 2, NULL, globalws_2, NULL, 0, NULL, &ev); clFinish(queue); OPENCL_CHECK_ERRORS(error) //计算kerenl执行时间 clGetEventProfilingInfo(ev, CL_PROFILING_COMMAND_START, sizeof(cl_ulong), &startTime, NULL); clGetEventProfilingInfo(ev, CL_PROFILING_COMMAND_END, sizeof(cl_ulong), &endTime, NULL); kernelExecTimeNs = endTime - startTime; printf("Gpu_2运行时间 :%8.6f ms ", kernelExecTimeNs*1e-6); //取得kernel返回值 float* gpu_C_2 = new float[M*N]; clEnqueueReadBuffer(queue, C_d, CL_TRUE, 0, M*N * sizeof(float), gpu_C_2, 0, NULL, NULL); assert(memcmp(C_h, gpu_C_2, M*N * sizeof(float)) == 0); error = clEnqueueNDRangeKernel(queue, run_as_gpu_1, 2, NULL, globalws_1, NULL, 0, NULL, &ev); clFinish(queue); OPENCL_CHECK_ERRORS(error) //计算kerenl执行时间 clGetEventProfilingInfo(ev, CL_PROFILING_COMMAND_START, sizeof(cl_ulong), &startTime, NULL); clGetEventProfilingInfo(ev, CL_PROFILING_COMMAND_END, sizeof(cl_ulong), &endTime, NULL); kernelExecTimeNs = endTime - startTime; printf("Gpu_1运行时间 :%8.6f ms ", kernelExecTimeNs*1e-6); delete[] A_h; delete[] B_h; delete[] C_h; delete[] gpu_C_1; delete[] gpu_C_2; delete[] platforms; clReleaseKernel(run_as_gpu_1); clReleaseKernel(run_as_gpu_2); clReleaseCommandQueue(queue); clReleaseContext(context); clReleaseMemObject(A_d); clReleaseMemObject(B_d); clReleaseMemObject(C_d); return 0; }

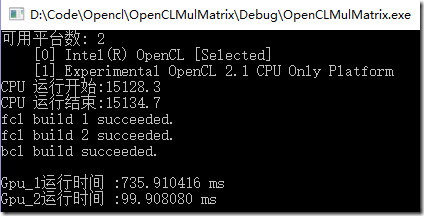

三、运行结果

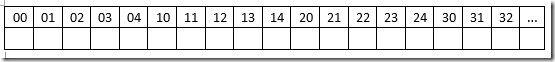

这里可以看出,两个方案虽然结果一样,但是效率是有很大差别的,原因是什么呢?上面说到,GPU会合并内存访问来优化性能,多维情况下,内存空间是按照行主序的方式储存的,如下图,一个5列的二维数组内存排列方式如下:

而在GPU执行过程中,他是先执行第一个纬度,再执行第二个纬度。所以,在第一种情况下,第一维是M,第二维是N,此时,B和C的内存无法合并访问(访问顺序是00 10 20 30 40 01 11 21 …)

在第二种情况下,B和C的内存可以合并访问(访问顺序是00 01 02 03 04 11 12 13 …)

合并访问会减小内存请求,优化性能。

四、其他示例

试试添加一个kernel函数,测试它的运行时间。

__kernel void RunAsGpu_3( __global float *A, __global float *B, int M, int N, int P, __global float* C) { int x = get_global_id(0); int y = get_global_id(1); C[x*N + y] = 0; for(int i = 0;i<P;i++) { C[x*N + y] += A[x*P + i]*B[i*N + y]; } }