1. How does logistic regression prevent overfitting? Why does regularization prevent overfitting? (Explain in your own words.)

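(1) Common ways to reduce overfitting in logistic regression:

① Increase the sample size. More training data helps against overfitting with almost any model.

② Use feature selection to remove unimportant features, which lowers model complexity.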
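As a rough illustration of point ②, the sketch below keeps only the highest-scoring features before any model is trained. The synthetic data from `make_classification` and the choice of `k=4` are assumptions made for the example, and `SelectKBest` with `f_classif` is just one of several selection methods scikit-learn offers.

```python
from sklearn.datasets import make_classification
from sklearn.feature_selection import SelectKBest, f_classif

# Synthetic data (assumption): 20 features, of which only 4 carry signal.
X, y = make_classification(
    n_samples=300, n_features=20, n_informative=4,
    n_redundant=0, random_state=0,
)

# Keep the 4 features with the highest ANOVA F-score against the label.
selector = SelectKBest(score_func=f_classif, k=4)
X_reduced = selector.fit_transform(X, y)

print("kept feature indices:", selector.get_support(indices=True))
print("shape before:", X.shape, "after:", X_reduced.shape)
```

The reduced matrix `X_reduced` can then be passed to `LogisticRegression` in place of `X`, so the model has fewer coefficients to fit.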

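(2) When a model overfits, the coefficients of the fitted function are often very large. Large coefficients let the output swing sharply on small changes in the input, which is how the model ends up fitting noise. Regularization constrains the norm of the parameter vector so that the coefficients cannot grow too large, which reduces overfitting to some extent.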
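The effect can be seen in a small sketch that uses only the standard library. It fits a one-feature logistic regression by gradient descent, once without a penalty and once with an L2 penalty added to the loss. The toy data, the step count, the learning rate and the penalty strength `lam` are all assumptions chosen for illustration.

```python
import math

# Tiny, linearly separable toy data (an assumption for illustration).
xs = [-2.0, -1.0, 1.0, 2.0]
ys = [0, 0, 1, 1]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def fit(lam, steps=2000, lr=0.5):
    """Gradient descent on mean log-loss + (lam / 2) * w**2."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        errors = [sigmoid(w * x + b) - y for x, y in zip(xs, ys)]
        grad_w = sum(e * x for e, x in zip(errors, xs)) / n + lam * w
        grad_b = sum(errors) / n  # the intercept is not penalized
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


w_plain, _ = fit(lam=0.0)  # no regularization
w_l2, _ = fit(lam=0.1)     # L2 penalty on w

print("weight without penalty:", round(w_plain, 2))
print("weight with L2 penalty:", round(w_l2, 2))
```

On separable data the unpenalized weight keeps growing the longer training runs, while the penalized weight settles at a much smaller value. In scikit-learn, `LogisticRegression` applies L2 regularization by default, and a smaller `C` means a stronger penalty.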

2. Practice with logistic regression on a dataset of your choice.

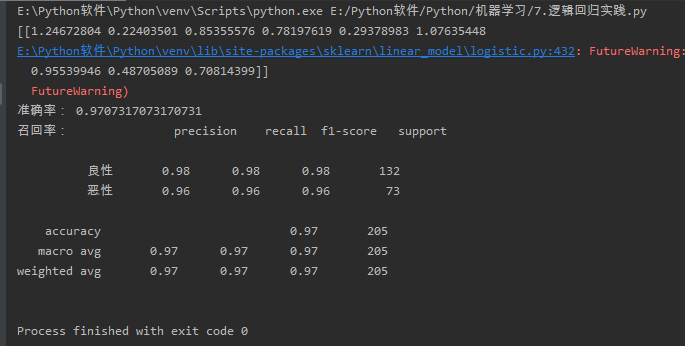

(1) First, logistic regression is used to predict whether a tumor is benign or malignant.

```python
from sklearn.linear_model import LogisticRegression  # logistic regression API
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler
from sklearn.metrics import classification_report
import numpy as np
import pandas as pd


def logistic():
    """Predict tumor type with logistic regression."""
    # The CSV has no header row, so column names are supplied here.
    # attr_1 .. attr_9 are the features; attr_10 is the class label
    # (2 = benign, 4 = malignant).
    column = [
        'sample_id', 'attr_1', 'attr_2', 'attr_3',
        'attr_4', 'attr_5', 'attr_6', 'attr_7',
        'attr_8', 'attr_9', 'attr_10'
    ]

    # Read the data
    cancer = pd.read_csv('breast-cancer-wisconsin.csv', names=column)

    # Handle missing values: '?' marks a missing entry in this file
    cancer = cancer.replace(to_replace='?', value=np.nan)
    cancer = cancer.dropna()

    # Split into training and test sets
    x_train, x_test, y_train, y_test = train_test_split(
        cancer[column[1:10]], cancer[column[10]], test_size=0.3
    )

    # Standardize the features (fit on the training set only)
    std = StandardScaler()
    x_train = std.fit_transform(x_train)
    x_test = std.transform(x_test)

    # Fit logistic regression and predict
    lg = LogisticRegression()
    lg.fit(x_train, y_train)
    print(lg.coef_)
    lg_predict = lg.predict(x_test)

    print("Accuracy:", lg.score(x_test, y_test))
    print("Classification report (precision and recall):")
    print(classification_report(y_test, lg_predict, labels=[2, 4],
                                target_names=['benign', 'malignant']))


if __name__ == '__main__':
    logistic()
```

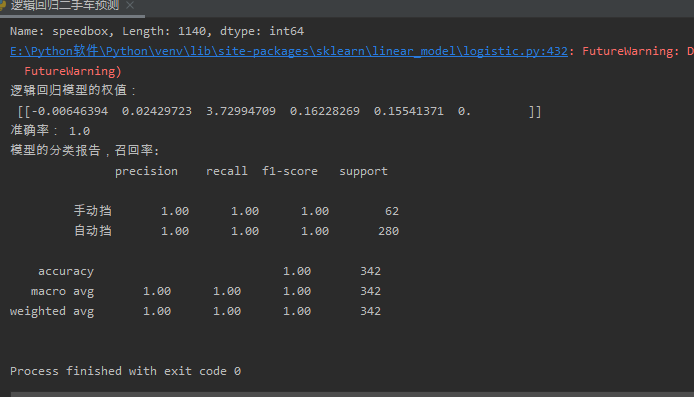
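Because the CSV file is not bundled with these notes, here is a minimal sketch of the same workflow that runs without it. It assumes scikit-learn's built-in `load_breast_cancer` dataset, which is a different (30-feature) Wisconsin breast cancer dataset, so its numbers are not comparable to the output above. The `Pipeline`, `random_state=0`, `stratify=y` and `max_iter=1000` are choices made for this example rather than part of the original script.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import classification_report
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Built-in dataset (assumption: stands in for breast-cancer-wisconsin.csv).
dataset = load_breast_cancer()
X, y = dataset.data, dataset.target

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0, stratify=y
)

# The pipeline fits the scaler on the training data only,
# then applies the same scaling at prediction time.
model = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))
model.fit(X_train, y_train)

accuracy = model.score(X_test, y_test)
print("accuracy:", accuracy)
print(classification_report(y_test, model.predict(X_test),
                            target_names=dataset.target_names))
```

Wrapping the scaler and the classifier in one pipeline removes the need to call `fit_transform` and `transform` by hand, which makes it harder to scale the test set with the wrong statistics.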

(2) Predicting whether a used car has an automatic transmission.

```python
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler
from sklearn.metrics import classification_report
import pandas as pd


def log_R():
    data = pd.read_csv('二手车数据1.csv')

    # Handle missing values first, so that x and y are both taken
    # from the cleaned rows (dropping afterwards would not affect them)
    data = data.dropna(axis=0)

    # NOTE: if 'speedbox' is the gearbox column that y is read from
    # (column index 2), it leaks the label and should be removed from x.
    x = data.loc[:, ['km', 'displacement', 'speedbox', 'price', 'newcarprice', 'baoxian']]
    print(x)
    y = data.iloc[:, 2]
    print(y)

    # Split into training and test sets
    x_train, x_test, y_train, y_test = train_test_split(x, y, test_size=0.3)

    # Standardize x only; y needs no scaling because it is a 0/1 class label
    std = StandardScaler()
    x_train = std.fit_transform(x_train)
    x_test = std.transform(x_test)

    # Build the model
    LR_model = LogisticRegression()
    # Train the model
    LR_model.fit(x_train, y_train)
    # Predict on the test set
    y_pre = LR_model.predict(x_test)

    print('Logistic regression weights: ', LR_model.coef_)
    print("Accuracy:", LR_model.score(x_test, y_test))
    print("Classification report (precision and recall):")
    print(classification_report(y_test, y_pre, target_names=["manual", "automatic"]))


if __name__ == '__main__':
    log_R()
```
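One detail in this kind of script is the order of cleaning and slicing: `dropna` returns a new DataFrame, so features and labels that were sliced out beforehand still contain the rows with missing values. The sketch below shows this on a hypothetical toy table. The column names and values are made up for illustration and are not taken from the used-car file.

```python
import numpy as np
import pandas as pd

# Hypothetical toy table; the column names are illustrative only.
toy = pd.DataFrame({
    "km": [1.2, np.nan, 3.5, 4.0],
    "price": [8.0, 6.5, np.nan, 5.1],
    "is_automatic": [1, 0, 1, 0],
})

# Slicing first and cleaning later leaves the earlier slice untouched.
x_stale = toy[["km", "price"]]
toy_clean = toy.dropna(axis=0)
print("rows in stale slice:", len(x_stale), "rows after dropna:", len(toy_clean))

# Cleaning first keeps features and labels aligned row by row.
x = toy_clean[["km", "price"]]
y = toy_clean["is_automatic"]
print("rows in x:", len(x), "rows in y:", len(y))
```

Slicing from the cleaned table guarantees that `x` and `y` have the same rows and that no `NaN` reaches the scaler or the model.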