from bs4 import BeautifulSoup

import requests

import os

###### Scrape landscape pictures

r = requests.get("http://699pic.com/sousuo-218808-13-1-0-0-0.html")

fengjing = r.content

soup = BeautifulSoup(fengjing,"html.parser")

#print(soup.prettify())

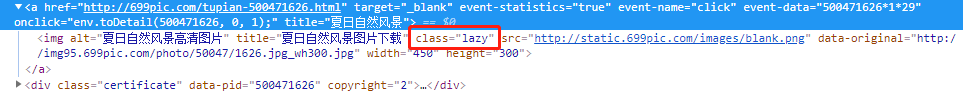

# Find all tags whose class is "lazy"

images = soup.find_all(class_="lazy")

print(images)

for item in images:

try:

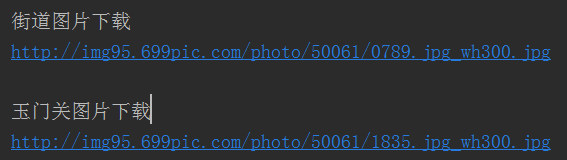

jpg_url = item["data-original"]

title = item["title"]

print(title)

print(jpg_url)

print("")

#保存图片

with open(os.getcwd()+"\jpg"+title+".jpg","wb") as f:

f.write(requests.get(jpg_url).content)

except Exception as e:

pass
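The save step above builds the file name directly from the image title, so a title containing characters such as `/` or `?` would make `open()` fail, and the target folder has to exist beforehand. The following is a minimal sketch of one way to handle both cases. The helper names `safe_filename` and `build_save_path` are hypothetical and not part of the original script, and the `jpg` folder name is an assumption carried over from the path used above.

```python
import os
import re


def safe_filename(title, default="untitled"):
    """Replace characters that Windows forbids in file names with underscores."""
    cleaned = re.sub(r'[\\/:*?"<>|]', "_", title).strip()
    # Fall back to a default name if nothing usable is left
    return cleaned or default


def build_save_path(title, folder="jpg", base_dir=None):
    """Return <base_dir>/<folder>/<title>.jpg, creating the folder if needed."""
    base_dir = base_dir or os.getcwd()
    target_dir = os.path.join(base_dir, folder)
    os.makedirs(target_dir, exist_ok=True)  # no error if the folder already exists
    return os.path.join(target_dir, safe_filename(title) + ".jpg")
```

Inside the loop, the `open(...)` call could then take `build_save_path(title)` as its first argument, which keeps the download logic itself unchanged.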

The following program scrapes a blog instead:

from bs4 import BeautifulSoup

import requests

r = requests.get("https://www.cnblogs.com/Teachertao/")

# After requesting the home page, r.content holds the whole HTML page

blog = r.content

#print(blog)

# Parse the HTML with html.parser

soup = BeautifulSoup(blog,"html.parser")

# prettify() returns the parsed document as indented, readable HTML

print(soup.prettify())

# Get all elements whose class attribute is "block_title"; each result is a Tag object

time = soup.find_all(class_="block_title")

#print(time)

db = [item for item in time]

print(db)

# Get the titles

title = soup.find_all(class_="posttitle")

print(title)

# Get the summaries

desc = soup.find_all(class_="c_b_p_desc")

print(desc)

for item in desc:

    # A tag's .contents attribute returns the tag's child nodes as a list

    print(item.contents)
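To make the difference between printing a tag, its `.contents`, and its text concrete, here is a small sketch that runs against an inline HTML string rather than the live blog page. The HTML snippet is made up for illustration; only the class name `c_b_p_desc` comes from the program above.

```python
from bs4 import BeautifulSoup

# Hypothetical markup standing in for one summary block on the blog page
html = '<div class="c_b_p_desc">Summary text <a href="/post/1">Read more</a></div>'

soup = BeautifulSoup(html, "html.parser")
item = soup.find(class_="c_b_p_desc")

# .contents lists the direct children: a text node followed by the <a> tag
children = item.contents

# get_text() joins all nested strings; the separator keeps the words apart
text = item.get_text(" ", strip=True)

# Attributes of a nested tag are read with dictionary-style access
link = item.a["href"]

print(children)
print(text)
print(link)
```

When only the readable summary is needed, `get_text()` is usually the more convenient choice, while `.contents` is useful for inspecting or walking the child nodes.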

######## Screenshot of the tags:

######## Run results